A week of Linux instead of Windows

This week is my vacation from work, during which I wanted to relax from enterprise code, as well as work on a more substantial project of my own: DevProject 2022, where I attempt to demonstrate cloud native application development from an idea to something running in "production".

But instead of just that, I also decided to try using only distributions of GNU/Linux for the entire week, instead of my usual OS of Microsoft Windows:

Join me in my adventure, where I'll explain both my reasoning behind this, as well as my own experiences.

Why use Linux

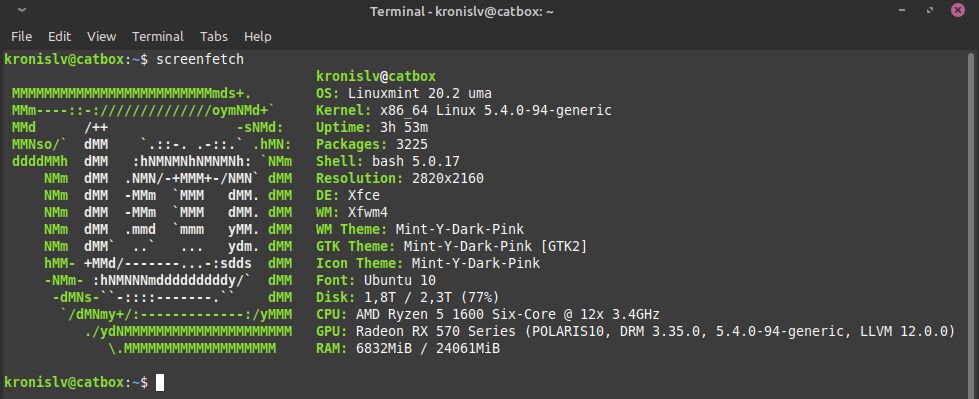

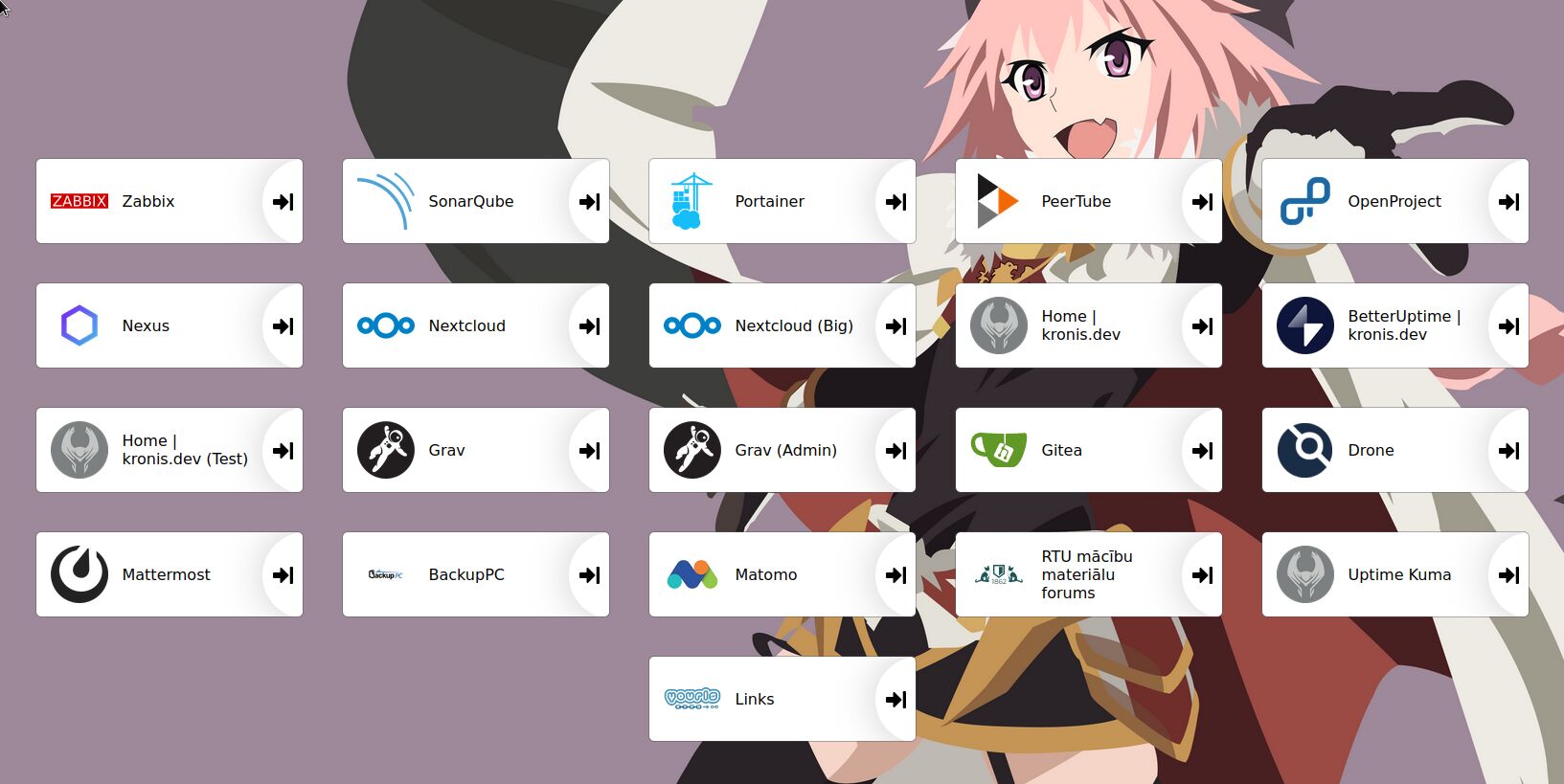

For starters, all of my servers, both at home and in my little rented piece of "the cloud", run distros of GNU/Linux: I've had some bad experiences with Windows Server professionally, driver support can be challenging in something like FreeBSD, and the amount of tutorials and information about Linux is hard to beat. Almost all of the web based software that I use, like Nextcloud, OpenProject, Gitea, Drone CI, Sonatype Nexus, SonarQube, Zabbix and many others, including this blog, also runs on various flavors of Linux (maybe the occasional Alpine Linux container in there, so not just GNU/Linux):

(even the Heimdall dashboard above runs in a container that's based on Linux)

The same applies to almost all of the software that I build myself, from my homepage, to sites like Quick Answers and even the aforementioned DevProject 2022. This matters, because building software on Windows (or trying to run it in a container on Windows, deal with bind mount peculiarities, file system permissions and remote debugging) but actually running it on Linux can be a somewhat painful experience. When possible, it can be really nice to have even your development environment be closer to what your software will actually run on, instead of relying on leaky abstractions like containers (though they're great for other reasons).

Not only that, but there's this joke about "The Year of Linux on the Desktop" going around: that Linux enthusiasts are saying that any day now the OS will be a capable and viable choice for using it as your daily driver. Historically, there have been a variety of issues on the desktop, with driver support (especially for GPUs, due to the sometimes closed source nature of those projects), software and game support (some things are just not ported over), conflicting and often rapidly moving pieces of the OS (for example, whatever is going on with the various sound systems, or desktop compositors, or whole desktops like Unity being swapped out for GNOME, or GNOME having updates that some people didn't like and so on).

Yet, a lot of that doesn't necessarily apply to me, at least as much as passionate people on the Internet would suggest it does. Hence, I want my own biased view on the issue, with the points that matter to me explored in a bit more detail. Admittedly, I mostly use Windows due to the aforementioned software and video game support, but why not try something a bit different for a week? Furthermore, Windows increasingly feels like it's moving in a direction that I'm not overjoyed about, my hardware might not even be compatible with Windows 11 and honestly I'm kind of broke at the moment, so you can't beat free!

Which Linux distro to use

So, which distro should you use? This is a question that's pretty hard to answer, because everyone has opinions about it, each person has different reasoning behind those and oftentimes the choice will be somewhat superficial, as long as you go for one of the more popular distros... unless you want to do something specific or niche and this choice will make the difference between success and utter failure. You see, instead of the consistency that something like Windows brings, in the world of Linux there are various distributions with different package managers, different (supported) desktop environments, different ports of software, sometimes even the same pieces of software work a bit differently (e.g. Apache2/httpd in DEB/RPM distros).

In my case, I've generally stuck to the two larger groups of distros out there: DEB and RPM. The former mostly for personal stuff and freelancing, whereas the latter mostly in professional environments.

That said, my experiences with RPM distros (RHEL, CentOS, Rocky Linux, Alma Linux) have been somewhat soured for a variety of reasons. The biggest here would probably be Red Hat "killing" CentOS, at least in the way it previously worked: for many out there it was a free option that's RHEL compatible that they liked to put on a server and have it get updates for almost 10 years thanks to the long EOL policy, which was retired by Red Hat. Rocky and Alma both stepped up, which is nice, but they have large shoes to fill, others turned to Oracle Linux and frankly it's a bit of a fragmented mess at the moment.

In addition, typically you'd run something like Fedora locally if you want an RPM distro to work with, but it has a pretty short lifecycle, at least when compared to the alternatives. Oh, and RPM distros seem opinionated about how to do certain things: running Docker instead of Podman used to be somewhat broken (needing masquerades for the firewall, or DNS broke), SELinux makes me dislike the OS strongly (and turning it off is not a good "solution" for certain software not playing nicely with it) and there are a few other annoying details like that, which are sub-optimal both on servers and on the desktop.

That's why I increasingly look in the direction of DEB distros (Debian and Ubuntu), which have been more of a positive experience for me. Most software is available, most software just works, you have easy choices between running free and proprietary code (which is especially relevant for drivers). Don't get me wrong, there have been really annoying design decisions, like the whole concept of snap packages, which sidestep how you'd normally manage software on the OS (through apt) and take away some control over updates, especially when something like AppImage and Flatpak already exist and do certain things better.

So, in a sense, every choice that you can make will have drawbacks, the impact of which depends on what you want to do. Personally, I'd suggest that you generally stick with whatever is popular. For example, go to DistroWatch and pick one of the most popular distros, ideally one that has remained near the top of the community's interest for years:

In my case, that would be Ubuntu. Ubuntu LTS is a great solution for servers, recently I moved all of my container images over to Ubuntu LTS and they also have a decent desktop offering. It doesn't have the latest and greatest packages, but I don't care about that much of the time: I just want something relatively recent (or a way to set up something relatively recent, even if I have to occasionally use a PPA), with a stable base system, so I can get things done.

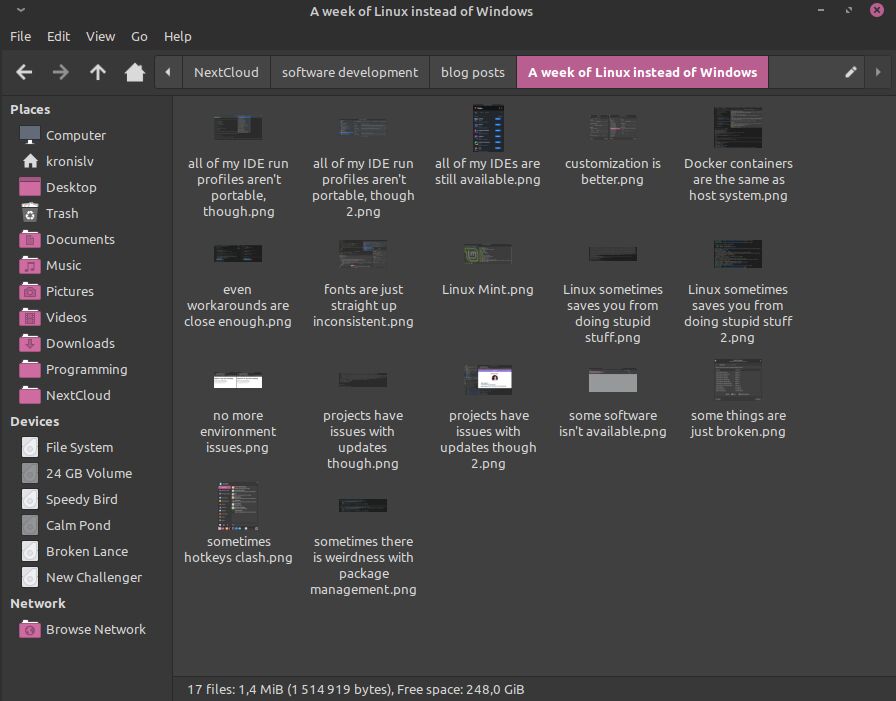

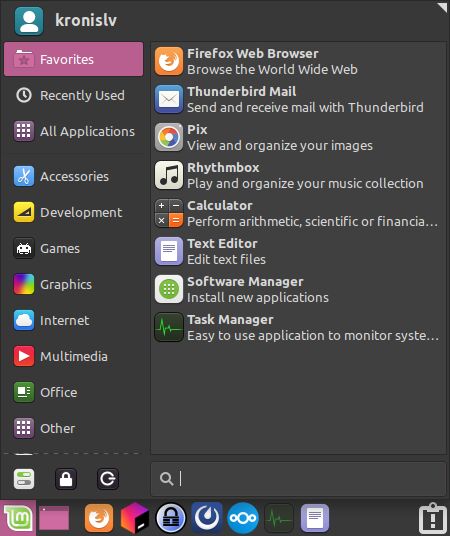

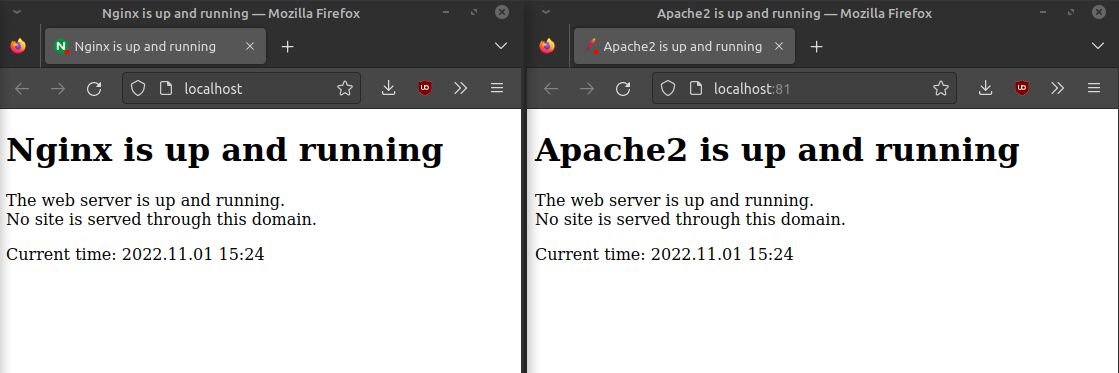

So of course the answer here is to not use Ubuntu on the desktop and instead go for Linux Mint:

Wait, what? You heard that right, I'm going for something that's based on Ubuntu, instead of using Ubuntu directly on my desktop. My reasoning is that the packages are close enough and that I get access to a tested distro with the XFCE desktop environment.

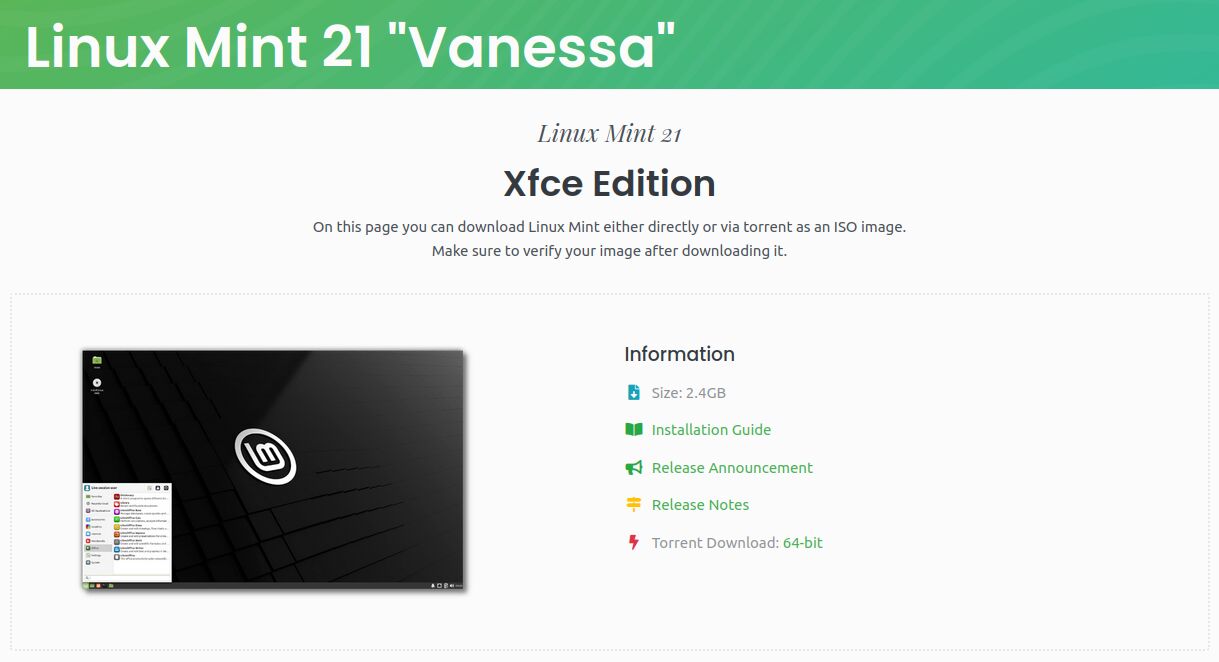

Linux Mint actually provides an Xfce Edition, which fits my needs well:

For Ubuntu itself there are also projects like Xubuntu or Lubuntu (the latter of which still has a seemingly misleading search result at the top of many search engines), but in my experience, those aren't quite as well put together or as stable. So now I get the best of both worlds: the ecosystem of Ubuntu, the boring stability and dependability of XFCE, without venturing too far outside of what's tested to have to debug odd issues at 1 AM. Eureka!

Oh, and because I'm lazy, I'll be using a slightly older LTS version, which was already set up in a dual boot configuration. It would be nice to walk you through the install process, but the point of this article is to mostly talk about the day to day differences, since the setup is okay for most OSes out there. So, without further ado, with a distro choice under our belt, let's get started on those differences!

Look and feel

The first thing I have to say, is that many of the Linux distros out there do customization and consistency better than Windows, full stop:

Windows has explored a variety of different graphic design and UX patterns over the years, which has not only resulted in an inconsistent look, but has also seemingly taken away more and more control over how one's desktop looks and functions. Some people like to point out how there are many different design systems in Windows, some of which you'll run into simultaneously. Personally, I'm just bummed out about something as basic as vertical taskbars no longer being a thing in Windows 11.

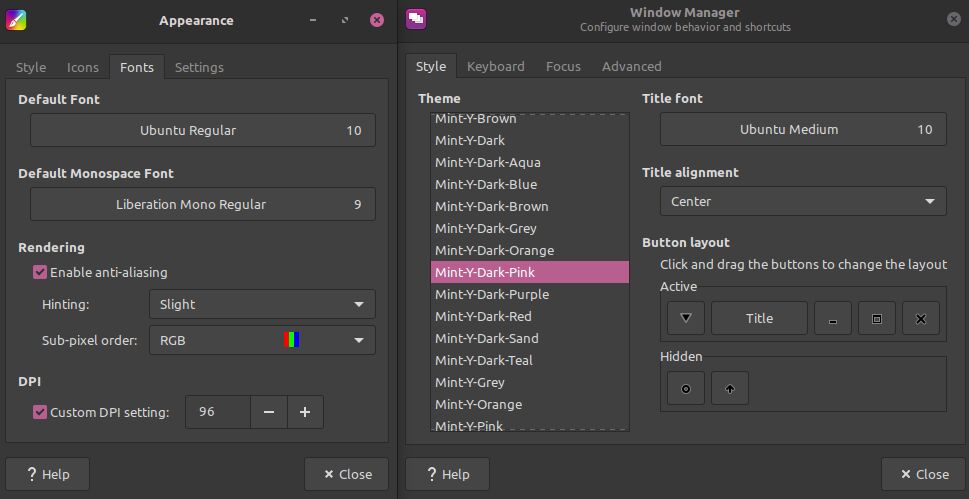

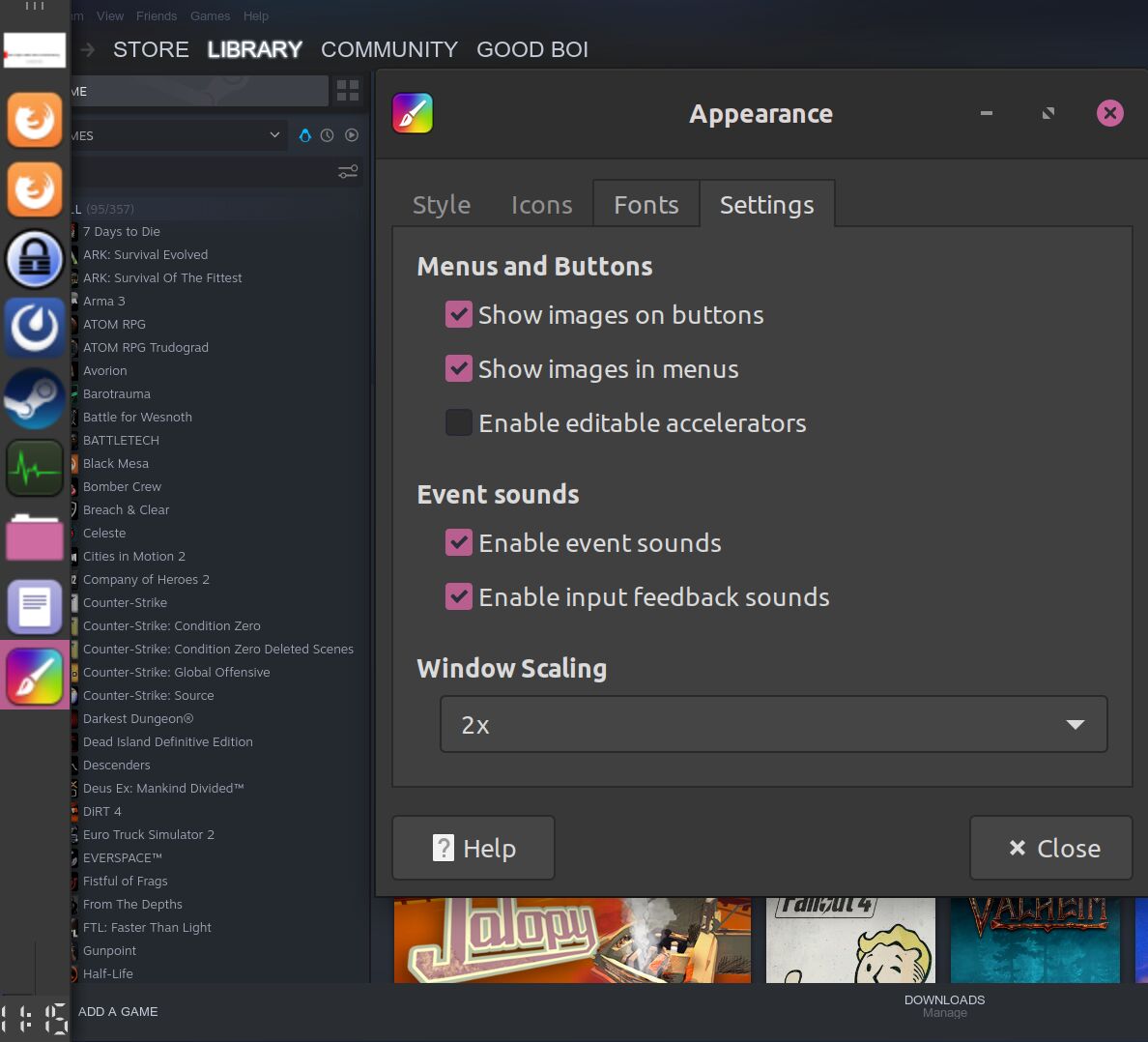

Linux also has some of those design system issues, but as long as you stick within the confines of a particular distro and don't mix software from different environments (like some from GNOME and some from KDE), you'll have a more consistent experience. Furthermore, you can also customize things to your heart's content, with most of what you'd like being easily accessible in most cases:

There's everything from font sizes, color themes, window compositing and decorations, icons that you want to use, window borders as well as behavior: whether and how you want to snap windows, how you want to cycle through them, what hotkeys you want and so on. And this is with XFCE, a desktop that's known to be lightweight and isn't even the most customizable out there. It's great! I can have a bottom taskbar on my vertical monitor and vertical taskbars on my landscape monitors. I can customize everything to my liking and get a look and feel that makes me feel comfy, much of which is available out of the box, I don't even need to mess around with dotfiles.

Of course, there are also issues, seemingly basic things that are broken, which can be extremely odd at times. For example, suppose I am used to the Windows way of snapping windows to the sides of the screen, something like Windows/Super + arrow keys.

I can bind such controls, but in my setup they just don't work:

Also, there are apparently settings for customizing how close to the side of the screen I need to be to snap windows, but it doesn't seem to work all that well. It affects how far different windows need to be from one another to snap together, but I still need to be around 5-15 pixels from the edge of the screen for snapping to work, which is annoying.

Not only that, but it seems like window snapping zones straight up don't work and cannot be customized (easily) for my vertical monitor, which was also broken on Windows, but I'd have hoped that I could have a top and a bottom slot, instead of having to manually drag windows around until they look good enough:

Oh, and if we're talking about things that are broken on both Windows and Linux, select software just loves to ignore window scaling settings. I wanted to take a crisp screenshot of the games on my Steam account (to show the count of available titles vs the ones that work on Linux), but that just didn't work and as an added bonus, the icon scaling was also messed up:

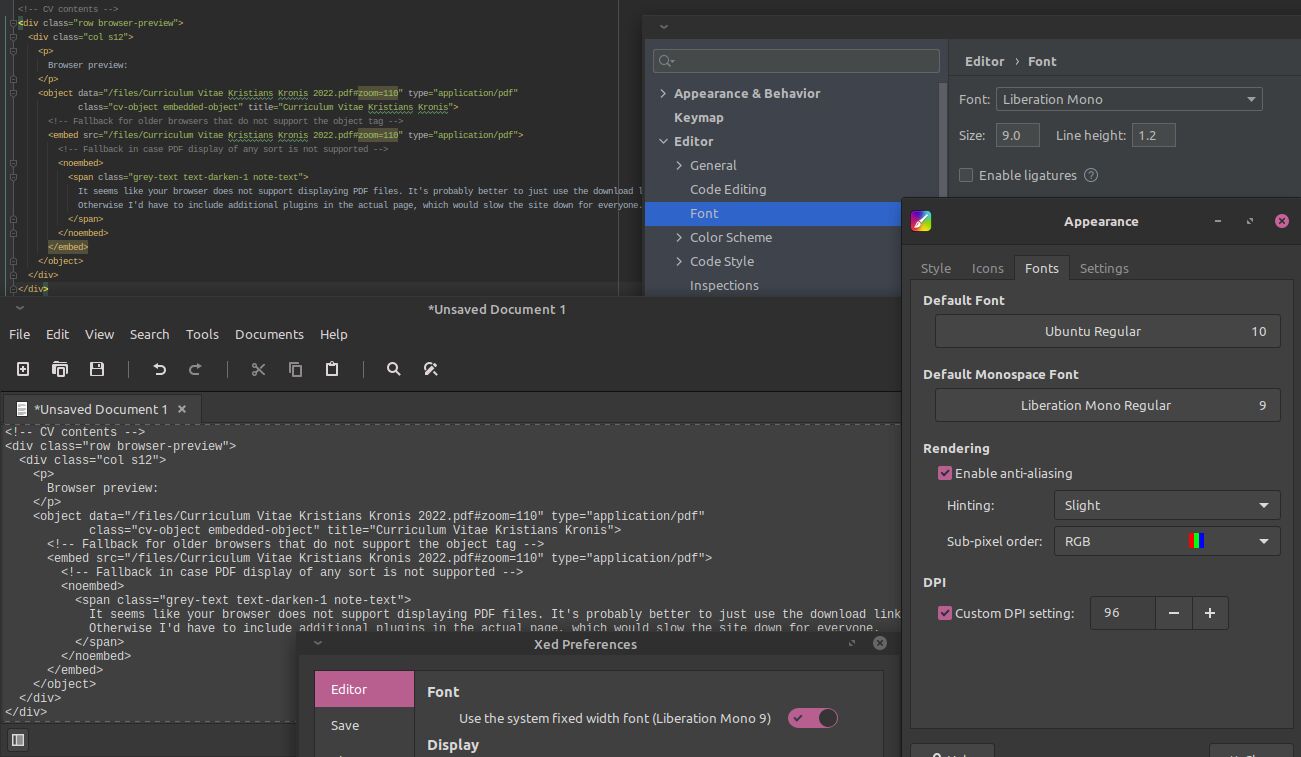

In addition to that, much like on Windows, some software just has its own ideas about how fonts should scale. For example, if I compare the xed text editor and some of the JetBrains IDEs, the font sizes feel visually different, despite the OS setting being the same as what's present in the IDE configuration, perhaps due to DPI or something else:

Furthermore, the start menu sometimes opens when I use a hotkey combination that includes the Super key, even though I want the whole combination to be read instead of the menu popping up at random:

I have to say, however, that mouse acceleration just somehow feels better in Linux. I have messed around with the settings a lot in Windows, yet have never gotten close to how pleasant it is to use the mouse in Linux. Also, it might just be me, but the typing latency just generally feels worse in Windows and is a snappier experience in Linux, though the difference might depend on the software that's used and might be pretty minuscule.

Overall, I have to say that I'm conflicted. Linux lets you do more and feels like a frank attempt at giving you the power to customize your own experience based on your desires. But sometimes it remains just that: an attempt. It's not quite a "death by a thousand cuts" experience, but is definitely one where you'll run into annoying kinks, much like the window snapping on Windows also is by default. One might suggest that I just need to look into a different desktop environment, but then I'll just run into something that trades these issues for other ones.

What's the point of jumping around 5 different distros if I can't get something basic working properly? I want things to work on my current distro, not be told that it's my fault for expecting a stable and dependable desktop user experience. Worse yet, people might sometimes erroneously claim that it's possible to fix some or all of these with a bit of effort: which might be true for them and their skills, but not for someone with a limited amount of free time, or without knowledge about systems programming or the intricacies of whatever software they want to just work.

But when it works, it works nicely and is definitely usable enough to be a daily driver, maybe with a bit of blood and sweat to figure out the whole window snapping thing and other stuff (which I solved on Windows with FancyZones, but I'll talk about that later).

Package management

Whereas it's debatable whether Windows does UI functionality (aside from customization) better than Linux, depending on what you care about, package management is where Linux wins, hands down. In Debian and Ubuntu, I have the apt package manager, which makes for a great experience, and it's pretty much the same story on RPM distros, where you work with yum.

It is typically enough to just run a few terminal commands and most of your software and drivers will be updated shortly afterwards:

    # Fetch what updates and packages are available
    sudo apt update

    # Upgrade the currently installed packages
    sudo apt upgrade

Not only that, but installing new packages is similarly easy:

    # Fetch what updates and packages are available
    sudo apt update

    # Install a package by name, latest version
    sudo apt install wget

You can also use some GUI tools if you want to have an easier experience on your desktop and don't care too much about automating things with scripting:

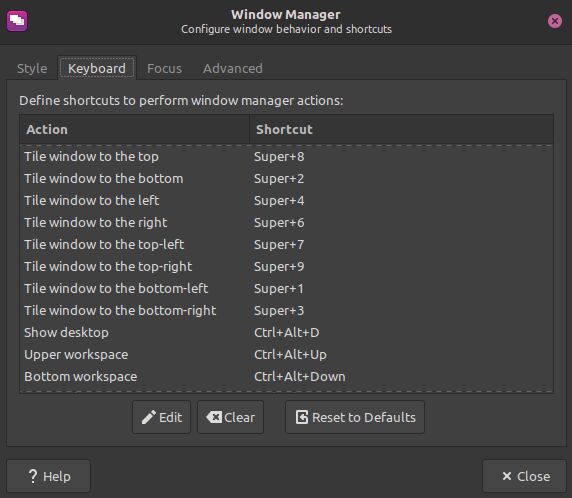

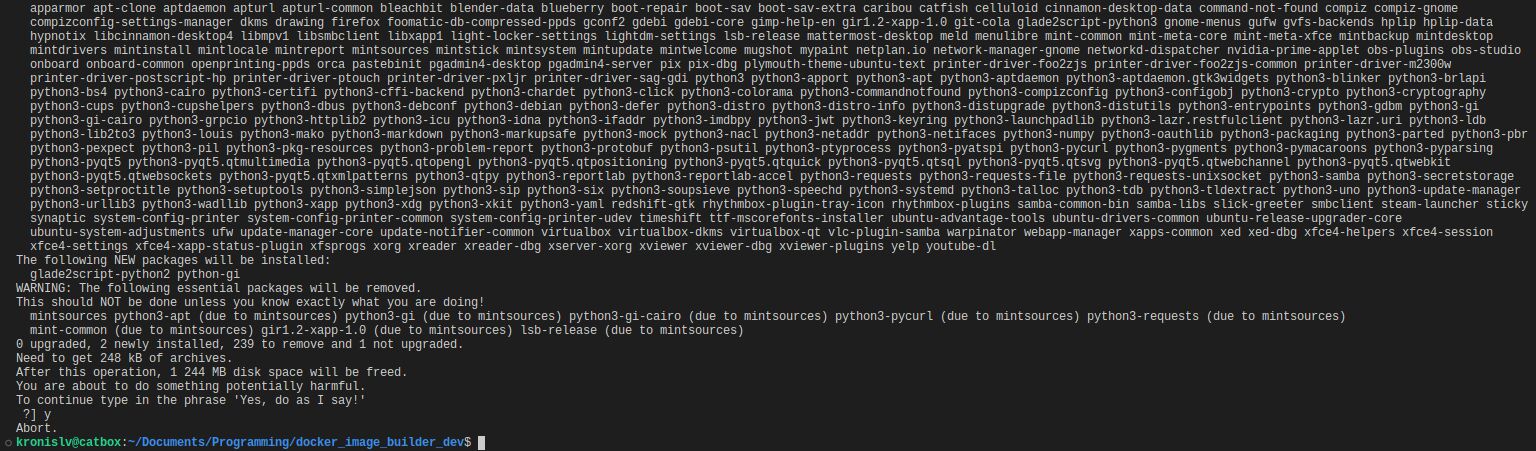
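If you do care about the scripting side, here's a minimal sketch of how the same update and install steps might be wrapped for unattended use. It isn't something from my actual setup: the function names and the APT_CMD override are made up for illustration, and I'm using apt-get here because apt itself warns that its command line interface isn't meant to be stable for scripts.

```shell
#!/usr/bin/env bash
# Sketch only: wraps the update/upgrade/install steps for use in scripts.
# APT_CMD is a hypothetical override (not an apt feature) so the commands
# can be previewed, e.g. APT_CMD="echo apt-get" prints instead of running.
set -euo pipefail

APT_CMD="${APT_CMD:-sudo apt-get}"

# Refresh the package index, then upgrade installed packages without prompting.
update_system() {
    # APT_CMD is intentionally unquoted so "sudo apt-get" splits into two words
    $APT_CMD update
    $APT_CMD -y upgrade
}

# Refresh the package index, then install the packages given as arguments.
install_packages() {
    $APT_CMD update
    $APT_CMD -y install "$@"
}

# Usage:
#   update_system
#   install_packages wget curl
```

Setting APT_CMD="echo apt-get" before calling the functions gives a dry run that only prints what would be executed, which is handy for checking a script before letting it loose with sudo.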

Linux will also sometimes save you from doing stupid things. For example, I wanted to reinstall Python from zero, but doing so would remove a bit too much software that the OS relies on, so I got a nice warning. Apparently it had happened so often previously and others had gotten burnt by it, that me even instinctively typing in y to accept didn't work, but instead I'd have to explicitly say that I know what I was doing, because it actually wasn't what I thought I was doing:

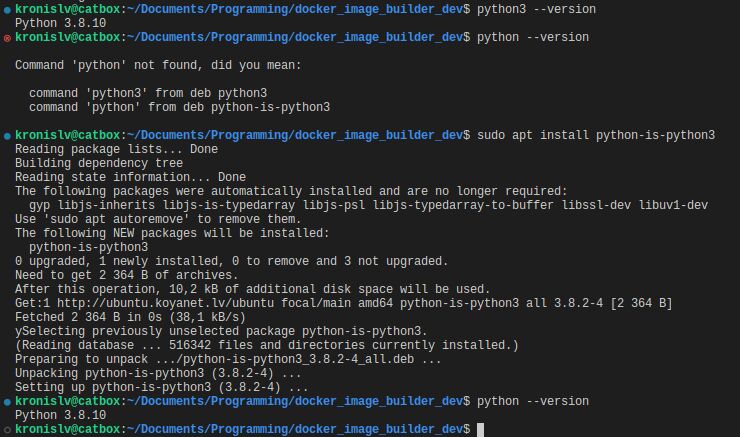

Not only that, but it feels like the package maintainers just care about software. In regards to Python, I wanted python to run the equivalent of python3. While I could have traced down where the executable is with which python and then set up a symlink with ln -s FILE LINK, instead I could just install a package that does it for me (or the opposite, python running python2, for legacy software):
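For reference, the manual symlink route that I skipped could look roughly like the sketch below. The link_alias helper is a made up name, and the example assumes that the directory you link into is already on your PATH. As far as I know, the package that does the same thing on Ubuntu 20.04 and later is called python-is-python3, but double check what your own release offers.

```shell
#!/usr/bin/env bash
# Sketch only: make one command name point at another via a symlink.
# link_alias is a hypothetical helper, not part of any package.
set -euo pipefail

# link_alias TARGET_CMD ALIAS_NAME BIN_DIR
link_alias() {
    local target_cmd="$1" alias_name="$2" bin_dir="$3"
    local target_path
    # Resolve the real executable, the scripted equivalent of `which`
    target_path="$(command -v "$target_cmd")"
    mkdir -p "$bin_dir"
    # -s: symbolic link, -f: replace an existing link, -n: don't follow it
    ln -sfn "$target_path" "$bin_dir/$alias_name"
}

# Usage (assumes ~/.local/bin is on PATH):
#   link_alias python3 python "$HOME/.local/bin"
```

Linking into a directory that you own avoids touching /usr/bin, which the package manager would rather manage by itself.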

The guard rails won't always be there for you, admittedly, because if you want to screw up your install with a few fat fingered commands, you can still definitely do it. But honestly managing most if not all your software through a package manager is in my eyes the best possible approach, especially when the packages are available in numerous mirrors, or you can choose where you want to get your third party packages from, if you want something more niche, all with the same method of installing and updating them.

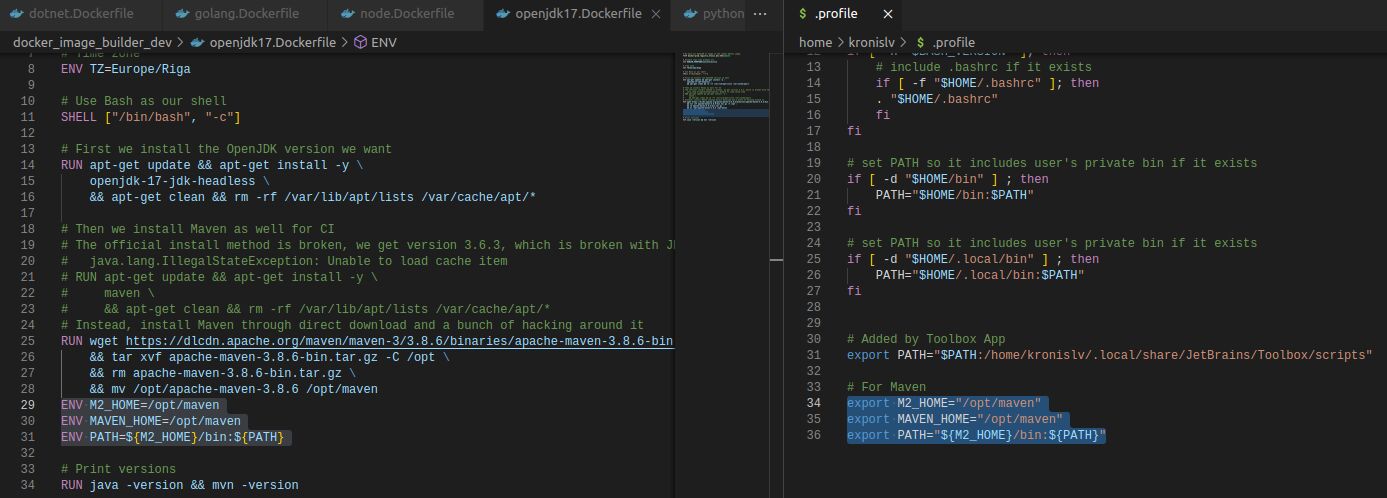

Oh, and remember how I said that things that run in containers will now be pretty close to what I have on my system? That's wonderful, for when I want the same version of .NET, the same version of Java, the same version of Node or any other dependencies, installed the same way, so that I don't have to always use containers for local development (remote debugging/instrumentation is sometimes a pain). Even things that are a little bit different aren't different enough for the changes not to be easily carried over, for example, when installing Maven directly:

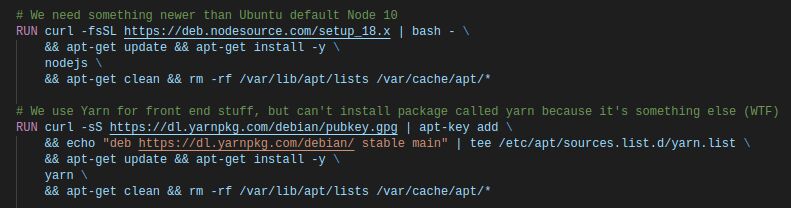

That said, sometimes you will still need to do shady or stupid things, when the official packages in older LTS versions are EOL and you want something newer instead. Sometimes it'll be third party repositories (which isn't the worst thing ever, as long as you trust the parties behind them), or even worse, running various scripts and needing annoying workarounds for things that shouldn't be problems:

In short, as long as all of your software is available through package managers, there isn't any experience out there that's better. This doesn't even prevent you from grabbing and using statically linked software either, of course (something like the Godot game engine, which I'll mention later). But when the packages aren't available in the official repos or even in the third party repos, you might need all sorts of ugly hacks and whatnot.

This is also kind of why I'm not overjoyed with snaps and such, because while they do some good in regards to consistent environments and easier packaging, it also feels like they're making things harder for people who just want to use apt and be done with it. Then again, I use Docker for packaging much of my software for servers to run, so I definitely support the idea behind it, especially when you want to have multiple versions of software running in parallel, or want to limit the fallout of some software that works kinda bad.

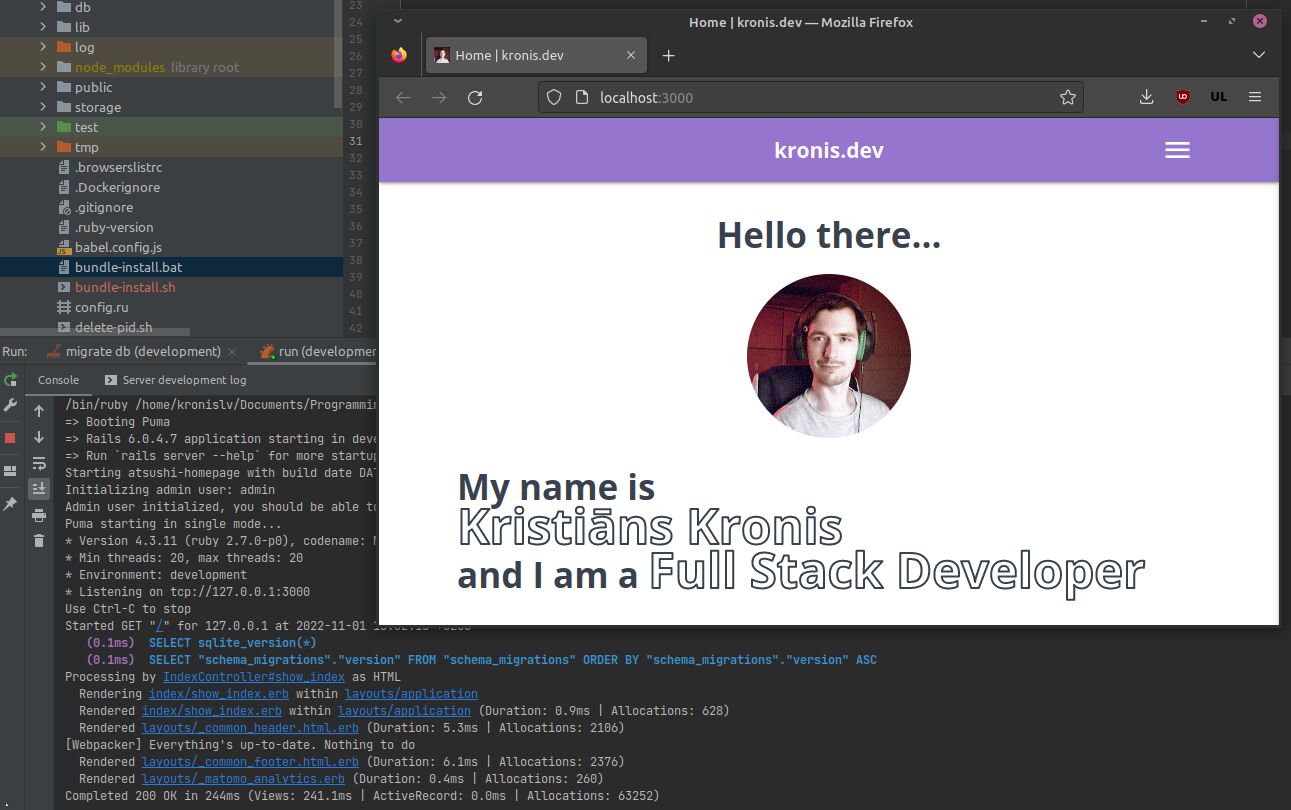

Development, the good things

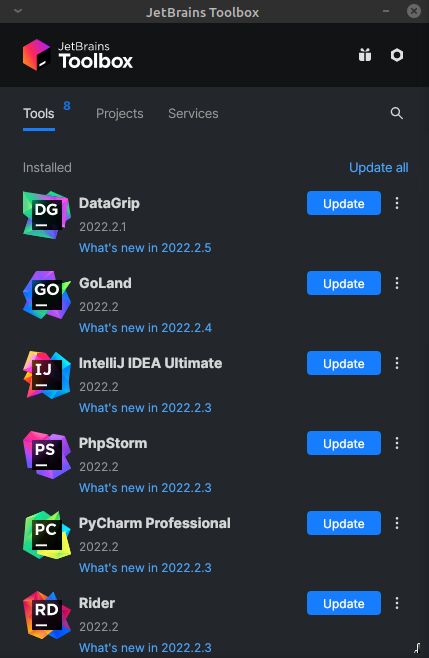

So, first up, let's actually talk a bit about software development, which takes up a certain portion of my life! Most of the tools that you'll be used to while doing development will also be available on various Linux distros. In my case, that is the majority of the IDEs that JetBrains offer.

Maybe that's a little bit of cheating because they're not "really" native software, at least as far as the UI is concerned, but since they work and seem mostly consistent, that's good enough (plus the actual IDEs are great to use):

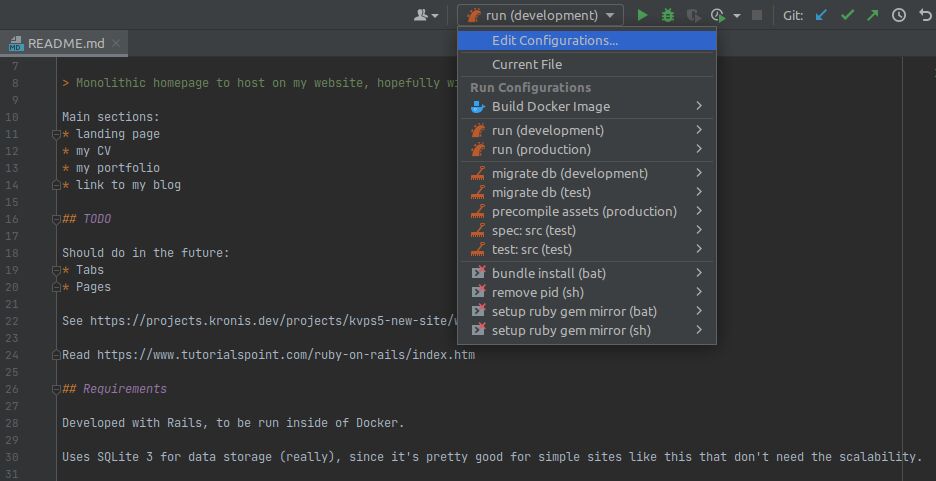

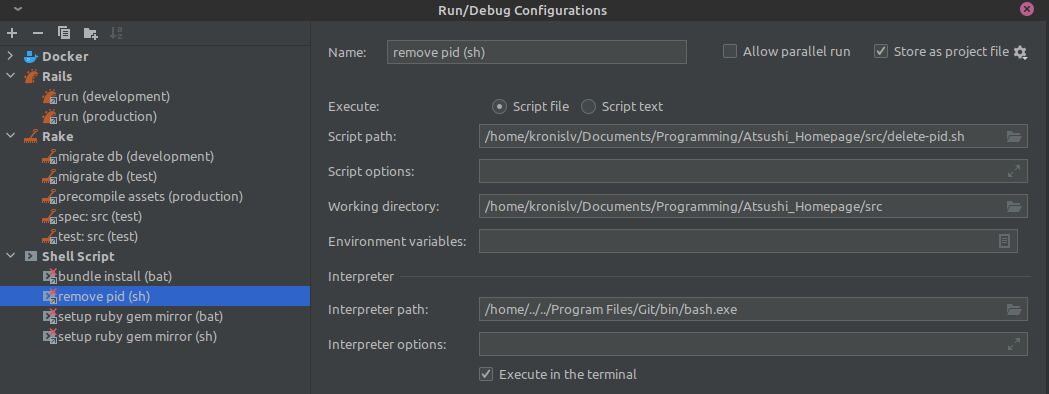

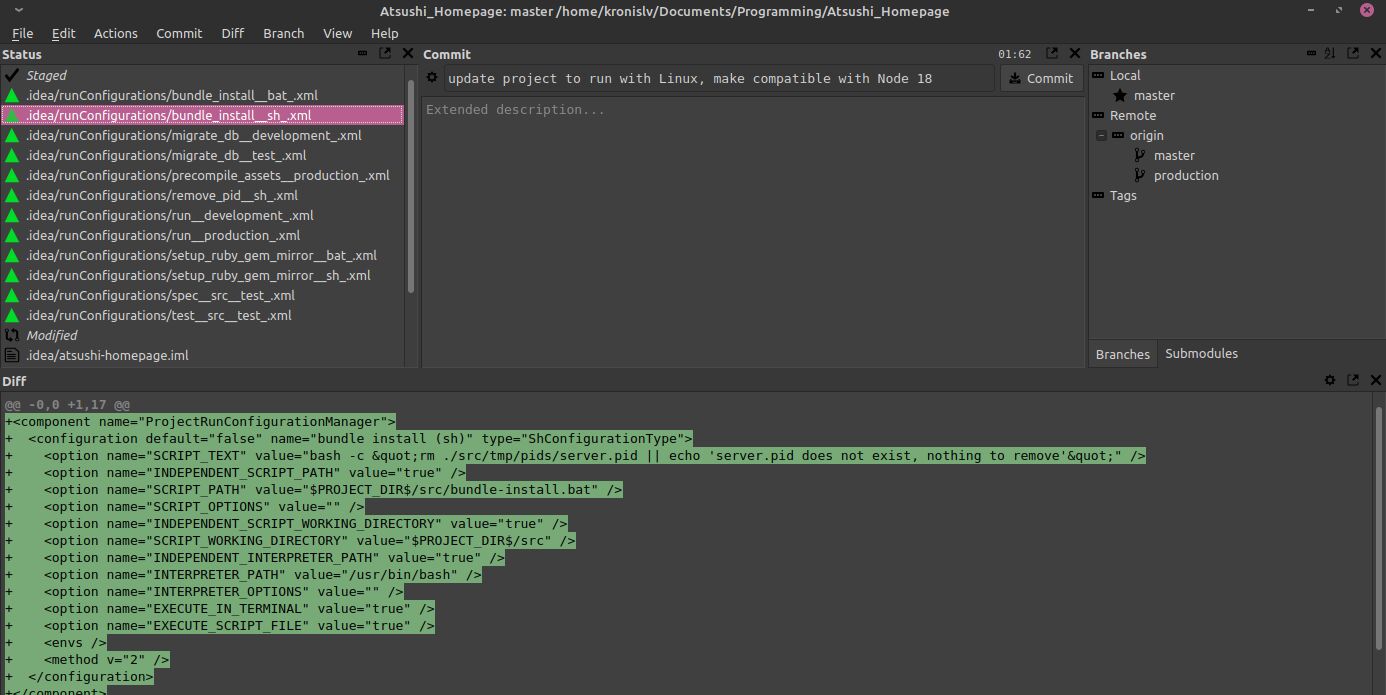

It isn't ideal, though, since the IDE run profiles in the case of JetBrains IDEs won't really always be portable. For example, I decided to do some development on my homepage (since it's still not finished), but none of the shell scripts showed up as launchable:

Why is that? Because for some exceedingly odd reason, the shell interpreter setup always does some weird as hell stuff with the file paths, choosing to mix the home path, with some upper directory paths (which don't actually resolve to anything), with a relative path to where Git Bash is on Windows:

Unfortunately Windows needed the full path (which the IDE transformed into a relative one and then later to whatever this abomination is on Linux) because otherwise it wouldn't execute Git Bash, despite it being in PATH. So, I end up needing separate run profiles for each OS, even though it's just a boring Bash script that I want to run. Luckily, it's not a big issue, just an annoying detail: a bit of the OS is leaking through the IDE.

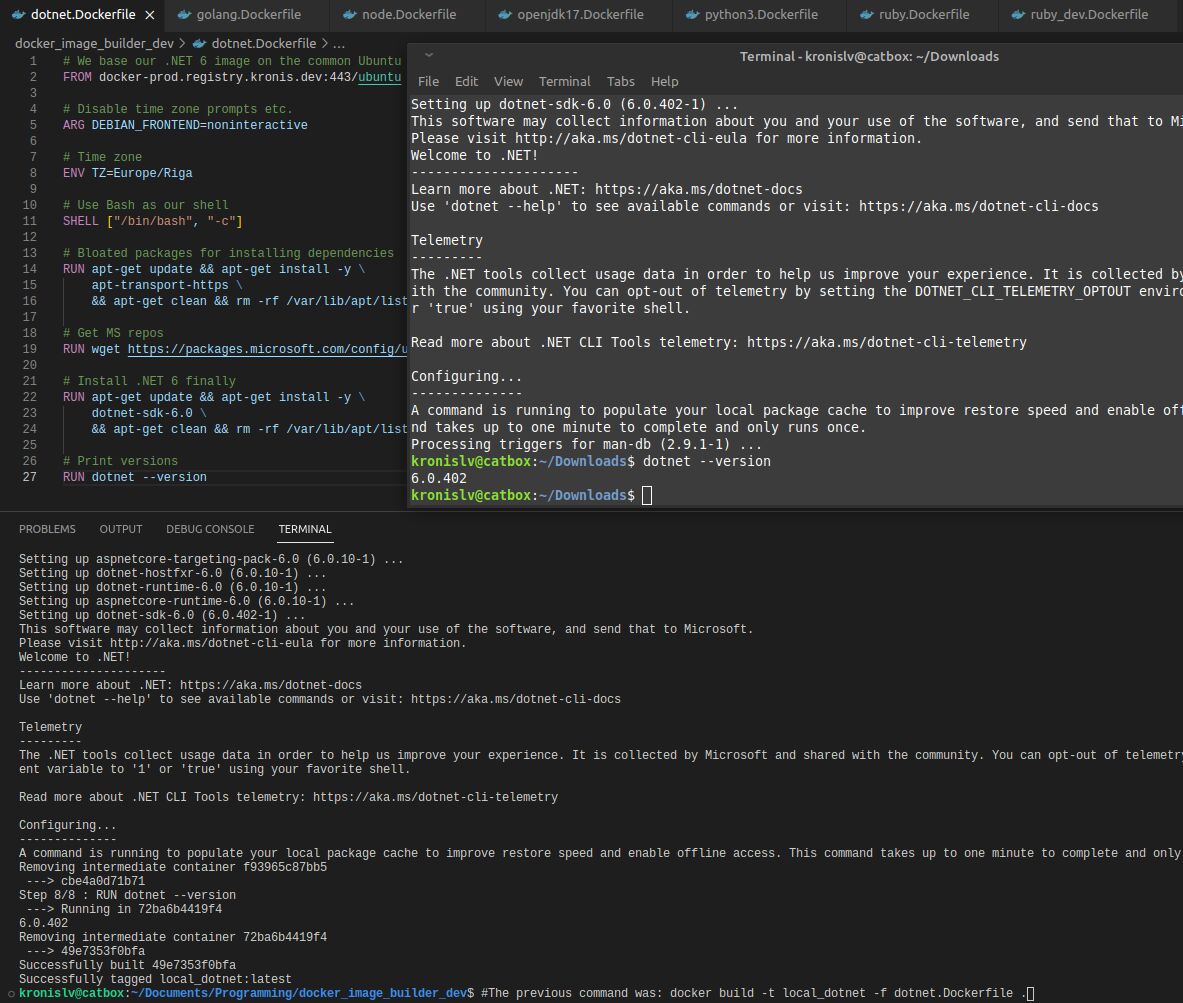

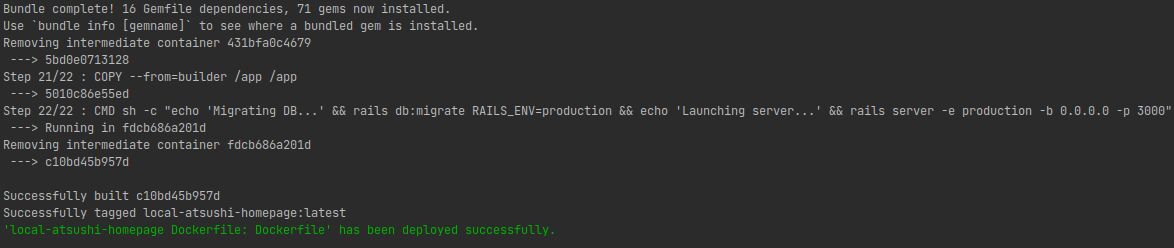

But you know what's way better? Being able to run the same thing inside of containers and on the host system. Now my containers instruct what dependencies I'll need for developing software in certain stacks, which in practice makes for an easy experience, as opposed to messing around with installing everything in 10 different ways on Windows:

I cannot overstate how much easier this makes my life. Plus, if I decide to run a newer version of Ubuntu or even something like Rocky Linux on my servers, these containers will keep working. And once I inevitably need to upgrade to the next Ubuntu LTS for the containers, I'll be able to lead by example with my desktop distro and have an easier time debugging everything that will actually break (or alternatively just run the old containers locally with remote debugging, when such a need arises).

I actually have a concrete example of how bad things were on Windows in comparison, in particular in regards to building containers. Guess what? Everything is consistent in regards to building the containers as well now, since even the underlying file system doesn't have weirdness with permissions either. No more pulling my hair out whilst being utterly puzzled about what exactly went wrong.

It just works:

Also, did I mention the shell? Look at this little window here:

This window opens you an entire world of possibilities! If you develop software, want to do some scripting and automation, or even just use Linux to its fullest, you'll need to spend some time in the shell. Mine is pretty boring, it's just a regular Bash setup with whatever terminal emulator came out of the box.

But guess what: it's leaps and bounds better than what something like Git Bash offers, because you have the entirety of GNU tools at your disposal. No more leaky abstractions over the NTFS file system, no more being stuck with whatever is available out of the box, no more mucking about with WSL2.

For many kinds of software development, GNU/Linux is just the choice that you should reach for if you want an experience that works well.

Development, the bad things

So, what are the bad things about development on Linux? I'd say the same that you'll get on other systems: code will still rot and depending on the ecosystem that you want to use, things will keep breaking if you do updates, or you'll end up using deprecated, dead and insecure software if you don't.

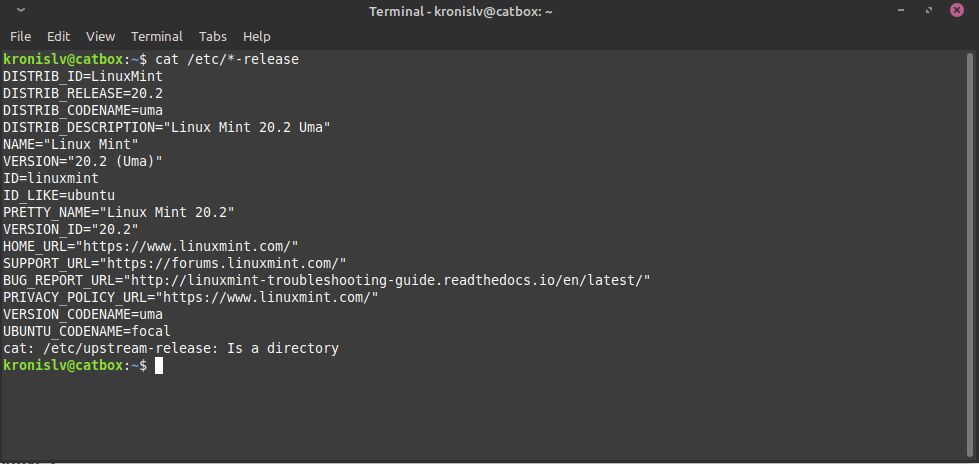

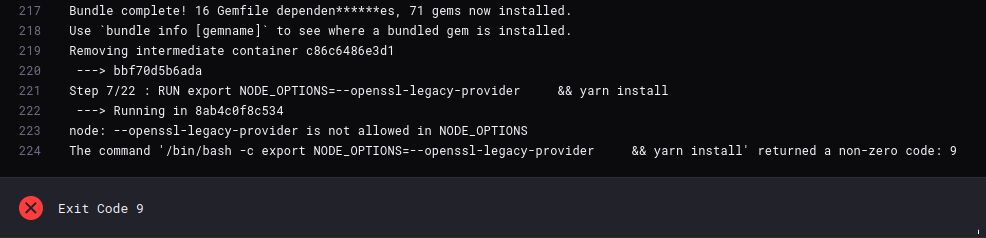

Let me give you an example with my homepage. I wanted to simply launch the boring Rails project and get the environment up and running locally, however, I was greeted by this instead:

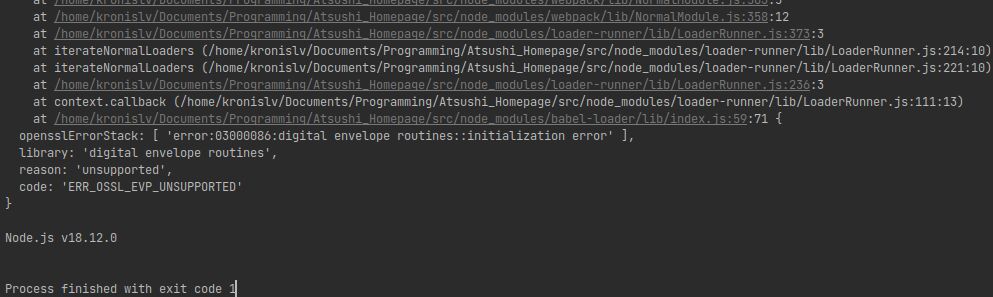

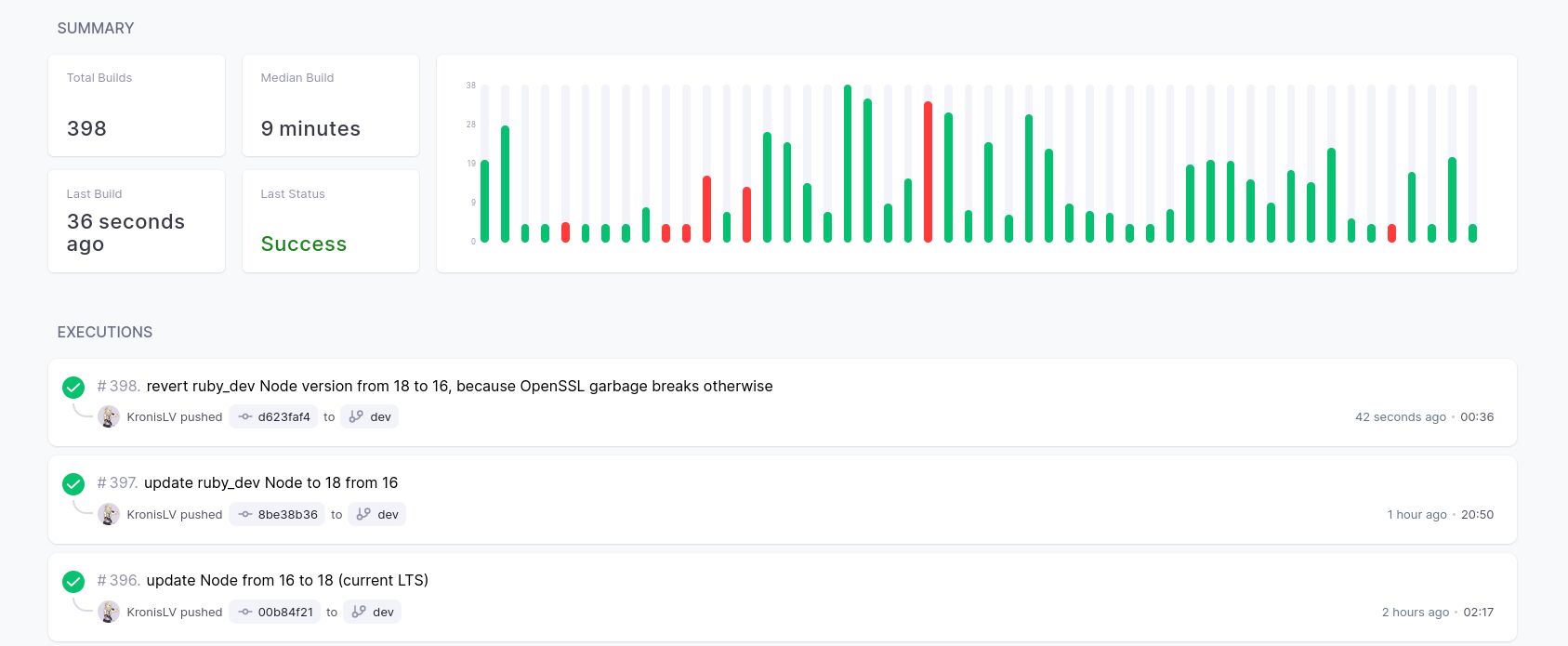

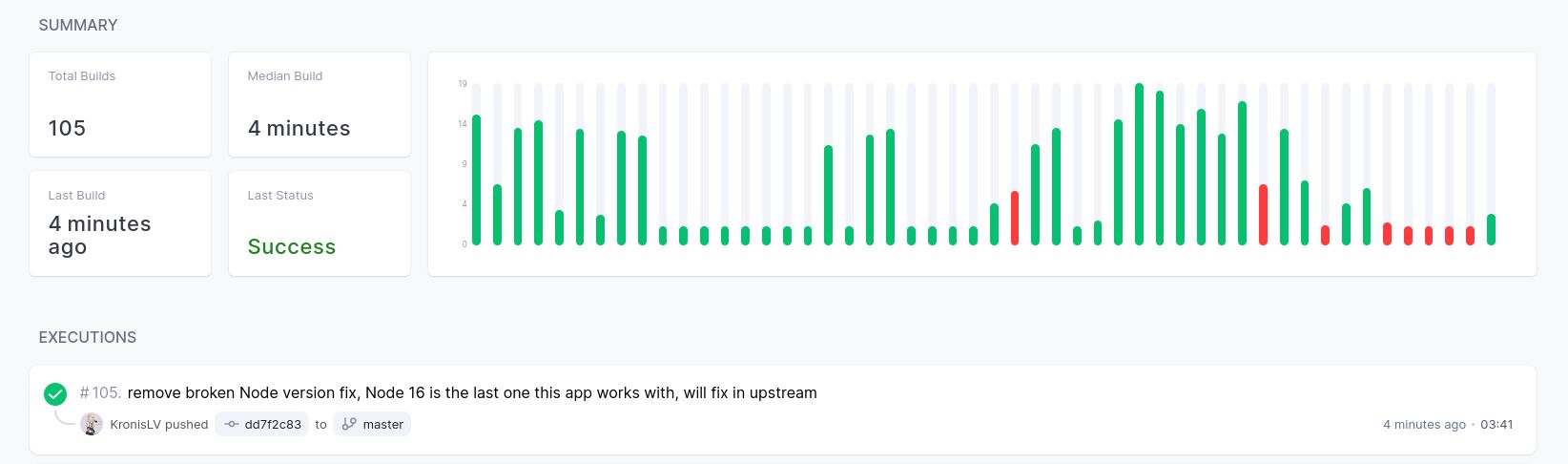

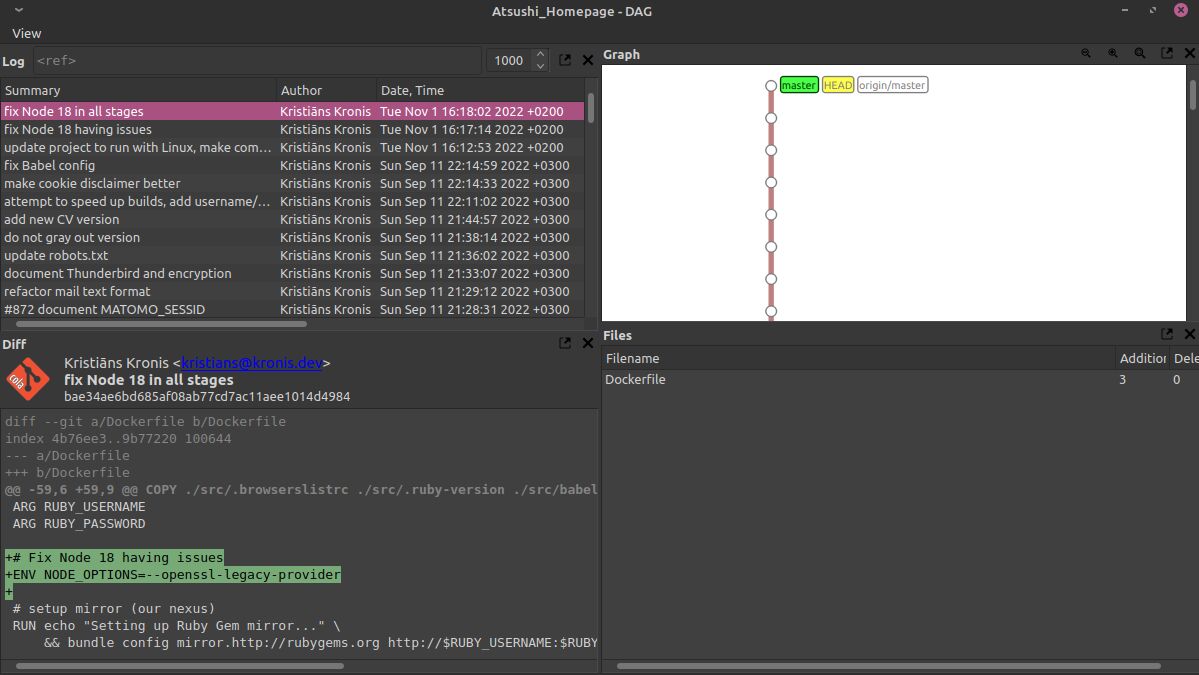

Why is that? Because I recently moved over from Node 16 to Node 18, which is the current LTS version at the time of writing this. But sadly, it seems like the version update changed some internals and now my code breaks, a story as old as time:

Worse yet, while there is a workaround, it seems like it only works locally, when I use the mentioned NODE_OPTIONS=--openssl-legacy-provider inside of my IDE launch configuration, as well as when I try to build containers locally:

But trying to build a Docker container on the CI node, from the same Dockerfile and with the same configuration, it fails:

It shouldn't happen. It shouldn't be possible. And yet that's exactly what happened, I have no idea why whatsoever. Do I care about the particulars? Not really, no, because over time you'll still run into odd cases like this and it will just be easier to stick with the old version of Node or whatever toolchain you need, or eventually migrate over the whole project to something more contemporary.
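For what it's worth, one way to avoid hard-coding the workaround is to only set it on Node versions that understand it, since the flag was introduced alongside OpenSSL 3 in Node 17 and older versions reject it in NODE_OPTIONS. The sketch below is not what my project uses and it won't explain the CI failure: both function names are made up, and the build command in the usage comment is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch only: decide whether the legacy OpenSSL provider flag is needed.
# node_major and legacy_openssl_options are hypothetical helper names.
set -euo pipefail

# Extract the major version from a string such as "v18.12.1".
node_major() {
    local version="${1#v}"   # strip the leading "v"
    echo "${version%%.*}"    # keep everything before the first dot
}

# Print the NODE_OPTIONS value to use for the given Node version string.
legacy_openssl_options() {
    local major
    major="$(node_major "$1")"
    if [ "$major" -ge 17 ]; then
        echo "--openssl-legacy-provider"
    else
        echo ""
    fi
}

# Usage (assumes `node` is on PATH; the build command is a placeholder):
#   NODE_OPTIONS="$(legacy_openssl_options "$(node --version)")" your-build-command
```

That way the same script can run against both Node 16 and Node 18, which at least removes one variable when comparing the local machine with the CI node.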

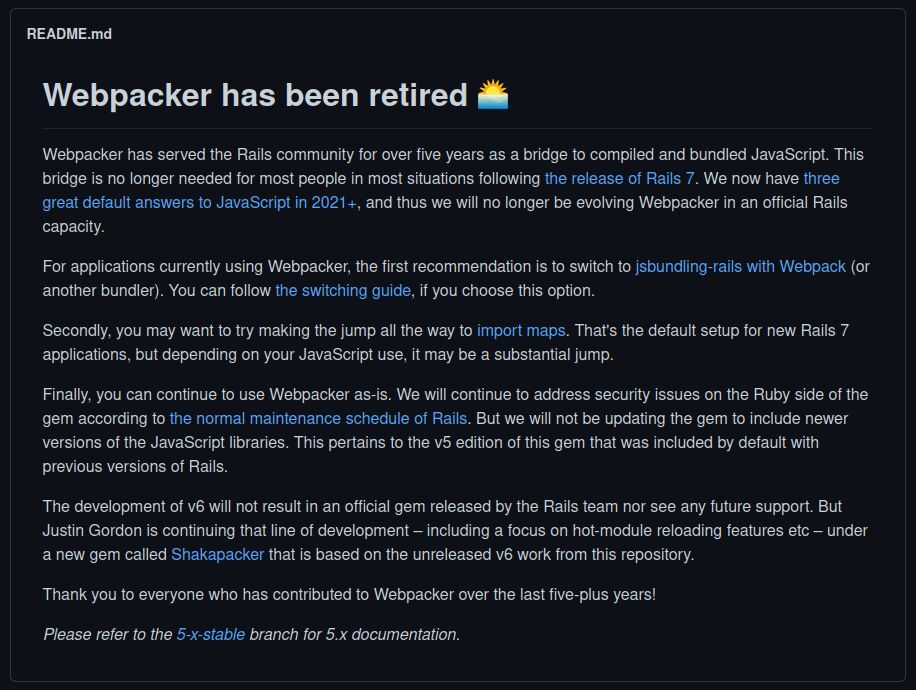

We often like to sit on our high horse and claim that we should keep everything up to date, but sadly that's just not the path of least resistance to have everything work:

Just look at how much Java 8 or Python 2 software is running out there, or something older than PHP 8, or even the old Perl out there in the wild. Sometimes it's not just the toolchain or package versions that need updating, sometimes you'll need to migrate away from entire sets of solutions, for example, in my case Webpacker for Rails won't receive any fixes for working with Node 18:

Thus, everything I need to be up to date to have my software work looks a bit like this:

- an up to date version of Ruby on Rails

- an up to date version of Node

- an up to date version of dependencies like Webpacker

Which is a shame, because I cannot update my version of Node without breaking Webpacker, and since the latter is deprecated, it won't receive updates for this. What's more, upgrading across major versions of frameworks can be a massive pain, so it might be better to treat the entire project as starting over, copying what works and rewriting what doesn't bit by bit. In my case, using server side rendering made for really easy development, but makes the project harder to migrate than a boring REST API and an SPA would be. Oh well.

In practice, this means that attempts to explore your options will oftentimes mean having to change both your upstream dependencies (like server Ansible configuration for packages, or container base images):

And then also changes in the project itself:

Don't get me wrong: Windows and any other OS also have these problems. Some stacks that move ahead a bit more quickly (e.g. Node versus something like Pascal, though very few do web development in the latter) will mean more problems. A lot of the time these won't be impossible to solve, but the more projects you have up and running and need to keep alive, the more time you'll need for all of it. I only have so much time, though.

You will have to live with the knowledge that basically all of the software that you write and use will rot eventually, much like the Windows registry or the contents of my home directory do.

Software and alternatives to what Windows has

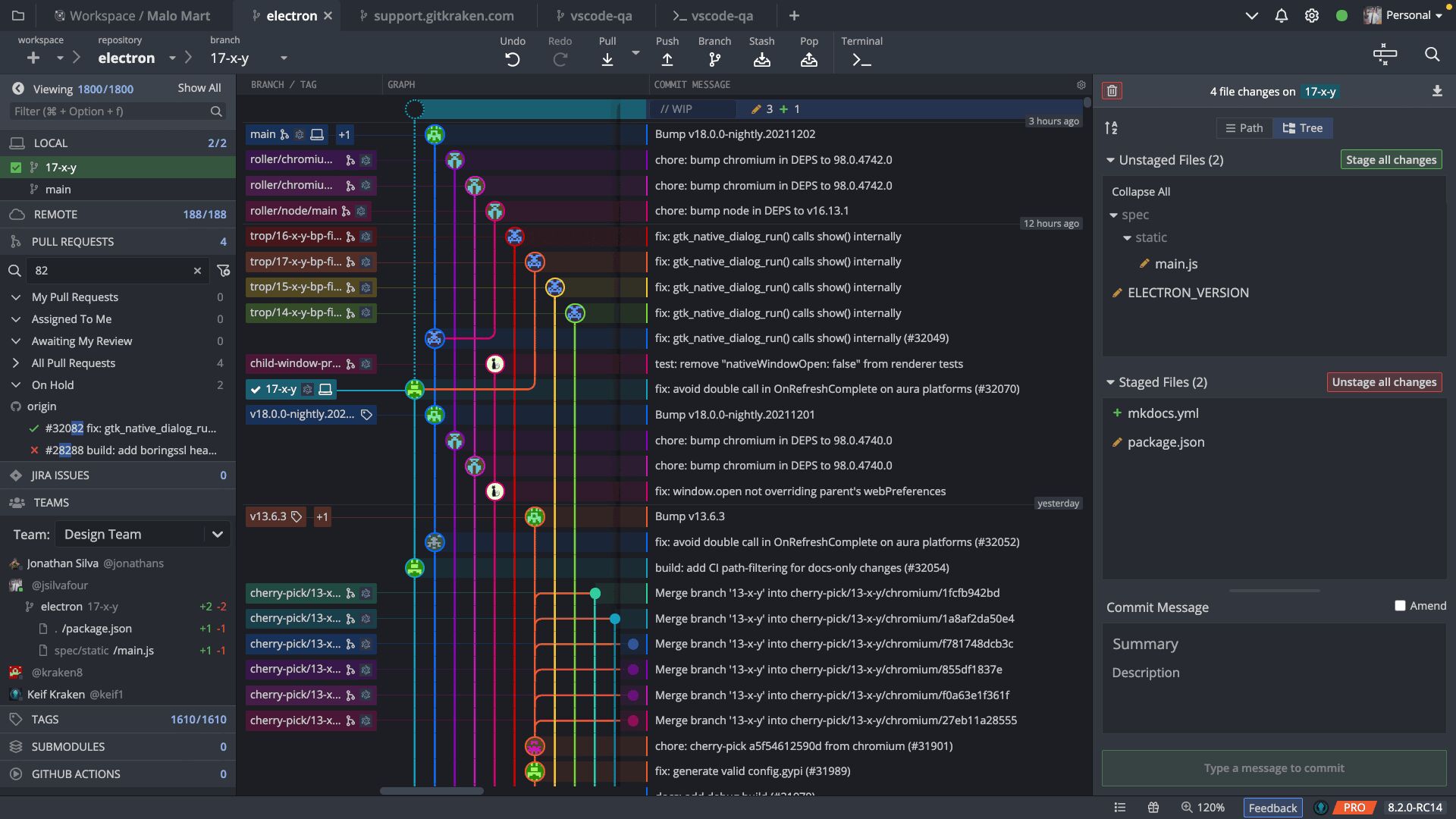

On a bit more positive note, you know what is nice? A free piece of software called SourceTree, that makes working with Git about as pleasant as I want it to be. It doesn't abstract away the wrong things, it makes common actions more user friendly and easy to do, as well as adds a nice visual UI on top of Git. It's actually so good, that both professionally and at home, I haven't dropped down to the Git CLI for months:

But there's a little bit of a problem: it's Windows and Mac only, so no SourceTree on Linux! Which is a bit of a bummer, true, but it's not like there aren't capable alternatives. Something that's still simple and gets pretty close is Git Cola. Even without looking for anything more fancy, you can easily get visual diffs, being able to stage files one by one at a glance, work with branches and all of the other common actions:

They did make a bit of an odd choice of making the Git graph and history view a separate piece of software, but generally it's decent:

You can still use whatever is integrated in your IDE or text editor (like GitLens for Visual Studio Code, for example), of course, but personally I like a separate piece of software that's not tied to a specific editor. Also, if you don't mind paid software, then I have to say that GitKraken is an excellent choice in this space: it is to Git UIs what JetBrains products are to IDEs, one of the best options out there, I'd say even better than SourceTree:

That's generally the story so far: most of the software that Windows has, also has an equivalent on the various Linux distros. Now, many might say that something like GIMP isn't as good as Photoshop, but since I use GIMP on both platforms, I couldn't really say. It is also true, however, that much of the commercial software still will have Windows as their main platform of choice, which might complicate things.

However, things now are better than they were a decade ago. For example, if you want a video editor, you're no longer limited to only running Sony Vegas (or Windows Movie Maker, if we're talking about way back) on Windows. Now you can use even fully featured software like DaVinci Resolve on the platform of your choice:

The trend goes in the opposite direction as well, even in the same video editing space. Personally, I've used some software with roots in *nix on Windows too, since Kdenlive provides builds for multiple platforms:

Apart from the mentioned software packages, the same goes for:

- office suites like LibreOffice

- browsers like Firefox

- modeling software like Blender

- recording software like OBS

- audio editors like Audacity

- picture viewers like XnView MP

- video players like VLC

- encryption software like VeraCrypt

- password managers like KeePass

- archive managers like PeaZip

- virtualization solutions like VirtualBox

- container runtimes like Docker

and many others! The list above isn't exhaustive, but I can't help but feel wholesome vibes at the trend of there being less platform lock-in and more opportunity for consistency across whatever platforms or combination thereof you want to use. I'm not telling folks who are comfortable with MS Office to ditch it, not at all, but personally I like launching the same software package on whatever OS I'm on and being familiar with it instantly, instead of having to deal with the context switching overhead.

It's a pretty good time, because you can do most things on most OSes, without too many issues.

Software that Windows does better

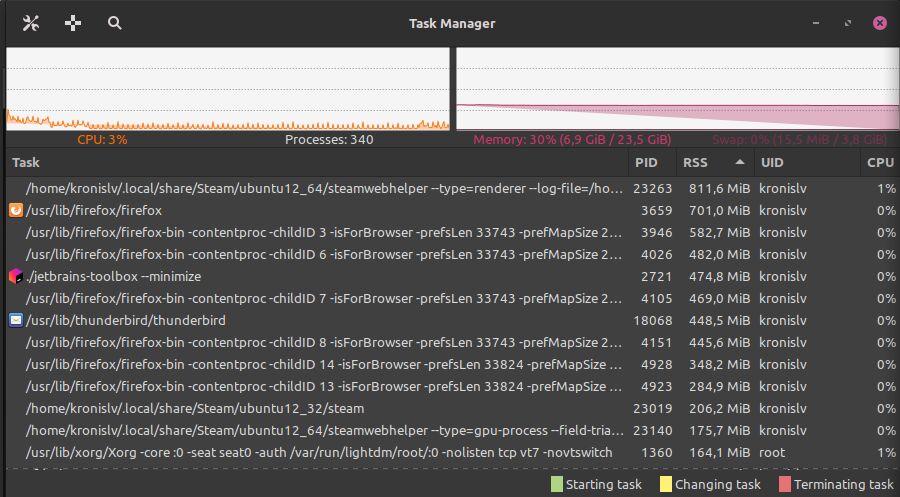

I'll admit, however, that Windows has some excellent software as well, that sadly hasn't really made its way over to Linux. Let's start with something silly, the Windows Task Manager:

It is pretty much the best type of software for getting information about the state of your system at a glance: everything from your CPU and RAM usage, to how much load your disks are dealing with, how much data is being pushed over the network, as well as even your GPU load! For comparison, here's what my Linux distro has out of the box (the RAM graph is a little bit buggy):

It works, sure, but it's not as nice. The good thing is that someone out there will have probably written something close to what Windows has, or you could get most of the data through something like iotop or ntop, but for an out of the box experience, Windows has everything you need here (as well as the reasonably nice Resource Monitor program).
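If you just want a couple of numbers without installing anything, much of this data is also exposed as plain text under /proc. The sketch below is a different route than iotop or ntop and nowhere near a Task Manager replacement: the function names are made up, and it assumes a kernel that reports MemAvailable in /proc/meminfo, which anything reasonably recent should.

```shell
#!/usr/bin/env bash
# Sketch only: read memory usage and load average straight from /proc.
# mem_used_percent and load_1min are hypothetical helper names.
set -euo pipefail

# Print used memory as a whole percentage, based on MemTotal and MemAvailable.
# The file argument defaults to /proc/meminfo and exists to ease testing.
mem_used_percent() {
    local meminfo="${1:-/proc/meminfo}"
    awk '/^MemTotal:/     { total = $2 }
         /^MemAvailable:/ { avail = $2 }
         END { if (total > 0) printf "%d\n", (total - avail) * 100 / total }' "$meminfo"
}

# Print the 1 minute load average, the first field of /proc/loadavg.
load_1min() {
    local loadavg="${1:-/proc/loadavg}"
    cut -d' ' -f1 "$loadavg"
}

# Usage:
#   echo "RAM used: $(mem_used_percent)%, load: $(load_1min)"
```

Disk and network load need a bit more work, because those counters are cumulative and have to be sampled twice to get a rate, which is the kind of thing the dedicated tools do for you.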

Next up, there's Notepad++ which I still think is one of the better "true" text editors (versus something like Visual Studio Code, which leans more into being a lightweight IDE with some plugins), an alternative for which I still haven't quite found on Linux:

(there have been and will be attempts to do this, though for basic stuff you can just use xed or gedit or whatever your distro has by default, they're fine)

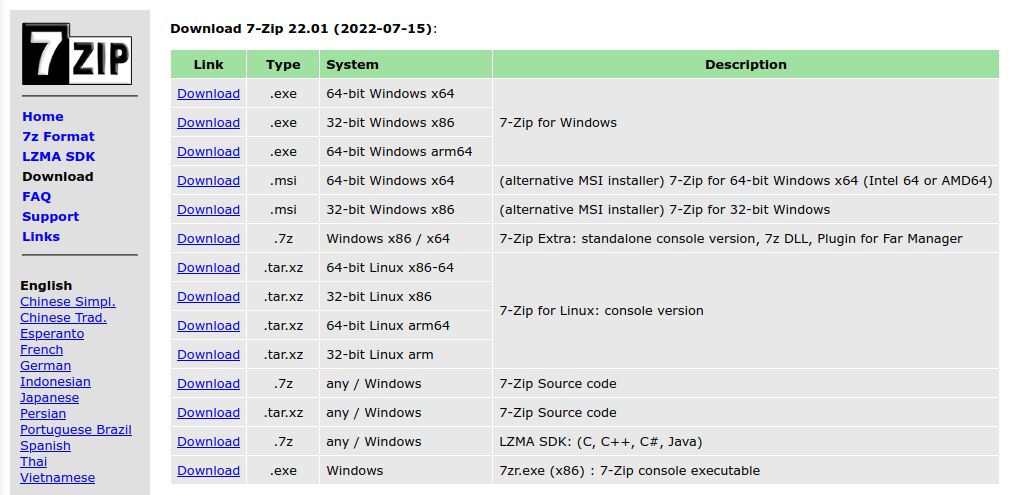

Another piece of software that I really like on Windows is 7-Zip, quite possibly my favorite archive management program, with a really nice Explorer integration:

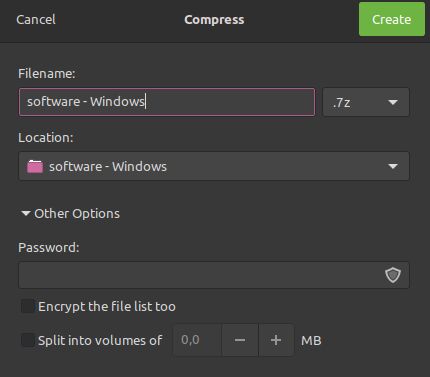

As you can see above, you can get its CLI version for Linux, but I'm using it primarily just for its UI, though PeaZip is passable on Linux as well. For very basic usage you can also just stick with whatever archival software your file manager in Linux has, though it can be woefully lacking, options wise:

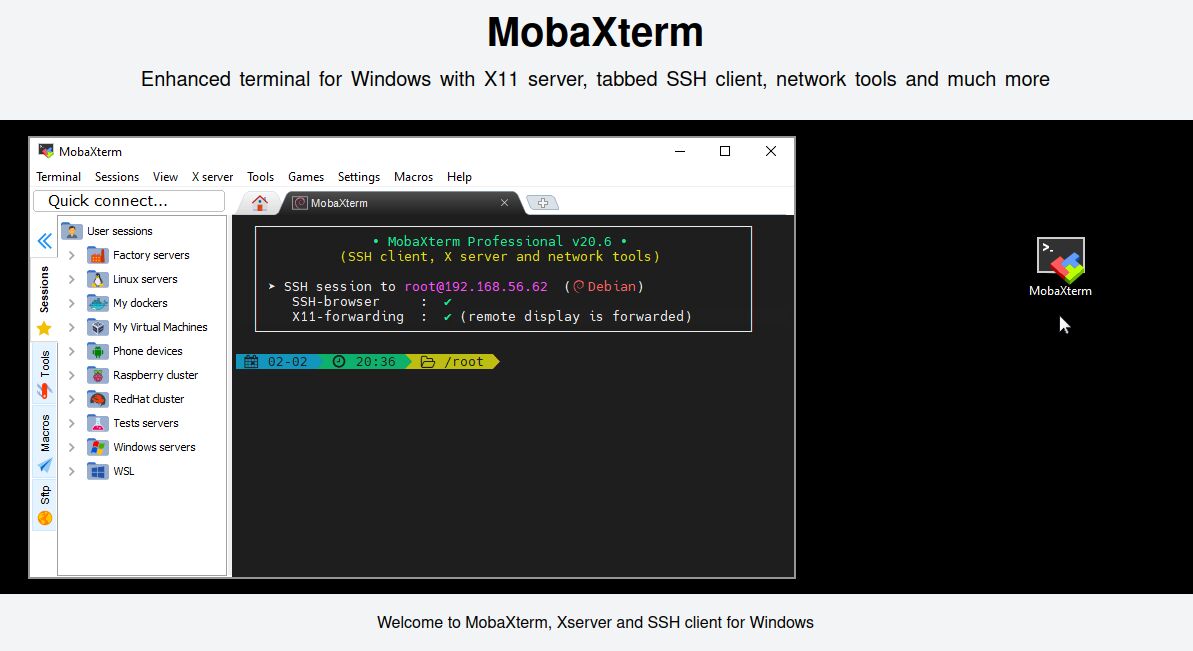

Next up, Windows also has this one excellent program for various remote connections: MobaXterm. It has a free version but you'll probably want the paid one, which has no limits for connection counts. It lets you group your SSH connections, have RDP/VNC connections, SFTP connections, as well as a bunch of others, all in a very nice interface:

Now, I've tried replacing it with mRemoteNG and WinSCP on Windows as well for when the free version isn't sufficient, but Linux just doesn't have that many great options in this space, which is surprising. Am I missing some really great software in Linux here? I'm not sure, but the multi exec functionality alone is great in MobaXTerm (for example, if I want to edit the crontab manually for 2 or 4 servers at the same time).

The closest I've found for managing connections in Linux is Remmina which works, but isn't as good. Luckily, many distros will also have remote file system integration in the file browser, so connecting to those and browsing them is actually pretty easy, removing the need for something like RaiDrive on Windows.

Next up: content creation! Sometimes I like to stream games and such on Twitch, where I use a virtual avatar (VTuber) instead of a webcam, because that feels more comfortable for me. For managing certain aspects of mine, I like to use StreamElements, but sadly it seems like their OBS plugin doesn't support anything but Windows:

That isn't the worst thing ever, I'll admit, but the avatar software that I like to use, VUP, is also Windows only. Maybe it's a bit of a niche package, a lot of people use VSeeFace instead and they have a page for running on Linux, but as you can see in that page, it's all a little bit patchy and not as stable or polished as one would hope.

The good news, however, is that I can at least get some of the plugins for OBS, like the ones for making my audio sound a bit less bad, on Linux as well, so much of my Windows audio filter setup should carry over with a bit of work:

Based on what I've currently tried and know, streaming on Linux is definitely possible, but this is one of those areas, where you can't expect to have something close to a 1:1 experience. It's more likely that you'd have to use the stream chat in your browser, as well as look at other metrics there, import your widgets as regular overlays in the regular OBS and so on. It still is doable, but not as nice or easy.

Also, remember how I said that both Windows and Linux have somewhat broken window tiling by default? Well, on Windows there's this excellent piece of software called FancyZones, which lets you customize how window snapping should work:

It doesn't "really" change how the OS window tiling works, since you just end up with non-fullscreen/non-expanded windows that are simply resized to whatever size was calculated based on your snapping settings (such as a screen having left and right slots, or upper and lower slots, each with a given margin between the windows and taskbar), but it's still what I wish I could use in my Linux distro of choice. It's an excellent piece of software, that wouldn't be out of place in the OS by default.

Just take a window, drag it while holding Shift and let go once the snap preview shows you what will happen. XFCE already has dragging around windows by holding the mouse on them anywhere with the ALT key pressed, so this would be a nice step up, if someone could pull it off. Once again, it probably depends on the distro, since distros with the Cinnamon desktop already have something a little bit like that out of the box.

Software that Windows does better, for GPUs

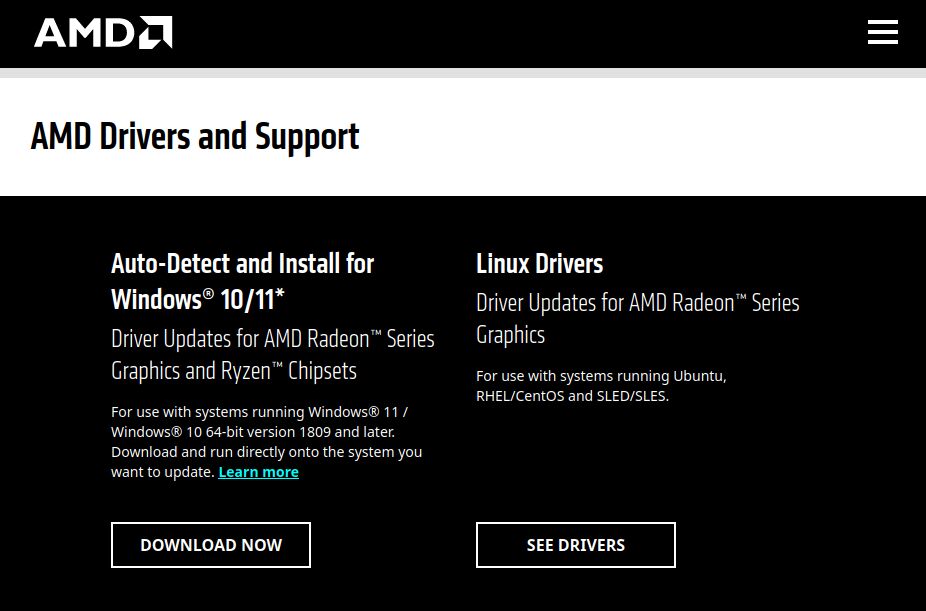

This deserves a separate section, because it is important, but gets a little bit long. In regards to gaming and running graphically intensive applications, I think that the AMD Radeon Software is a must, regardless of the OS, if you have an AMD card like I do.

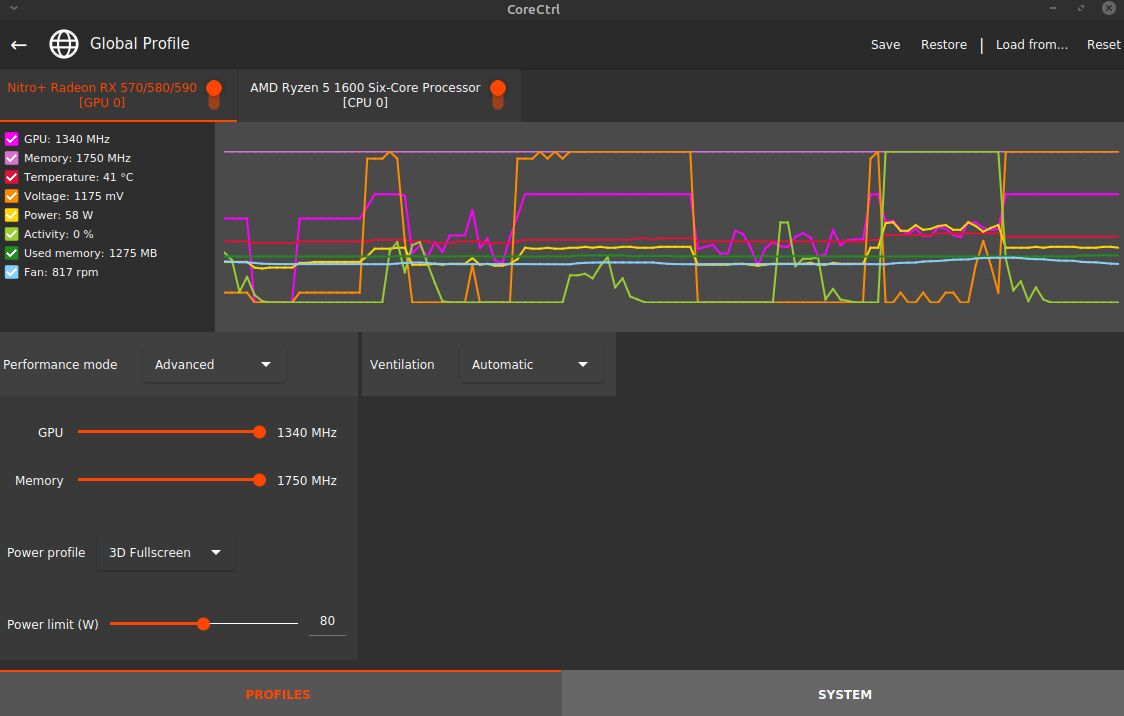

While GPUs generally work decently on Linux, as long as you have the proper drivers installed (which should happen automatically nowadays, for the most part), one invaluable piece of software is something to let you tune how exactly they work. In my case, I use the software to limit the power that my GPU can draw, not to overload the PSU and also to hopefully increase the lifespan of the card:

I only increase the power, when I'm streaming and my avatar starts lagging, or the stream frame rate falls below 30, which in my eyes is the lower bound for things being tolerable. Yet, when we look on the AMD site, Linux doesn't even have the software itself up for download, only the drivers:

Well, if you do a bit of digging, you can apparently find a release of the software that's meant for Linux in particular, which gives us a .deb file to download:

However, installing it involves changes related to the kernel and DKMS gets involved, which isn't exactly alarming in its own right, but is definitely a little bit concerning, to the point of me no longer being sure whether the system will even reboot properly the next time, or whether I'll end up needing to do a bunch of Googling to revert the changes, if the driver is badly written, incompatible, or there are other subtle differences between Ubuntu and the distro I'm using.

In the end, it actually turned out, that it's still just the drivers, not the graphical utility that I wanted! Why call it 90% the same way as the GUI application, then? What's worse, it appears that there is something that's called even closer to the Windows desktop offering, but it was only supported for Ubuntu 16.04 and it still may or may not be just the driver:

Now, luckily there is CoreCtrl which you can use on Linux, to achieve much of the same:

However, even if I have no problem running third party software for controlling my GPU (which might damage hardware in some circumstances), CoreCtrl doesn't quite achieve the same results as AMD software. You see, by default the card controls its own GPU and memory clock values, which means that when idle the GPU draws around 40 W of power. However, if I want to set a limit for how many watts in total it can use, it also makes me set the GPU and memory clock values, which will then be fixed: so at idle the GPU will use about 60 W of power.

This is not at all necessary and isn't welcome, when I just want to set a top power limit and let it sort out the rest on its own. In short, while AMD has decent drivers for Linux that work (for the most part), their software naming is kind of weird and misleading and you can clearly see where their priorities lie - not in providing desktop software for Linux users. CoreCtrl is a really nice project that kind of makes up for those shortcomings, but not really.
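For what it's worth, the amdgpu kernel driver documents a power cap that is exposed on its own through hwmon files in sysfs (`power1_cap`, alongside `power1_cap_min` and `power1_cap_max`, all in microwatts). Below is a minimal Python sketch of reading and writing that value. The exact directory layout, the `card0` name and the helper names are assumptions for illustration, writing requires root, and whether this leaves the clocks on automatic for any particular card is something that would need to be verified:

```python
from pathlib import Path

MICROWATTS_PER_WATT = 1_000_000


def find_power_cap_files(card: str = "card0", sysfs_root: str = "/sys") -> list[Path]:
    """Locate power1_cap files for a DRM card (the path layout is an assumption)."""
    hwmon_dir = Path(sysfs_root) / "class" / "drm" / card / "device" / "hwmon"
    return sorted(hwmon_dir.glob("hwmon*/power1_cap"))


def read_watts(path: Path) -> float:
    """Read a hwmon power value (stored in microwatts) and return it in watts."""
    return int(path.read_text().strip()) / MICROWATTS_PER_WATT


def set_power_cap(cap_file: Path, watts: float) -> int:
    """Write a new cap, refusing values outside the range the driver advertises."""
    microwatts = round(watts * MICROWATTS_PER_WATT)
    low = int((cap_file.parent / "power1_cap_min").read_text().strip())
    high = int((cap_file.parent / "power1_cap_max").read_text().strip())
    if not low <= microwatts <= high:
        raise ValueError(f"{watts} W is outside the allowed range of the card")
    cap_file.write_text(str(microwatts))
    return microwatts
```

In practice this would be something like `set_power_cap(find_power_cap_files()[0], 150)` run as root. As far as I can tell the value doesn't survive a reboot, so it would have to be reapplied on startup.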

Game development and interactive content

Okay, so why don't we talk about games for a little bit, which is something that matters to a lot of folks. Actually, let's talk about something a bit more general at first - interactive content and making it.

For example, even things like GUI application development have historically been difficult: on Linux you have GTK and Qt, whereas Windows has Win32, WPF and UWP, though you can also run GTK and Qt apps on Windows for the most part. Or some tech stacks have their own solutions, like Java has Swing and JavaFX. And then there's something like Pascal and Lazarus, which decided to smush together bunches of those aforementioned options in a sort of meta framework that picks the target based on your build setup.

And that's just getting a bunch of UI components rendering on the screen. Imagine what the situation was for game development, where you needed to use GPUs and their drivers, to render graphical content with 3D models and shaders and other effects at 60 to 144 Hz. While the whole GUI app development has been infected by the likes of Electron which is good from a development point of view, but worse than what Pascal and Lazarus did in most other conceivable aspects, I'm here to tell you that things are better in regards to games and other interactive content.

Actually, Unity, Unreal and Godot are all cross platform, in terms of both the editor and the platforms that your games or applications will be capable of running on. They each have their own input management, sound and graphical effects, physics, networking, UI components and everything else that you might need, so in that regard, there is less fragmentation. Just pick an ecosystem that you want to be a part of and make something awesome!

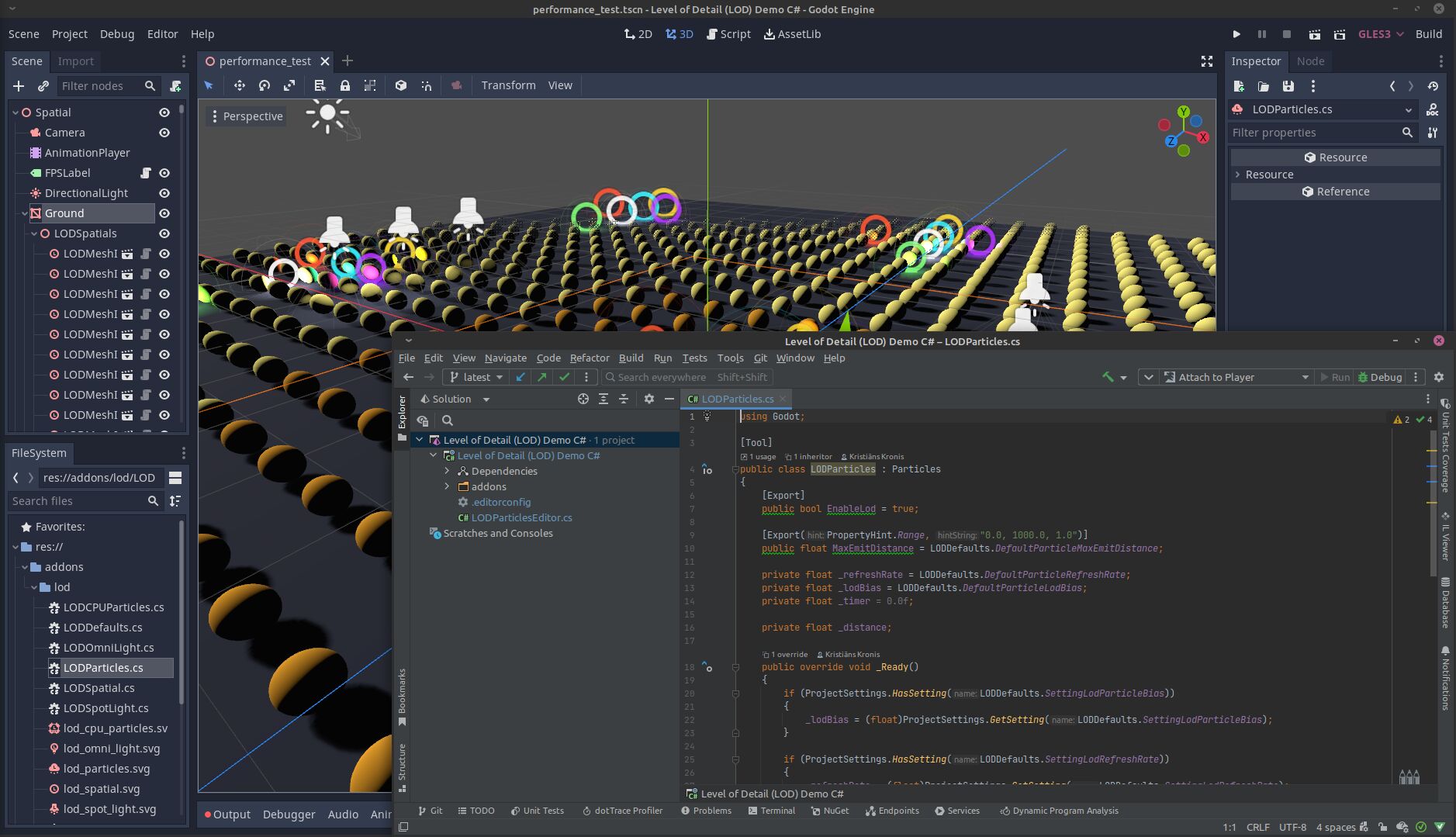

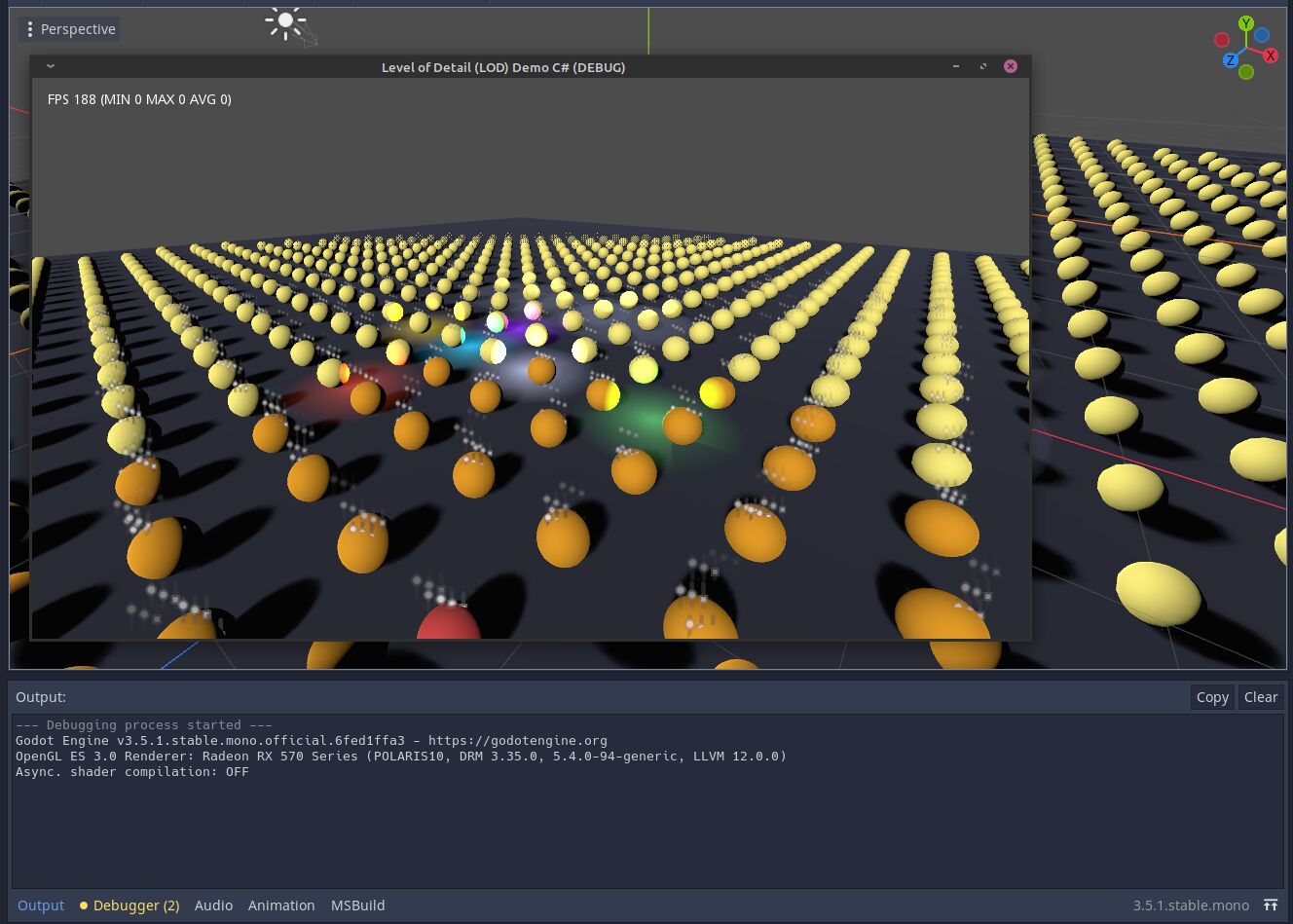

Here's an example of me getting one of my former Godot projects up and running in Linux in less than 5 minutes:

Both the IDE and the game engine work, as does actually running the project:

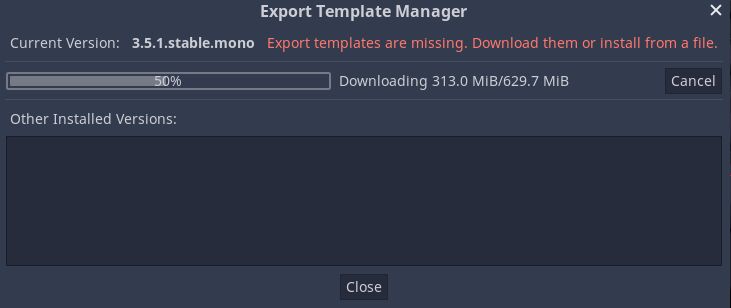

Well, suppose we want to export the game, what would that look like? Well, first we need to get the export templates:

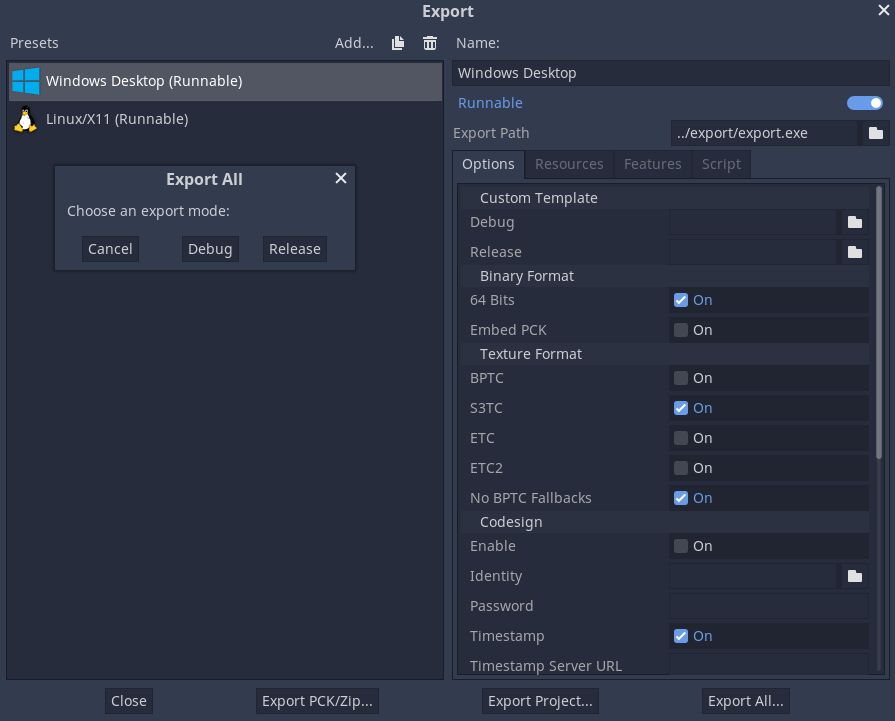

And then we can configure the export for whatever platforms are supported by the game engine:

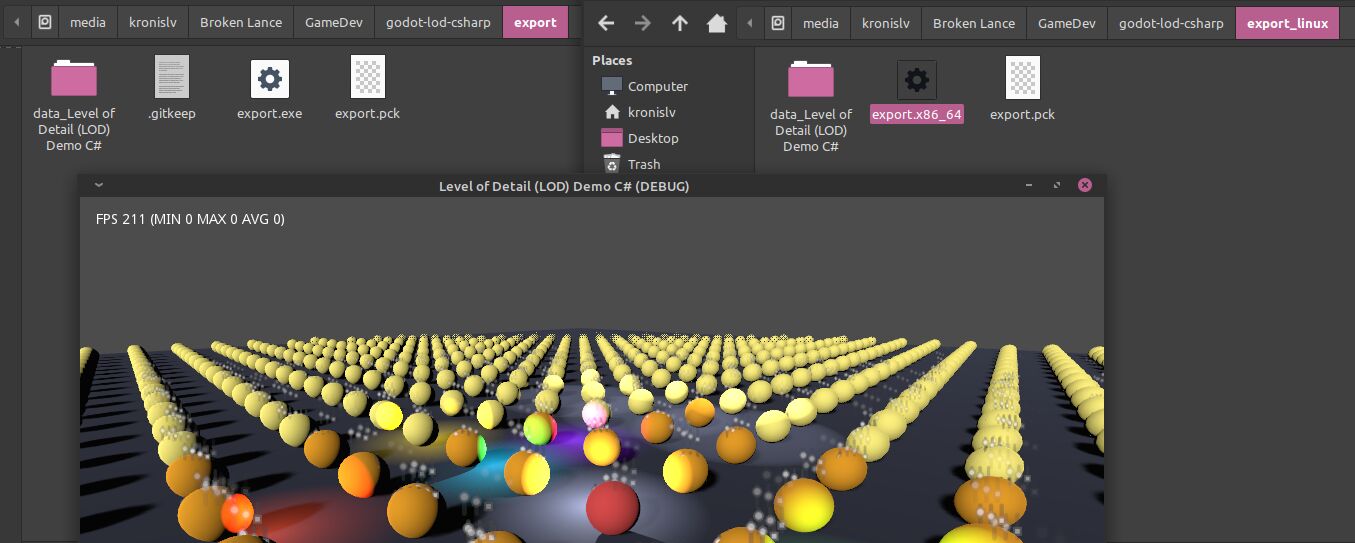

And then, a little while later, you have your project exported, from where it can be run:

Now, Godot is just my engine of choice here, because running it is also very easy - I just got the binary off of their website and it just worked. But the trend here is clear, in that things are about as easy as they can get, as long as you don't do anything too niche or out there. So what does this mean for gamers and game developers? For one, it's actually not too hard to get started with some basic platform support for Linux. Of course, some will point out that the Linux gamers are the minority and are responsible for many bug reports, but for some that additional bit of profit will be welcome.

Also, it's nice to see that entire platforms aren't getting left behind just because of the network effect. This is especially relevant, because these game engines can also be used to build some rather nice and useful software, like Material Maker. Actually, back in the day, I even once used Unity in a project to visualize stock prices across various characteristics and how those change over time, in a 3D space.

Running games and ProtonDB

But what about actually running games?

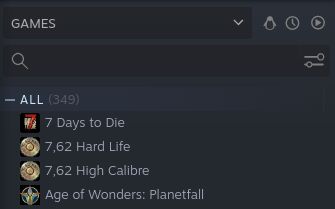

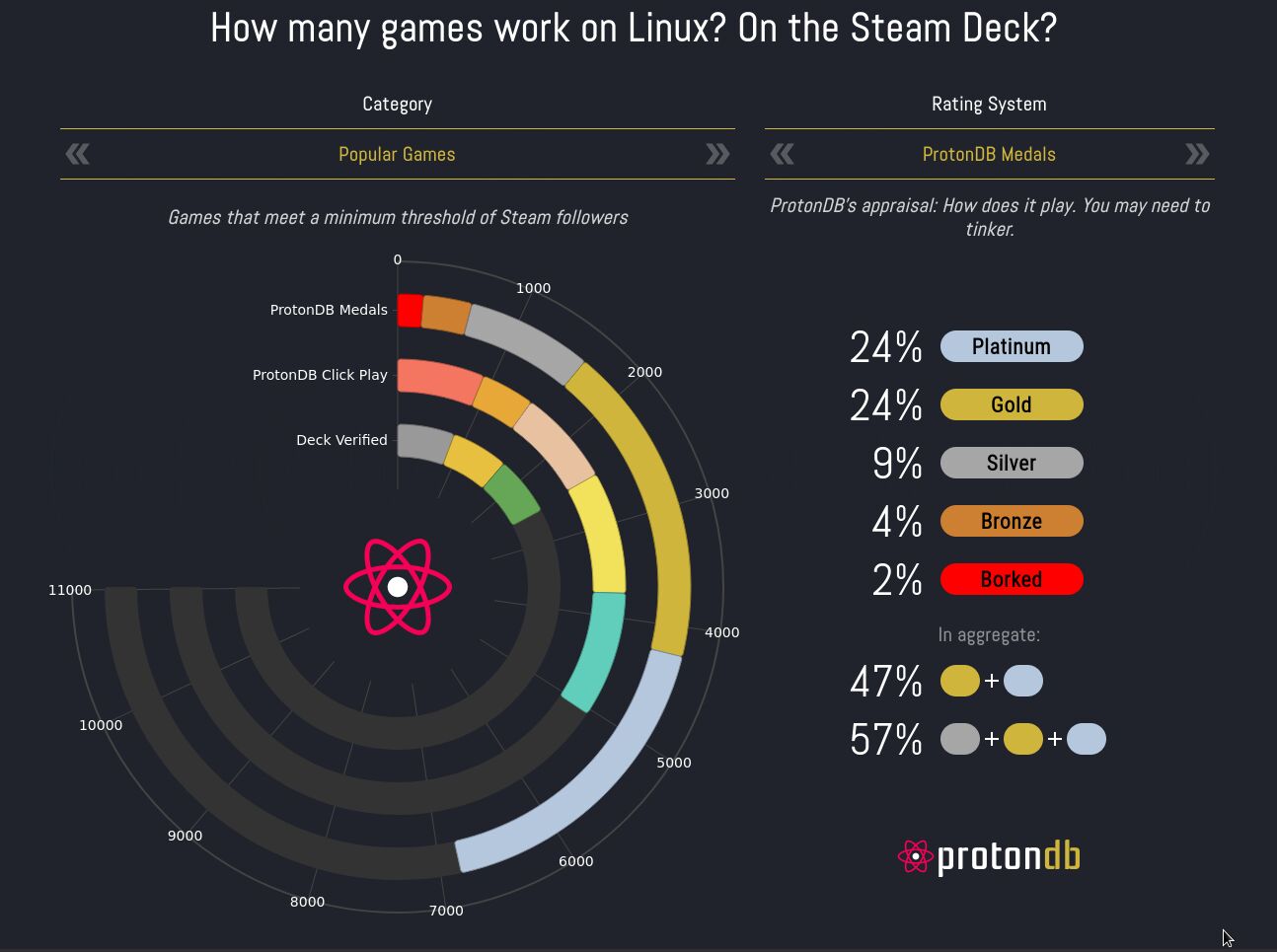

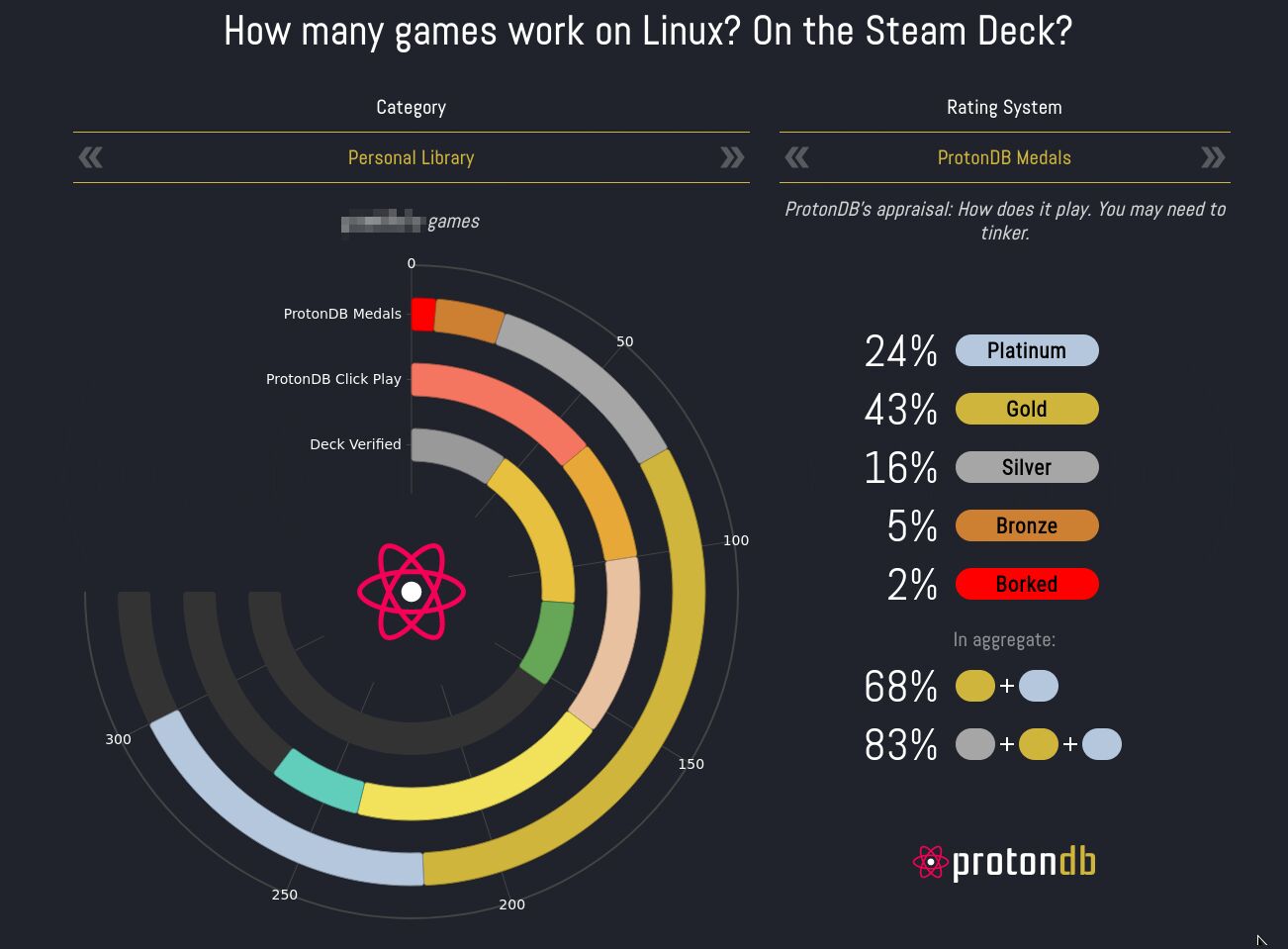

Let's take a quick look at my Steam library. In it, I have 349 games in total, some of which are free, others I picked up as a package deal on discount, the majority of which are various indie games:

Steam has this helpful filter, where you can only look at the ones that are supported by Linux, which drops the count down to 94 games. In other words, about 27% of the games that I have run on Linux natively:

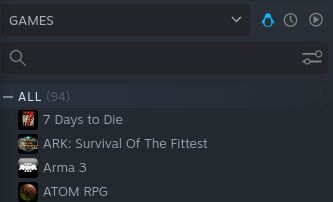
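The 27% figure is just the two library counts from above divided by each other, which you can redo for your own library in a couple of lines of Python (the function name is only for illustration):

```python
def native_share(native_games: int, total_games: int) -> float:
    """Percentage of a library that has a native Linux build."""
    return 100.0 * native_games / total_games


# 94 native titles out of 349 in total, as counted in my Steam library
share = native_share(94, 349)
print(f"{share:.1f}% of the library runs natively")  # prints 26.9%
```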

This is not excellent. However, in recent years the people behind Steam have come up with Proton, which builds on top of Wine to let you run some traditionally Windows-only games on Linux. Luckily, turning it on is as easy as fiddling with some of the settings in Steam:

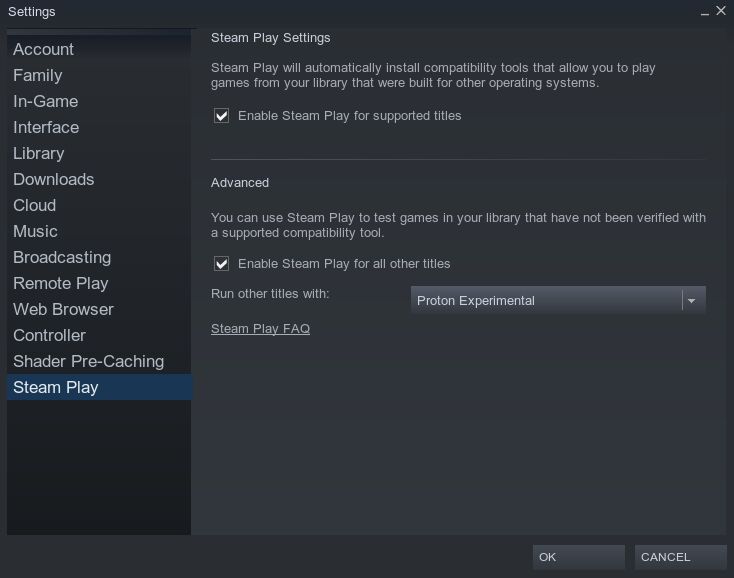

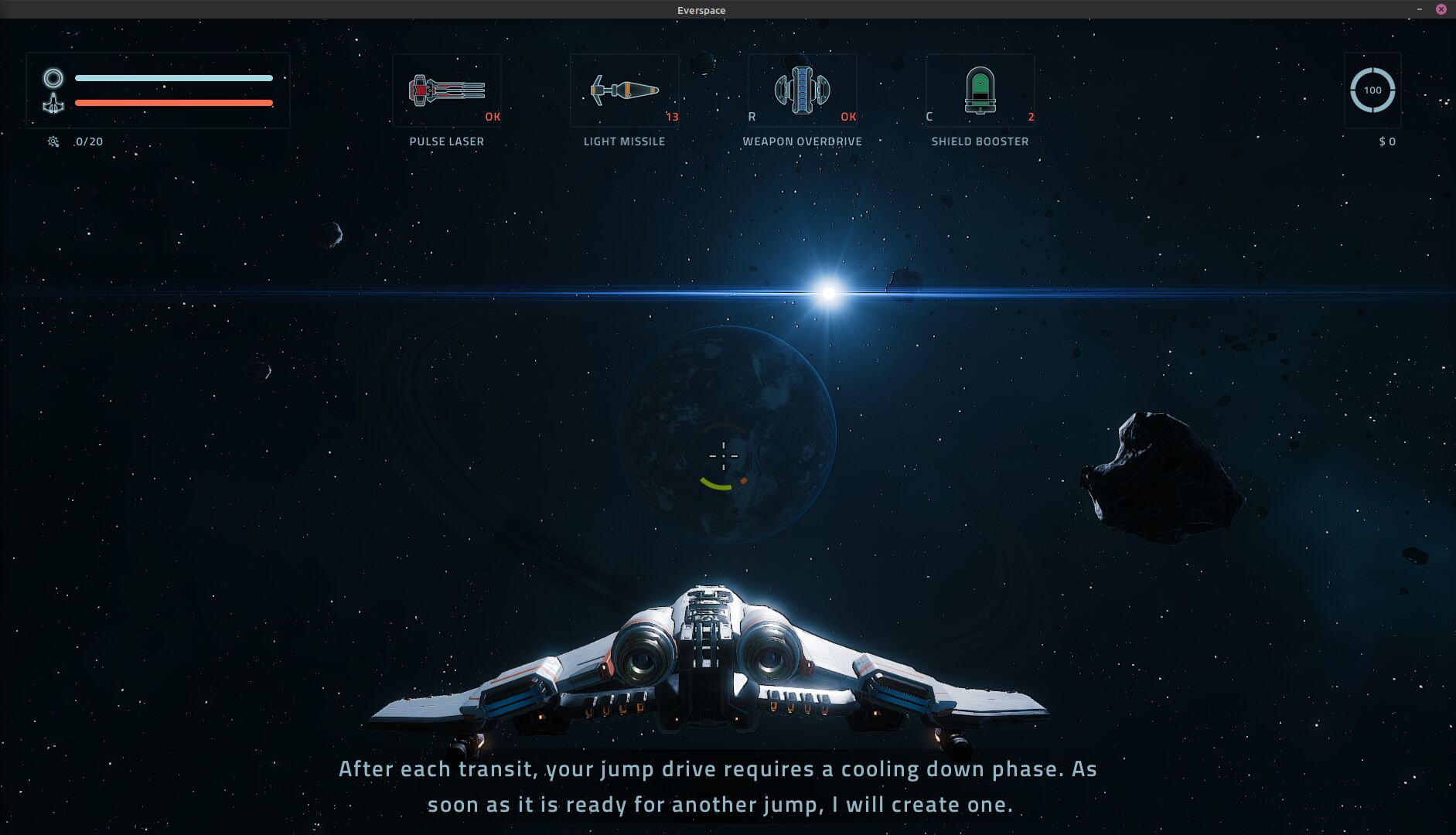

Then, the experience is about as easy as it normally is for Steam users. Now, regardless of whether a game uses Proton or not, sometimes you might need to deal with slightly technical choices in regards to getting the game to run properly, as I demonstrate here with Everspace:

Either way, things work surprisingly nicely for the most part, here's the game menu with no issues:

And jumping into the game also seems to work nicely, the game even respects my horizontal sidebar, which almost never happened on Windows:

Now, about Proton in particular, you can actually see what the state of game support is on the ProtonDB site, if you're interested in some specific titles, or other criteria:

It also lets you log in with your Steam account, share your Steam library and let you see how many of the games that you have can run and how well:

If you want to, you can even go through all of the titles and see whether they're supported or not:

You might think that it's a bit silly that I'm getting excited about something as mundane as games working and even being available on the platform, but honestly gaming is one of the only things that are really keeping me and many of the people out there sitting on Windows in the first place. Something like Proton is a godsend in that regard and it feels like things will only get better with time, so essentially this is what the gradual adoption of Linux for gaming looks like, especially with projects like Steam Deck on the horizon.

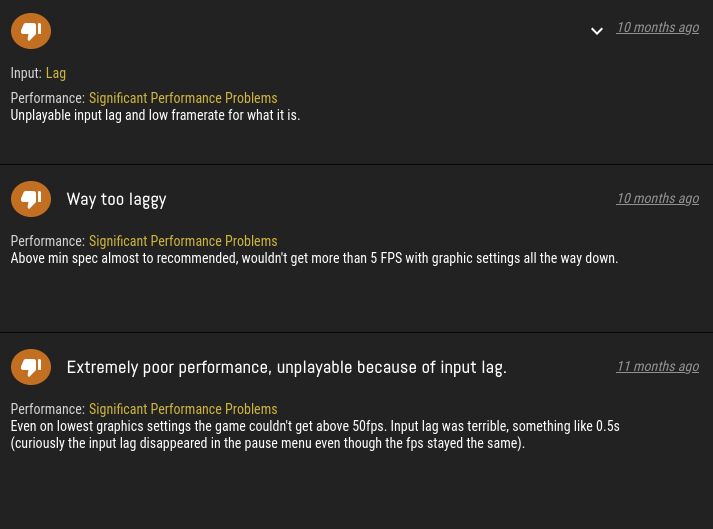

However, let's not delude ourselves. There are titles that still aren't playable and perhaps won't be in the near future. Something like Cooking Simulator, for example, will install, but running it will fail:

I quite literally ended up with a downloaded and installed game that wouldn't launch. Now, I'm not sure exactly how I'd get the console output or logs from the launch, or a crash dump, but since this is just a little experiment, I'll just let the title remain broken and uninstall it for now. Now, the good thing is that some people on the site share their own experiences with any given game, though it varies from the game apparently working for some, the frame rate being pretty bad for many and it outright refusing to launch for some others as well, implying that there's some sort of a hardware or configuration component to this as well:

Then, of course, there are some games that are outright unavailable, due to them not being on Steam in the first place. Do you like something like Genshin Impact? Well, good luck getting it running on Linux, because it's not even an officially supported platform in any capacity:

While some of the more technically inclined people could try to get it running on their own accord, doing so in a game with online accounts and unsupported configurations might risk getting your account suspended. I'm not saying that it's okay for that to be done, it's just the reality that we have to deal with.

Also, things are weird even for the same publisher sometimes. For example, War Thunder, a game by Gaijin is supported on Linux:

However, another game by them, one that feels like it shares the same engine, Enlisted, does not have support for Linux:

Surely there's no good technical reason for this choice, right? Well, I can't say for sure, maybe the game is just early in its development and they're focusing on getting the features in order, maybe they haven't finished porting or testing it on Linux, perhaps they have concerns about bots or anti-cheat solutions, or something else. But regardless of what I think about it, that's just the status quo and there's nothing that I personally can do about it.

Gaming on Linux exists, you're no longer stuck with just something like SuperTuxKart (albeit there are excellent open source games out there too, like 0 A.D. and OpenTTD), but if you want to game on Linux, you'll just need to accept the fact that a significant part of whatever collection you might have just won't work. Game engines are surely improving on that front as I showed above, but some of the older titles, like MechWarrior 4, will either have enough of a cult following to get them working, will sort of work, or will forever remain broken until someone comes along and fixes them.

Personally, I almost wish that I could boot Windows inside of Linux from the physical disk partition on which it resides (on the same disk as the Linux boot partition) and run it as a window or set of windows inside of Linux, with GPU passthrough. Something like QubesOS, but with a focus on gaming instead of just security. One can surely dream.

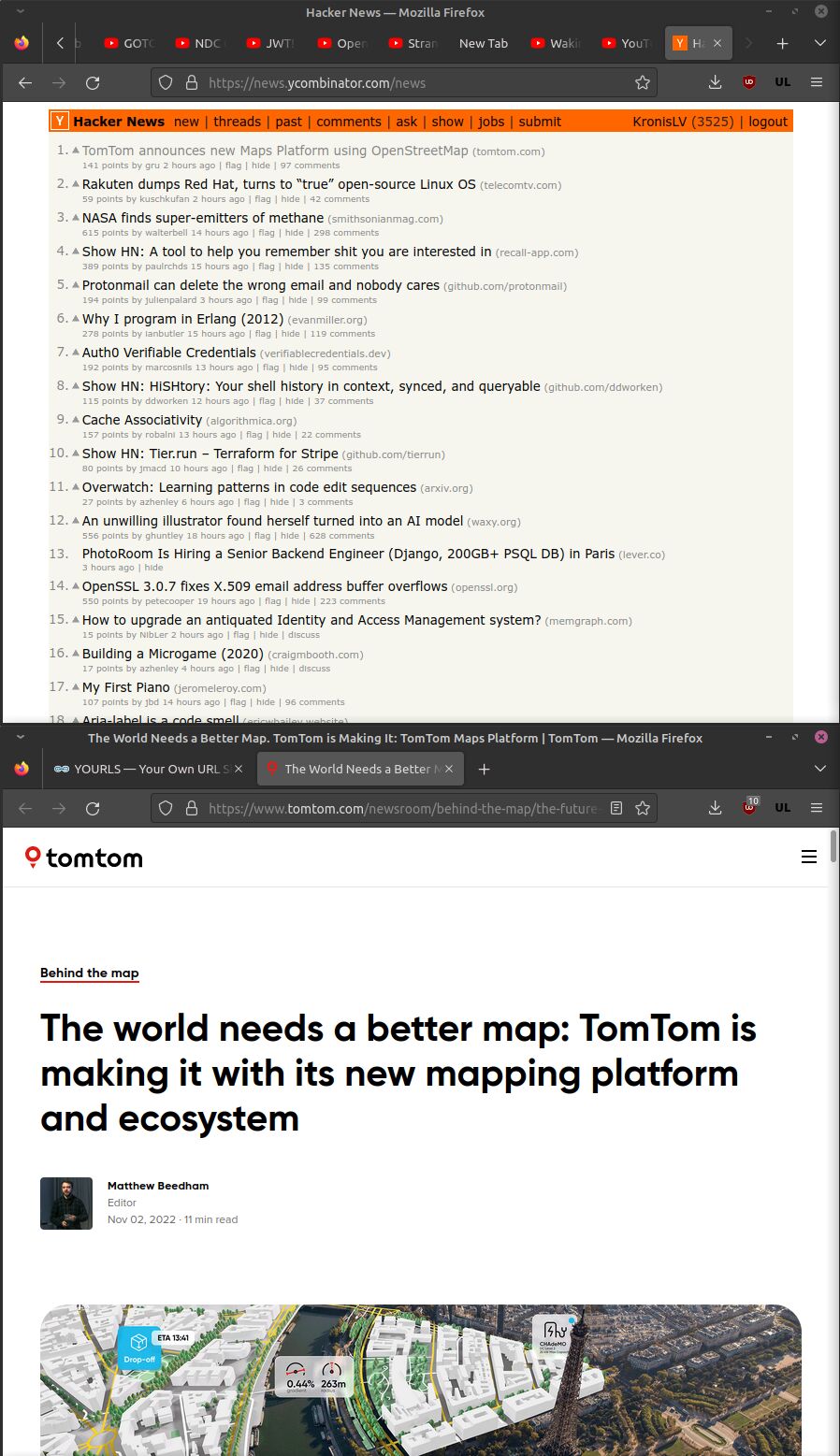

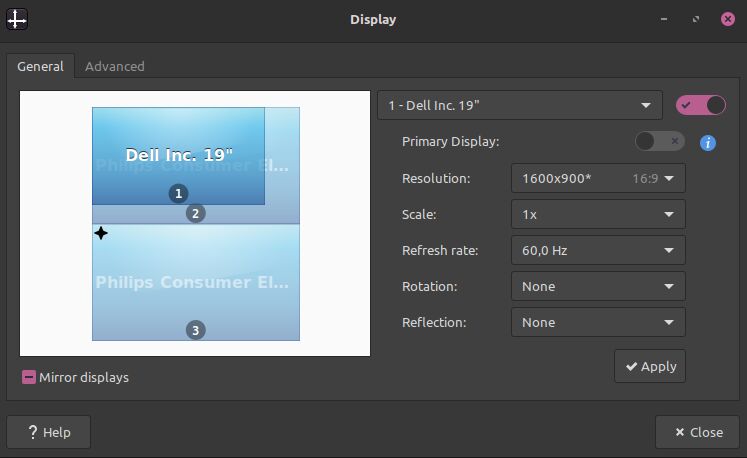

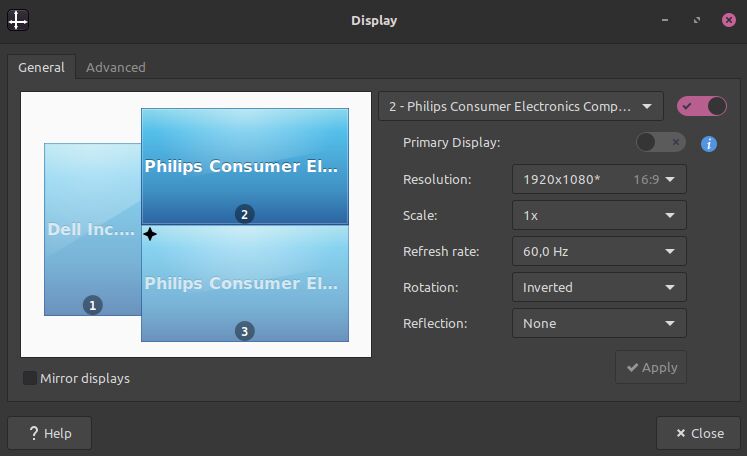

Broken display settings

One of the other things that are a bit annoying are various display options, which sometimes seem like they randomly reset. The Linux Mint distro seems generally passable in this regard and I haven't had to whip out arandr manually since using Xubuntu a while back, but it's still awkward sometimes.

Because of a non-typical desktop layout (one vertical monitor and the top monitor is flipped), my setup looks like this in the login screen, before my custom settings have been applied:

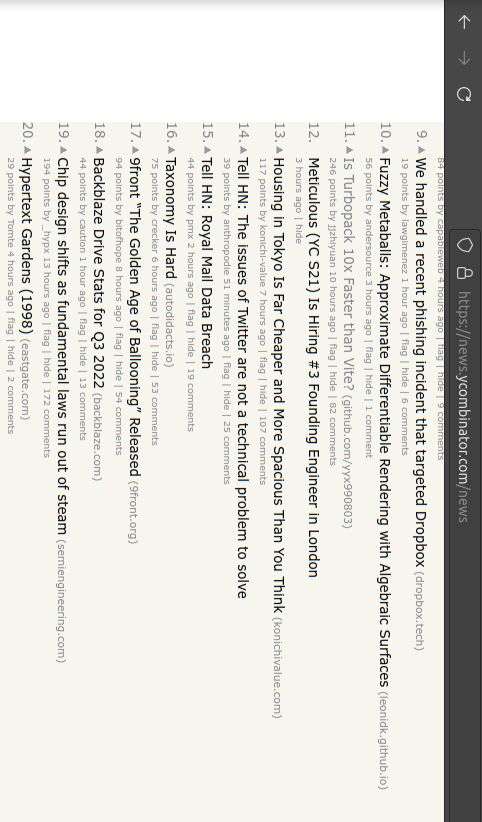

Astolfo is a cute character and all, but why make the boi rotate that much? Also, after putting the computer to sleep, I came back to the whole setup being a bit garbled:

Now, I'm not sure about you, but while I'm okay with news that makes me occasionally scratch my head, I'm a bit less enthusiastic about having to turn my head at a 90 degree angle to read Hacker News posts:

It's not the end of the world either, it's just a bit annoying that I had to reset everything manually to workable values:

I guess I'm just a bit lucky here, in that my laptop that is running Linux is generally stable and hasn't had issues like this, or issues with fans (it's an underpowered netbook for the most part), though I've heard of some folks with laptops having issues with Linux, sleep and hibernate functionality, fan control and so on. And then there are problems like this on the desktop, which may or may not be caused by the distro. Oh well.
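The manual reset described above could probably be scripted, since tools like arandr essentially generate an `xrandr` command for you. Here is a minimal Python sketch that builds such a command for a layout similar to mine. The output names, resolutions and positions are hypothetical placeholders (the real names come from `xrandr --query`), while `--output`, `--mode`, `--pos` and `--rotate` are regular xrandr options:

```python
import shlex
from dataclasses import dataclass

VALID_ROTATIONS = {"normal", "left", "right", "inverted"}


@dataclass
class Monitor:
    output: str                # connector name, as listed by `xrandr --query`
    mode: str                  # resolution, e.g. "1920x1080"
    position: tuple[int, int]  # top-left corner within the virtual screen
    rotation: str = "normal"   # normal, left, right or inverted


def build_xrandr_command(monitors: list[Monitor]) -> list[str]:
    """Build (but don't run) an xrandr invocation for the given layout."""
    command = ["xrandr"]
    for monitor in monitors:
        if monitor.rotation not in VALID_ROTATIONS:
            raise ValueError(f"unsupported rotation: {monitor.rotation}")
        x, y = monitor.position
        command += [
            "--output", monitor.output,
            "--mode", monitor.mode,
            "--pos", f"{x}x{y}",
            "--rotate", monitor.rotation,
        ]
    return command


# Hypothetical layout: a flipped top monitor, a regular one below it
# and a vertical one to the side.
layout = [
    Monitor("HDMI-A-0", "1920x1080", (0, 0), "inverted"),
    Monitor("DisplayPort-0", "1920x1080", (0, 1080)),
    Monitor("DisplayPort-1", "1920x1080", (1920, 0), "left"),
]
print(shlex.join(build_xrandr_command(layout)))
```

The printed command can be pasted into a terminal or saved as a script to run after waking from sleep. Actually executing it from Python would just be a matter of passing the list to `subprocess.run`.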

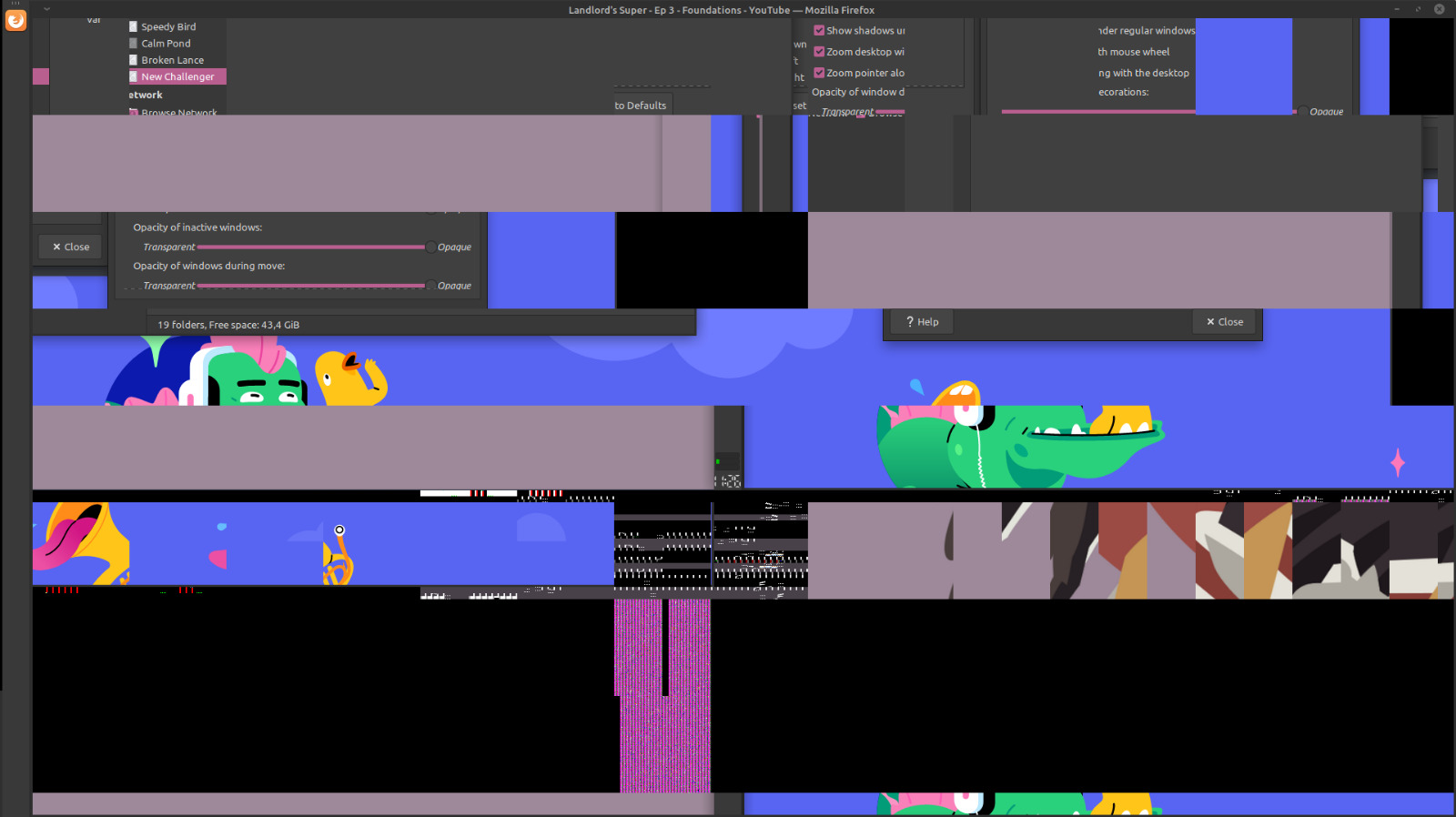

Cthulhu on your system

But what about when you want to summon Cthulhu to make your day a little bit more interesting?

Like so:

Ph'nglui mglw'nafh Cthulhu R'lyeh wgah'nagl fhtagn

Behold, the results:

Or rather, something peculiar happening to just the taskbar panel:

It feels like a throwback to the days of similar glitches when dragging around windows in the older versions of Windows:

What actually caused that issue? It's hard to say, in one case I ran a graphical program that needed OpenGL, but in the other I've no idea, was just looking through the appearance settings in XFCE and tried signing into Discord. It's unfortunate that you need to get used to a bit of instability in regards to the graphics drivers (this was before I tried installing AMD Radeon software, not sure if it's worse or better now), though thankfully at least it only happens occasionally, like it also does in Windows.

The exception, however, is the fact that in Windows the screen will flash a few times and the driver will essentially restart, whereas in Linux I needed a system restart. One can probably claim that the driver support and such will get better in time, or that this is a fault of the distro or some of the software that I'm using, of course, not that it helps me fix the issue.

Some thoughts about major releases, security and learning curves

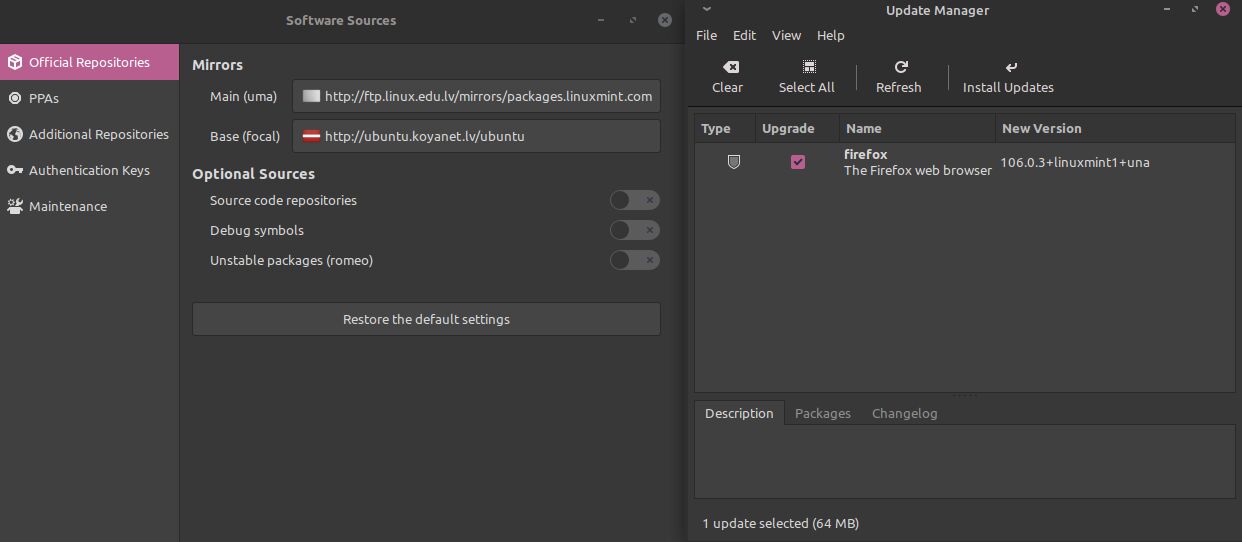

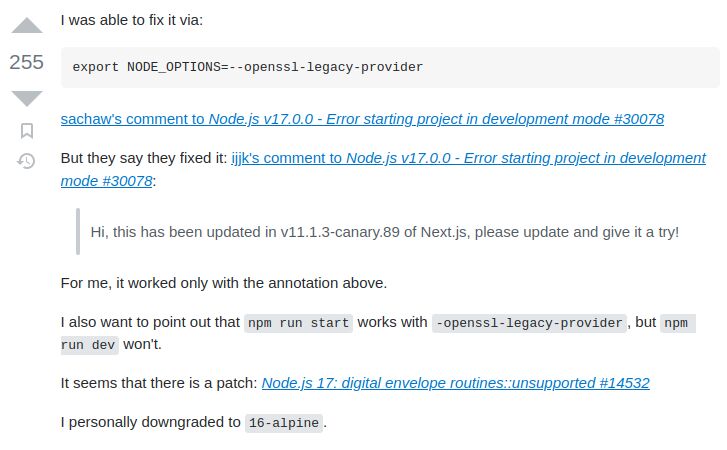

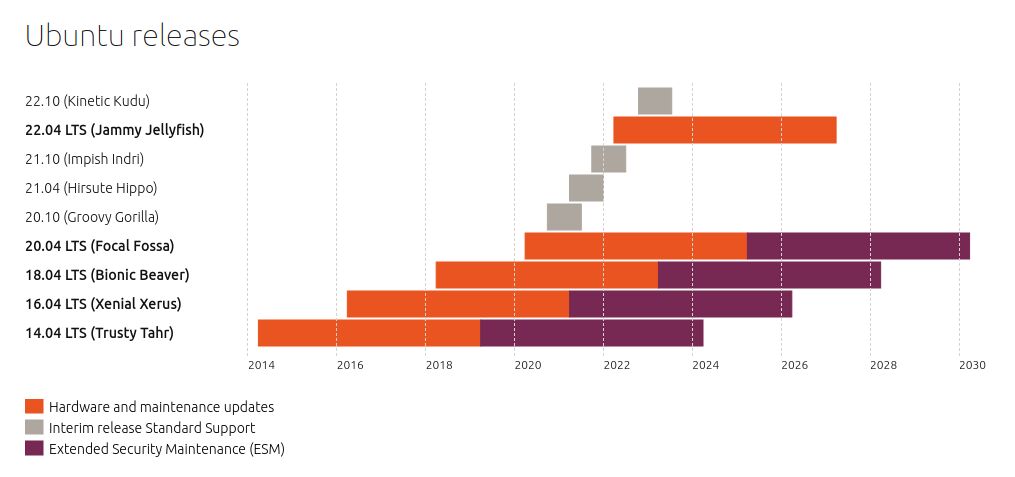

Now, the current OS version that I'm running, corresponds to Ubuntu 20.04 LTS, which has an EOL date of 2025, much like Windows 10 does:

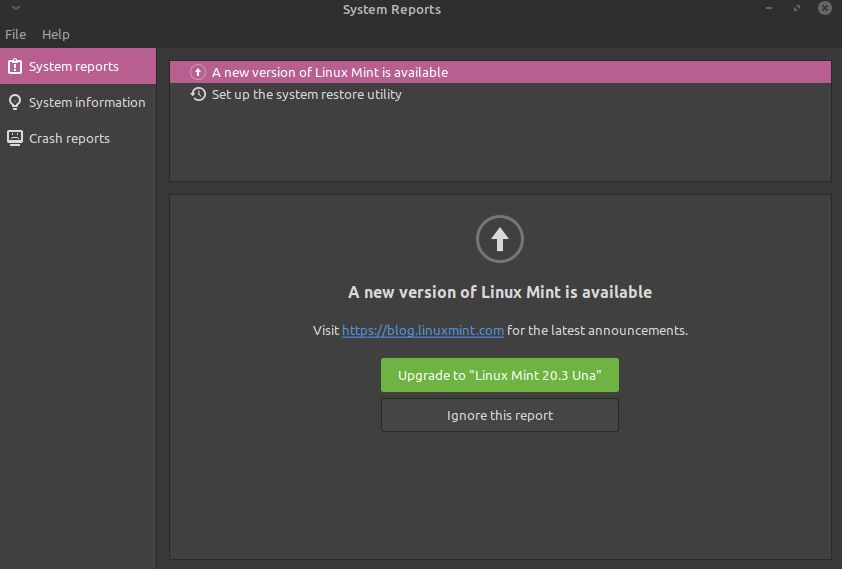

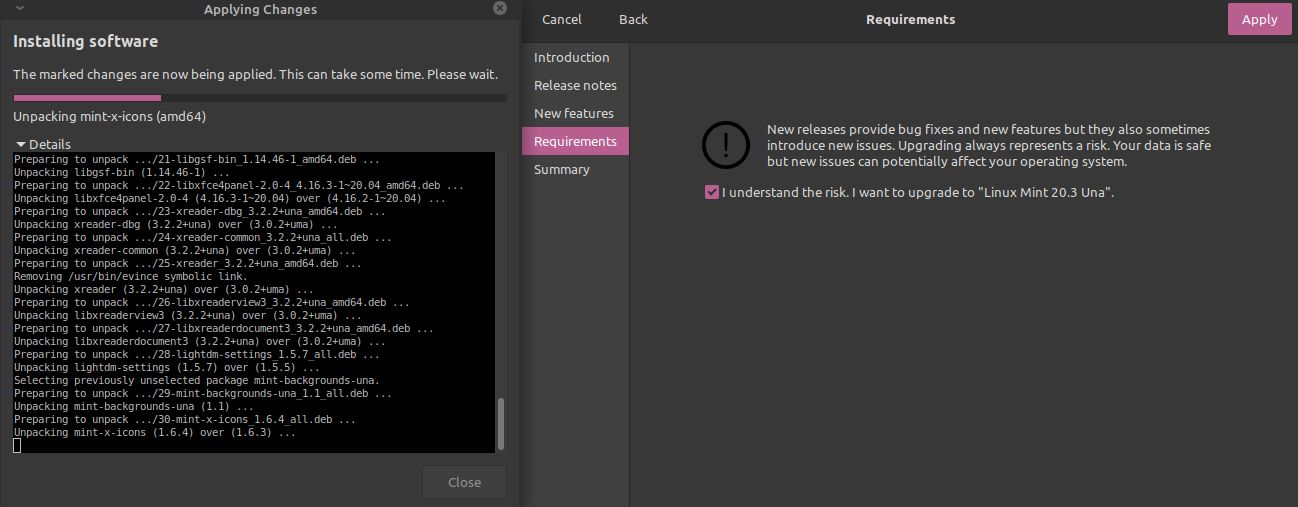

This also means that my distro will need upgrading once that time comes, or shortly after. Actually, the next version has already been released and is available, which I can install on top of my current one:

This is about as good as upgrades between versions of Windows being offered go, except that there really aren't financial incentives here, so the upgrade process is pretty smooth and simple. Now, there have been distros out there that do something even more crazy, like converting CentOS into Oracle Linux with a script, but generally this ability to do upgrades across releases is really nice, which just makes me sad when some distros don't offer that ability.

Then again, this might sometimes lead to hard to reproduce bugs and nobody wants the instructions in regards to having a certain package running or an environment being prepared to have: "Okay, start with Ubuntu 16.04, then upgrade to Ubuntu 18.04 and finally go to Ubuntu 20.04". Now, I'm not saying that you'll even have problems that often if you try to keep an install alive across multiple versions, but in my experience it's just a good idea to have plentiful backups and occasionally start over fresh. I might just be able to say that, because I don't have years of dotfiles to look after, and mostly just run software in its stock configuration (maybe messing around with fonts in IDEs and such), whatever that may be, with any customizations being superficial and non-essential for things to work.

On an unrelated note, folks sometimes say that you don't really need to worry about viruses on Linux distros as much, which I'd argue is mostly just due to Linux on the desktop being a smaller target. There can absolutely be shady and downright malicious scripts out there. While some people will suggest that you should never pipe curl output to Bash, the fact of the matter is that running random .deb packages that you got online isn't much better, so you're still putting yourself at risk whenever you venture outside of the relatively safe space that is the official package repository. What's worse is that the prevailing opinion seems to be that virus scanners in general are unnecessary, even in places like HN, while I've personally had my behind saved by them more than once (and not just false positives, mind you). Even Windows Defender is better than nothing, but Linux coming with nothing by default irks me somewhat. It feels careless.
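One small habit that helps a little with downloaded packages and scripts is comparing the file against the checksum that the publisher lists, before installing or running it. A minimal Python sketch of that follows, with the function names being made up for this example. Note that a matching checksum only tells you that the file is the one the publisher intended to ship, not that it's trustworthy:

```python
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks, so that large downloads don't need to fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path: str, expected_hex: str) -> bool:
    """Compare the file's SHA-256 with the hex digest listed by the publisher."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

Usage would be along the lines of `matches_published_checksum("some-package.deb", "<digest from the download page>")`, only proceeding with the install if it returns `True`.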

Also, there absolutely is a learning curve to be taken into account when picking an OS and I'd suggest that in general Linux will be a bit harder to get accustomed to than the more GUI centric approach of Windows. Compare how you'd use something like Debian or Ubuntu through SSH with how Windows Server works with its RDP-centric approach of clicking around a GUI. It's not entirely dissimilar to how the scene looks on the desktop: when things break on Linux, you'll need to drop down to the terminal. And for many folks, it's going to be hard to run the suggested CLI commands that they found online, when they have no idea what a terminal is or how to open it.

Summary

At the end of all this, I don't think I actually have any strong feelings about GNU/Linux on the desktop in the current year. It's a bit better than I expected, but a bit worse than I hoped for.

If I had to sum things up in a few short points, in comparison to Windows:

- Linux is definitely usable on the desktop, probably to the point where it can easily be your daily driver

- there are things that are better than in Windows or other OSes, like package management and the UI consistency

- it also feels better for development than Windows does, especially when you want to use containers

- some things are about as broken as in Windows by default (e.g. window snapping, display scaling)

- one of the things that are better about Windows is all of the good software that's available for it, not all of which is cross platform

- neither OS will necessarily save you from needing to deal with updates, breaking changes or other issues

- gaming and things like GPU power management still aren't where I'd like them to be on Linux, though they are gradually improving

In summary, Linux can be frustrating at times, but I find that it inspires joy and a sense of comfort, when things do work. How much of either of those emotions you'll need to deal with probably depends on what you're trying to do. It's actually nice to see how long of a way it's come and how people still put in the effort to make it better for everyone, oftentimes for free altogether! Now, there is a darker side to open source, something that regards financial troubles and the sustainability of it, but perhaps that's a story for another day.

Personally, I'd also like to experiment with Mac, but sadly I consider myself too poor to splurge on such a device. My savings are currently less than 40'000 Euros (I invested a portion in bank managed funds, but the economic situation and geopolitical events made most of that value plummet and the inflation isn't helping either) and spending a significant amount of money on a device is hard to justify, especially when my current setup is pieced together from used parts on AliExpress. If anything, that does speak positively of the Linux driver support situation, that these things even work (though on my netbook that also runs Linux, I had to compile custom Wi-Fi drivers; but on the bright side it went from an unusable 200 Euro Windows laptop, to a usable Linux one).

Also, a while back there was this song on the YouTube channel "Epic Rap Battles of History", where a portrayal of Bill Gates had a rap battle with Steve Jobs. A lyric from the Steve Jobs character in that video always stuck with me:

A man uses the machines that you build to sit down and pay his taxes.

A man uses the machines I build to listen to The Beatles while he relaxes.

And that's actually pretty close to what I want: a machine that will let me pay my taxes, let my Java CRUD apps compile without headaches and maybe, just maybe, enjoy a video game or listen to some song in the background... if PulseAudio or whatever I'm stuck using will be in a good mood that day.

Update

By the way, I updated from the 20.2 release that I had running to 20.3 shortly after finishing up this post:

It was a surprisingly simple thing to do and it's nice that there is a UI option for triggering this process, instead of manually messing around with package repositories and other such things. I don't doubt that upgrading across major releases could also turn out to be a similarly doable process, as long as the system configuration isn't too niche. But I guess I'm sticking with the equivalent of Ubuntu 20.04 LTS for a year or so longer, so that most of the issues with the new release will already be either solved or will have workarounds by the time I'll upgrade.
