How i migrate apps between servers in 2021


There are plenty of reasons why you might want to migrate an app to another server. For example, the hardware on another node may be better suited to hosting it (an SSD instead of an HDD, a better CPU), you may want to isolate it from the other apps and software running on the node, there may be availability issues or simply a difference in uptime between nodes (for example, a data centre vs a homelab), bandwidth limitations and so on.

I recently ran into exactly that situation: i figured that running OpenProject, a task management app, on my homelab servers would be more reasonable than continuing to host it on my VPSes, since those are more expensive and i don't really need the higher availability they provide. Essentially, it's a self-hosted alternative to Jira that i use for organizing the projects i'm working on:

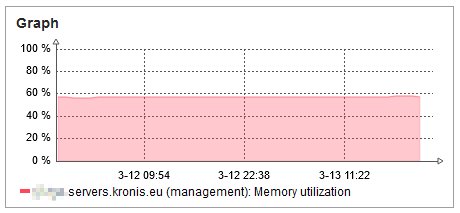

Since OpenProject is a bit memory hungry, moving it off of the VPS would free up some memory that i could use for running other apps.

And yet, despite decades of advances in computing, this process can be complicated and troublesome more often than not!

For example, if you're running your software on your own servers, you might move the actual HDDs elsewhere or clone their contents. Of course, this only works if the entire server is dedicated to the application, which i don't believe should really be the case, especially if you want as high a density as possible. Another option would be running apps in separate VMs, but many cloud platforms don't support running arbitrary virtual machine images or mounting virtual machine disk files, so that's a no-go for hybrid configurations. Also, running too many VMs can put a pretty big strain on the underlying hardware: in my case it wasn't even an issue of CPU utilization or memory usage, but the single HDD that i was running the VMs off of had terrible IO latency, so much so that Zabbix kept complaining about it all the time. So what's left?

Another option would be to move just the application itself, without touching the OS. However, here we run into the issue that modern servers aren't all that well suited for this either: it's not always easy to figure out where the persistent data lives, as opposed to the files that are merely needed to run the app, which we wouldn't necessarily care about. Even if all of the data was under "/data" on the server, you might still end up with a different version of the software installed on the new server, one that's incompatible with the data you've carried over. And even if you could get the exact same version, the process of discovering what to carry over and how would differ for almost every app, depending on its storage mechanism: plain files in the file system, an SQLite database file, MySQL, PostgreSQL, MongoDB or even more niche technologies, alongside configuration files that may be strewn all across the file system.

So what can be done about it? In my case, it's actually pretty easy.

Run everything in containers

My solution is to run almost all of the software on my servers inside containers. Thanks to this, not only can i easily schedule and launch apps, but i also get a clear overview of their versions, their configuration parameters and where their persistent data is stored. For example, in the case of OpenProject, the Docker Swarm stack definition looks a bit like the following:

version: '3.4'

services:

openproject:

image: openproject/community:VERSION_TAG_HERE

environment:

- SECRET_KEY_BASE=SECRET_KEY_VALUE_HERE

- EMAIL_DELIVERY_METHOD=smtp

# ... SMTP parameters go here

volumes:

- /home/USER/docker/openproject/data/openproject/var/openproject/assets:/var/openproject/assets

- /home/USER/docker/openproject/data/openproject/var/openproject/pgdata:/var/openproject/pgdata

networks:

- caddy_prod_prod_network

deploy:

placement:

constraints:

- node.hostname == OLD.servers.kronis.eu

resources:

limits:

cpus: '0.75'

memory: 1536M

networks:

caddy_prod_prod_network:

driver: overlay

attachable: true

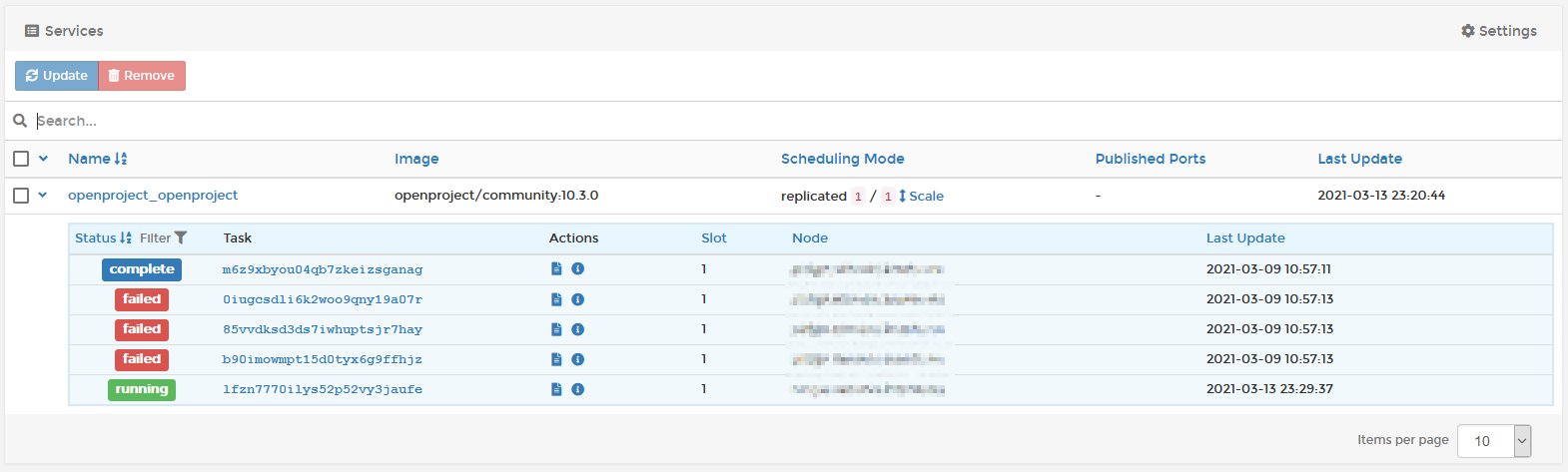

external: trueEssentially, all i'd need to change here to have that exact same version of the software running on another server, would be to change OLD.servers.kronis.eu to NEW.servers.kronis.eu. But admittedly, that's not all of the story! I'd still need to handle moving the data over, as well as updating the domain. As it turns out, handling the data isn't hard in my case either! First, i just stop the instance of the app i want to carry over, though, to avoid any chance of corrupted data copies:
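Since the move really comes down to that one placement constraint, the edit is easy to script. Below is a minimal Python sketch, assuming the stack file is edited as plain text and pins the service with a `node.hostname == ...` constraint; the function name `retarget_stack` is hypothetical and not part of my actual setup.

```python
import re

# Matches a Swarm placement constraint like "node.hostname == OLD.servers.kronis.eu";
# group 1 keeps the "node.hostname == " prefix, group 2 is the host name
# (quotes are excluded so quoted constraints keep their closing quote).
CONSTRAINT = re.compile(r"""(node\.hostname\s*==\s*)([^\s"']+)""")


def retarget_stack(text: str, old_host: str, new_host: str) -> str:
    """Return the stack definition with constraints on old_host moved to new_host.

    Raises ValueError if nothing matched, so a typo in the host name doesn't
    silently leave the app pinned to the old server.
    """
    replaced = 0

    def swap(match: "re.Match[str]") -> str:
        nonlocal replaced
        if match.group(2) != old_host:
            return match.group(0)  # constraints for other hosts stay as they are
        replaced += 1
        return match.group(1) + new_host

    result = CONSTRAINT.sub(swap, text)
    if replaced == 0:
        raise ValueError(f"no placement constraint for {old_host!r} found")
    return result


example = "- node.hostname == OLD.servers.kronis.eu"
print(retarget_stack(example, "OLD.servers.kronis.eu", "NEW.servers.kronis.eu"))
# prints "- node.hostname == NEW.servers.kronis.eu"
```

After writing the result back to the stack file, the stack would be redeployed as usual, for example with `docker stack deploy -c openproject.yml openproject` (the file and stack names here are assumptions).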

Then, i can proceed with copying over the data to the new server.

Always have a separate directory for the data

Ideally, you shouldn't have to care whether your app is using MySQL, PostgreSQL, MongoDB or any other technology that may or may not be complicated to migrate. Since all of those ultimately store their data in directories in the file system, Docker's bind mounts let us map directories inside the container to directories on the host. Thus, i can have a separate directory for each Docker Swarm stack and, within it, for each container. For example, here's one of the bind mounts, followed by the naming convention it follows:

```
/home/USER/docker/openproject/data/openproject/var/openproject/assets:/var/openproject/assets
/BIND_MOUNT_DIRECTORY/STACK_NAME/data/CONTAINER_NAME/DIRECTORY:/DIRECTORY
```

Because of this, it becomes extremely easy for me to carry over data to another server. First, i just archive it:

```shell
cd ~/docker
sudo tar -czvf openproject.tar.gz ./openproject
```

Then, i can use almost any method of copying files to the new server; in this case i opted for a simple SCP transfer:

```shell
scp openproject.tar.gz USER@NEW.servers.kronis.eu:/home/USER/docker/openproject.tar.gz
```

Once i have the file on the new server, i can extract its contents to the same directory and get rid of the archive:

```shell
cd ~/docker
sudo tar -xvf openproject.tar.gz
rm openproject.tar.gz
```

Then it becomes possible to update the deployment description for Docker Swarm and launch the container on the new server, to make sure that the data was carried over successfully.
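One thing the steps above don't do is check that the archive survived the transfer before it gets extracted and deleted. A minimal Python sketch of that idea, assuming Python is available on both servers: it builds the same kind of gzipped tarball as the `tar -czvf` command and computes a SHA-256 digest to compare on both ends. The helper names are hypothetical; running `sha256sum openproject.tar.gz` on both servers would achieve the same thing.

```python
import hashlib
import tarfile
from pathlib import Path


def archive_stack(stack_dir: Path, archive: Path) -> None:
    """Pack stack_dir into a gzipped tarball, like `tar -czvf ARCHIVE ./STACK_NAME`."""
    with tarfile.open(archive, "w:gz") as tar:
        # Store paths as "./STACK_NAME/...", matching the archive made by the tar command.
        tar.add(stack_dir, arcname="./" + stack_dir.name)


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Hash the file in chunks so large archives don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copy(original_digest: str, received: Path) -> bool:
    """True if the received file matches the digest computed on the old server."""
    return sha256_of(received) == original_digest
```

The digest printed on the old server (`sha256_of(Path("openproject.tar.gz"))`) would be passed to `verify_copy` on the new server, and only a `True` result would justify extracting the archive and deleting it.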

After that, all that's left is to carry over the DNS records and reconfigure the ingress!

Have a centralized way to manage ingress

Finally, we need to make web requests actually reach our app. The first thing to do is update the DNS records, which is easy enough. In this case, rather than an A record, i have a CNAME record that points to the A record of the server the app will be running on. This eliminates the need to remember IP addresses or to wonder about what is running where.
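Because the app's record is a CNAME pointing at the server's A record, one quick way to check that the change has taken effect (at least from the point of view of the machine running the check, since resolvers cache records) is to compare what both names resolve to. A minimal sketch using Python's standard `socket.getaddrinfo`; the function names are hypothetical.

```python
import socket
from typing import Set


def resolve_addresses(host: str) -> Set[str]:
    """Return every IP address the local resolver currently gives for host."""
    infos = socket.getaddrinfo(host, None)
    # Each entry is (family, type, proto, canonname, sockaddr); the IP is sockaddr[0].
    return {info[4][0] for info in infos}


def points_to_same_server(app_domain: str, server_domain: str) -> bool:
    """True if the app's domain resolves to exactly the server's addresses."""
    return resolve_addresses(app_domain) == resolve_addresses(server_domain)
```

For this migration, the check would be `points_to_same_server("MY_APP.kronis.dev", "NEW.servers.kronis.eu")`, which should only return `True` once the CNAME has been updated and any cached record has expired.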

Another revelation that came to me was that maintaining separate instances of Apache/httpd, Nginx, Tomcat or any other web/application server just doesn't scale all that well! Especially if you need HTTPS certificates: even configuring Certbot can be time consuming if you need to do it for 20 different apps, not to mention managing certificates manually!

So, my approach was to use Caddy, a free web server with automatic HTTPS, for managing the ingress points across all of my servers. Since i want to minimize the impact of outages or misconfiguration, each server runs its own Caddy instance, with the configuration for the apps running on it stored in a Caddyfile. Thus, all i need to have requests correctly proxied is the following configuration:

```
https://MY_APP.kronis.dev {
    gzip
    proxy / openproject:80 {
        transparent
    }
    tls MY_EMAIL_ADDRESS {
        # ca https://acme-staging-v02.api.letsencrypt.org/directory
    }
}
```

In the example above, i sometimes uncomment the `ca` line to initially use Let's Encrypt's staging environment, so that bad configurations don't make my servers hit rate limits. Compared with Apache/httpd and even Nginx configs, this is way shorter and more readable. This is Caddy v1 syntax; Caddy 2 uses `encode gzip` and `reverse_proxy` instead of `gzip` and `proxy`.

Once i restart the Caddy container, it picks up the changes and requests the certificate, and i can use the application without any hassle! That's all i needed to do, apart from cleaning up the old data, which i can do safely because everything is backed up periodically, so even deleting too much by accident could be reverted fairly easily.
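With one Caddyfile per server, moving an app mostly means moving its site block from one server's Caddyfile to another's. If you'd rather keep a list of apps per server and generate the file from it, a sketch could look like the following; the `APPS` mapping and the function name are hypothetical, and the template simply reproduces the Caddy v1 block shown above.

```python
from typing import Dict

# Hypothetical per-server inventory: public domain -> upstream "service:port".
APPS: Dict[str, str] = {
    "MY_APP.kronis.dev": "openproject:80",
}

# Caddy v1 site block; doubled braces are literal braces for str.format.
SITE_TEMPLATE = """https://{domain} {{
    gzip
    proxy / {upstream} {{
        transparent
    }}
    tls {email} {{
        # ca https://acme-staging-v02.api.letsencrypt.org/directory
    }}
}}
"""


def render_caddyfile(apps: Dict[str, str], email: str) -> str:
    """Render one site block per app, sorted by domain so diffs stay stable."""
    blocks = [
        SITE_TEMPLATE.format(domain=domain, upstream=upstream, email=email)
        for domain, upstream in sorted(apps.items())
    ]
    return "\n".join(blocks)


print(render_caddyfile(APPS, "MY_EMAIL_ADDRESS"))
```

Moving an app would then mean moving one entry from the old server's mapping to the new one's and regenerating both files, instead of cutting and pasting blocks by hand.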

Summary and pitfalls

My setup isn't perfect, not by a long shot. Ideally, all of this migrating would be done automatically, with the steps executed one by one and each one proceeding only once the previous one has succeeded. Additionally, Caddy is a bit finicky about failures: for some stupid reason it likes to bring down the entire container instead of just logging a warning like "3 out of 90 sites failed certificate provisioning, please check their configuration". What's worse, the developer seems to have a bit of an attitude regarding this matter, so most likely nothing will be done about it:

> A half-working zombie server is not a good alternative because you would not want your web server running only some of your config, for the same reason you would not want to execute a truncated shell script.

Not only is that a false equivalence, but that's also exactly what i want: if i'm serving 100 different applications, i don't want all of them to go down just because one of them failed certificate provisioning. Furthermore, Caddy's tendency to hit rate limits while checking for bad configuration is just stupid beyond belief. And putting something like:

```yaml
deploy:
  restart_policy:
    delay: 300s
```

is only a temporary workaround that's just not good enough.

And yet, despite Caddy's flaws and the amount of manual work involved, i still think this approach is entirely suitable for my needs. It doesn't rely on distributed file systems or complex clusters that would need lots of managing, and i don't want to use those either, because then i'd spend 90% of my time troubleshooting and maintaining infrastructure instead of actually getting things done! Using bind mounts for the data directories, simple archives and Docker Swarm is the easiest way i've found so far to address the issues mentioned above. What's more, it works in a hybrid cloud setting with no issues and has served me really well so far!
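As for automating the migration, running the steps one by one and only proceeding once the previous one has succeeded, a minimal Python sketch could look like this. It's hypothetical: the command list is built from the steps in this post plus an assumed `docker service scale` call for stopping the app (the service name `openproject_openproject` is a guess based on Swarm's STACK_SERVICE naming), the remote step assumes passwordless sudo on the new server, and nothing here handles rollbacks.

```python
import subprocess
import sys
from typing import List, Optional, Sequence


def run_steps(steps: Sequence[Sequence[str]], cwd: Optional[str] = None) -> List[str]:
    """Run each command in order and stop at the first one that fails.

    cwd plays the role of the `cd ~/docker` from the manual steps.
    Returns the commands (as strings) that completed successfully.
    """
    completed: List[str] = []
    for command in steps:
        printable = " ".join(command)
        print(f"==> {printable}", flush=True)
        result = subprocess.run(list(command), cwd=cwd)
        if result.returncode != 0:
            print(f"step failed with exit code {result.returncode}, not continuing",
                  file=sys.stderr)
            break
        completed.append(printable)
    return completed


# Hypothetical plan for the OpenProject move, using the placeholders from this post.
MIGRATION = [
    ["docker", "service", "scale", "openproject_openproject=0"],
    ["sudo", "tar", "-czvf", "openproject.tar.gz", "./openproject"],
    ["scp", "openproject.tar.gz",
     "USER@NEW.servers.kronis.eu:/home/USER/docker/openproject.tar.gz"],
    # ssh hands this string to the remote shell, so && chains the remote steps.
    ["ssh", "USER@NEW.servers.kronis.eu",
     "cd ~/docker && sudo tar -xvf openproject.tar.gz && rm openproject.tar.gz"],
]
```

Calling `run_steps(MIGRATION, cwd=os.path.expanduser("~/docker"))` on the old server would stop right after the first failing step, so, for example, a failed SCP transfer never leads to the remote extraction and cleanup.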
