How I organize my Docker deployments in 2021

A post over at /r/selfhosted on Reddit asked people to share how they organize their Docker containers. Most of the people there simply use Docker Compose or something similar, which is totally fine, yet my setup is a bit more interesting, so I'd like to briefly tell you all about it!

OS for the servers

Typically I use Debian or Ubuntu, though anything that supports Docker should suffice (although RPM-based distros had DNS problems with Docker 19.XX and older).

Sadly, long-term support distros like CentOS seem to be a lost cause nowadays: after CentOS was killed by Red Hat, only Oracle Linux remains, and there are quite a few risks with actually using it. Other projects, like Rocky Linux, might take a while to become popular enough to be offered by most VPS hosts out there, and I need that availability, since I run a hybrid setup.

For now, I host many of my VPSes on Time4VPS, which offers these OS images for setting up servers, a pretty typical selection of the bigger distros that are usually available.

Speaking of which, you should totally check them out: they're one of the more affordable VPS hosts that can actually be trusted, seeing as I've been using them successfully for years. And hey, if you like the article, the affiliate link could help me host this very blog!

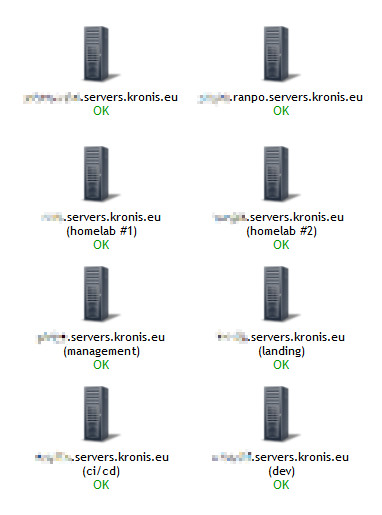

Setting up the servers

Since I won't always rely on cloud-init scripts, I need a way to connect to servers and set them up in an automated way that uses mostly standard technologies. In the case of GNU/Linux, that probably means SSH for connecting to the server, whatever shell is available (or maybe Python), and the GNU tools or the distro's package manager for getting things done.

A while ago, as part of my Master's degree, I created my own tool for this, Astolfo Cloud Servant, and compared its approach against the tools that are used in the industry.

It used SSH and Bash (though many of the scripts could also run under the plain Bourne shell) to execute its scripts, with the Paramiko library sending over simple commands and retrieving their output. This eliminated the need for something like Python on the remote system: it only had to be present in the local container that the tool ran in.
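As an illustration of that approach (not the tool's actual code), here's a minimal Python sketch using Paramiko's `SSHClient`: only the local machine needs Python and Paramiko, while the remote end just needs sshd and a shell. The function names are hypothetical, and the sketch assumes key-based authentication and that the host key is already in `known_hosts` (Paramiko rejects unknown hosts by default).

```python
import shlex


def build_remote_command(commands, shell="bash"):
    """Join commands into a single shell invocation that stops at the first failure."""
    script = " && ".join(commands)
    # Quote the whole script so the remote side passes it to `shell -c` intact.
    return f"{shell} -c {shlex.quote(script)}"


def run_remote(host, user, commands, key_filename=None):
    """Run commands over SSH and return (exit_code, stdout, stderr).

    Only the local side needs Python and Paramiko; the server just needs sshd and a shell.
    """
    import paramiko  # imported lazily so build_remote_command works without Paramiko

    client = paramiko.SSHClient()
    client.load_system_host_keys()  # trust only hosts already present in known_hosts
    client.connect(host, username=user, key_filename=key_filename)
    try:
        _stdin, stdout, stderr = client.exec_command(build_remote_command(commands))
        out = stdout.read().decode()  # read the output before waiting for the exit status
        err = stderr.read().decode()
        exit_code = stdout.channel.recv_exit_status()
        return exit_code, out, err
    finally:
        client.close()


if __name__ == "__main__":
    print(build_remote_command(["apt-get update", "apt-get install -y curl"]))
```

Chaining with `&&` means a failed package installation stops the rest of the script, and the exit code tells the caller whether the step succeeded.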

However, in most other circumstances I'd go with established tools, such as Ansible playbooks, which let you define the system packages and directory structure in YAML, all of which can be versioned and deployed with GitLab CI. This is just one of many examples of GitOps in practice. My tool could definitely work like that too (it can even be called as part of other Bash scripts), but personally I rather enjoy Ansible because of its community support.

Orchestrating container clusters

Many fail at introducing Docker and containers into their infrastructure because of a lack of knowledge and tools to support their goals.

Some just run regular Docker containers and find that the churn they have to deal with is much like that of manually launching services on their servers, as opposed to using something like systemd services. Others attempt to use Docker Compose and run into problems when their deployments need to span more than one server. Some people don't even know about overlay networks, which has led to some rather nasty problems.

What's worse, in the end many reach for tools like Kubernetes, which are utterly overkill for most deployments out there and only ensure that huge amounts of resources get wasted on dealing with their technical complexity. Admittedly, there are turnkey solutions that can be self-hosted, such as Rancher, or Kubernetes distributions like K3s, but I've seen people reach for shiny technologies too often without knowing their drawbacks. Their CVs may look nice afterwards, but this is akin to picking Apache Kafka just because you need a simple message bus and then needing a whole team to manage that one deployment, which is precisely why I decided to make a better choice.

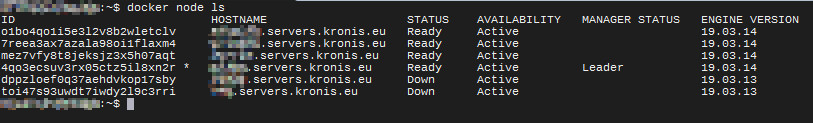

Therefore, I orchestrate my clusters with Docker Swarm, which takes Compose files with a few additional parameters and distributes the containers, and therefore the load, across many different servers.
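To show what those additional parameters look like, here's a small Python sketch that takes a Compose service definition (as it would look once parsed into a dict) and adds a Swarm `deploy` section to a copy of it. The `replicas`, `placement.constraints`, `restart_policy`, `update_config` and `resources.limits` keys are part of the Compose file format that `docker stack deploy` honors; the image, node label and values are hypothetical.

```python
import copy
import json


def with_swarm_deploy(service, replicas=2, constraints=(), memory_limit=None):
    """Return a copy of a Compose service definition with a Swarm `deploy` section added."""
    result = copy.deepcopy(service)  # leave the original (local dev) definition untouched
    deploy = {
        "replicas": replicas,
        "restart_policy": {"condition": "on-failure"},
        "update_config": {"parallelism": 1, "delay": "10s"},  # roll out one task at a time
    }
    if constraints:
        deploy["placement"] = {"constraints": list(constraints)}
    if memory_limit:
        deploy["resources"] = {"limits": {"memory": memory_limit}}
    result["deploy"] = deploy
    return result


# Hypothetical service, as it might appear in a local Compose file
web = {"image": "registry.example.com/my-app:1.2.3", "ports": ["8080:80"]}

stack = {
    "version": "3.8",
    "services": {
        "web": with_swarm_deploy(
            web,
            replicas=3,
            constraints=["node.labels.role == web"],  # only nodes labelled role=web
            memory_limit="512M",
        ),
    },
}

if __name__ == "__main__":
    print(json.dumps(stack, indent=2))
```

The design point is that the service definition itself stays the same as in local development; the Swarm-specific scheduling details live entirely under `deploy`.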

In the end, this approach is far easier than using Kubernetes, and Swarm is included with Docker out of the box. Furthermore, I've only had problems with these clusters a few times over many years of running them, and when something does go wrong, it's relatively easy to recreate them. The file format really is the same as that of Docker Compose, so interop with local development is trivial.

Managing container clusters

For managing Docker Swarm, I use Portainer. In my opinion, it's hands down the best option for managing a Swarm cluster (projects like Swarmpit are buggy, and the discoverability and UX of the CLI are insufficient). It supports everything from creating new deployments and altering existing ones to adding webhooks for easy CI redeploys, viewing logs and resource usage in near real time, filtering them, and so on:

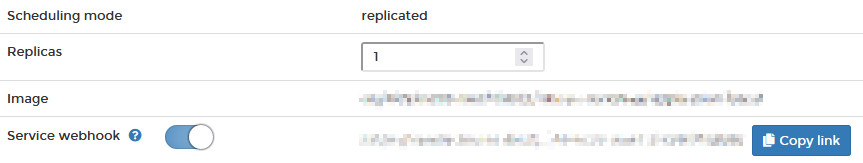

Some might disagree with me and claim that using the CLI is always better, because it lends itself better to automation. I won't contest that this is a major boon, but my counterquestion would be: how often do you actually create entirely new environments? Redeploying application versions? Sure, that should be a regular part of your CI/CD cycle, handled by something like GitLab CI, and Portainer incidentally also supports adding webhooks for exactly that.
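On the CI side, triggering a Portainer service webhook boils down to an HTTP POST to the webhook URL that Portainer shows once the webhook is enabled on a service (roughly what `curl -X POST <url>` would do in a CI job). Below is a hedged Python sketch using only the standard library; the `PORTAINER_WEBHOOK_URL` variable name is hypothetical, and the URL should be treated as a secret, since anyone holding it can trigger a redeploy.

```python
import os
import urllib.request


def build_redeploy_request(webhook_url):
    """Build the POST request that triggers a Portainer service webhook."""
    # An empty body is enough: the token in the URL identifies the service.
    return urllib.request.Request(webhook_url, data=b"", method="POST")


def trigger_redeploy(webhook_url, timeout=30):
    """Send the request and return the HTTP status code (urlopen raises on 4xx/5xx)."""
    with urllib.request.urlopen(build_redeploy_request(webhook_url), timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # In GitLab CI this would come from a masked CI/CD variable (hypothetical name).
    url = os.environ.get("PORTAINER_WEBHOOK_URL")
    if url:
        print(trigger_redeploy(url))
```

Running this as the last job of a pipeline, after the new image has been pushed, keeps redeploys automated while environment creation stays a deliberate, manual step.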

But creating new environments is something I think should actually be done manually, as long as "manually" only means a few validation steps and otherwise amounts to copying your already prepared YAML definition file into a new stack. That way, you minimize human error by keeping your infrastructure defined as code, but you're not risking overprovisioning environments with something like Terraform, and you can make sure that the computer is about to do what you actually want it to.

Lastly, Portainer also provides exceedingly good observability, giving you an immediate overview of what's going on.

In my opinion, this is the closest we'll get to easily managing the software on our servers in a reproducible and user-friendly way, much like the Cockpit Project attempts to do for server administration. Yet with containers you also get a simpler alternative to systemd, you don't have to trust individual packages as much, and you can run 20 different versions of PHP (or anything else) side by side, if you need to.

Ingress

Now, for self-hosting, the next concern is running some sort of web server or load balancer to handle incoming connections and SSL/TLS termination. Many cloud vendors offer this sort of functionality, but it can be pretty expensive and also locks you into a particular vendor, which doesn't suit my needs.

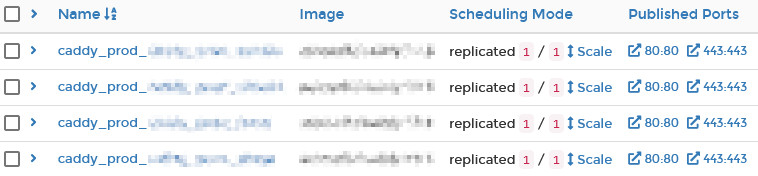

To that end, I use Caddy web server containers (one on each server) with Docker overlay networks, so that references can be made across stacks and reverse proxies can be set up by using container names for DNS queries. Furthermore, Caddy's simple syntax and its integration with Let's Encrypt make SSL/TLS certificate provisioning and automatic renewal easy.
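To make the DNS-based setup more concrete, here's a hedged Python sketch that renders a minimal Caddyfile from a mapping of domains to upstream services. The domains, service names, ports and e-mail address are hypothetical; with `docker stack deploy`, a service named `web` in a stack named `blog` is reachable as `blog_web`, which is the kind of name used as an upstream here. The `reverse_proxy` directive and the global `email` option are standard Caddy v2 Caddyfile syntax.

```python
def render_caddyfile(sites, email=None):
    """Render a minimal Caddyfile that reverse proxies each domain to an upstream.

    `sites` maps a public domain to "service:port". Docker's embedded DNS resolves
    the service name because Caddy and the upstream share an overlay network.
    """
    blocks = []
    if email:
        # The global options block must come first; `email` is used for the ACME account.
        blocks.append("{\n\temail " + email + "\n}")
    for domain, upstream in sorted(sites.items()):
        # Caddy obtains and renews a certificate for each public domain automatically.
        blocks.append(f"{domain} {{\n\treverse_proxy {upstream}\n}}")
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    print(render_caddyfile(
        {"blog.example.com": "blog_web:80", "wiki.example.com": "wiki_app:3000"},
        email="admin@example.com",
    ))
```

Because upstreams are referenced by name rather than by IP, a service can be rescheduled to another node without touching the proxy configuration.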

For now, I configure a separate service for each server, with two different deployments and separate overlay networks for the production and development servers, as you can see. This way, I can redeploy each one individually when it needs to pick up configuration changes. Also, if any of the servers were hit with a DDoS attack or ran into problems during a redeployment, only those particular servers would be affected, not the entire load balancer. Finally, any bandwidth charges and DNS records correspond to that one individual server. This sort of decentralization works wonderfully for me!
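One possible way to express "a separate Caddy service per server" in a Swarm stack is to pin each service to a node with a `node.hostname` placement constraint and attach it to a pre-created, attachable overlay network for that environment. The sketch below builds such a stack as a Python dict mirroring the stack file's YAML structure; the node names, network names and host paths are hypothetical. Publishing ports in host mode is my assumption about how to make this work: with the default ingress routing mesh, a published port is reserved cluster-wide, so two services couldn't both publish 80 and 443.

```python
import json


def caddy_stack(hostnames, environment, image="caddy:2"):
    """Build a Swarm stack with one Caddy service pinned to each given node."""
    network = f"caddy_{environment}"  # e.g. caddy_prod or caddy_dev (hypothetical names)
    services = {}
    for hostname in hostnames:
        services[f"caddy_{hostname}"] = {
            "image": image,
            # Host-mode ports bind directly on the node running the task, bypassing
            # the routing mesh, so each server only answers for its own traffic.
            "ports": [
                {"target": 80, "published": 80, "protocol": "tcp", "mode": "host"},
                {"target": 443, "published": 443, "protocol": "tcp", "mode": "host"},
            ],
            # Per-node bind mounts for the Caddyfile and certificate data (hypothetical paths).
            "volumes": [
                f"/srv/caddy/{hostname}/Caddyfile:/etc/caddy/Caddyfile:ro",
                f"/srv/caddy/{hostname}/data:/data",
            ],
            "networks": [network],
            "deploy": {
                "replicas": 1,
                "placement": {"constraints": [f"node.hostname == {hostname}"]},
            },
        }
    return {
        "version": "3.8",
        "services": services,
        # External network created once beforehand, for example with:
        #   docker network create --driver overlay --attachable caddy_prod
        # so that application stacks can join it and be proxied by name.
        "networks": {network: {"external": True}},
    }


if __name__ == "__main__":
    print(json.dumps(caddy_stack(["prod-1", "prod-2"], "prod"), indent=2))
```

Calling it once with the production hostnames and once with the development ones gives two independent stacks, each of which can be redeployed on its own.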

Storage

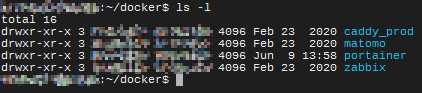

For persistent storage: so far I'm using bind mounts. Docker volumes have horrible observability, so being able to simply open directories on my server is way easier, both when I need to look at the data and for backups. The pitfall here is that I need to create these folders manually and set up the proper access permissions for the containers.
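That manual step can at least be scripted. Here's a minimal Python sketch, assuming you know the numeric UID/GID that each container image runs as; the directory names and mode values are hypothetical, and changing ownership to another user requires running it as root.

```python
import os
import tempfile
from pathlib import Path


def prepare_bind_mounts(base_dir, mounts):
    """Create host directories for bind mounts with the ownership containers expect.

    `mounts` maps a directory (relative to base_dir) to a (uid, gid, mode) tuple.
    Containers only see numeric IDs, so uid/gid must match the user the image runs as.
    """
    prepared = []
    for relative, (uid, gid, mode) in mounts.items():
        path = Path(base_dir) / relative
        path.mkdir(parents=True, exist_ok=True)
        os.chown(path, uid, gid)
        os.chmod(path, mode)  # set explicitly, because mkdir's mode is filtered by the umask
        prepared.append(path)
    return prepared


if __name__ == "__main__":
    # On a real server the base might be something like /srv/docker with the container's
    # UID/GID; here a temporary directory and the current user are used instead.
    base = tempfile.mkdtemp()
    for path in prepare_bind_mounts(base, {"my-app/uploads": (os.getuid(), os.getgid(), 0o750)}):
        print(path, oct(path.stat().st_mode & 0o777))
```

Only the leaf directories get the explicit owner and mode; intermediate directories are created with the defaults of the user running the script.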

For shared storage: sadly, Docker Swarm lacks an equivalent of Kubernetes' PersistentVolumeClaims (PVCs), so I'm simply using NFS to mount a directory from a remote server locally. One example is the directory where Caddy stores its certificates, so that load can be balanced across multiple servers with the same certificate when they share a domain. There are distributed alternatives like GlusterFS or Ceph, but for my needs they would basically be overengineering.

Backups

For my backups, I use BackupPC, which relies on rsync and SSH to connect to all of my cloud servers from my homelab server and pull all of the data I care about onto the multi-terabyte local HDDs sitting on my table. This is way more cost-effective than using cloud storage of any sort. I'd also argue that with this approach my data is safer, since an entire class of risks related to untrusted third parties and data leaks is noticeably reduced.

(Note: I can't actually show you screenshots of the platform right now, given that I'm writing this article in the middle of the night and my servers have gone to sleep, so that there's no noise and I can sleep as well.)

Admittedly, the solution takes some work to get working properly (such as setting up the proper file access rights), and some tinkering might be needed to strike a balance between the frequency of incremental and full backups, as well as to cope with the fact that the traffic might be pretty large for most residential connections. Despite that, I've found it to be a powerful tool with no vendor lock-in, which is great!

Monitoring

As for monitoring, I'm still not on the Grafana and Prometheus hype train. Instead, I'm using a smaller and older solution that specializes in monitoring servers and happens to be a local project: Zabbix.

In my case, it automatically figures out a bunch of different metrics that should be collected about my servers, collects them, and sends automatic alerts (currently by e-mail) when certain values exceed safe thresholds. The interface can feel somewhat antiquated, but thankfully the software feels reasonably well optimized and is really easy to set up on any server: you just need to install the agent, enable its service and configure it to allow access from your Zabbix server.
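The "configure it to allow access" step usually means setting `Server` (who may run passive checks), `ServerActive` (where active checks are sent) and `Hostname` (which must match the host defined in the Zabbix frontend) in the agent's config file, typically `/etc/zabbix/zabbix_agentd.conf` for the classic agent, and then restarting the agent service. Here's a hedged Python sketch that sets those keys in the file's contents; the IP address and hostname in the example are hypothetical.

```python
import re


def set_agent_options(config_text, options):
    """Set `Key=value` options in a zabbix_agentd.conf-style file.

    Active lines for a key are replaced (extra duplicates are dropped), missing keys
    are appended, and commented-out lines are left alone. Meant for single-valued
    keys like Server or Hostname, not repeatable ones like UserParameter.
    """
    remaining = dict(options)
    lines = []
    for line in config_text.splitlines():
        match = re.match(r"^([A-Za-z]+)=", line)
        if match and match.group(1) in options:
            key = match.group(1)
            if key in remaining:
                lines.append(f"{key}={remaining.pop(key)}")
            continue  # skip the old value (and any duplicate active lines)
        lines.append(line)
    lines.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = "# Server=\nServer=127.0.0.1\nHostname=Zabbix server\n"
    print(set_agent_options(sample, {
        "Server": "10.0.0.5",        # hypothetical Zabbix server address
        "ServerActive": "10.0.0.5",
        "Hostname": "web-1",         # hypothetical host name from the frontend
    }))
```

Writing the result back and restarting the agent is left to whatever provisioning tool is already in use, such as an Ansible task.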

Summary

There are many aspects to every software deployment, and running your own hybrid cloud isn't always easy, but thankfully there are a lot of free and open source projects and tools out there that can make all of this both easier and more cost-effective! Of course, it still takes constant work to keep everything running, but containers can ease some of that pain too. On that note, I'm curious to see where I'll be in 10 years and how things will have changed.

Perhaps I could even make an optimistic prediction:

In 2031, most of the software that I'll be using for my hybrid cloud will have sane defaults and will be easy to set up in immutable ways. There will also be a clear distinction between the files needed at runtime and the persistent data directories. And even where the technical complexity could get pretty high, there will be projects, much like K3s today, that allow using standards-compliant yet technically simpler alternatives.
