Using AI for learning programming


In recent years, AI has gotten a lot of attention. At first, much of it was hype around what the technology could someday do, but more recently there have been gradual improvements with practical applications in everything from generating text to creating art, albeit not without controversy.

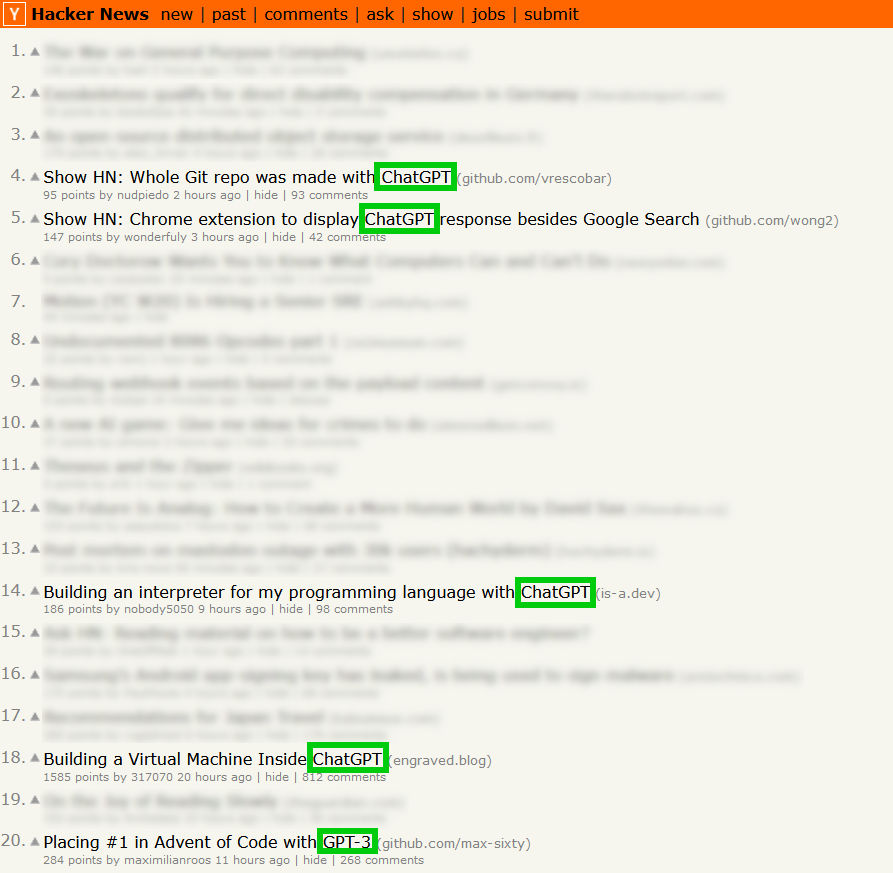

Now, imagine opening one of your favorite technology sites and a good deal of the posts on the front page are about AI:

That's rather interesting, you might say! It just so happens to be shortly after ChatGPT was released by OpenAI. They're also the lovely people behind projects like DALL·E 2, an AI solution for generating images. This time, however, they're going after the goal of building an AI solution that can chat. One could say that the industry has seen many attempts at doing that previously, everything from digital assistants, to attempts at creating fun experiences, like AI Dungeon - an AI storyteller that can narrate adventures for you, like when you'd play a game of Dungeons & Dragons.

However, seeing OpenAI go after that goal more seriously now, along with the hype that it generates, does make one wonder whether they've created something a bit more substantial than the previous applications of the technology. Maybe something that could be useful for writing code, just like how GitHub Copilot made writing code easier for anyone who can point an AI at their code and go: "Hey, I'd like some help with what I'm trying to do here, please."

And, since ChatGPT is focused on conversational AI capabilities, presumably with lots of data available in the system as a whole, let's take advantage of it (currently) being free and do just that: see whether we can learn anything new about programming with its help:

So, first up, let's figure out what problem we want to solve.

What we are going to do

My initial goals are rather simple: to ask this AI to tell me a bit about a feature in a programming language that I might not be super knowledgeable about and then to experiment around with some code, ask questions and hopefully have it be a productive learning experience. In particular, I think I'd like to learn a bit more about the Go programming language - most of the work I've done so far has been in Java and .NET, but I'd like to get more comfortable with Go, so I can use it for more than just small pet projects or the occasional microservice. Thus, this is an excellent excuse to do so!

Now, before I've even asked the AI to tell me anything about code, or offer its algorithms or examples to me, many might immediately say something along the lines of: "Hey, AI is known to hallucinate, that is, confidently state wrong things and give you incorrect information."

That is absolutely true, so I'll also state that you should never just blindly trust what any AI tells you: most of our current tech seems to suffer both from having only short-term memory (for example, if you use something like AI Dungeon or Novel AI to weave a story, it keeps forgetting details) and from having no concept of being fallible itself. Then again, you know what else has problems with information that can get out of date quickly, or be outright wrong? The Internet.

I'm surely not alone in saying that I've often looked up code examples that either don't follow best practices, or just straight up no longer work: be it because they're for Python 2 now that only Python 3 is supported, or perhaps because they're referencing an older framework version (especially prevalent with Ruby on Rails a while back, if I recall correctly). That said, many of those partially incorrect answers can still be good for one thing: "exploratory learning".

You don't need to treat every piece of information that you find as an authoritative and true source. In our modern day world, I'd say that you need to verify most claims, be it something that you hear on the news, something that you read in the summary of some scientific research piece, or some code that you want to run - code that compiles and produces the expected output is generally a pretty good sign that you're on the right track! But getting there will take some effort in many cases.

So, let's talk a little bit about that first!

Exploratory learning

When I try to find out new information, a lot of the time is spent Googling around (or using other search engines or sources) and experimenting with various approaches and bits of code. I know that I've found a proper solution when my code finally runs properly and I understand how I got there.

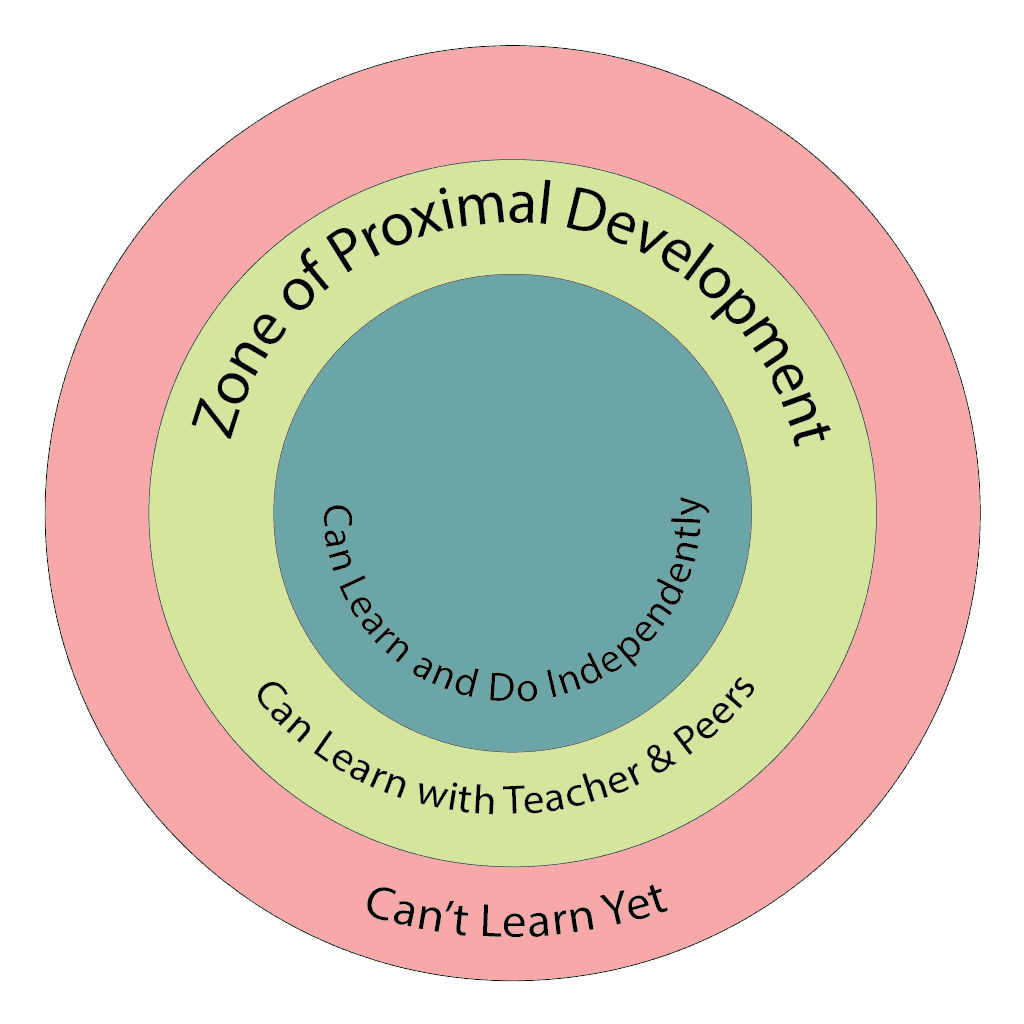

But at the same time, a lot of it can amount to being stuck in the zone of proximal development. The model behind that term describes three stages of learning a new skill.

For example, with regard to learning a new language, it might look like this:

- A: Tasks You Cannot Accomplish With Assistance - this is where you will start out, your code won't compile, you won't know how the language works or what is wrong, even the terms will be unknown to you

- B: Tasks You Can Accomplish With Assistance - with documentation, compiler messages, or someone to help you, you'll be able to get your code to compile and eventually, work correctly

- C: Tasks You Can Accomplish Without Assistance - with more practice and experience, this is where you'll end up, being able to use the language in your daily life, possibly professionally

Now, when writing code in particular, the first stage won't be that pronounced: if you go from Java to .NET or vice versa, you will probably have some idea of how your code will work. The same can be said for most modern languages in some regard: like Node, Go, Ruby, Python, even PHP. Of course, if you venture too far off this beaten path and try to learn Rust, C, C++ or Haskell, you might get more familiar with this stage in particular.

For example, asking "What is a monad?" might provide the following answer:

All told, a monad in X is just a monoid in the category of endofunctors of X, with product × replaced by composition of endofunctors and unit set by the identity endofunctor.

That would surely take a bit more work than just getting comfortable with the differences between prefixing variables with $ and @, as well as what they mean in various languages.

Then, there's the second stage: almost any developer out there will be more or less familiar with using StackOverflow for getting answers to their questions and figuring out what to do about various error messages. Many might also look up language guides when learning a new language, and most modern ones even have guided tours. For example, the Go programming language has A Tour of Go, which walks you through getting started.

Now, in theory, this should be enough to help you get from the second stage to the third. But that's not always the case - what if you don't understand some of the information that's offered and want it phrased differently or elaborated upon? What if you don't have friends or colleagues who personally use Go, or they cannot help you at the moment? What if your StackOverflow question gets left unanswered, or is simply downvoted without giving you what you need? Or maybe you get the desire to learn more about the language and want to keep doing that while you still have the motivation and time, instead of in a week when your questions get answered?

That's where AI comes in, at least that's my theory. If you get AI that is sufficiently good, it should be able to help you along and, even if it's wrong in many cases, offer you terms and examples that you can play around with. I'd say that one of the good things about GitHub Copilot and other AI autocompletes isn't the fact that they produce excellent code, because they don't - instead, they give you something to get you started and prevent you from being stuck in this zone of proximal development. Sometimes that sort of a push is all that you need.

Let's get started, first impressions

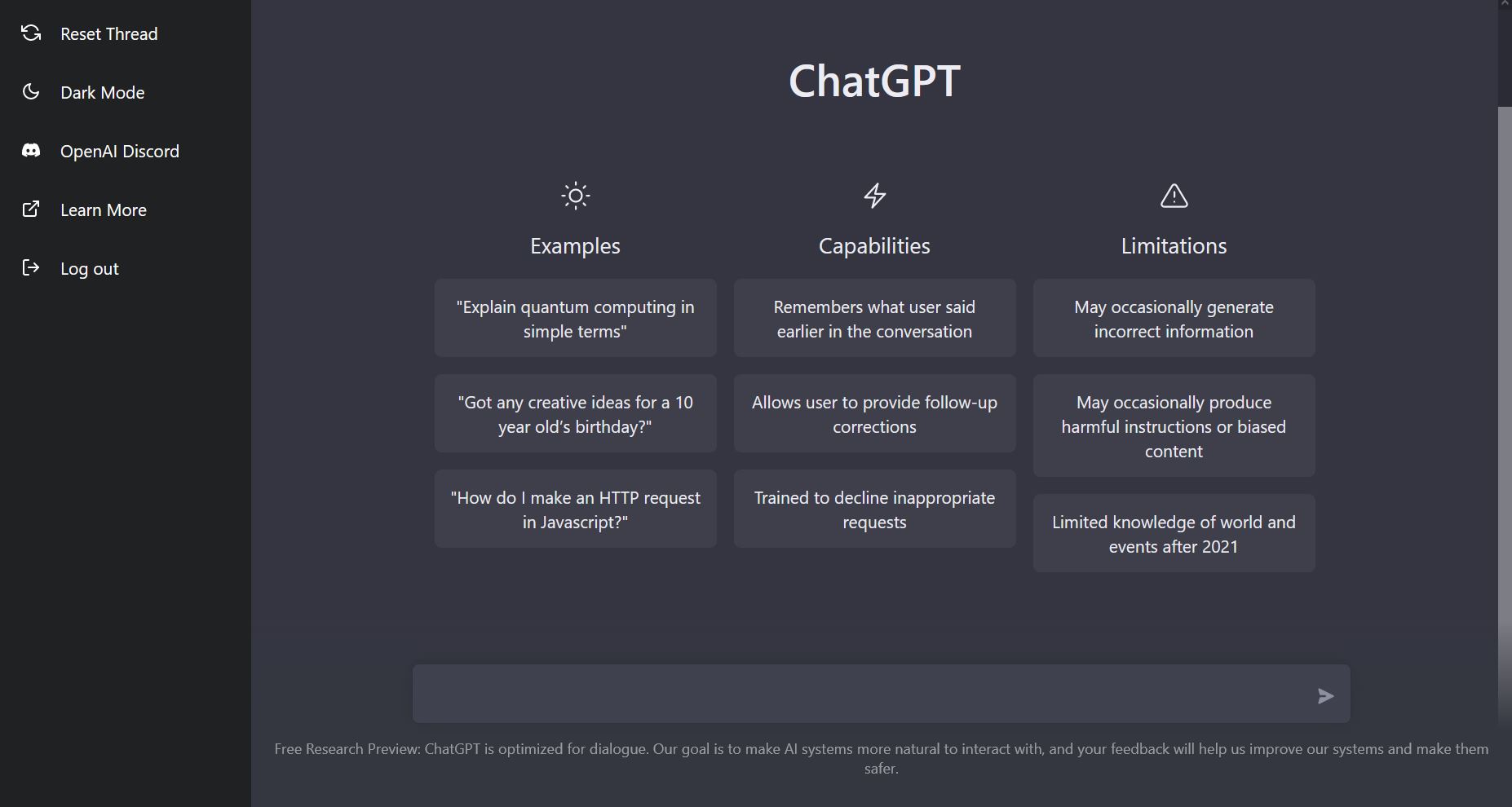

After registering and logging into OpenAI, we're greeted by a rather simple interface, not unlike a regular chatroom and in line with what most other offerings out there do:

So, for starters, let's look at A Tour of Go, the first example of channels you get:

So, let's set the stage and ask the AI to tell us more about channels in Go:

Would you look at that, it actually gives us a little writeup about what channels are and how they can be used! But it doesn't end there, in addition to that little description we're also given a code example of how one could use channels, which is commented and doesn't appear to just be ripped out of A Tour of Go either:

In addition to that, we get a little bit of an explanation of the code in question, of how it works:

Now, first up, where does all of this come from, did the AI just generate some code of its own? It doesn't quite appear so. If we look up snippets of the code on a search engine of our choice, we're brought to "Go by Example: Channels", which looks a lot like what we just got:

Only the summary below and the intro above seem to actually be different from what's on the site, though it's curious to see the notes beside the code becoming code comments here. But there's another concern here: let's look at the license that Go by Example has:

It is "Creative Commons Attribution 3.0 Unported License", which is explained in simpler terms here, with one particular aspect jumping out:

I don't think I can see a reference to Go by Example in the UI of ChatGPT anywhere, which might be a little bit concerning to some.

Either way, let's move on for now and check another important thing - does the code actually run? For that, I have a project set up locally with GoLand and whatever Go version feels recent at the time (1.19.3 in my case), where I can just copy the code and check whether it runs:

Seems like the code actually runs, so no problems there! But that's not exactly groundbreaking, now is it? So far it's like the AI just took some data from its training set, which essentially amounted to it picking the equivalent of a Google search result for us.

Let's interact with the AI more

Let's test how it works when we actually try asking for it to do something to the example code.

What we have so far is the following:

```go
package main

import (
	"fmt"
)

func main() {
	// Create a new channel with make(chan val-type).
	// Channels are typed by the values they convey.
	messages := make(chan string)

	// _Send_ a value into a channel using the `channel <-`
	// syntax. Here we send `"ping"` to the `messages`
	// channel we made above, from a new goroutine.
	go func() { messages <- "ping" }()

	// The `<-channel` syntax _receives_ a value from the
	// channel. Here we'll receive the `"ping"` message
	// we sent above and print it out.
	msg := <-messages
	fmt.Println(msg)
}
```

Personally, I think that it would be nice to get rid of the comments for now and maybe try extracting the function so it sits next to main, not inline, maybe even give it a name of its own, just to make everything easier on the eyes. Not really learning much about the functionality of channels yet, just making everything more comfy for myself. So let's see if we can do that:

Oh hey, that worked! So the AI seems to at least have a notion of what a code comment is and how to delete them, which is nice. Next up, let's see whether it can extract the function. It would actually be really nice if the code output had line numbers that I could refer to, but for now let's just try to awkwardly tell it what we want:

No problems so far there either. It seems to extract the function and even tells us what it did below:

However, that's not really a good refactoring. What use is a function that can only send one particular message to the channel? Let's ask for further refactoring:

Once again, it does what we ask, at least for the most part. It didn't rename the first parameter to channel and left it as messages instead. It does provide an explanation for the changes it did do, however:

Now, some people might think that these changes are pretty needless, but it's still nice to see that the AI understands roughly what I want to get out of it and is able to provide said outputs with a bit of effort on my part. This is not that different from giving a co-worker some code feedback, except that results are returned in a second or two. I'm under no illusions that this wouldn't break under any non-trivial cases, or anything outside the scope of small snippets for now, but it's still nice to see that things so far work.
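Since the refactored version is only described in passing here, this is a rough reconstruction of where the code stood at this point. It is a sketch pieced together from the later listings (the sendToChannel name and the messages parameter both show up there), not the AI's verbatim output:

```go
package main

import "fmt"

// sendToChannel sends an arbitrary string into the given channel,
// instead of the hard-coded "ping" from the first extraction.
func sendToChannel(messages chan string, message string) {
	messages <- message
}

func main() {
	messages := make(chan string)

	// The send still happens in its own goroutine, as in the original example.
	go sendToChannel(messages, "ping")

	msg := <-messages
	fmt.Println(msg) // prints "ping"
}
```

The only real change compared to the inline version is that the message became a parameter, which is about as far as this refactoring needs to go.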

The first bit of wrong code

Hmm, why not introduce another adjacent concept into the mix? After all, I might be interested in sending something more than just string messages to my channel, right? So, let's ask for some generics, to see whether it knows of them or not:

Here, it actually gives us some output, with both of the parameters replaced with interface{} and an explanation of how that works in Go (which is not quite what I wanted, but it might not be up to date with language features just yet, or understand exactly what I'm asking for), alongside a disclaimer about how use of interfaces like this can make code more difficult to reason about:

However, this is where we run into problems! This is the code that it gives us:

```go
package main

import (
	"fmt"
)

func sendToChannel(messages chan interface{}, message interface{}) {
	messages <- message
}

func main() {
	messages := make(chan string)

	go sendToChannel(messages, "ping")

	msg := <-messages
	fmt.Println(msg)
}
```

The thing is, it doesn't actually work:

The AI says it should, but it doesn't. This is why we shouldn't trust it and instead do a bit of exploration of the error message in question:

```
cannot use messages (variable of type chan string) as type chan interface{} in argument to sendToChannel
```

So, what does the Internet tell us? Something that's vaguely useful but obviously is not our exact case:

I recall AngularJS error messages back in the day, which linked you to a documentation page, which actually explained things with your exact variable names for known errors. So, for example, you'd get a URL like the following https://docs.angularjs.org/error/$compile/baddir?p0=MyDirective- from the console output and would be greeted by the corresponding documentation page:

While I really disliked AngularJS (mostly due to the projects that I saw it being used on), I rather enjoyed the approach.

If such a documentation site was running locally, I could easily imagine myself having very few if any objections to actually using something like that, or having a plugin to have the full library/framework documentation right there in the IDE! It is too often that you search for your particular case on the Internet, just to find something like what you're looking for, but not exactly.

Now, can the AI help us here? Let's ask:

Well, the good news is that it can definitely help us here with no issues. It tells me that the method expects the channel to have the interface{} type but instead we pass it a string type from our main method and even lists the exact channel definition that goes wrong. This is super helpful, in case any of the error messages that you get aren't.

It even goes into a bit more detail about the bit of code that causes the issue and gives us a name for it: "a type mismatch error", which is surely going to be useful if we then want to Google (or DuckDuckGo, I guess) for more information, as well as explore the topic in more detail. For example, we could stumble upon the following:

This is definitely a useful part of learning, just finding and absorbing more information on the topic, so it helps to know vaguely what to look for. Though we probably want to fix our code first, before jumping into the topic more deeply. Either way, it's nice that the AI helps us streamline our learning a little bit, or at least gives us ideas of things to look up.

Let's make the AI fix its errors

The AI still had a few things to say about what we can actually do to fix the error that we are getting now, even offering multiple options for how to do it:

So, let's accept the suggestion:

(notice that even my typo didn't confuse the AI)

And voila, we get the following code:

```go
package main

import (
	"fmt"
)

func sendToChannel(messages chan interface{}, message interface{}) {
	messages <- message
}

func main() {
	messages := make(chan interface{})

	go sendToChannel(messages, "ping")

	msg := <-messages
	fmt.Println(msg)
}
```

It also works as expected:
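For comparison, this is roughly what I had in mind when I asked for generics. Type parameters are available from Go 1.18 onwards, so they should work with the 1.19.3 toolchain I'm using. Treat it as a sketch of my own and not something the AI produced; the type parameter T is my addition:

```go
package main

import "fmt"

// sendToChannel is generic over the element type T, so the channel
// and the message must agree on one concrete type at compile time.
func sendToChannel[T any](messages chan T, message T) {
	messages <- message
}

func main() {
	texts := make(chan string)
	numbers := make(chan int)

	// T is inferred from the channel type in both calls.
	go sendToChannel(texts, "ping")
	go sendToChannel(numbers, 42)

	fmt.Println(<-texts)   // prints "ping"
	fmt.Println(<-numbers) // prints 42
}
```

Unlike the interface{} version, passing a chan string together with an int message here would be rejected by the compiler, which is the kind of safety I was hoping to keep.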

Let's explore Go channel directions, while we're at it

Now, we did stumble upon the channel type page, which also told us of channel directions, so why not ask for a demonstration of that?

This time, it seems to get things right, both in regards to adding the direction and doing the renaming. It even adds a little blurb about the tradeoffs that we're making here, except that it doesn't seem to recognize that a method called sendToChannel would probably only ever be used for sending things to a channel:

Why not ask for a method that does the opposite and instead reads the data from a channel and also use it in the code?

Once again, it seems to perform as expected and gives us a working example!

Here's the code in question:

```go
package main

import (
	"fmt"
)

func sendToChannel(targetChannel chan<- interface{}, message interface{}) {
	targetChannel <- message
}

func readFromChannel(targetChannel <-chan interface{}) interface{} {
	return <-targetChannel
}

func main() {
	messages := make(chan interface{})

	go sendToChannel(messages, "ping")

	msg := readFromChannel(messages)
	fmt.Println(msg)
}
```

Of course, at this point our code looks a little bit like something you'd see in Java (just kidding), so why not ask for something subjective instead:

(Note: I've removed a paragraph or two of output, because it did the thing where AI repeats the same thing, just with slightly different wording; which wouldn't fit on the screen for a simple screenshot)

Even now, the response itself seems decent. It adds that we could have error handling in the code, as well as timeouts. It also tells us that channels are a first class citizen in Go, so in many other cases we won't really need to get too carried away with extracting methods for everything. It doesn't seem as useful as what a person who has actually used these things would tell us, but on a surface level, there doesn't seem to be anything particularly wrong with the answer, it's just a bit wordy.

Let's make the channels actually useful

But sending just a boring "ping" to the channel is a bit useless, so let's ask the AI to use more data for our test code example:

Now, the code is getting a bit longer, but it also added code to the read method to get all of the output as well, which is nice, especially because it explains the change:

The code still works as expected, no surprises here:
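The exact listing isn't reproduced here, but the idea can be sketched roughly as follows. The count parameter and the slice return value are my own assumptions for illustration, so the AI's version may well have looked different:

```go
package main

import "fmt"

func sendToChannel(targetChannel chan<- interface{}, message interface{}) {
	targetChannel <- message
}

// readFromChannel reads exactly count values; it relies on the caller
// knowing how many values will be sent, because nothing closes the channel.
func readFromChannel(targetChannel <-chan interface{}, count int) []interface{} {
	values := make([]interface{}, 0, count)
	for i := 0; i < count; i++ {
		values = append(values, <-targetChannel)
	}
	return values
}

func main() {
	messages := make(chan interface{})

	for i := 5; i <= 10; i++ {
		go sendToChannel(messages, i)
	}

	// Six values were sent (5 through 10); their order is not guaranteed.
	for _, msg := range readFromChannel(messages, 6) {
		fmt.Println(msg)
	}
}
```

This works only because the reader is told up front how many values to expect.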

But surely that's not how you're actually supposed to work with channels, right? What if we want to keep receiving data until the channel would signal to us that it has nothing more to give? Let's ask about that, without using the term "close", since we might know nothing about Go in particular:

Okay, so the theoretical explanation is on point, but what about the implementation? Here things once again are not ideal:

It doesn't put the code in the actual method that we set up for reading data from the channel, it actually tosses that method aside altogether. Of course, you can also notice that it would read values from the channel until the channel is closed... right after it has already closed the channel in the lines above!

The code once again breaks, we make it worse

It actually starts explaining the changes, but then just gives up, so it's not that different from me on a Monday morning in that regard!

However, the code also no longer works:

So, let's try to have it fix that for us, with what might seem like a reasonable guess on our end:

Except that the offered code changes now cause a panic and the program crashes:

If we try to debug the code, we see that there is no message for us to use and the "ok" variable indicates that the channel is closed, so trying to read from it wouldn't be a good idea:

However, the error seemed to claim that the issue is with actually sending data, not reading it, which might feel odd. What we do know right now, however, is that the changes we asked for were erroneous and we should revert them:

It even seems to elaborate why we might have been getting a runtime error.
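To make those two behaviours concrete, here is a small sketch of my own that shows what reading from and writing to a closed channel actually does. The trySend helper is a hypothetical name, and recovering from the panic is done purely for demonstration:

```go
package main

import "fmt"

// trySend reports whether sending succeeded; it recovers from the panic
// that Go raises when sending on a closed channel.
func trySend(target chan<- interface{}, message interface{}) (sent bool) {
	defer func() {
		if recover() != nil {
			sent = false
		}
	}()
	target <- message
	return true
}

func main() {
	messages := make(chan interface{}, 1)
	messages <- "ping"
	close(messages)

	// Values buffered before the close can still be received.
	msg, ok := <-messages
	fmt.Println(msg, ok) // prints "ping true"

	// Once drained, a closed channel yields the zero value and ok == false.
	msg, ok = <-messages
	fmt.Println(msg, ok) // prints "<nil> false"

	// Sending on a closed channel panics ("send on closed channel").
	fmt.Println(trySend(messages, "pong")) // prints "false"
}
```

So reading from a closed channel is harmless, while writing to one is what crashes the program, which matches the error pointing at the send.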

Let's fix our own code (but not really), and try to tell the AI to do the same

If we look around, we might get some helpful comments online about similar circumstances:

So, it would appear that we should only close the channel when we've finished writing to it. The first idea that we might get would be to add a new parameter to our sendToChannel function, and make the channel be closed on the last loop iteration, after we've written the last bit of data to it:

After only testing it once and seeing that it succeeds (frankly whether we get the race condition is a coin flip), let's ask the AI to make similar changes:

It looks pretty chaotic, like something that you typically shouldn't see in a code review under the suggestions to be implemented (perhaps not without line breaks and code snippets), but the AI does admirably and changes everything as suggested:

Of course, that still doesn't fix our code and there is that pesky race condition. It's almost as if goroutines are not guaranteed to execute in order, which has been clearly visible in our logs up until now. For example, you might get the "last" iteration somewhere in the middle of the execution, which will close the channel and result in errors:

So now what?

Let's ask the AI on how to fix everything

First, let's admit that our first hunch was wrong and get rid of the changes:

So, we're back to the previous bit of code that produced no output, however at least the AI could do what we asked (apart from maybe missing the bit where I didn't want close present in the first place). I don't see myself using it instead of version control anytime soon, but it seems to be capable of adding, editing and also removing code, sometimes with instructions that are a little bit on the vague side.

Either way, let's get even more vague and admit that we know nothing and would like help. Ideally, there would be something along the lines of isWritingDone (that would insert the equivalent of an EOF) and then isReadingDone (which would be set after stumbling upon this EOF), but that doesn't appear to be the case:

The problem here appears to be the fact that the AI basically gives us the same code example as before. It increasingly feels like channels aren't necessarily the right tool for the job here. If we actually go ahead and do a bit of searching online, once again StackOverflow has the answers that we seek. Not only that, but we can also pick up some new syntax along the way:

```go
for message := range messages {
	fmt.Println(message)
}
```

However, what about telling the AI that its solution doesn't work for us here?

Not only does it give us a message that isn't much more helpful, so nothing along the lines of: "A common mistake when trying to work with channels is..." but it also doesn't give us complete code:

We even tell it that it can skip bits of the output in case that's too long for its character limit, but it chooses to post them anyways:

And somehow also the rest of the code:

Which then also doesn't work and gives us a deadlock error:

By now, we've found a bit of information about sync.WaitGroup but I don't think that I'd be able to get working code out of the AI, nor does it seem keen on acknowledging that its solutions don't work for me.

Here's how far the AI got me, code wise:

```go
package main

import (
	"fmt"
)

func sendToChannel(targetChannel chan<- interface{}, message interface{}) {
	targetChannel <- message
}

func readFromChannel(targetChannel <-chan interface{}) interface{} {
	return <-targetChannel
}

func main() {
	messages := make(chan interface{})

	for i := 5; i <= 10; i++ {
		go sendToChannel(messages, i)
	}

	// Use a separate goroutine to close the channel
	// after all values have been sent
	go func() {
		for i := 5; i <= 10; i++ {
			<-messages
		}
		close(messages)
	}()

	// Use a for loop to read values from the channel
	// until the channel is closed
	for {
		msg, ok := <-messages
		if !ok {
			// The channel is closed, so exit the loop
			break
		}
		fmt.Println(msg)
	}
}
```

Instead, what I actually wanted it to help me get to was a bit more like this:

```go
package main

import (
	"fmt"
	"sync"
)

func sendToChannel(targetChannel chan<- interface{}, message interface{}, waitGroup *sync.WaitGroup) {
	targetChannel <- message
	waitGroup.Done() // writing to channel is finished
}

func readFromChannel(targetChannel <-chan interface{}) interface{} {
	return <-targetChannel
}

func main() {
	const howMuchDataWeHave = 6 // we need to know the length of data ahead of time
	messages := make(chan interface{}, howMuchDataWeHave) // we use a buffered channel here now
	var waitGroup = new(sync.WaitGroup)

	for i := 0; i < howMuchDataWeHave; i++ {
		message := i + 5 // this could honestly have nothing to do with the index, could be a DB index or whatever
		waitGroup.Add(1) // we note that we're going to be initializing data
		go sendToChannel(messages, message, waitGroup) // in particular, we could use goroutines for lots of parallel data initialization
	}

	waitGroup.Wait() // we ensure that we'll only close the channel, after all the writing has been done
	close(messages)

	for message := range messages { // despite the channel being closed, we'll be able to iterate over it for reading with range
		fmt.Println(message)
	}
}
```

Now, don't get me wrong, it provided some solutions for problems along the way, as well as suggestions, but clearly didn't quite understand what I was after. It can be helpful in the process of learning things, of course, but at the same time has the drawback that it won't come up with many new ideas or challenge yours, suggesting that perhaps you need to rethink your approach. For example, above I needed to explicitly ask it for help with fixing an error that it was perfectly able to figure out, even though it had sent me the code with that error in the first place.

Or how it didn't suggest that perhaps a buffered channel with a wait group for writing data might have been more like what I wanted. Or, going a step further, critique the current implementation that I made and offer suggestions about how to improve it, or perhaps simply explain first, how normally you wouldn't need a goroutine to write data to a channel for something as simple as a text value you already have, but how that might be better for something like initializing data from the database in parallel. Or maybe how assumptions about when to close the channel might need to be made with consideration, because those goroutines will execute in a pretty random order.
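One commonly used variation on the code above, offered here as a sketch and not as the definitive answer: let a separate goroutine wait on the group and close the channel. That way the channel doesn't have to be buffered and the reader can start before all of the writers are done. The produce function is a hypothetical helper of mine:

```go
package main

import (
	"fmt"
	"sync"
)

// produce sends every value from its own goroutine and closes the returned
// channel once all of those goroutines have finished sending.
func produce(values []int) <-chan interface{} {
	messages := make(chan interface{}) // unbuffered on purpose
	var waitGroup sync.WaitGroup

	for _, value := range values {
		waitGroup.Add(1)
		go func(v int) {
			defer waitGroup.Done()
			messages <- v
		}(value)
	}

	// The closer waits in the background, so the reader can start right away.
	go func() {
		waitGroup.Wait()
		close(messages)
	}()

	return messages
}

func main() {
	for message := range produce([]int{5, 6, 7, 8, 9, 10}) {
		fmt.Println(message) // order is not guaranteed
	}
}
```

The trade-off is that the closing logic now lives in yet another goroutine, which is arguably harder to follow than the buffered version when you're just starting out.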

Sadly that's all the time I have for now, but that should be enough for some first impressions!

Summary

Overall, to me it felt like a mixed experience, but still one that left me feeling cautiously optimistic about the future of AI.

First up, the AI felt mostly reasonable when asking it arbitrary programming questions about a popular mainstream programming language. The code examples, at least initially, seemed to be "borrowed" from other places, but the AI showed that it is capable of adding new code, editing existing code and even removing bits that I didn't want. Sometimes it did decide to ignore certain instructions, or do incomplete refactoring, but with a nudge here and there it seemed to do okay in that regard, a bit like what pair programming with someone slightly unfocused might be like - except that its responses were faster than anyone is able to write code.

However, the code itself and the answers themselves weren't perfect, obviously. It's good enough for getting some inspiration about how to approach issues and for explaining how some particular bits of code might work, but there's no actual guarantee of all of that being correct, even for seemingly simple examples like the above code. While the code looks like it should work, there is nuance to it that the AI simply glosses over, or it doesn't quite understand what I expect as the output - something that any person would.

It can occasionally throw crumbs your way and help you with exploration a little bit, but short of being able to write tests that either pass or fail and allowing it to iterate on those until everything is green, it's going to give you suggestions at best. Either way, where it currently is, it's capable enough to occasionally help you a little bit and guide you towards researching what you might need, though don't expect it to write all of your code for you, or even always give you the right feedback.

Then again, A Tour of Go tells you how to let a single goroutine close the channel that it writes to, but not how to handle doing that once across multiple goroutines, to close the channel after all of them are finished and somehow also allow reading all of the values:
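For reference, the single-goroutine case is the easy one. A minimal sketch of it, written by me and not copied from the tour (countTo is a made-up name), could look like this:

```go
package main

import "fmt"

// countTo sends 1..n and then closes the channel; the sender is the only
// writer, so it knows exactly when no more values will follow.
func countTo(n int, out chan<- int) {
	for i := 1; i <= n; i++ {
		out <- i
	}
	close(out)
}

func main() {
	numbers := make(chan int)
	go countTo(3, numbers)

	// range keeps receiving until the channel is closed.
	for n := range numbers {
		fmt.Println(n) // prints 1, 2 and 3 on separate lines
	}
}
```

With several writers there is no single place that knows when everyone is done, which is exactly the part that tripped both me and the AI up.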

Some things are just harder than you'd expect at first glance, regardless of whether you use AI for them, or go in blindly and write some code without knowing handy tricks.

But why try to use AI for such a use case in the first place, instead of just GitHub Copilot alone? Because it actually talks to me, in the sense that it explains things about the code that it has written, the meaning of variables or certain operators and why/how/when you might want to use them.

I recall a few years ago there was this product, the goal of which was to be able to point your mouse cursor at a piece of language syntax and it would tell you what you are looking for. No, not just bring up documentation for a library method, but tell you what:

```go
targetChannel <-chan interface{}
```

in Go might be and how that could be different from:

```go
targetChannel chan<- interface{}
```

To me, that feels as important, if not more important than just generating new code. After all, a lot of our time is spent reading existing code, which might not always have documentation and comments to explain what it does, or which might have all sorts of peculiarities that we might gloss over. Having an automated solution that's capable of helping you in any way is generally a great idea to make your experience more bearable, which is definitely one of the better aspects of using an IDE already.
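As for the two declarations above, the answer such a tool would need to give is fairly short: the arrow before chan means receive-only, the arrow after chan means send-only. Here is a small sketch of my own to illustrate that, with drain and fill being made-up names:

```go
package main

import "fmt"

// drain may only receive: the arrow sits before chan (<-chan).
func drain(source <-chan interface{}) []interface{} {
	var values []interface{}
	for v := range source {
		values = append(values, v)
	}
	return values
}

// fill may only send (and close): the arrow sits after chan (chan<-).
func fill(target chan<- interface{}, values ...interface{}) {
	for _, v := range values {
		target <- v
	}
	close(target)
}

func main() {
	// A bidirectional channel converts implicitly to either direction.
	messages := make(chan interface{}, 2)

	fill(messages, "ping", "pong")
	fmt.Println(drain(messages)) // prints "[ping pong]"

	// Writing `source <- 1` inside drain, or `<-target` inside fill,
	// would be rejected by the compiler.
}
```

The directions don't change what happens at runtime, they just let the compiler catch a function using a channel the wrong way around.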

In my opinion, various AI assisted tools are likely to be a continuation of improvements in that direction, though not without their own issues. You might have noticed a "Try again" button in the interface of ChatGPT, which is actually a way around some of the issues of the current implementations - with other AIs, like Novel AI, you also sometimes find yourself repeatedly re-generating bits of text just to see whether any of the responses will be better, because what you get currently is somewhat underwhelming. Of course, our current AI models might simply have no ways around certain issues, such as understanding context, or validating which claims are true and which are not, and those will need to be addressed before there's greater utility in them.

I'd argue that in regards to code we might just be in a better spot than trying to understand what people are saying or what the meaning behind those words is - because text is easier to parse and computer code in particular will either fit a set of constraints and be valid code that works, or not. Of course, you might also get code that compiles but has runtime exceptions which is an unfortunate reality of most of our current programming languages, but since we can split many of our problems up into smaller algorithms or methods, it can also sometimes be easy to reason about them, which makes programming easier for AI in some regards, especially when there's lots of available code out there.

Future predictions

You know, in addition to the summary above, let's do something fun and be a bit optimistic here: I foresee that in the future AI will have great use in making development flow more easily, so let's make some predictions!

I hope that around 2025 I'll be able to: have AI generate code that fits within the style and conventions of a project that I might be working on (e.g. what else is in the project directory), or have it do light refactoring based on common patterns, like a tuned version of GitHub Copilot with more knowledge of context. This would most likely be an incremental improvement upon what we already have.

I hope that around 2030 I'll be able to: point AI at a piece of my code alongside some error output from trying to run the code and have it give me actionable solutions on how to fix it. Not only that, but maybe try some of those solutions (perhaps in a sandboxed environment) until the tests pass, provided that tests can even be written for it (easier for back end code, harder or at least slower for front end code). It'd be a bit like decreasing iteration times in the typical process of: "Read the error message, look up whether someone has had similar issues online, see how their issue maps to yours, implement bits of their solution, test whether things improve, repeat if they don't."

I hope that around 2035 I'll be able to: have AI act as a constant companion in regards to writing code. If my current IDE already has context suggestions occasionally pop up, I foresee that we might end up with a highly optimized version of this, which would suggest new language features to me, or things that are useful to know from framework or library release notes. Maybe it'll help me do some super boring refactoring for the new version that's released and help me stay up to date? Perhaps it'll pull in crowdsourced information on what breakages might be expected and will help me address those? Maybe it'll see that I'm writing some bad code or at least sub-optimal code live and will suggest how to improve it?

I hope that around 2040 I'll be able to: point AI at a discussion in code review, where my colleague and I disagree, and have the AI offer us additional arguments that might support either or both positions, based on what other projects have done similarly or differently. So something that goes a bit beyond static code analysis that's based just on rules, but also looks at what's happened in GitHub Issues industry-wide and can at least link those for further consideration. Who knows, at that point we might have a proper AI and language server integration, linter integration and other things like that, possibly even making AI smart enough to assist with lots of badly written code and legacy projects in some way.

People might suggest that eventually AI might be the one writing the code, though I wouldn't quite rush that far just yet - I'm sure that at first, it's just going to be a nice bit of help for those willing to embrace it and help us in our programming process. After all, nowadays hardly anyone is opposed to using IntelliSense or any of the smart code completion alternatives, outside of a few text editing purists.
