I blew through 24 million tokens in a day


Suppose I have a legacy project, or even just an older project I worked on previously, and I now want to carry the mechanisms that made it work over to a new one.

All sorts of custom functionality: wrappers around the underlying library components that add their own behavior, like being able to tell when any input element has been touched and the form should be considered dirty (so you can prompt before navigating away), links and navigation logic that integrate with this, custom i18n utilities built on top of pre-existing solutions, validators, date and number formatting utilities (maybe with moment.js and currency.js integrated) and so much more.

There might be dozens, sometimes hundreds, of such files in a project that has over a thousand source files in total, and they're obviously not as loosely coupled as they could be (e.g. as a collection of separate packages), because nobody has the time to set that up and that's never how these things evolve in projects that span 5 or more years.

Yet, I don't want the business functionality. I don't want the constants from that project. I don't want the router routes, but I want the logic for nested routes, I want the logic for highlighting the route group in the navbar/sidebar when a route nested under it is active, and I want at least some of the permission checks, the more generic redirects to the login page, error handlers, error boundaries and so much more.

You'll notice that this example is front end centric, but it could just as well be back end centric. In either case, what I have is a lot of requirements, and writing everything from scratch never works out well, especially when there are perfectly serviceable implementations for me to reference instead of rediscovering the edge cases anew. But maybe I want to migrate it all to TypeScript, maybe I want to move from Vuetify to Quasar, or perhaps I even want to explore implementing the same functionality with Pinia, or getting rid of it.

The one thing I don't have, however, is time. Nor do I think I necessarily have enough motivation or working memory to go through 100 components and update all of them in a specific way. Everyone has deadlines and even if it's a personal project, the evenings are only so long. So, it's the perfect use case for generative AI, right?

Generative AI and its main problem

Well, sort of. Having generative AI at your fingertips does a few good things:

- it handles boilerplate-adjacent tasks pretty well

- even more so if you can give examples of what to do and not to do

- furthermore, whatever it spits out isn't too hard to verify as correct/incorrect

- it doesn't get tired or demotivated, and you can easily steer it in a particular direction

It's like having a very motivated junior developer who sometimes does completely erroneous things, but at the same time has pretty much no ego and will carry out your bidding, which you will never get from real human beings (nor should you expect to). In other words, it's a tool.

Unfortunately, hundreds of files is still a lot, even for most of the AI solutions out there. Not if you're swimming in money, with 1000 EUR or more a month to spend on it, as these people did a while back:

> Frustrated [with the LLM-isms, like changing failing tests into skipped tests, overcomplicating changes, unnecessary dependencies], I tried switching from Claude Sonnet to the new o3 thinking model. I knew o3 was painfully slow, so I took the time to write out exactly what I knew, and what I wanted the solution to look like, and gave it some time to work. To my surprise, the response was… great?
>
> The more I tried it, the more I found o3’s improved ability to use tools, assess progress, and self-correct led to results that were actually worth the wait. I found myself expanding what terminal commands I allowed the agent to run, helping it get further than ever before. When I completed a hard “o3-grade” task and moved on to something simpler, I was increasingly tempted to leave it on o3 instead of switching back. Sonnet was faster in theory. But o3 was faster in practice.
>
> The only problem was, it was costing a fortune.
>
> Depending on the task, my o3 conversations were averaging roughly $5 of Cursor requests each, or about $50 a day. That… is a lot of money.
>
> Still, we were in a hurry. And what is a startup if not a series of experiments? So I turned to my co-founder.
>
> A: Jenn, I have a proposal. You’re going to hate it. ... So you know I’ve been getting really good results from o3. I propose we try just defaulting to o3 for the next 3 weeks until our demo, and increase our Cursor spending cap to $1000/mo.
>
> B: That’s a lot of money.
>
> A: I know. It’s ridiculous. This is ridiculous. But also, when we hire a Founding Engineer, they will cost a lot more than that. Like, 10x more.
>
> B: …Okay. Let’s try it. If it’s not worth the cost, we’ll go back.
>
> A: So we tried it. And, to both of our horror, it was worth the cost.

They go into a bit more detail, so if you're interested in their experience, go read that post. I'm linking it because it's an interesting look at things: "This tool that still needs a human to manage it and is sometimes flawed still has value in it, and even if the sticker price might give some people shock, it's still far cheaper than a full time employee."

Understandably, that kind of thing might make sense if you're a business that wants to support its developers. I'd honestly expect most businesses out there to make the best tools available to their developers, as per The Joel Test, although don't overlook that I said "available" rather than "forced to use". I'd only apply the latter to very few tools, maybe automatic code formatting and linters that apply fixes whenever possible, for the sake of consistency, while otherwise staying out of your way.

I, however, am not a company with 1000 EUR to burn per month; even spending 100 EUR on Google Gemini API tokens hurt. At the same time, as the task above suggests, I want to work with larger amounts of data than most users of generative AI, to the point where most models I could run on-prem just wouldn't have enough memory for the context, even with 4-bit KV caches and 4-bit quantized models, not to mention that those models themselves aren't very good.
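To put a rough number on why the context alone eats memory: the KV cache stores one key and one value vector per layer, per KV head and per token, so it grows linearly with context length. The sketch below uses a hypothetical model shape (32 layers, 8 KV heads, head dimension 128) that doesn't correspond to any particular model, and it ignores the scale factors that real quantized KV caches also store. The model weights need memory on top of this.

```python
GIB = 1024 ** 3


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   context_tokens: int, bits_per_value: int) -> int:
    """Approximate KV cache size for one sequence.

    Two vectors (key and value) per layer, KV head and token; ignores
    quantization scales/metadata and any runtime overhead.
    """
    values = 2 * num_layers * num_kv_heads * head_dim * context_tokens
    return values * bits_per_value // 8


# Hypothetical model shape, for illustration only.
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128
CONTEXT = 131_072  # a 131k-token context window

for bits in (16, 8, 4):
    size = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, CONTEXT, bits)
    print(f"{bits}-bit KV cache for {CONTEXT} tokens: {size / GIB:.1f} GiB")
```

With these made-up numbers, one 131k-token context needs 16 GiB at 16-bit and 4 GiB at 4-bit, before counting a single weight.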

Inevitably, I have to look at cloud options, and my main problem with generative AI isn't that it's unethical or that it's stealing, or that it might replace my job and those of countless other people and lead to an economic crash that could turn out way worse than the one in 2008, all while killing human creativity and possibly cognitive abilities in the process - it's that there just isn't enough AI at my disposal. Yes, I sometimes write in an absurdist style.

So, I looked at what my options realistically were.

Shilling for Cerebras Code

Most of the services out there go out the window: Claude Code, Gemini and ChatGPT subscriptions are all too restrictive in regards to rate limits and how many tokens you can shuffle around. With them, my only option would be paying per token, and while I can do that for a smaller number of queries, it just wouldn't work at my scale.

Eventually I found a subscription from Cerebras, a company that makes its own chips, which are quite different from Nvidia's GPUs:

I won't try to get into the technical aspects of it all, nor try to figure out why they aren't 10x bigger than they currently are, but the bottom line is that they can run LLMs sometimes 10-20x faster than other providers. Their materials say up to 30x, which is probably a best-case figure that varies case by case, but either way: they're fast.

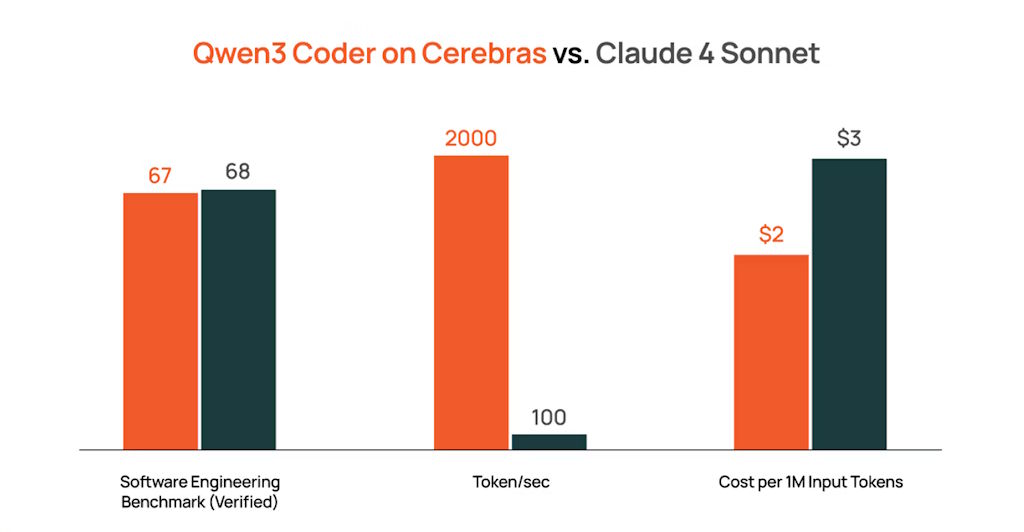

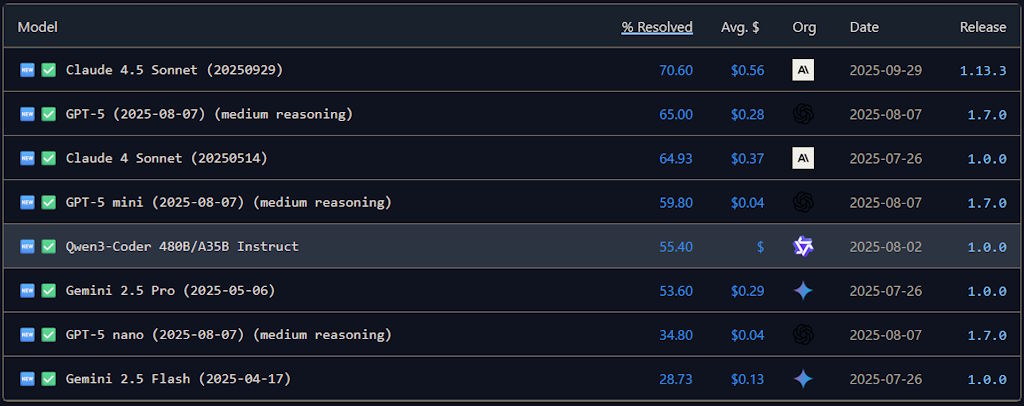

Also, see the Qwen3 Coder model they mention? It's the 480B variant, which compares pretty favourably with most of the other models out there and holds its own against Google's offering, both in my personal experience and in some of the benchmarks on SWE-bench:

I'm slightly frontloading this to explain that this setup isn't cutting edge when it comes to model quality, but it's more than competent, and at that speed, needing 2 or 3 attempts to get things right costs you only a few seconds.

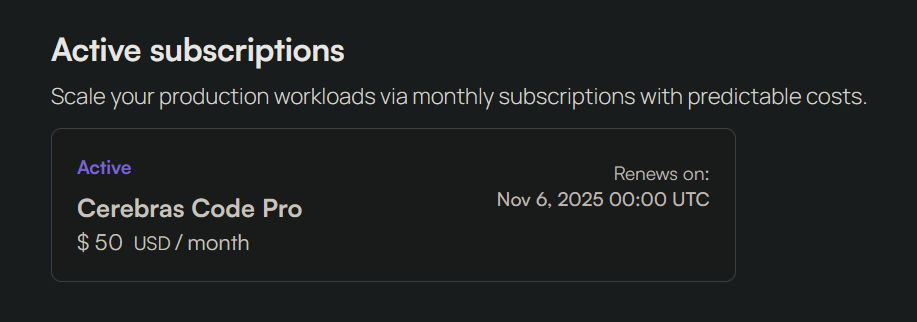

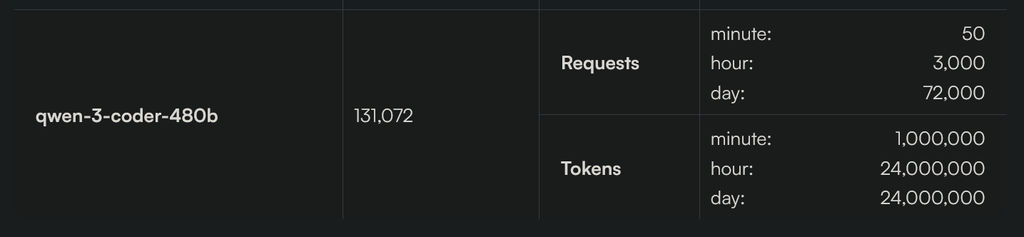

Essentially, that resolves my issue of not having access to enough AI, because they have a plan that's 50 USD a month:

It also comes with some pretty generous limits (higher than any other provider's); just look at how many tokens you can use:

131k tokens of context isn't great, but it's decent for most low to medium complexity programming tasks, even when you need to reference related functionality, although sometimes you'll need to have the model write its progress into a text file and refer back to it, especially after context compression.

At the same time, they now let you use 24'000'000 tokens per day. 24 million. That is seriously a lot. For comparison, if I needed that many tokens and paid per token for some decent models, here's what it would cost me if all of them were billed as input tokens or all as output tokens:

| Model | Cost per 24M Tokens |
|---|---|
| Sonnet 4.5 (input) | 72 USD |
| Gemini 2.5 Pro (input) | 30 USD |
| GPT-5 (input) | 30 USD |
| Sonnet 4.5 (output) | 360 USD |
| Gemini 2.5 Pro (output) | 240 USD |
| GPT-5 (output) | 240 USD |

Even assuming an 80% input and 20% output split, it'd be:

| Model | Cost per 24M Tokens |
|---|---|
| Sonnet 4.5 | 130 USD |
| Gemini 2.5 Pro | 72 USD |
| GPT-5 | 72 USD |

And that's per day. If we extrapolate to a month (assuming I hit those limits every day, which is unlikely, but for the sake of comparison):

| Model | Monthly Cost |
|---|---|
| Sonnet 4.5 | 3888 USD |
| Gemini 2.5 Pro | 2160 USD |
| GPT-5 | 2160 USD |
| Cerebras | 50 USD |
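To double-check the tables, here's the same arithmetic as a short script. The per-million-token prices are back-derived from the first table (for example, 72 USD for 24M Sonnet 4.5 input tokens is 3 USD per million), the 80/20 split is the assumption stated above, and a month is assumed to be 30 days. With those inputs, Sonnet 4.5 comes out at 129.6 USD per day, or about 3888 USD per month, and the Cerebras plan at roughly 1.67 USD per day.

```python
# USD per million tokens as (input, output), back-derived from the table above.
PRICES_PER_MILLION = {
    "Sonnet 4.5": (3.00, 15.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "GPT-5": (1.25, 10.00),
}
DAILY_TOKENS_MILLIONS = 24  # Cerebras daily token allowance
INPUT_SHARE = 0.8           # assumed 80% input / 20% output split
DAYS_PER_MONTH = 30         # assumed month length
CEREBRAS_MONTHLY_USD = 50


def blended_daily_cost(input_price: float, output_price: float) -> float:
    """Pay-per-token cost of one day's allowance at the assumed input/output split."""
    input_cost = DAILY_TOKENS_MILLIONS * INPUT_SHARE * input_price
    output_cost = DAILY_TOKENS_MILLIONS * (1 - INPUT_SHARE) * output_price
    return input_cost + output_cost


for model, (inp, out) in PRICES_PER_MILLION.items():
    daily = blended_daily_cost(inp, out)
    print(f"{model}: {daily:.1f} USD/day, {daily * DAYS_PER_MONTH:.0f} USD/month")

print(f"Cerebras: {CEREBRAS_MONTHLY_USD / DAYS_PER_MONTH:.2f} USD/day, "
      f"{CEREBRAS_MONTHLY_USD} USD/month")
```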

I rounded some of the results, but the difference here is clear. Plus, with Cerebras letting you do 50 requests per second, you can just let your IDE or whatever solution you use for agentic work fly and not worry too much about artificial rate limits. Maybe add a 1 second delay between requests if you want to be extra sure you won't hit any rate limit errors.
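If you'd rather enforce that delay on the client side than rely on a tool's setting, a minimal sketch could look like this. `RequestSpacer` is a hypothetical helper, not part of any IDE or API; the clock and sleep functions are injectable so the behavior can be tested without actually waiting.

```python
import time
from typing import Callable, Optional


class RequestSpacer:
    """Hypothetical helper: keeps at least `min_interval_s` between request starts."""

    def __init__(self, min_interval_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval_s = min_interval_s
        self._clock = clock
        self._sleep = sleep
        self._last_start: Optional[float] = None

    def wait(self) -> None:
        """Sleep only if the previous request started less than min_interval_s ago."""
        now = self._clock()
        if self._last_start is not None:
            remaining = self._last_start + self.min_interval_s - now
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_start = now
```

Calling `spacer.wait()` right before each API request keeps consecutive requests from a single thread at least `min_interval_s` apart; parallel agents running in separate processes would each need their own coordination.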

I cannot overstate how cool having a subscription like this is, and I earnestly hope that they don't discontinue it. It seems to me like a great way to attract more attention towards Cerebras, even though I can't begin to guess at the financial feasibility of it on their part, given how much a single one of their chips costs to begin with.

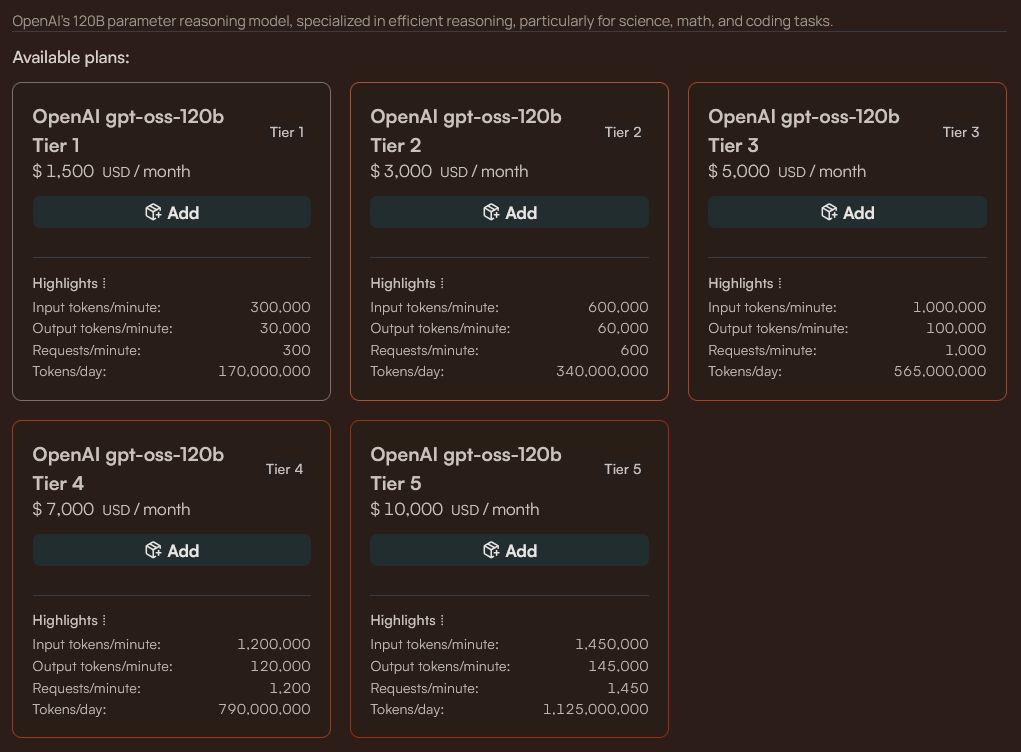

As an individual user, I'd say give them a shot! Just don't look at how expensive the other enterprise-oriented plans are:

So, with that in mind, I have a new daily driver: a subscription that doesn't make me feel like I'm being ripped off, while still leaving the door open for me to pay for other APIs when I need to (like getting feedback on a problem from several different LLMs, since their training data differs).

Surely 24 million tokens is such an outrageously large amount that I could never run into rate limits, right?

Let's burn down some forests

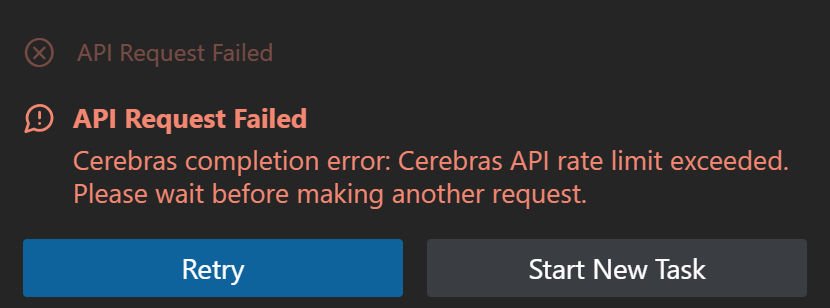

Wrong. It took me just a few hours:

The error you see here is from RooCode, currently one of my favorite solutions for agentic development, which has both chat and code modes, along with others for planning and a bunch of other useful functionality (multi-file reads, multi-file edits, reading large files bit by bit as needed, etc.).

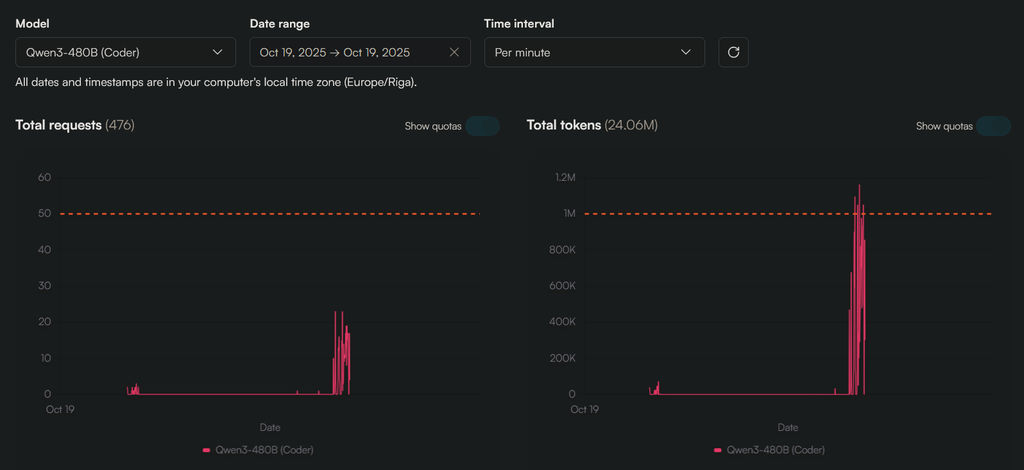

I was still surprised to see it. I thought it was the request rate warning, i.e. that the IDE had been a bit too speedy for what's allowed, but no: even after a minute the error was still there, and the Cerebras dashboard confirmed that my daily allowance was used up:

It's worth remembering that this didn't really cost me much: given the subscription I'm on (50 USD a month, or roughly 1.67 USD a day), those 24 million tokens cost a bit less than 2 USD (a bit more in practice, because my actual utilization is usually below those limits). Even at that good a price, it might seem like an insane amount.

Perhaps, but I did make a whole bunch of progress: about 90 components and about 40-60 utilities and project files carried over, a few dependencies added (which I had to confirm manually, because I don't allow terminal commands without my confirmation), a few passes of changes across those files as needed, some restructuring and so on. Even at 150 files, that still comes out to around 160'000 tokens per file (24'000'000 / 150), which might seem like a lot until you consider that the agent needed to read an instructions file, update a few progress files that persist after the context is condensed, re-read the instructions after the context is partially wiped, and reference library type information and other old code.

I was surprised at how quickly I burned through those tokens, but I'm afraid it's already over for me: the very next day, the same thing happened when I decided to refactor everything, make all of the TypeScript type checks pass and resolve some other linter issues. On another note, fuck the Vue SFC Compiler and its inability to handle complex TypeScript types, conditional types etc.

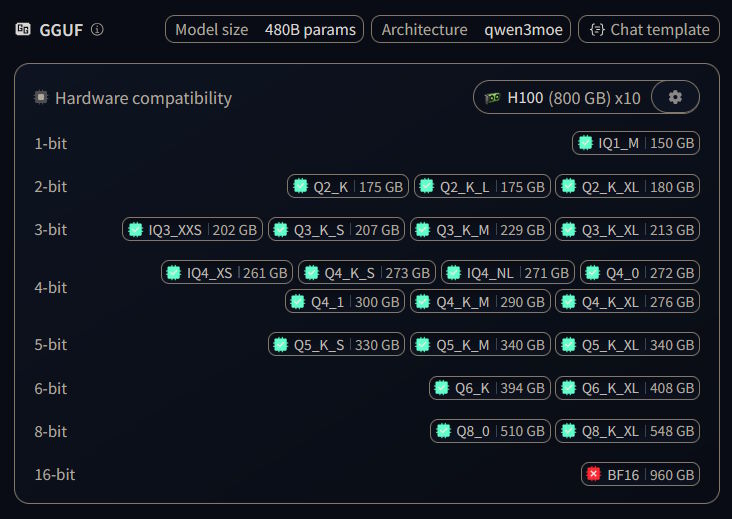

I'm like those people who drink multiple cups of coffee (or energy drinks) a day and then wonder why they always feel a bit anxious and why they sleep poorly. Out of curiosity: to run that model locally, I'd need around 10 Nvidia H100 cards, even with 8-bit quantization:
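As a rough sanity check: the weights alone take parameters × bits per weight / 8 bytes, so 480B parameters at 8 bits is about 480 GB, while the H100 variants discussed here have 80 GB each. The sketch below only computes that weights-only lower bound (the function names are just for illustration); the KV cache for long contexts, activations and framework overhead all come on top, which is why the practical card count ends up higher than this floor.

```python
import math

H100_MEMORY_GB = 80  # memory of the 80 GB H100 variants


def weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def min_cards_for_weights(params_billions: float, bits_per_weight: int,
                          card_memory_gb: float) -> int:
    """Lower bound on GPU count: weights only, no KV cache or runtime overhead."""
    return math.ceil(weights_gb(params_billions, bits_per_weight) / card_memory_gb)


for bits in (16, 8, 4):
    print(f"{bits}-bit: {weights_gb(480, bits):.0f} GB of weights, "
          f"at least {min_cards_for_weights(480, bits, H100_MEMORY_GB)} cards")
```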

Keep in mind that one of those cards costs around 25-30k USD, so I'd be looking at around 250'000 to 300'000 USD just for the cards, plus a total power draw of around 3500W. Even with all that, I'm not sure I'd reach Cerebras speeds. I'd certainly do better than most other providers since I'd have it all to myself, but even so, generating 24 million tokens would take a long time:

| Tokens per second | Hours |
|---|---|
| 100 | 66 |
| 200 | 33 |
| 300 | 22 |
| 400 | 16 |
| 1000 | 6 |
| 2000 | 3 |

If the cards run at full power for the whole duration, that comes out to between around 10.5 kWh and 231 kWh, or enough energy to heat somewhere between 112 and 2480 liters of water from room temperature to boiling. Every single day (except where it'd take more than 24 hours, obviously). That's like having a bonfire burning whenever all of those cards are going full send.
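The same estimate as a small script, assuming 3.5 kW of total draw (10 cards at roughly 350 W each, which is what the 3500W figure implies) and water heated from about 20 °C to 100 °C, using 4186 J/(kg·K) as its specific heat and 1 liter ≈ 1 kg. The figures in the paragraph above use the table's rounded-down hours (3 and 66), so the exact values printed here come out slightly higher; plugging in 10.5 and 231 kWh directly gives about 113 and 2483 liters.

```python
TOKENS_PER_DAY = 24_000_000
POWER_KW = 3.5                   # assumed: ~10 cards at ~350 W each, full power
WATER_SPECIFIC_HEAT = 4186       # J/(kg*K)
DELTA_T_K = 80                   # assumed: ~20 °C to 100 °C, ignoring evaporation
J_PER_KWH = 3.6e6


def hours_for(tokens_per_second: float) -> float:
    """Hours needed to generate one day's token allowance at a given speed."""
    return TOKENS_PER_DAY / tokens_per_second / 3600


def liters_heated(energy_kwh: float) -> float:
    """Liters (~kg) of water that energy_kwh could heat by DELTA_T_K kelvin."""
    return energy_kwh * J_PER_KWH / (WATER_SPECIFIC_HEAT * DELTA_T_K)


for tps in (100, 200, 300, 400, 1000, 2000):
    hours = hours_for(tps)
    energy = POWER_KW * hours
    print(f"{tps:>5} tok/s: {hours:5.1f} h, {energy:6.1f} kWh, "
          f"~{liters_heated(energy):.0f} L of water")
```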

It's back-of-the-napkin maths that's probably not entirely accurate, but with less efficient cards one can easily imagine more power usage than expected, with figures somewhere inside that super wide range. So in my case, I'm both thankful that Cerebras exists and a bit concerned about the future of our environment.

The line must go up

Yet, as I said, I crave more.

Parallel agents, each spending tens of millions of tokens a day. Agents controlling other agents, doing quality control on every change. Other agents calling tools like linters, type checks and builds to see whether the output is good. Agents taking my text and turning it into fully fledged spec documents, others reporting progress on the tasks and delegating down. Just endless iteration to check and cross-check and verify, improve and fix and enforce. Loop after loop after loop until it looks like something out of Warhammer 40k. A gargantuan system burning our forests and boiling our oceans. I crave to look upon the gray and brown skies and know that I will never see the sun again, for its light is swallowed by all of the smog, only its rays heating the greenhouse gases with each passing day.

All of that, to make working on shitty CRUD apps easier while I sip whatever drink I'm set on that day.

This should read as a critique of AI: of all the consequences and empty promises made by people trying to sell it, all the slop being created, the ruined jobs of translators and people who transcribe audio, the graphic design that can no longer be someone's passion because their bosses push for more AI slop and couldn't care less about the craft, artists having their entire styles copied and stolen, same with books, same with code... At the end of the day, however, I like the power.

The problem is that what we want to do might need an order of magnitude, or maybe two, more resources, time, training, research and all that, not even to get close to AGI, but just to make the most of the technology. I genuinely see how programming could become more about planning and less about resolving stupid compilation errors, even if I'd still have to look through all of the files and my job would become very code review centric. Even if the tools aren't excellent right now, even if people aren't familiar with which problems LLMs are good and bad at, even if it all burns down at some point and takes our economy with it...

Cerebras gave me a glimpse into how good things could be. It's a bit like a Spring Boot app that compiles and starts in about 10 seconds instead of 2 minutes. One of those leaves you pleasantly surprised, the other makes you want to jump off a bridge. Presently, most other LLM providers are somewhere in the middle of that spectrum.

Some genuinely cool stuff

Okay, I'm done being dramatic, time to shake things up!

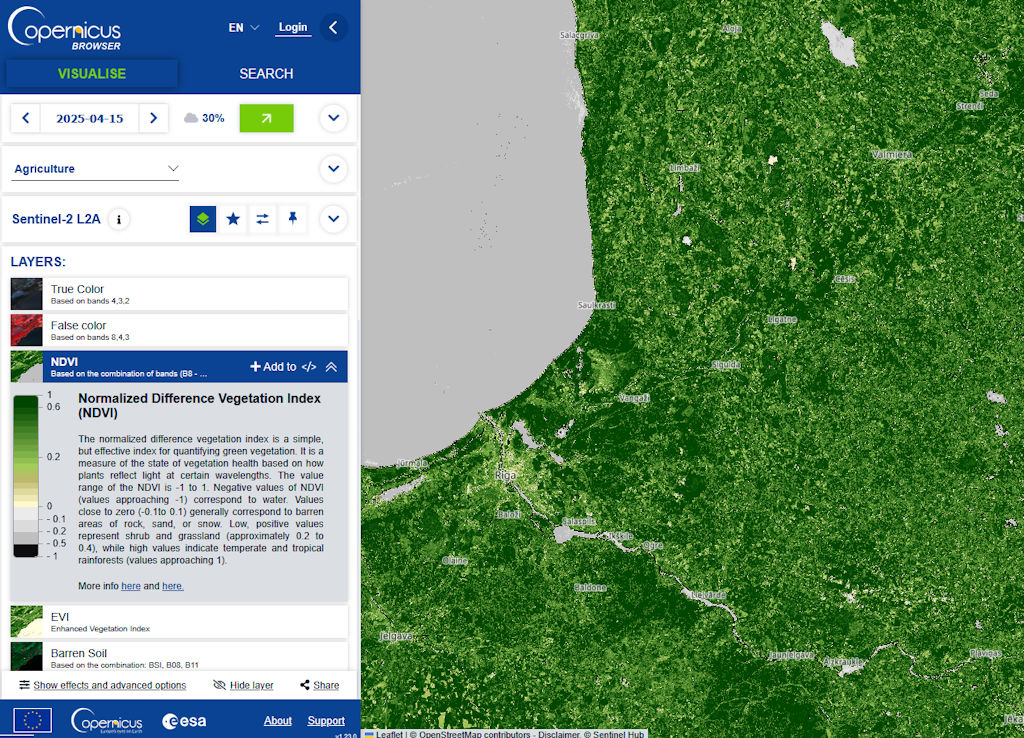

There's also cool stuff you can do. For example, did you know that Sentinel-2 data is available to everyone and is updated around every 5-10 days?

It's not just regular pictures, but multi-spectral data, meaning that you can calculate all sorts of indices, like NDVI (Normalized Difference Vegetation Index), which tells you the state of the vegetation on the ground in any given area!
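For reference, NDVI is computed per pixel as (NIR - Red) / (NIR + Red); for Sentinel-2 that's typically band B08 (near-infrared) and band B04 (red), both at 10 m resolution. Here's a minimal, dependency-free sketch, assuming both bands have already been read into equally sized grids of surface reflectance values; a real pipeline would more likely work on numpy arrays loaded with a raster library. Values near +1 roughly indicate dense, healthy vegetation, values around 0 bare ground, and negative values usually water.

```python
from typing import List, Optional

Band = List[List[float]]


def ndvi(red: Band, nir: Band) -> List[List[Optional[float]]]:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); None where both bands are 0."""
    result = []
    for red_row, nir_row in zip(red, nir):
        row: List[Optional[float]] = []
        for r, n in zip(red_row, nir_row):
            total = n + r
            row.append((n - r) / total if total != 0 else None)
        result.append(row)
    return result


# Tiny made-up 2x2 example, not real Sentinel-2 data.
print(ndvi([[0.05, 0.20], [0.0, 0.30]], [[0.45, 0.20], [0.0, 0.10]]))
```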

Not only that, but if you have historical data available (you do), you can even figure out what types of crops are growing in a given area and combine that with the other figures and see the overall health of every single field in your country, if you're so inclined.

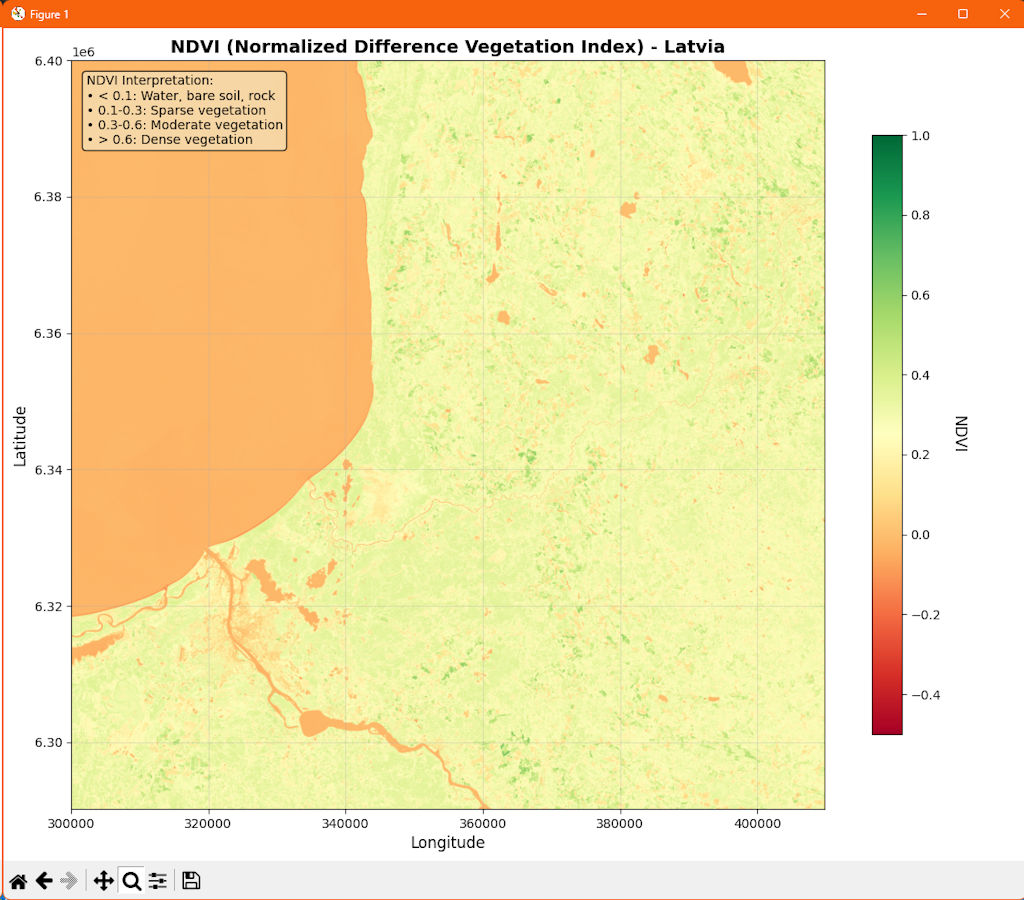

Why bring up AI? Not so much because of the methods used in the classification system (less generative AI, more formal methods), but because I could whip up a quick prototype that generates simple NDVI visualizations from the real data in less than an hour:

Notice how the latitude and longitude figures are all screwed up? The data itself is okay, but I'd definitely have to fix that. Here's the important part, though: at least I'd have a clear starting point to iterate upon! Oh, and you'll notice that the colors are different, but that's mostly because the scales aren't the same.
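On the colors: if each rendering stretches the colormap between that image's own minimum and maximum NDVI, the same value can land on a completely different color from one image to the next. A minimal sketch of the difference, assuming colors are picked from a colormap by a 0..1 position; the helper names are made up for illustration.

```python
from typing import List


def to_unit_fixed(value: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Map an NDVI value onto 0..1 using a fixed range shared by all images."""
    clamped = min(max(value, lo), hi)
    return (clamped - lo) / (hi - lo)


def to_unit_minmax(values: List[float]) -> List[float]:
    """Map values onto 0..1 using this image's own min and max (per-image stretch)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5 for _ in values]  # flat image: put everything mid-scale
    return [(v - lo) / (hi - lo) for v in values]


# The same NDVI value of 0.2 in two different images:
print(to_unit_minmax([0.2, 0.8])[0], to_unit_minmax([0.0, 0.2])[1])  # opposite ends
print(to_unit_fixed(0.2))  # fixed scale: same position in both images
```

Pinning every rendering to the full -1..1 range (or any other shared range) keeps colors comparable between images.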

Anyone saying that the technology isn't useful clearly hasn't seen how it makes the friction of starting new projects, doing those initial iterations or handling menial repetitive tasks dissipate in front of your eyes. It's amazing.

Summary

You still need to babysit AI. It is flawed and it has very real limitations. However, it can also be immensely useful, and trying out Cerebras was an eye-opening experience: it feels like it's on a completely different level.

At the same time, it's almost inevitable that corpos will eventually try to squeeze as much money out of us as possible, because currently the AI companies are simply competing to be loss leaders and are nowhere near turning a profit (albeit that's because they're racing to make better models, while inference by itself would be profitable).

Yet, I'm convinced that the underlying technology is solid, and while execs all over the place have a solution for a problem they haven't found yet (and are trying to sell it regardless), I don't care, because I'm already benefiting. This is in contrast to the earlier blockchain hype, which still remains niche (with a use case or two here and there), as well as cryptocurrency, which often ends up being a massive rugpull, even if in theory it could simplify money transfers a whole bunch (though transfers inside the EU are thankfully already fast and easy).

At the same time, it would be cool if we didn't actually need to ruin our ecosystems. I guess I'll have to satisfy that craving of mine by playing Factorio instead:

(I think there's a certain charm in how, in the game, ruining the local ecosystem with your factory's pollution makes alien bugs come after you and gives you an actual reason to build more base defenses. Bit of a tangent, but hey, it's my blog.)
