Two Intel Arc GPUs in one PC: worse than expected

Date:

Over the years, I've had quite a few different GPUs:

- GeForce 9800 GT (this one melted to the point where reflow soldering wouldn't help)

- GeForce GTX 650 Ti (this one ended up being too weak for most games, I gave it away to a friend)

- Radeon RX 570 (this one ended up not having enough VRAM for modern games)

- Radeon RX 580 2048SP (this one was pretty good, but a bit unstable, especially in VR)

More recently, however, I've moved my main PC over to some Intel GPUs, due to their nice amount of VRAM, XeSS being a bit better than FSR in my experience (even though the support for it isn't quite as widespread), but most of all, them also being affordable when Nvidia seems to be charging an arm and a leg for their GPUs. While I would have otherwise gotten a new AMD GPU, I wanted to support more market competition and the price felt just right.

Initially I started with the A580 and thought that it wasn't all that good, but it turns out that even after the driver issues had been mostly patched out, it was actually my CPU that was the problem: the Ryzen 5 4500 (which I got for ReBAR support, not wanting to hack around with my old Ryzen 7 1700, which wasn't officially supported). Essentially, all of the synthetic benchmarks that I could find online were lying to me and it wasn't as good as my previous CPU, quite possibly due to only having 8MB of L3 cache, despite me being able to otherwise OC it pretty well.

I would get low framerates in games, with the causes for that not being entirely obvious: it would almost never hit 100% usage but after upgrading to the Ryzen 7 5800X all of the issues seemed to disappear even in combination with the A580. In other words, suddenly the framerates were way better, not just when it comes to the B-series card where people suggested that there's driver overhead for the older CPUs, but also on the A-series card as well.

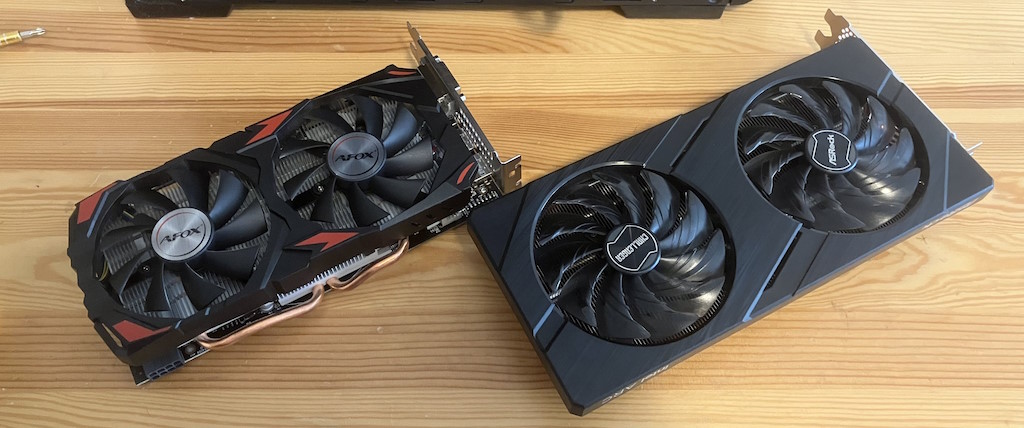

By then, I had already acquired the successor by Intel (somehow managing to get the limited edition for a pretty okay price), which means that I had two Intel GPUs:

Now, they are decently powerful on their own in combination with the better CPU, but I have noticed that if I try to play a demanding game and record/stream it at the same time, the encoder still seems to get overloaded with either of the GPUs and there's no real way for me to tell the OS:

"Hey, I want you to prioritize the recording, so the game framerate would just dip more, but the overall recording/stream would remain stable."

Because a lot of people suggest that the Intel Arc cards are pretty good for encoding and that you could get a card just for that, it seemed like it'd be a pretty good setup. The A580 that I have is essentially a spare that also has the pretty nice Intel Quick Sync Video encoders in it, meaning that I could use the B580 for games or anything else and the A580 for the encoding, or even for driving the other monitors (I have 4 in total).

It also seemed like a good idea because I could make the most of the hardware that I have: one of my friends has an RTX 4080 Super and they still get similar issues while trying to both play games and record/stream at the same time. The encoder just plainly gets overwhelmed, despite the card costing around 1000 USD, so about twice the total of what I paid for both of my cards. In my case, it seemed that using two separate GPUs would sidestep the whole issue entirely by giving each of them a task to do.

Pretty simple, right?

The hardware issues of a two GPU setup

Well, not really.

I should probably get this out of the way first and foremost: I hate computers. Not computing, but rather the hardware and setting it up. In my years of working on them, I have NEVER had a case where I put things together and everything just works off the bat.

Instead, I've had failing PSUs and HDDs, and bent motherboards because whoever came up with the ATX 24-pin connector is evil, though the locking mechanisms being trash also pretty much applies to the 6/8 pin GPU connectors. Front panel connectors are a mess, for whatever reason half the time the USB 3 headers do nothing, CMOS resets are unpleasant to do because the manufacturers put the battery under the GPU, sometimes the GPU blocks off SATA connections AND also the PCIe slots where I could put a splitter card, the cases are often unergonomic, CPU coolers are hard to install. I'm not sure whether all of this is due to trying to do things on a budget, but I absolutely hate working with the hardware and it has only ever made me miserable.

Ergo, when you see me throwing both of the GPUs in and see an ominous bundle of wires, know that I've given up on being organized and it's all in the name of getting things working:

Dust aside, the story here is that even with a better cooler, the Ryzen 7 5800X still puts out a bunch of heat. To make this more bearable, I used the Ryzen Master utility to dial it back a little with the Curve Optimizer and the PBO limits:

- PPT: 170 W (from 180 W)

- TDC: 95 A (from 100 A)

- EDC: 115 A (from 120 A)

The good news is that it works, the bad news is that I still needed more airflow. Because I can't really be bothered to go through the trouble of setting up an AIO right now, I just opted for a bunch of case fans, hooking them up to monitor the CPU temps and have a pretty aggressive curve near thermal throttling, such as hitting 100% across everything around 87C.
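For anyone curious what such a curve looks like in numbers, here is a minimal sketch of a temperature-to-fan-speed mapping using linear interpolation. Only the last point (100% at around 87C) comes from my setup as described above; the other breakpoints and the `fan_duty` name are made up for illustration, and the real curve lives in the BIOS/fan controller software rather than in a script.

```python
# Hypothetical fan curve: (CPU temperature in C, fan duty in %).
# Only the final point (87C -> 100%) reflects the setup described above;
# the earlier breakpoints are illustrative assumptions.
CURVE = ((40, 30), (60, 50), (75, 75), (87, 100))


def fan_duty(temp_c, points=CURVE):
    """Return the fan duty cycle (%) for a CPU temperature.

    Below the first point the duty is clamped to the minimum,
    above the last point it is clamped to the maximum, and in
    between it is linearly interpolated between neighbours.
    """
    if temp_c <= points[0][0]:
        return float(points[0][1])
    if temp_c >= points[-1][0]:
        return float(points[-1][1])
    for (t0, d0), (t1, d1) in zip(points, points[1:]):
        if t0 <= temp_c <= t1:
            return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


for temp in (35, 50, 70, 81, 87, 92):
    print(f"{temp}C -> {fan_duty(temp):.1f}% fan duty")
```

The steep last segment is what makes the curve "aggressive": the fans stay relatively quiet at idle and only go all out when the CPU gets close to throttling.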

Neither the old nor the new motherboard had 5 case fan headers, so I just opted for getting a fan controller. The problem there was that it has a whole bunch of wires and there's not enough space for me to put them on the back side of the case. Thus, there's the ominously hanging bundle of wires, contained only by a liberal application of duct tape. If we're going by Warhammer 40'000 logic, that is where the Machine Spirit lives.

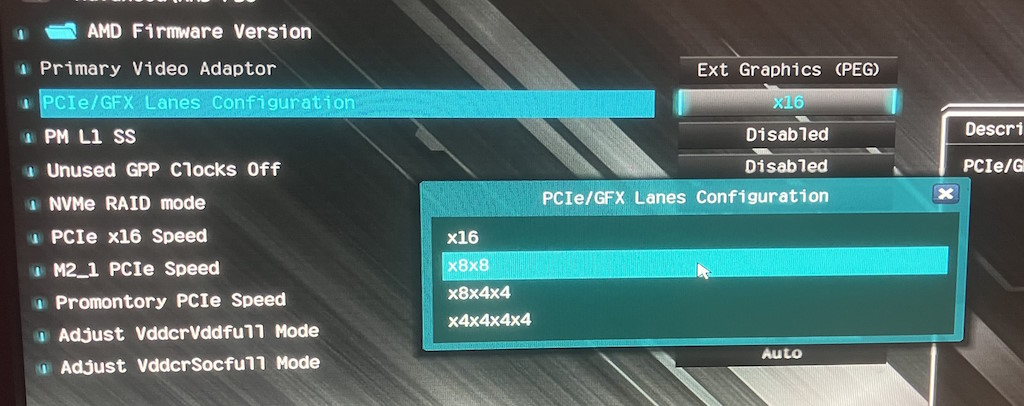

The problem of the actual dual GPU setup, of course, was the fact that the second GPU wasn't being detected at all. I dug around the BIOS and found some options for splitting up the PCIe lanes, which didn't seem like a bad idea, given that the B580 only uses a PCIe 4.0 x8 link (even if I only have a PCIe 3.0 motherboard), so giving up half of the x16 slot shouldn't be a big deal:

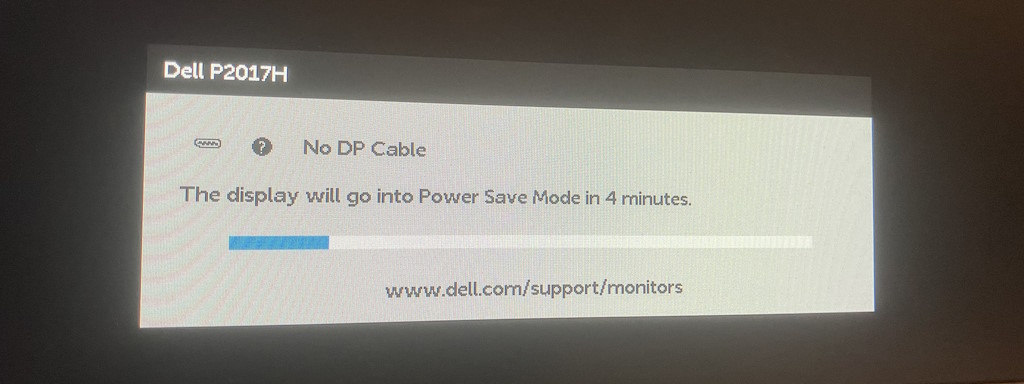

Of course, that was a mistake, as I found rather quickly, because I went from 1 working GPU to 0 working GPUs and would get no video output at all:

I knew that I had selected the wrong setting, but I wasn't sure what the actual issue was: why would x8x8 cause problems, when x16 had worked just fine all this time? That was quite puzzling. At first I lamented the fact that the BIOS doesn't actually attempt to explain pretty much anything (the poster child of poorly documented software; I've almost always seen BIOSes that have dozens of oddly named options, but never anything remotely resembling a good explanation of what most of those do; it's like the manufacturers hate you), though thankfully I did notice a QR code which could bring me to a better source of information:

That, of course, didn't help me. It just links to the PDF of the motherboard documentation, which doesn't even explain the exact options, just that some of the lanes seem to be shared. I find it quite distasteful that the motherboard or the computer as a whole doesn't seem to have that much status output about what's going wrong, or even what might be the expected state of everything. For example, is it really too much to ask to put like 10-20 LEDs on the motherboard that would indicate the state of each of the connected components, as opposed to a black box with spinning fans but no other signs of life?

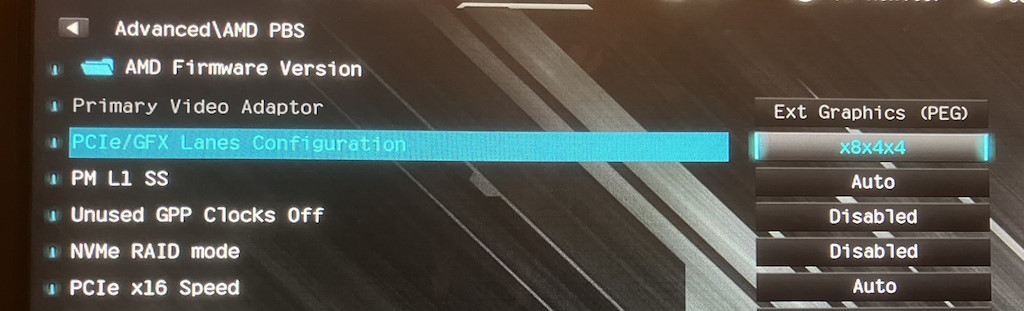

This was one of those cases where I had to mess around until things finally started working (with a few times of pulling the CMOS battery in the middle of that), with me eventually settling on the x8x4x4 preset, still giving my main GPU 8 lanes, 4 lanes for the secondary GPU (no big deal for encoding) and 4 lanes for... something else:

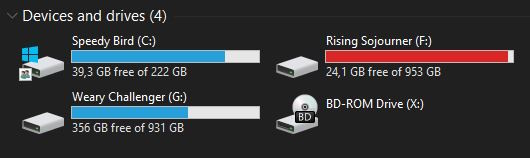
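To put the x8x4x4 split into rough numbers, here is a small sketch that estimates the theoretical one-direction bandwidth of a PCIe link. It only accounts for the per-lane transfer rate and the 128b/130b line encoding that PCIe 3.0 and 4.0 both use, so real throughput will be lower; the `pcie_gb_per_s` helper is just a name I picked for the example.

```python
# Raw per-lane transfer rate in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0}


def pcie_gb_per_s(gen, lanes):
    """Approximate one-direction link bandwidth in GB/s.

    Applies the 128b/130b encoding overhead and converts bits
    to bytes; packet/protocol overhead is ignored.
    """
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


links = [
    ("PCIe 3.0 x16 (whole slot)", 3, 16),
    ("PCIe 3.0 x8 (main GPU after the split)", 3, 8),
    ("PCIe 3.0 x4 (secondary GPU)", 3, 4),
    ("PCIe 4.0 x8 (what the B580 could use)", 4, 8),
]
for label, gen, lanes in links:
    print(f"{label}: ~{pcie_gb_per_s(gen, lanes):.2f} GB/s")
```

On a PCIe 3.0 board the main card ends up with roughly 7.9 GB/s and the secondary one with roughly 3.9 GB/s in theory, which should still be plenty for a card that mostly just receives frames to encode.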

The next problem was that suddenly like half of my drives were no longer being detected, even though this time around I wasn't using a PCIe extension card or anything of the sort, just the SATA ports on the motherboard itself. Yet, the drives were not there:

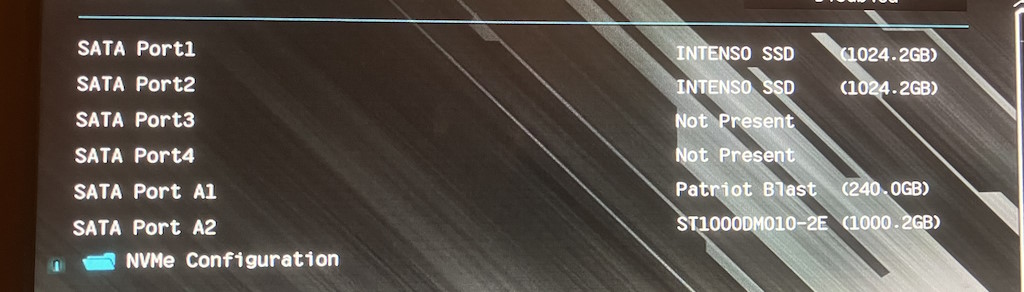

Nor did they show up in the BIOS either, for whatever reason they were just not being detected:

Many folks won't be affected by this, but I do have an interesting storage setup, where I just use a bunch of cheap Seagate Barracuda 1 TB HDDs that I got back when they were cheap for a little bit, around 40 EUR for each, for the storage of my files. For gaming and boot drives, however, I use SATA SSDs because they do seem to both be affordable and fast enough.

Eventually, I dug through the manual and realized that the culprit might be the M.2 NVMe drive that I intended to use for dual booting Linux in the future (because my current setup of splitting the same SSD into partitions, some for Windows and some for Linux distros, isn't entirely comfortable): it might be eating up the PCIe lanes that would otherwise go to some of the SATA ports.

So, I removed it:

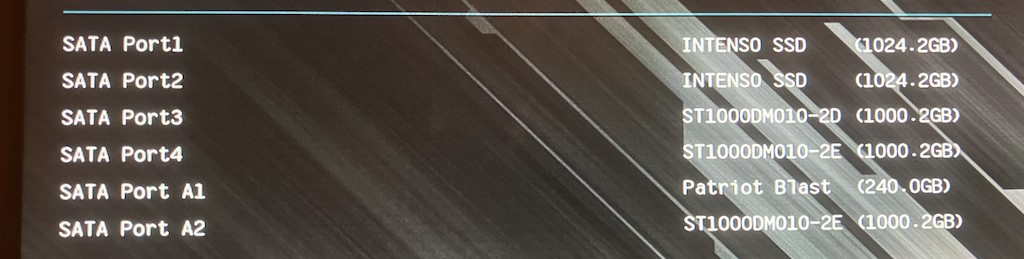

And suddenly my issues were resolved and all of the drives started showing up properly, both in the BIOS:

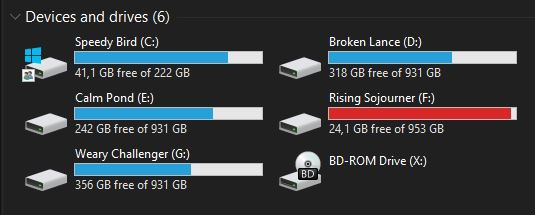

As well as in the OS itself. It seems like I won't be able to use the NVMe M.2 drive alongside everything else, but that's a problem for the future:

With all of that frustration out of the way (and no actual answers, rather just toggling a few BIOS settings until things start properly working), the PC was finally brought back to life and now I had a setup with two Intel Arc GPUs, side by side.

Here's a curious detail along the way: I don't know why the older A580 needs two 8-pin connectors because it doesn't really draw that much power and it doesn't seem to be like my old RX 570 card that had an 8+6 setup, where you could just leave the 6-pin connector unplugged if you didn't intend to OC: with the A580, for whatever reason, only plugging in one of those wouldn't work.

For now, I'm happy that the setup works, though if I did ever intend to fix this cable management, then I'd also probably want to look in the direction of a Y splitter cable for the A580:

Despite the shared lanes, it didn't actually seem like there was much of a negative effect on the I/O of the system, even when everything is loaded at the same time (sequential tests here, because the random ones are horrible for HDDs, thankfully that's not the workload that I need them for, more like storing all sorts of files and movies and whatnot), so that was nice to see:

Finally, I would be able to discover why many of my friends recommended against a dual GPU setup and whether this would really be workable.

The software issues of a two GPU setup

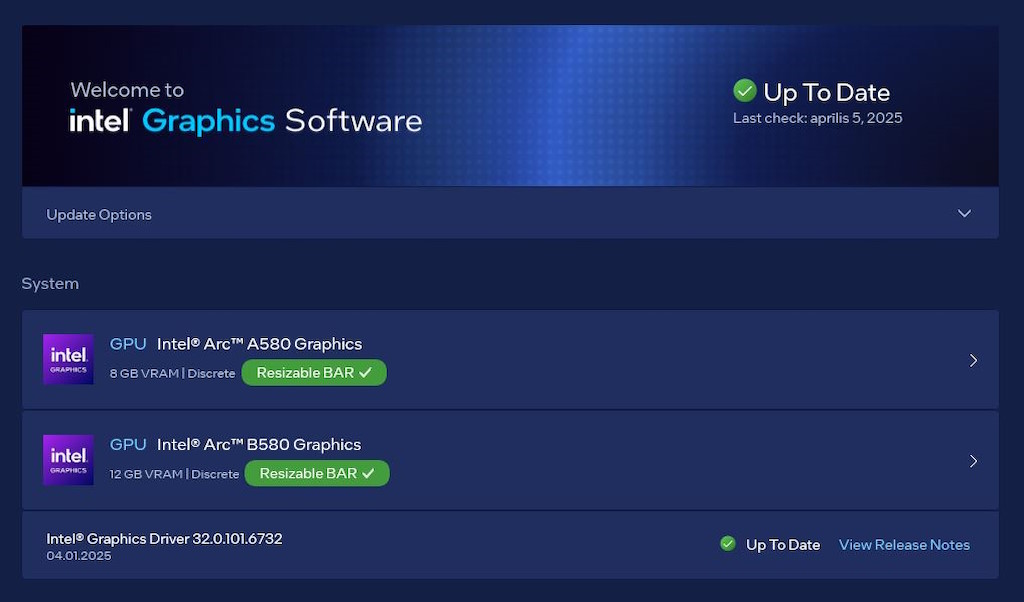

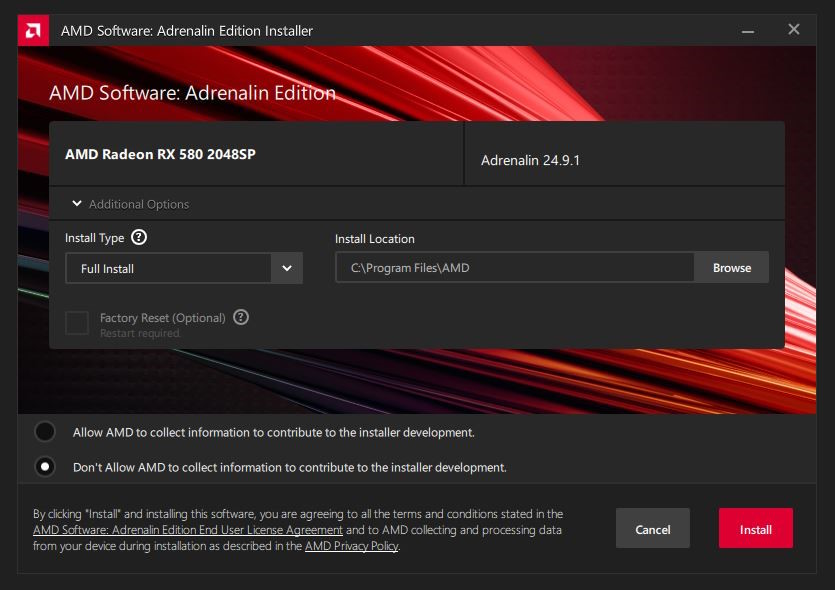

In theory, there shouldn't actually be that much that's difficult about two GPUs running in the same system, as long as you don't expect graphical workloads to be split among them (like SLI), but rather just want to delegate some tasks to them. It was nice to see that I wouldn't even have to use DDU because both of them were detected and working out of the box, though I did run a regular GPU update with the clean install option some time later:

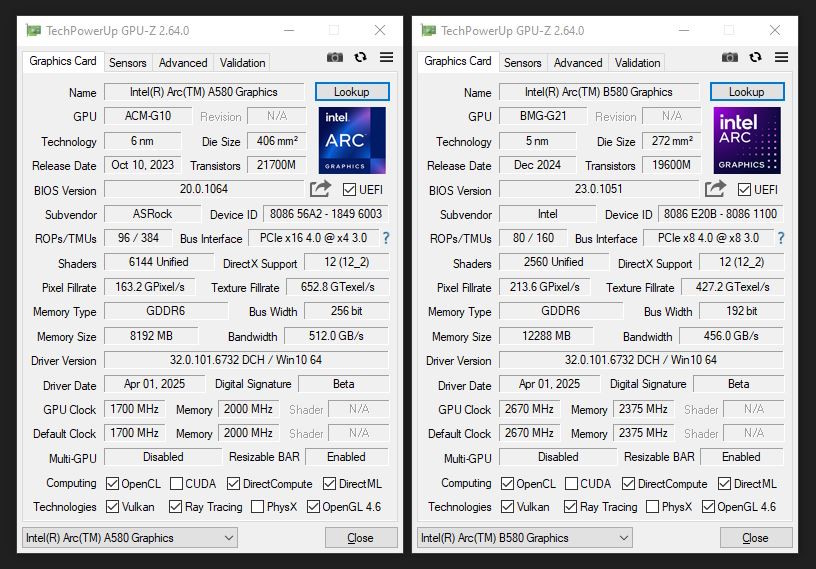

Here's the GPU-Z output, in case anyone is curious. The ASRock card is actually pretty good and I did OC it a bunch previously, but wanted to mostly keep it stock for now, just to not overload my PSU (for what it's worth, 650W seems to be mostly sufficient for a 190W + 150W GPU setup and a 100W CPU):

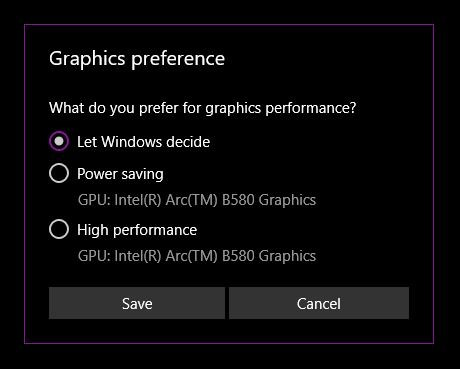
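The PSU math is simple enough to sketch out. The wattage figures below are the rough ones mentioned above; everything else in the system (drives, fans, motherboard) is not counted, so treat the headroom as an optimistic upper bound rather than a measurement.

```python
PSU_WATTS = 650

# Rough component figures from the text above; the rest of the
# system (drives, fans, motherboard) is deliberately left out.
loads = {"gpu_1": 190, "gpu_2": 150, "cpu": 100}

total_watts = sum(loads.values())
headroom_watts = PSU_WATTS - total_watts
utilization = total_watts / PSU_WATTS

print(f"Estimated load: {total_watts} W of {PSU_WATTS} W")
print(f"Headroom: {headroom_watts} W ({utilization:.0%} utilized)")
```

That leaves a bit over 200 W on paper, which is why I'd rather not add a GPU overclock and its power spikes on top.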

There were also immediate problems on the software side though, notably the fact that Windows 10 gets pretty confused. Apparently in Windows 11 you get a nice dropdown for when you want to set which programs should use which GPU, but in Windows 10 it just tells you to go frick yourself. There are some support questions about how to change it into something more sane, but no actual answers, leaving us with something like this:

Apparently an Insider Build had such a feature, but sadly I'm an outsider, so no dice there.

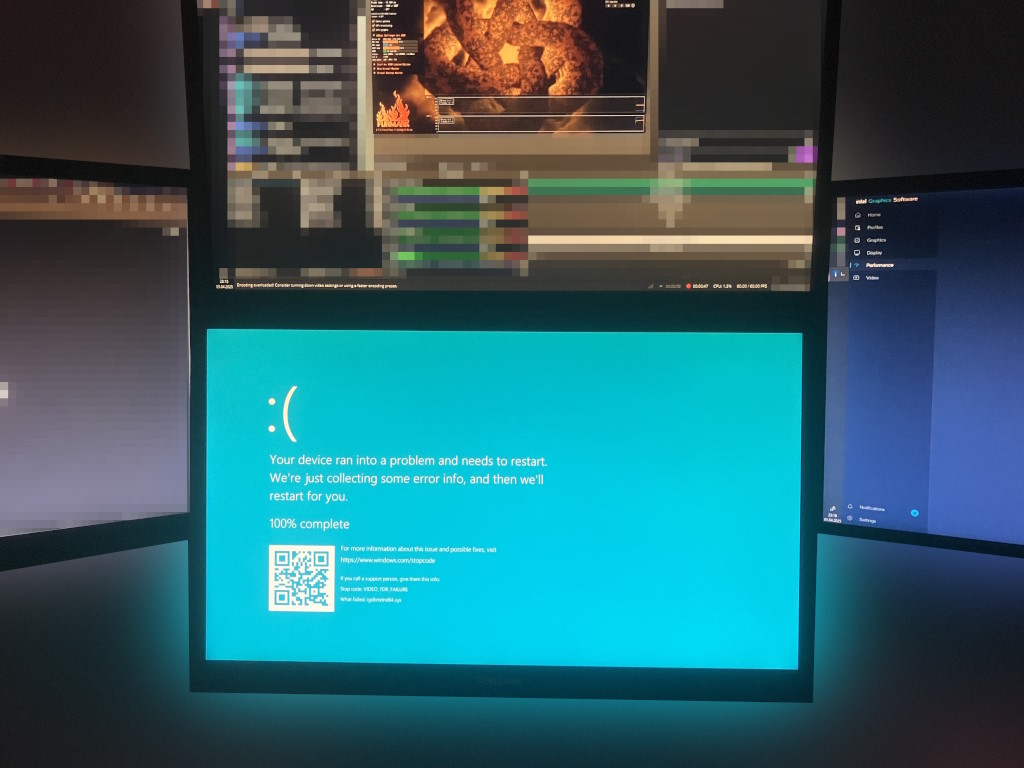

Furthermore, I saw a really interesting type of BSOD while running some stress testing (that I couldn't replicate later), where the main GPU was loaded to the max with Furmark and the other was used for encoding a video of me doing that, using Open Broadcaster Software. This led to the monitors connected to the main GPU showing the typical BSOD screen, but the others just freezing on whatever was the last visible picture:

I've never really seen that before.

Also, you might have noticed that only the main screen was connected to the B580. My idea was that I could perhaps lower the load on the main game GPU by letting the secondary one take over rendering whatever is on the other monitors, however this seems to have actually made things a lot worse for reasons that once more aren't entirely clear to me.

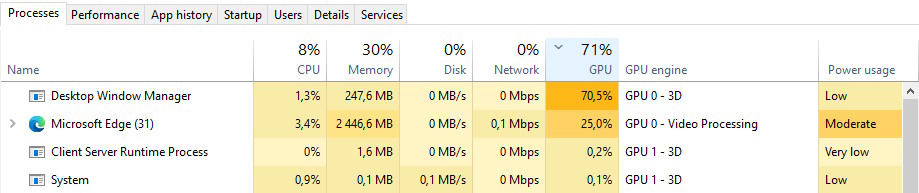

For example, when I would have videos (like YouTube) playing in a browser that's on one of the other monitors, I'd get very high GPU load on the main GPU, the culprit being the Desktop Window Manager process:

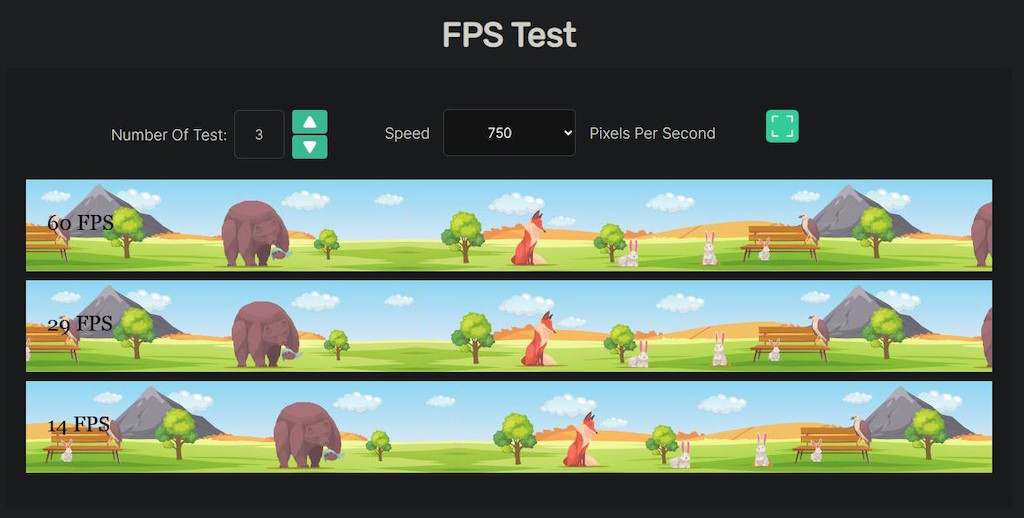

This wasn't just related to video playback, but anything that would cause a lot of things to be drawn often. For example, if I used a regular FPS test page, then everything would be fine with one of the monitors running the test:

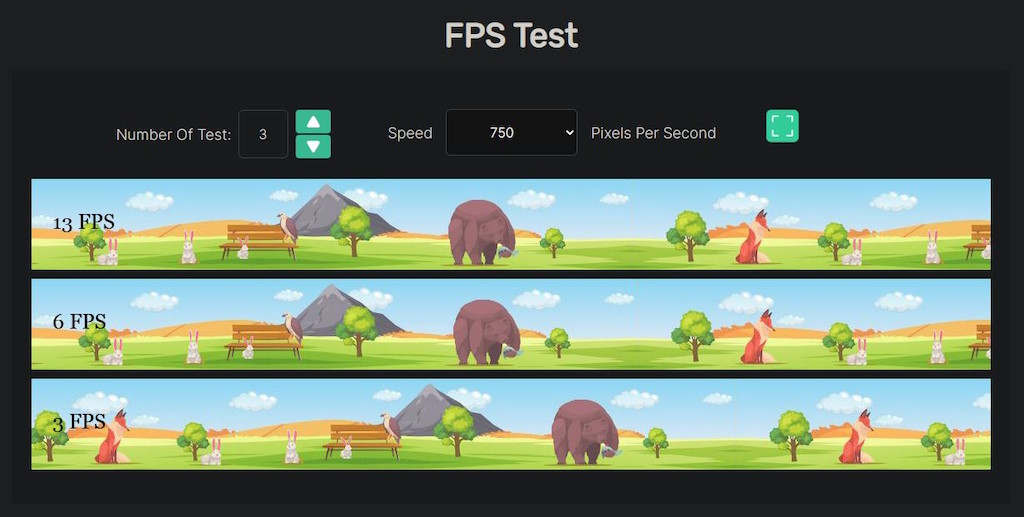

But as soon as I'd launch multiple windows of the same test, one on each monitor, suddenly the framerates across everything would fall horribly. This would happen with games, OBS, or frankly any piece of software with any kind of visuals going on. As you can probably tell, a regular browser tab overloading the main GPU and making the FPS dip to <20 isn't remotely acceptable:

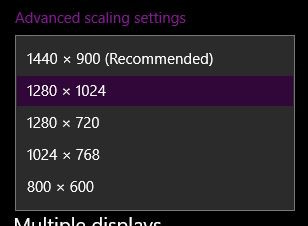

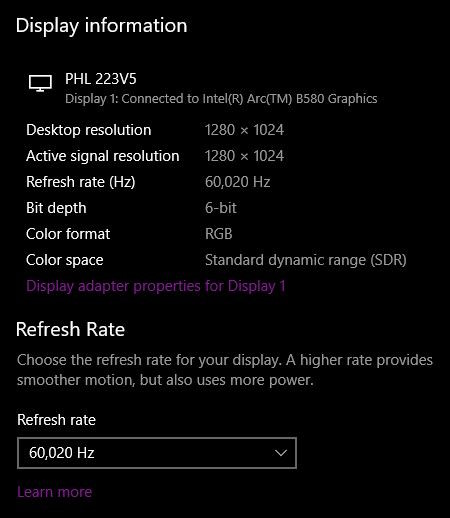

Another interesting issue was that after a restart and just trying to use the B580 for all of the monitors, suddenly one of the monitors only had lower resolutions available, as opposed to the regular 1920x1080 at 60 FPS, in contrast to all of the other monitors I have:

It was as if suddenly the 1920x1080 isn't even a supported resolution by the monitor itself:

I also noticed an interesting thing, which is that the refresh rate would show up as 60.020 Hz, no idea where that particular figure comes from:

I did eventually narrow it down to probably having a bad adapter. I need to use adapters because only one of my monitors has an HDMI port, whereas all the others only have VGA/DVI available, meaning that the contents of my cabinets of computer supplies look more or less like this:

Either way, I'm not spending 400 EUR to replace my current monitors, because they work and the OS suddenly being weird is a stupid reason to do so. I just ordered a new 10 EUR adapter, so it should be okay eventually; for now I grabbed another spare out of a package and it seems to have solved the issue.

I'd be surprised if you have gotten this far and aren't starting to at least slightly believe that every single attempt of mine to mess around with hardware inevitably leads to things breaking, even in ways that shouldn't be possible due to me not interacting with said hardware in any abnormal ways. Why on earth would the adapter decide to suddenly die, after having worked okay for months? I guess I need to make a sacrifice of an old HDD to make the Machine Spirit happy or something.

I was shortly going to hit even more issues, ones without immediate solutions.

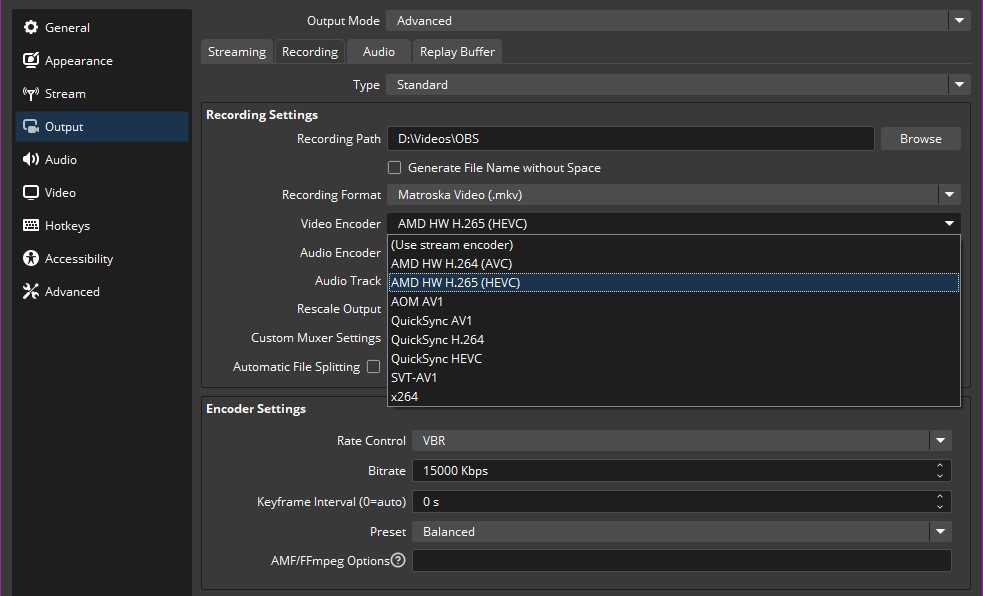

You can't have two Intel Arc GPUs and choose which one to use for encoding

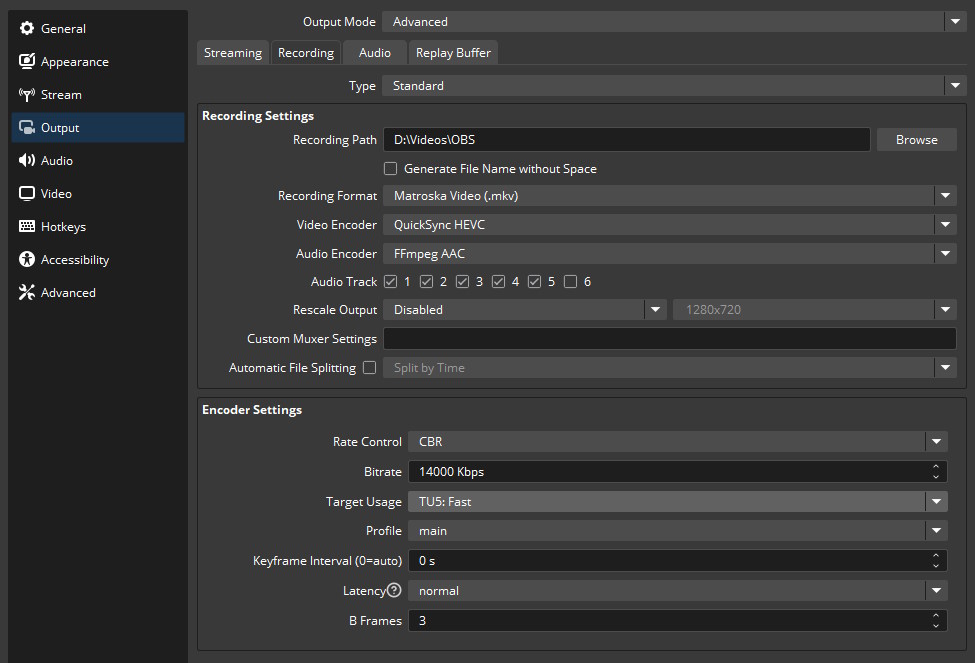

The biggest problem, however, was that if I have two Intel Arc GPUs in the same system, then I can't choose which one I want to use for encoding on Windows 10. Let me repeat that: I put in a second GPU in the system for the explicit purpose of doing video encoding with it (after realizing that I can't drive the other monitors with it without weird overloading issues) and I can't do that one exact thing.

Within OBS you get a dialog to choose what sort of an encoder you want and for Nvidia GPUs you do get a nice dropdown to indicate which one should handle the encoding (see under "Advanced Settings"), whereas for Intel Arc GPUs there is no such thing:

In other words, when you have two Intel Arc GPUs on a regular install of Windows 10 (I cannot test Windows 11 right now), it will use the main GPU, the same that you use for playing games, the very same where games that put it under a bunch of load make the encoder overloaded, making the setup with two GPUs completely useless.

The sort of good news is that OBS is open source and if anyone was feeling brave, they could just write the missing functionality themselves, modeled on what is already present for Nvidia, but frankly that's a bit above my paygrade for now. In other words, "just write the missing code" is doing a lot of heavy lifting here, especially when C isn't my main language and I'm very demotivated already.

Giving up, two vendors, half the issues

I decided to sidestep the whole issue in its entirety. While that RX 580 was a bit too weak for games and doesn't have support for AV1, it still is a GPU, still doesn't draw too much power and still should be good enough for encoding, so I pulled it out of storage:

Compared to the A580 it is a bit less advanced, a bit less power efficient (for what it is), but also will save me dealing with at least some of these weird issues:

I left the B580 in as my main GPU because I'm quite satisfied with it and just replaced the A580 with the RX 580. Still a very snug fit, still need some tape to prevent the cables going to the front panel from hitting the fans and slowing things down, still can't be bothered to clear all of the dust, because at this point I'm quite sure that the computer would just burst into flames if I tried, but hey, it's a setup:

Installing the AMD Software wasn't difficult either and it just worked as expected in combination with the Intel Graphics Software, didn't even need to use DDU for this either:

Curiously, when I last had the RX 580 in as the main GPU for that system, I actually needed to use an older driver version, because the latest ones actually made VR quite unstable, though thankfully I won't have to use that GPU for VR anymore (while the B580 isn't officially supported, at least Virtual Desktop has my back there).

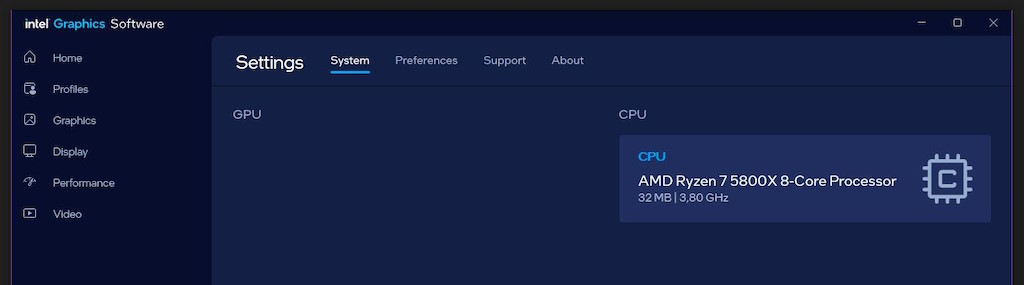

There was a little bit of weirdness, such as for a bit Intel believing that I have no GPUs present, but that resolved itself after a restart:

So where does that leave me? Finally I can choose which GPU to do the encoding on, because now I have one type of encoder supported by each card and the RX 580 still does support H265 (HEVC) for recordings, which has pretty passable quality for most of the things I might want to do:

I did test out how far I can push the older card and it became clear pretty quickly that I can't actually try to stream with H264 at 1080p 60 FPS, because it gets overloaded. H265 at the same resolution and framerate doesn't seem to cause much load on the GPU at all; I'm not sure why H264 at those settings makes it shoot up to 100% utilization and breaks everything.
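For a sense of scale, here is a quick sketch comparing the raw pixel throughput of 1080p at 60 FPS with a lower resolution such as 720p at 60 FPS. This is only a crude proxy for how much work an encoder has to do, and it says nothing about why H264 and H265 behaved so differently on this card; the `pixel_rate` helper is just an illustrative name.

```python
def pixel_rate(width, height, fps):
    """Number of pixels the encoder has to process per second."""
    return width * height * fps


full_hd = pixel_rate(1920, 1080, 60)
hd = pixel_rate(1280, 720, 60)

print(f"1080p60: {full_hd / 1e6:.1f} Mpx/s")
print(f"720p60:  {hd / 1e6:.1f} Mpx/s")
print(f"Ratio:   {full_hd / hd:.2f}x")
```

So going from 1080p to 720p at the same framerate cuts the number of pixels per second by a factor of 2.25, which is a pretty big chunk of work to take off an older encoder.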

Thankfully, by dialing down the resolution, I figured out that I can still have a passable setup:

- streaming: H264, 720p, 60 FPS, CBR at 6'000 Kbps

- recording: H265, 1080p, 60 FPS, VBR at 14'000 Kbps

which is a step up from having the A580/B580 as the sole GPU and not being able to do much more than 1080p at 30 FPS without them getting too overloaded, or in games like S.T.A.L.K.E.R. 2 them getting overloaded regardless of what settings I try to use. Now, if the main GPU struggles with a game or something else, at least the secondary one remains pretty stable with doing its encoding task.
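Those bitrates also translate fairly directly into bandwidth and disk usage. Below is a small sketch that converts a video bitrate into an approximate size per hour; it ignores audio and container overhead, and with VBR the 14'000 Kbps is a target rather than a guarantee, so the recording figure is only a ballpark. The `gigabytes_per_hour` helper is a made-up name for the example.

```python
def gigabytes_per_hour(kbps):
    """Convert a bitrate in kilobits per second to decimal GB per hour."""
    bits_per_hour = kbps * 1000 * 3600
    return bits_per_hour / 8 / 1e9


settings = {
    "streaming (H264, 720p60, CBR)": 6000,
    "recording (H265, 1080p60, VBR target)": 14000,
}
for name, kbps in settings.items():
    print(f"{name}: ~{gigabytes_per_hour(kbps):.1f} GB per hour")
```

That works out to roughly 2.7 GB per hour of upload for the stream and around 6.3 GB per hour on disk for the recording, which even my cheap 1 TB HDDs can soak up for a long while.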

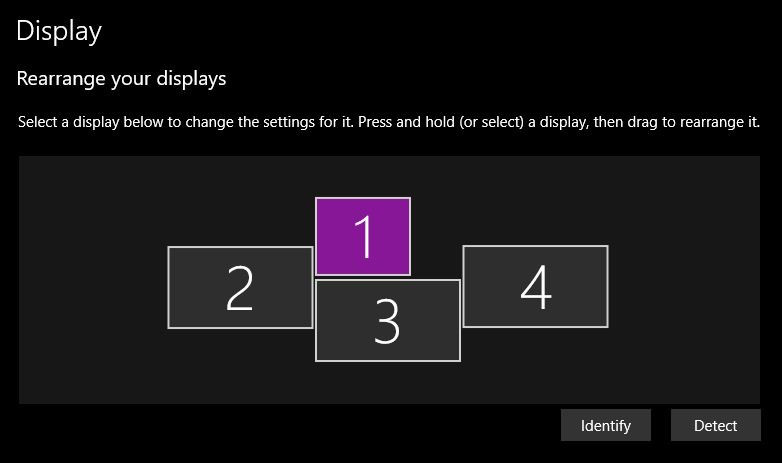

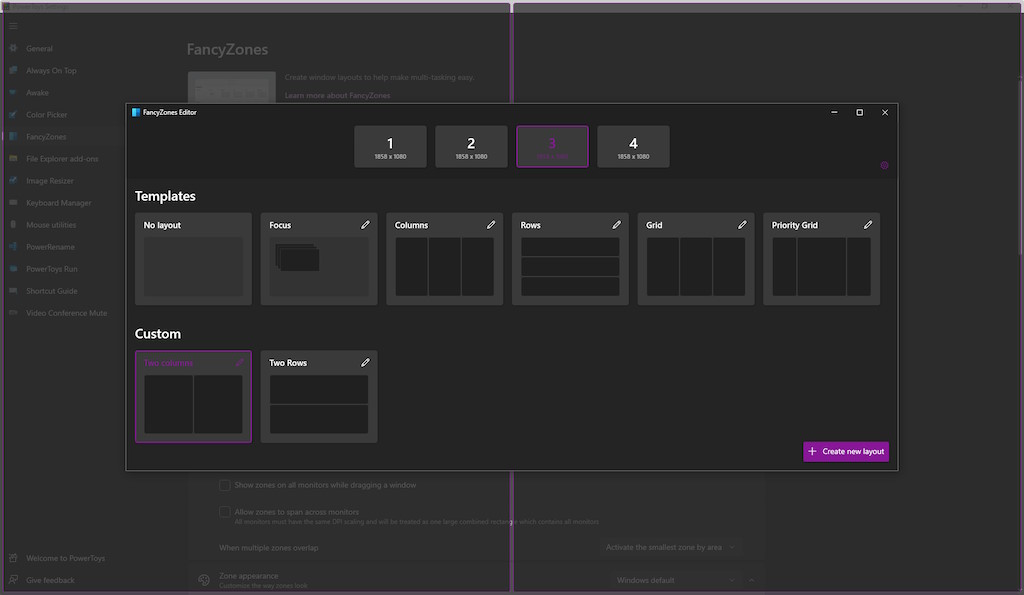

I did need to set up my monitor layout all over again (this time plugging all of them into the B580 because I don't want another run-in with the Desktop Window Manager), but thankfully that wasn't too hard and FancyZones also makes custom snapping configuration easy:

With that, I could finally rest. I got a dual GPU setup working, though definitely not in the way I had hoped.

Summary

In the end, the setup looks like this:

One card from 2018, the other from 2024, working in the same system well enough. Dual GPU setups are definitely a bit of a mess and I cannot in good conscience recommend that most folks look into them, unless you:

- throw a bunch of money at Nvidia who seem to have good software support

- are either okay with waiting for it to get better for your vendor of choice or can write a bunch of code yourself

- or you choose to have two GPUs from different vendors

I'm happy that I resolved the issue that the friend of mine had with their RTX 4080 Super, especially because the RX 580 cost me around 120-140 EUR when I got it a few years ago. For whatever reason, it seems that the price has now climbed up to 190 EUR even for an RX 570, so you might as well get a newer card if you need something now, like an RTX 3050 or RX 6600.

I would still recommend an Intel Arc A310 if you need a dedicated encoder card, because there are nice single slot versions that are very conservative on power, while Intel Quick Sync Video is quite lovely, though I can only make that recommendation if you don't already have an Intel Arc GPU as your main one.

I won't lie to myself though and will admit that it's a little bit sad that I couldn't use a setup with two Intel Arc GPUs and that good encoder functionality ends up not getting used in my case, but hey, I'll probably end up sending the A580 to the friend that has the RTX 4080 Super, because it'd probably solve their issues as well.

In summary: when we're not held back by hardware weirdness, we are held back by software weirdness. With the state of how things are, I'm half surprised that my PC even boots up most of the time when I press the power button. This experience did absolutely nothing to lessen my hatred of computer hardware and with every passing day I understand the folks who just get a Mac more and more (even though only like 25% of my Steam library would work on a Mac).
