Containers are broken

Containers were generally touted as the solution to the problem of: "But it works on my machine!"

And in some respects, they actually succeeded at that, except for when that is absolutely false and nothing works as you'd expect. Now, normally I'd like to consider myself a proponent of container technologies. In the past few months I've written surprisingly little about things that are broken, but my hand has finally been forced in that direction, because of just how frustrating some of the issues that I've run into were.

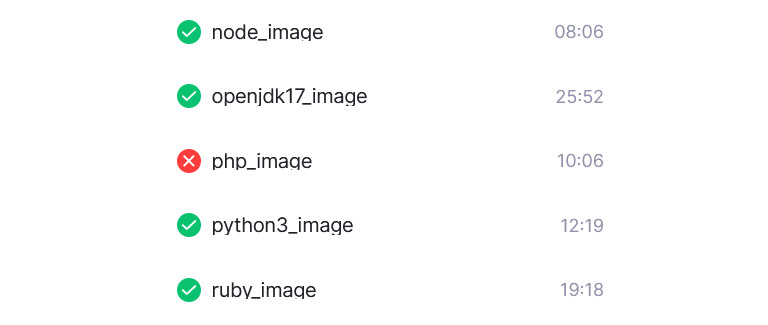

You see, recently I actually embarked on the journey of running most of my software inside of containers. Not just that, but I wanted to make a common base container image (in this case, based on Ubuntu) and build all of the other containers on top of that, such as Java, .NET, Python, Ruby, Apache2, PHP and so on (except for databases or complex software, maybe):

However, things are never quite as simple as we'd like them to be. In the example above, I was struggling for a bit to get my PHP image building correctly, because mod_php for Apache2 to execute PHP scripts through the web server was kind of slow, whereas setting up PHP-FPM proved to be difficult, making me install supervisord to get multiple processes working in the same container with few to no issues. Essentially, the web server process is separate from the process that actually executes the script and they need to communicate with one another and in this case I wanted both of them to be in the same container. Doable, but slightly finicky to achieve.

But what if there was a more sinister kind of problem? A problem, where the build would look like it's actually passing, but instead fail silently? That's exactly what I ran into.

Reproducibility? What reproducibility?

Normally, we'd like to assume that building a container with the same input files, with the same specification (a Dockerfile, or something else) and around the same time should have the same results, right? Well, no, not really. If you use Windows as your development OS (or are just too lazy to switch to *nix because you enjoy playing the occasional game on Windows, or use software like MobaXTerm, for which there is no equivalent), then you really cannot make that assumption.

It all started out innocently enough. I had a Git repository, in which I stored instructions for building the aforementioned PHP image, first with mod_php, later with PHP-FPM. Both worked when the container was built on Windows; neither worked when the container was built on my CI server or another *nix machine. It was actually a pretty simple setup, with a directory structure a bit like this:

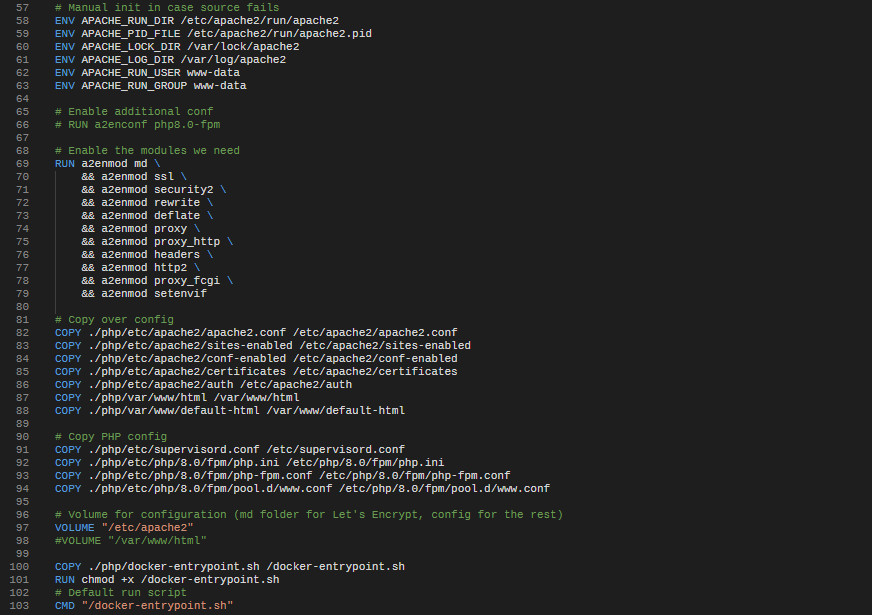

There's a Dockerfile, which contains instructions for how to build the container and how to COPY some files into it, some default HTML and PHP files, as well as a favicon, some configuration for Apache2 and a php.ini file, as well as an example htpasswd file for setting up basicauth (with a bind mount, or through the entrypoint), so that one can run the equivalent of phpinfo(); but protect it behind a password (or eventually a certificate, if need be).

The actual build instructions were also pretty simple:

git clone PROJECT_GIT_REPO

cd PROJECT_DIRECTORY

docker build -t IMAGE_TAG -f php.Dockerfile .

Pretty simple, right? That's kind of why I actually love Docker: the semantics of Dockerfiles make it pretty easy to figure out what a piece of software actually needs to run. Not only that, but it's also documentation for free, if you sprinkle in a comment or two, rather than a .docx or .odt file with lots of pages of setup instructions that aren't as easy to read and follow through:

And if you execute the same instructions and the build succeeds, then surely you'll get a container that matches what you expected, right? Well, no, not really. I built that container on my CI server, as well as locally. Both builds succeeded (because of no integration tests, yet), I pulled the remote image and then launched the two of them, side by side:

docker run --rm -it -p 3000:80 remote_php

docker run --rm -it -p 3001:80 local_php

Here's what the aforementioned PHP version info page looks like, basically just:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<meta http-equiv="X-UA-Compatible" content="ie=edge">

<title>PHP version information</title>

</head>

<body>

<h1>PHP version information</h1>

<?php phpinfo(); ?>

</body>

</html>

I then tried opening both of them in the browser and after entering the credentials, this is what I got:

So, on one side, the locally built PHP image seems to launch correctly and executes the simple PHP script, outputting information about the currently installed version. But on the other? Well, instead you get a seemingly empty page with no PHP output. Worse yet, if you try looking at the page source, you get this:

It actually treats the PHP script as a regular file and in this case lets everyone have a look at the source code for your application, something that you probably don't want to happen in most cases! Well, what's wrong, then? To be honest, I'm not actually sure and it's not as if Docker helps me figure out what's going on, either.
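A check along the following lines could have served as the missing integration test and caught this right after the build. It is only a minimal sketch: it assumes the two containers from the `docker run` commands above are listening on ports 3000 and 3001, and the `/` path as well as the `USER` and `PASSWORD` credentials are placeholders rather than real values.

```python
import base64
import urllib.request


def looks_unexecuted(body):
    """Return True if the response still contains raw PHP tags."""
    return "<?php" in body or "<?=" in body


def fetch(url, user, password):
    """Fetch a page protected by basic auth and return its body as text."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def check_containers():
    """Report, per container, whether PHP source is being served verbatim."""
    # Hypothetical values: ports follow the docker run commands, the rest are placeholders.
    for name, port in (("remote_php", 3000), ("local_php", 3001)):
        body = fetch(f"http://localhost:{port}/", "USER", "PASSWORD")
        print(name, "LEAKS SOURCE" if looks_unexecuted(body) else "ok")
```

Calling `check_containers()` with both containers running prints one line per image. The string check is deliberately crude, but a raw `<?php` in the response is exactly the symptom described here.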

Discoverability? Debugging? Nope!

My first thought was that it was something adjacent to Docker, an issue that I might have run into previously: line endings. Yet, it seems that Git had already been configured to use automatic line endings, to avoid issues like this as much as possible:

$ git config --get core.autocrlf

true

I even tried setting up a .gitattributes file, just to be sure:

# Set the default behavior, in case people don't have core.autocrlf set.

* text=auto

# Attempted fixes

*.conf text eol=lf

*.sh text eol=lf

*.htpasswd text eol=lf

*.php text eol=lf

Nope, it didn't really fix the issue. Another thing that I noticed is that the containers built on Windows seem to end up with different file permissions, since Windows has no execute flag to set on files by default. For example, have a look at the following directory listings:

As you can see, the PHP script is executable in the Windows build and has more open permissions than the same file in the *nix build. Yet, even after changing the permissions of the actual file, nothing really seemed to change. That said, it's really discouraging when we have a leaky abstraction - since the NTFS file system simply doesn't have an equivalent to the file permissions system that we're used to:

It's unfortunate, especially if you're ever dealing with something like SSH keys (which some software might reject, if their permissions are set to something too open) and would prefer to have things working out of the box, instead of having to script a chmod call or two in the container entrypoint.
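Both suspects so far, line endings and permissions, can also be checked against what actually ended up inside the image, rather than what sits in the working directory. The sketch below assumes that a layer has already been extracted from the image archive as a plain tar file. The function names are made up for illustration.

```python
import stat
import tarfile


def describe_layer(layer_tar_path):
    """Map each regular file in a layer to (permission string, contains CRLF)."""
    entries = {}
    with tarfile.open(layer_tar_path) as layer:
        for member in layer:
            if not member.isfile():
                continue
            content = layer.extractfile(member).read()
            # member.mode holds only the permission bits, so add the file type.
            permissions = stat.filemode(stat.S_IFREG | member.mode)
            entries[member.name] = (permissions, b"\r\n" in content)
    return entries


def differing_entries(first, second):
    """Paths present in both layers whose permissions or line endings differ."""
    shared = first.keys() & second.keys()
    return sorted(path for path in shared if first[path] != second[path])
```

Running `differing_entries(describe_layer(a), describe_layer(b))` on the matching layers of the two builds lists the files worth a closer look. Note that binary files can contain the CRLF byte pair by coincidence, so that flag is only a hint.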

The build output itself also didn't seem to provide any meaningful warnings or differences, which was even more disappointing, and the closest software for debugging something like this was container-diff: https://links.kronis.dev/vJ40fCc53c

The problem? Well, they say it themselves:

NOTE: container-diff is a Google project, but is not currently being officially supported by Google and is in maintenance mode. However, contributions are still welcome and encouraged!

So, essentially it's soon going to become a dead project. That might be overlooked, of course, if there was a Windows build available, which I could use. Which there isn't, so I cannot. Well, one might also look in the direction of actually saving the containers as archive files and then inspecting those, right?

Well, not quite. You see, it's definitely possible to export a container as an archive, which I did:

docker save --output local_php.tar local_php

docker save --output remote_php.tar remote_php

Except that upon opening the archives, you'll discover a bunch of random layer files, that aren't really named with reliable prefixes, so you won't get anything like 000001_SOME_HASH or 000002_ANOTHER_HASH, but instead will find yourself confused, not really knowing which layers to start with:

Well, technically you could dig through the JSON description file (not manifest.json, the oddly named other one), except that it's not really formatted by default and after you do that, you realize that it's horribly long and not all that user friendly. Technically it's useful, though you'd definitely want some tool for analyzing how any two might differ, rather than trying to look through the file yourself line by line:
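Some of that digging can be scripted. As far as I can tell from archives produced by `docker save`, manifest.json is a JSON array whose entries carry a `Config` path (pointing at that other JSON file) and a `Layers` list in the order the layers are applied. The sketch below reads both straight out of the archive and re-serializes the config with indentation and sorted keys, so that values stored in different places line up in a diff tool. The function names are my own, not part of any Docker tooling.

```python
import json
import tarfile


def read_manifest_entry(archive_path):
    """Return the first image entry from manifest.json in a docker save archive."""
    with tarfile.open(archive_path) as archive:
        manifest = json.load(archive.extractfile("manifest.json"))
    return manifest[0]


def ordered_layers(archive_path):
    """Layer paths inside the archive, base layer first."""
    return read_manifest_entry(archive_path)["Layers"]


def normalized_config(archive_path):
    """The image config JSON, pretty-printed with sorted keys."""
    entry = read_manifest_entry(archive_path)
    with tarfile.open(archive_path) as archive:
        config = json.load(archive.extractfile(entry["Config"]))
    return json.dumps(config, indent=2, sort_keys=True)
```

Writing `normalized_config("local_php.tar")` and `normalized_config("remote_php.tar")` to two files gives a pair that a diff tool can compare without key order getting in the way.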

Thankfully, I actually decided to use Meld for this and got some interesting output. First off, it seems like the image descriptions themselves are inconsistent, for example, storing ENV values in different places in the file:

Not only that, but it seems that we were using BuildKit locally but not on the server, so the actual commands were a bit different, though technically this shouldn't really affect that much:

Finally, we could see that the layers were indeed differently named between the two builds, which gives us a pretty good idea about their names now, if we care about the order in which they were created and allows us to match any two against one another really easily:
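The matching itself can be automated by pairing layers by position. This is a rough sketch that assumes both archives contain a manifest.json with a `Layers` list in build order, which is what I'd expect from `docker save`. The six-digit index is simply the sortable prefix I wished the layer directories had.

```python
import json
import tarfile
from itertools import zip_longest


def layer_list(archive_path):
    """Layer paths from manifest.json, base layer first."""
    with tarfile.open(archive_path) as archive:
        manifest = json.load(archive.extractfile("manifest.json"))
    return manifest[0]["Layers"]


def pair_layers(first_archive, second_archive):
    """Pair layers by position as (index, first, second, identical) tuples."""
    pairs = zip_longest(layer_list(first_archive), layer_list(second_archive))
    return [
        (f"{index:06d}", first, second, first == second)
        for index, (first, second) in enumerate(pairs, start=1)
    ]
```

For example, `pair_layers("local_php.tar", "remote_php.tar")` returns one row per layer position. Rows where the last element is `False` mark the first point at which the two builds diverge, and a missing layer on either side shows up as `None`.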

You'll also notice that about half of the layers are the exact same. That's because we use the same common Ubuntu/Apache2 image that I built previously, which really speeds up downloads. If I build all of my containers monthly/weekly and need to redeploy them, then those layers will already be on the remote server (or will only need to be downloaded once), the only actual differences being between the runtimes that each tech stack needs, like Java, PHP, Ruby etc.

Sadly, actually looking at the layers themselves is anything but easy. I'd need to rename them consistently so that Meld would even be able to pick up on them. Technically, though, the layers should be good enough for this: being able to compare the total changes to the file system after a particular command has been executed is something that we historically have never really been able to do well. We're about halfway there, I'd say.

For example, suppose that I have the instruction:

COPY ./ubuntu/etc/apt/sources.list /etc/apt/sources.list

(or maybe imagine it's something more interesting, such as retrieving a remote file)

Now, consider that I've found out the corresponding layer names for this instruction:

- local_php: 30ff05376862037172329a3acbdd3ef4c3d9e5605f6862c24f70cc7574dab114

- remote_php: 938e79df77c401fb8b1e56d7a4f8ee4090d97761f3489b45e5ce76133ea67abb

With this knowledge, I could do the following:

cat local_php.tar\30ff05376862037172329a3acbdd3ef4c3d9e5605f6862c24f70cc7574dab114\layer.tar\etc\apt\sources.list

cat remote_php.tar\938e79df77c401fb8b1e56d7a4f8ee4090d97761f3489b45e5ce76133ea67abb\layer.tar\etc\apt\sources.list

(or, you know, extract the .tar files first)

And compare whether the files are indeed equal. With sufficiently advanced tooling, you could even do this against whole directories, if only we had a better way of naming layers, something like sortable identifiers, based on the layer number in the image.
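In the absence of such tooling, a whole-layer comparison is not hard to approximate. The sketch below assumes the two layer.tar files have already been extracted from the image archives, and compares every regular file by its SHA-256 digest. The function names are illustrative.

```python
import hashlib
import tarfile


def file_digests(layer_tar_path):
    """Map every regular file in a layer to the SHA-256 of its content."""
    digests = {}
    with tarfile.open(layer_tar_path) as layer:
        for member in layer:
            if member.isfile():
                content = layer.extractfile(member).read()
                digests[member.name] = hashlib.sha256(content).hexdigest()
    return digests


def diff_layers(first_layer, second_layer):
    """Summarize which paths were added, removed or changed between two layers."""
    first, second = file_digests(first_layer), file_digests(second_layer)
    shared = first.keys() & second.keys()
    return {
        "only_in_first": sorted(first.keys() - second.keys()),
        "only_in_second": sorted(second.keys() - first.keys()),
        "changed": sorted(path for path in shared if first[path] != second[path]),
    }
```

This only looks at file content, so differences in permissions, ownership or timestamps will not show up in the result.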

But without that? I'm not quite sure where the problem is, even after this exploration, because some of the layers are slightly differently sized, even with the same build files and instructions, but there are too many of them for me to reasonably analyze and neither the Docker CLI nor other tooling helps me here.

Well, where's the actual issue, then?

So, what can we find out?

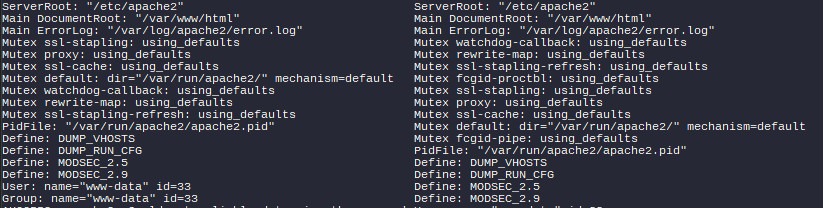

Well, if we look at the container output during server startup (thankfully I increased logging verbosity a little bit), then we can eventually notice some of the lines being slightly offset, indicating that the output itself isn't 1:1 either:

Did you notice where the problem is? In our container that's based on the locally built image, we can see that the FastCGI implementation which we can use for PHP-FPM is starting, for example:

[fcgid:info] [pid 35:tid 140447791488064] mod_fcgid: Process manager 35 started

But in the container derived from the image that we built on the CI server? Nothing, not even an error message! Now, this is less of a problem with Docker or other OCI runtimes and more with the software in question, if we look past the fact that it should not be a thing in the first place.

We can also see some differences in the bit where we see what functionality will be enabled in the web server:

In particular, these two lines are telling:

Mutex fcgid-proctbl: using_defaults

Mutex fcgid-pipe: using_defaults

Once again, FastCGI startup is successful in one container, but completely absent in the other. With the same configuration files and startup instructions. As of yet, I've no idea what's causing this, because frankly it is pretty late and I should go and get some sleep soon.
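Spotting lines like these by eye is error-prone, because process and thread IDs differ between runs and throw off a plain diff. A rough sketch of a more forgiving comparison follows. It assumes log lines shaped like the `[fcgid:info] [pid ...]` example above, drops the pid/tid block, and replaces every number with a placeholder before comparing. The regular expression is my own guess at the format, not something taken from the Apache documentation.

```python
import re

# Matches "[module:level]", an optional "[pid ...]" block, then the message.
LOG_LINE = re.compile(
    r"\[(?P<module>\w+):(?P<level>\w+)\]\s+(?:\[pid [^\]]*\]\s+)?(?P<message>.*)"
)


def normalize(line):
    """Strip run-specific details (pid/tid block, numbers) from a log line."""
    match = LOG_LINE.search(line)
    if match:
        text = f"[{match['module']}:{match['level']}] {match['message']}"
    else:
        text = line.strip()
    return re.sub(r"\d+", "N", text)


def only_in_first(first_log, second_log):
    """Lines of first_log with no normalized counterpart in second_log."""
    seen = {normalize(line) for line in second_log.splitlines() if line.strip()}
    return [
        line.strip()
        for line in first_log.splitlines()
        if line.strip() and normalize(line) not in seen
    ]
```

Feeding it the captured startup output of the two containers should surface the missing mod_fcgid and Mutex lines directly, instead of relying on noticing that the output is slightly offset.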

Summary

In summary, however, I can draw a few conclusions.

The line "But it works on my machine!" has nowadays become "But it works in my container!", though sometimes you might also have to say that it works on your cluster/cloud provider instead. The more abstractions and different environment details you'll pile on, the higher the chances that something will break.

There is also lots of bad software out there that won't complain about being unable to apply the configuration that you demand from it and will instead silently ignore errors for no good reason. Supervisord showed me that the actual PHP-FPM process had started successfully, so apparently in our case the problem lies with the web server.

But what are you to do? I'd say that in our world of leaky abstractions, you should probably do development work and CI on the same operating system, or at least on the same group of operating systems. For me, sadly Windows Server is out of the question, so I'll need to undertake the effort of booting into *nix for development work more often, at least in cases like this. Similarly, if you need containers, don't run one environment on Ubuntu and Docker, with another running Podman on Rocky Linux. Sure, the OCI specification itself can be pretty portable and using something like Docker across multiple OSes could be enough in quite a few cases, but at least keep the path to getting to a container image as consistent as possible.

Don't take unnecessary risks, don't mix incompatible file systems, don't put yourself at a disadvantage. Better yet, mandate a common development platform for all of your projects, ideally a boring distribution that can work both on your servers and, with an additional graphical environment, on your workstations. Personally, I'd say that Ubuntu (LTS) is an excellent choice here, though for all I care, you can use Ubuntu on servers, Linux Mint (which is based on Ubuntu) locally, or even Debian everywhere. There is a lot to be gained from not having to simultaneously support developers on Windows, Linux and Mac; the issues that I just went through shouldn't even be a thing.

But alas, that is annoying and troublesome, so I don't want to do that just yet. Maybe a development VM with proper clipboard integration and possibly GPU passthrough? Or, in my particular case, maybe after identifying the problem (fcgid not starting) I'll be able to find the solution by some searching online and it'll all be solved... until the next time.
