GitLab updates are broken


Recently i went on Hacker News and saw the following post at the very top of the front page:

You know, Hacker News is actually a pretty useful place for spotting things like this: it also alerted me to log4shell on the first day of it being publicly reported, a few hours after work. Thus, i could react to it the next day. Now it would seem that i'd also get to update my own personal GitLab instance.

As stated in the documentation, GitLab strongly advised people to do exactly that whenever possible:

This was very much like an update to address an older CVE, one that allowed RCE due to some faults in the exiftool implementation used:

However, promptly looking up how to do updates filled me with a sense of dread. Let me tell you a bit more about it today.

GitLab updates are needlessly plentiful

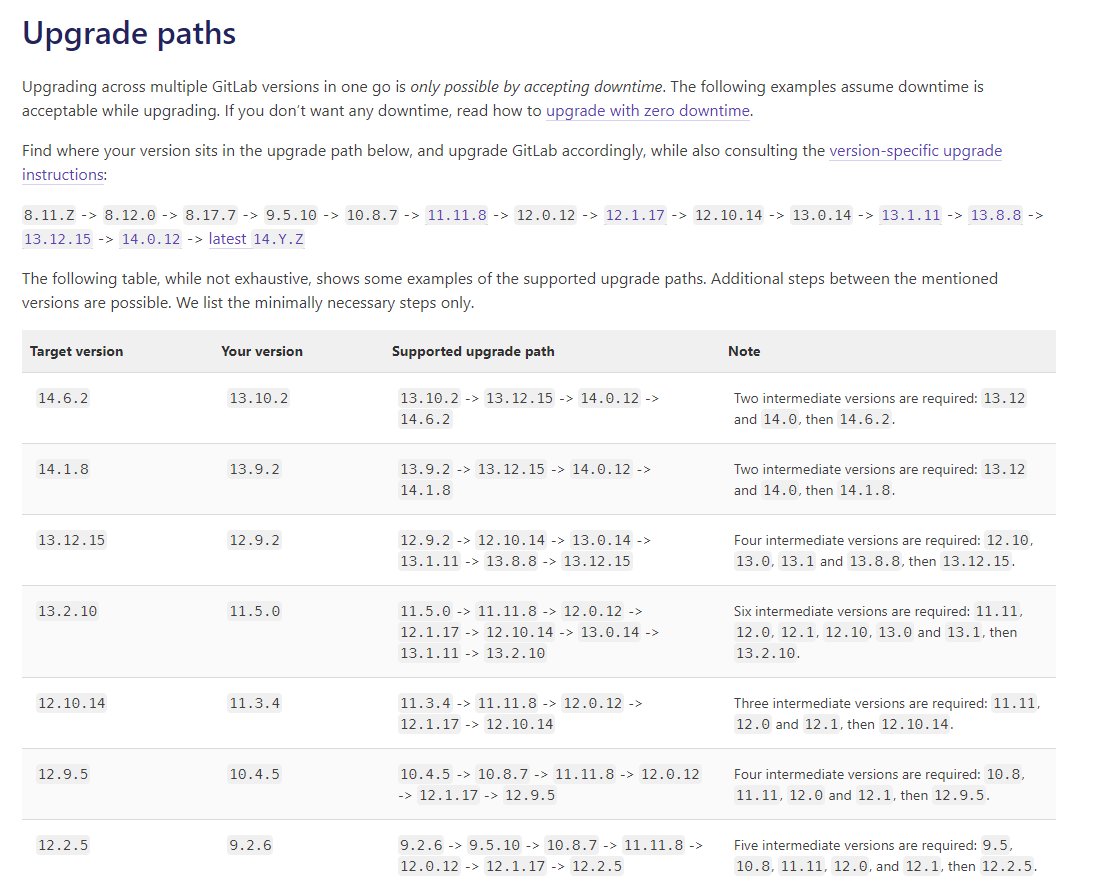

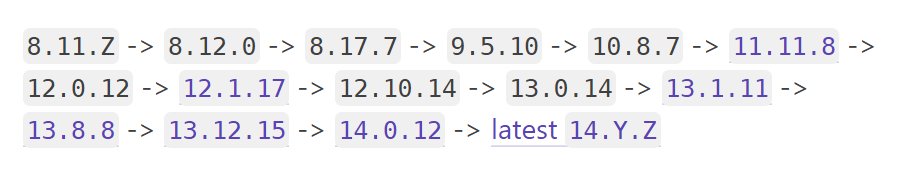

They actually have a lovely page about how to update from any given version to another one. However, while it's nice that the documentation is there, the upgrade paths are really complicated:

Most of the software at my day job (at least the parts that i've written) doesn't need intermediate versions: you could launch v1 and then go from that straight to v999, since the DB migrations are fully automated. Getting there did take a lot of pushback against other devs going: "Well, it would be easier just to tell the clients who are running this software to just do X manually when going from version Y to Z."

GitLab's updates are largely automated as well (the Chef scripts, PostgreSQL migrations etc.), it's just that for some reason they either don't include all of the migrations or require that you visit certain key points throughout the process, which cannot be skipped (e.g. certain milestone releases, as described in their docs). Of course, i acknowledge that it's extremely hard to sustain backwards compatibility, and i've seen numerous projects start out that way only for the devs to give up on the idea at the first sign of difficulty. It's not like they care much for it and it doesn't always lead to a clear value add - it's a nice-to-have and they won't earn any less of a salary for making some ops person's life harder down the line.

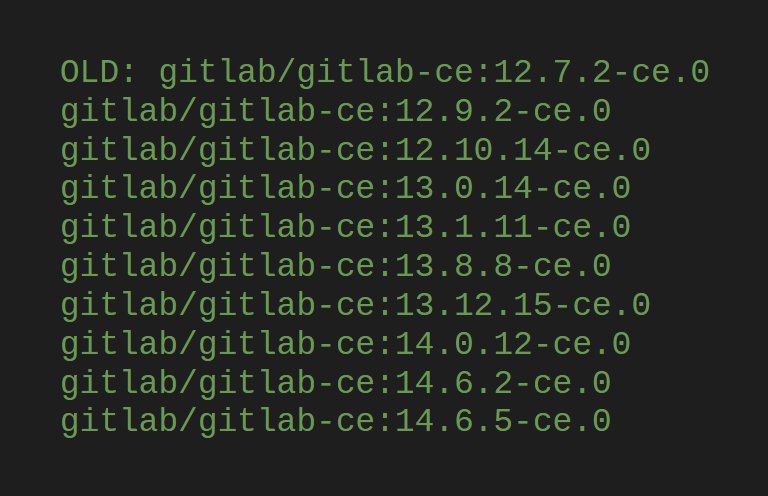

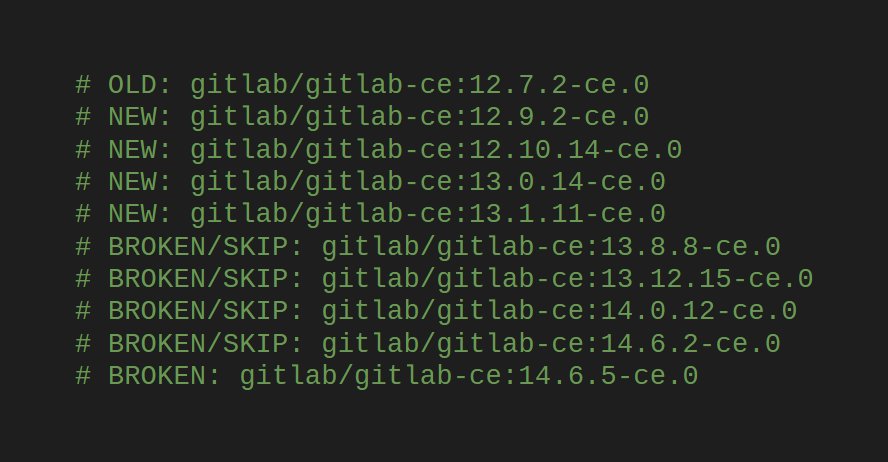

Thus, the situation that we end up with is the following list of versions that must be upgraded to in order:

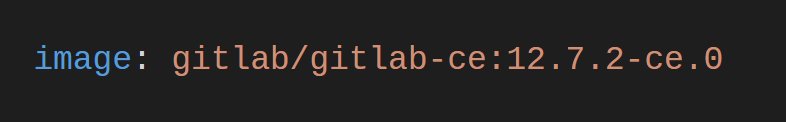
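To make the idea of mandatory stops a bit more concrete, here's a minimal sketch of how such a sequential path could be computed. The version numbers and the `upgrade_path` helper are made up purely for illustration and are not GitLab's actual list of required stops, which you should always take from their upgrade documentation:

```python
from __future__ import annotations


def parse(version: str) -> tuple[int, ...]:
    """Turn a dotted version like '3.9.0' into (3, 9, 0) so it compares numerically."""
    return tuple(int(part) for part in version.split("."))


def upgrade_path(current: str, target: str, required_stops: list[str]) -> list[str]:
    """Return every version that has to be installed, in order, to reach target.

    required_stops is assumed to be the list of releases that cannot be skipped.
    """
    cur, tgt = parse(current), parse(target)
    if cur >= tgt:
        raise ValueError("target must be newer than the current version")
    # Keep only the stops strictly between where we are and where we want to be.
    between = {stop for stop in required_stops if cur < parse(stop) < tgt}
    return sorted(between, key=parse) + [target]


# Placeholder stops for some hypothetical piece of software.
stops = ["2.5.0", "3.0.0", "3.9.0", "4.2.0"]
print(upgrade_path("2.7.1", "4.6.0", stops))
# prints ['3.0.0', '3.9.0', '4.2.0', '4.6.0']
```

The further behind you are, the more stops land between your current version and the target, which is exactly the situation that i found myself in.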

Now, the more often you do updates, the easier each one is, but my version was quite a bit out of date. i don't need your judgement about this, dear reader, and i'm sure that most CVEs would essentially expose most of my data to the world, but it's not like there's been anything too important on my instance until now - my master's degree repositories (with SSH keys to servers that no longer exist, as fully fledged examples), a number of useful scripts, the source to my homepage and so on:

While helping my aging parents in the countryside, working my day job, trying to keep my skills relevant and also not neglecting my friends, i don't think i have the spare capacity to upgrade all of the software that i run. Now, one might just use the cloud GitLab/GitHub instances, but that presents other issues with regard to where the data is stored, what data you'll be able to put there and so on. Hence, this proved to be a learning experience.

Just to be sure, i actually checked whether you could jump multiple major versions as you could with my software and with some other software out there. It turns out that you can't, even though GitLab points you to their documentation on the topic:

But hey, that's no big deal, right? Let's just set an evening aside and do our best with the sequential path.

GitLab updates are broken

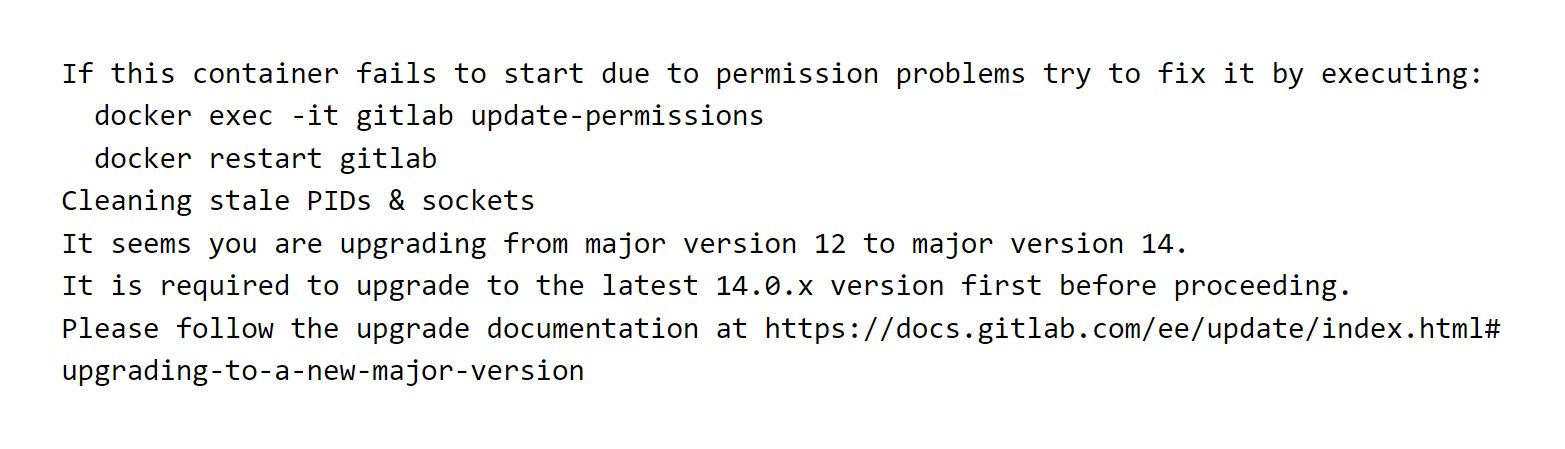

So, first things first: instead of just having my backups on a remote box, i also decided to stop the old GitLab instance, copy over all of the data and use that copy as my Docker bind mount for all subsequent update actions:

It is probably relevant to point out that i run a GitLab Omnibus install as a Docker container, because it allows me to do precisely this - i don't have to back up the entire VM (which may be costly on some cloud platforms) or dedicate the entire VM to GitLab alone. Docker is excellent here and bind mounts (despite Docker Inc being adamant that they're not a good practice) are perhaps the best solution that i've run into for easily managing application data.

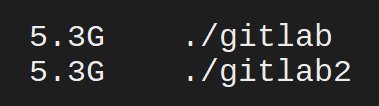

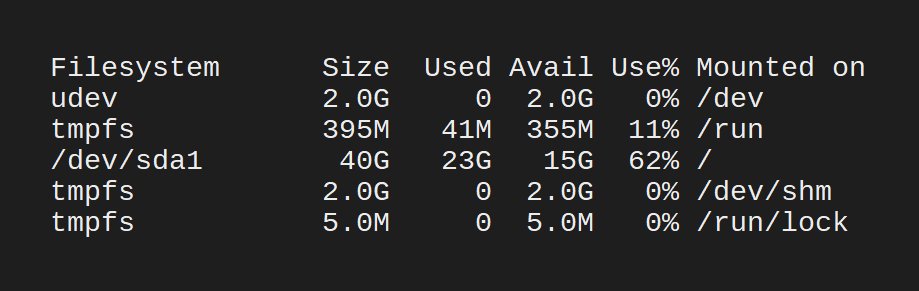

The only downside here is that my VPSes don't have that many resources to begin with, so with a 40 GB HDD/SSD i don't have much breathing room (even when running regular Docker cleanup commands and doing the same for the GitLab registry):

So, i set up a list of all of the versions that i'll need to go through, with their corresponding Docker images:

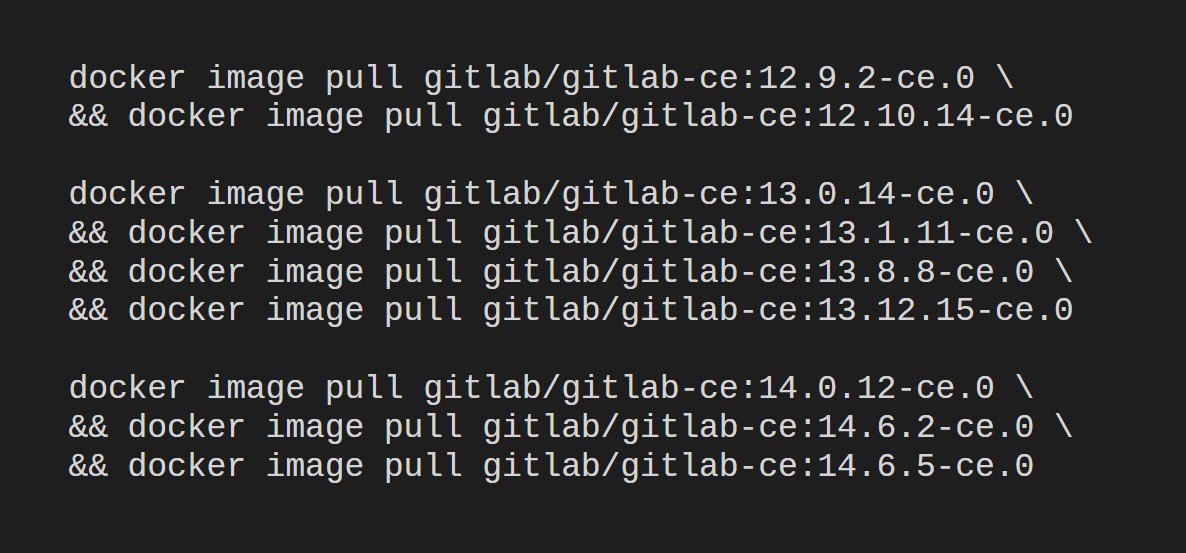

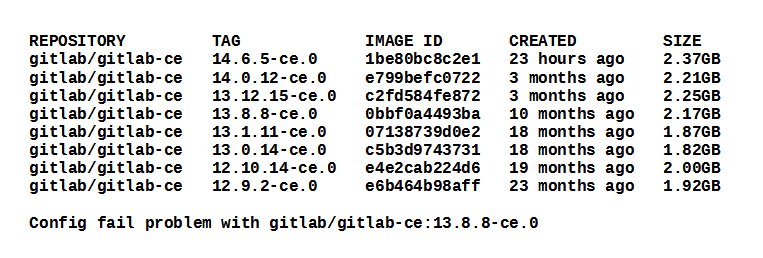

Since the disk space limitations should be taken into account, i also grouped the Docker images to pull and install consecutively, seeing as each is around 2 GB in size (which is huge by my standards for any software package):

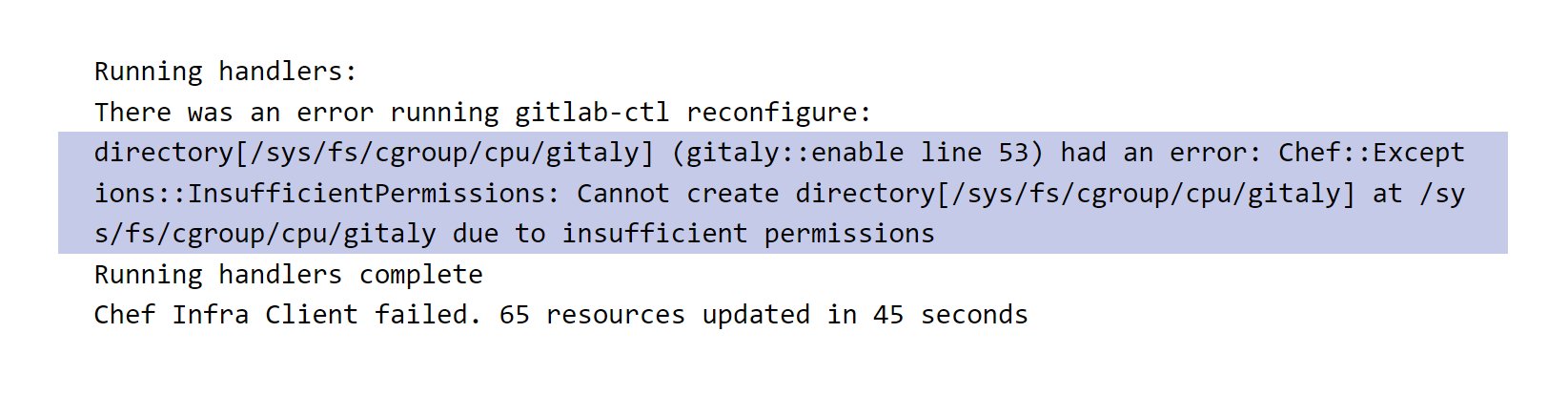

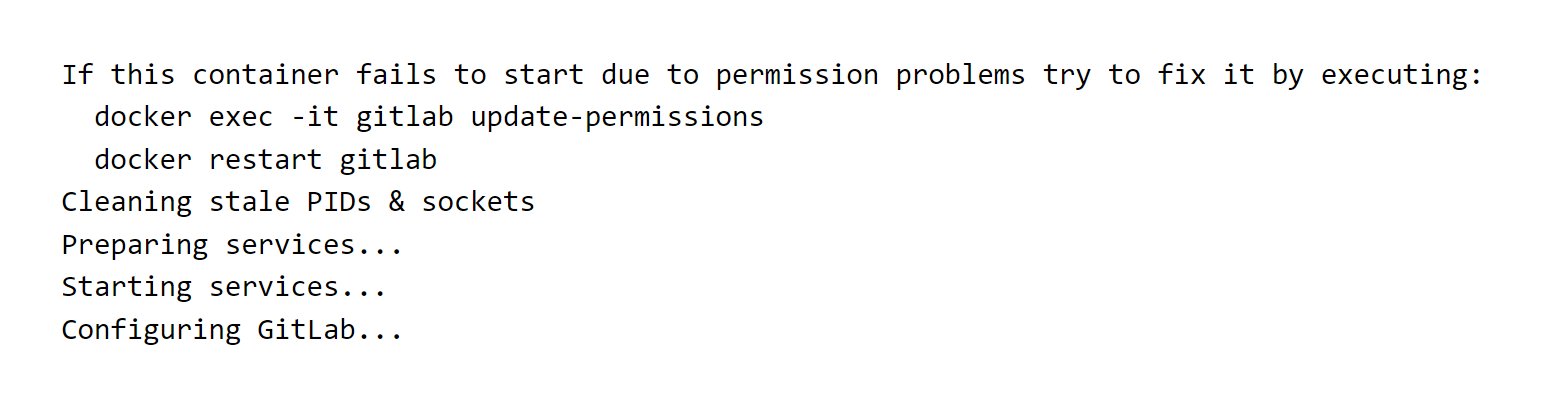

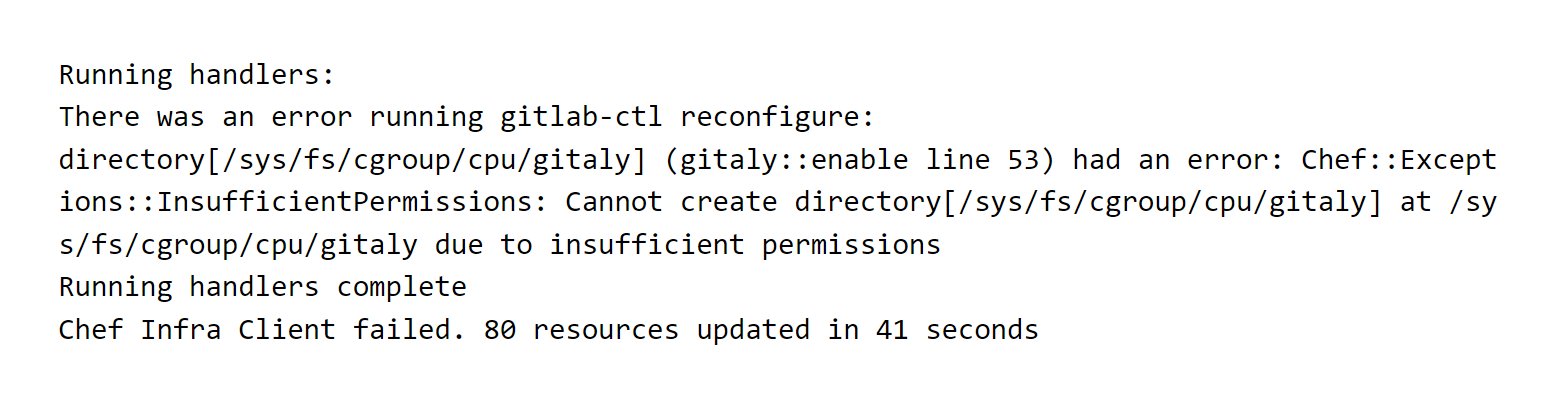
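The grouping itself is simple arithmetic, roughly like the sketch below. The image tags and the 6 GB of free space are placeholders that i made up for the example, only the "around 2 GB per image" figure comes from what i observed:

```python
def batch_images(images: list[str], image_size_gb: float, free_gb: float) -> list[list[str]]:
    """Split images into consecutive groups that each fit into the free disk space."""
    per_batch = int(free_gb // image_size_gb)
    if per_batch < 1:
        raise ValueError("not enough free space for even a single image")
    return [images[i:i + per_batch] for i in range(0, len(images), per_batch)]


# Hypothetical tags, one per required upgrade step.
images = [f"gitlab-step-{n}" for n in range(1, 9)]

for number, batch in enumerate(batch_images(images, image_size_gb=2.0, free_gb=6.0), start=1):
    print(f"batch {number}: pull, upgrade through, then clean up {batch}")

print(f"total if pulled all at once: {len(images) * 2.0} GB")
```

With those assumed numbers you'd end up with three batches (3 + 3 + 2 images), cleaning up the old images after each batch before pulling the next one.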

Now, why is this section titled in a way that asserts that GitLab updates are broken? Because once i got to version 13.8.8 i ran into a permission problem that prevented GitLab from starting up:

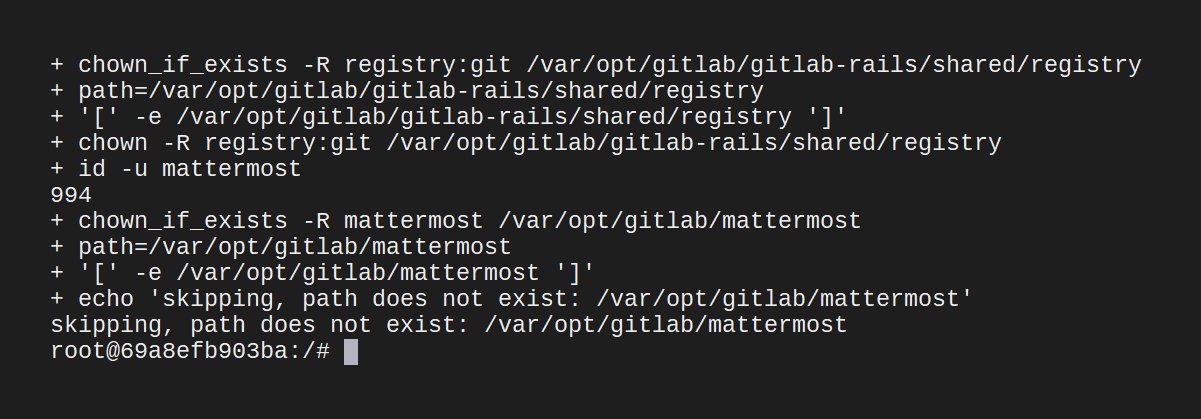

Now, what actually is under /sys/fs/cgroup/cpu/gitaly? No idea, since Googling this didn't return anything useful, nor were there many posts with this exact error. My best guess is that GitLab tries setting up a cgroup to limit the amount of resources that are available to the gitaly component, but this seems to fail due to the user that's running in the container not having the necessary permissions.
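If i wanted to poke at that guess, a small diagnostic like the following could be run inside of the container. This is just a sketch under my own assumptions: the `describe_cgroup_path` helper is made up, the presence of a `cgroup.controllers` file in the cgroup root is used as a rough hint that cgroups v2 is in use, and a writable parent directory doesn't guarantee that creating a cgroup there would actually succeed:

```python
import os
from pathlib import Path


def describe_cgroup_path(path: str, root: str = "/sys/fs/cgroup") -> dict[str, bool]:
    """Collect a few facts about a cgroup directory that a process failed to set up."""
    cgroup_root = Path(root)
    target = Path(path)
    return {
        # The unified (v2) hierarchy exposes this file at its root.
        "cgroup_v2": (cgroup_root / "cgroup.controllers").exists(),
        "path_exists": target.exists(),
        "parent_exists": target.parent.exists(),
        # Whether the current user may create entries in the parent directory.
        "parent_writable": os.access(target.parent, os.W_OK),
    }


if __name__ == "__main__":
    for key, value in describe_cgroup_path("/sys/fs/cgroup/cpu/gitaly").items():
        print(f"{key}: {value}")
```

If `parent_exists` came back false while `cgroup_v2` was true, that would at least hint at the software expecting the older cgroup layout, but that's speculation on my part.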

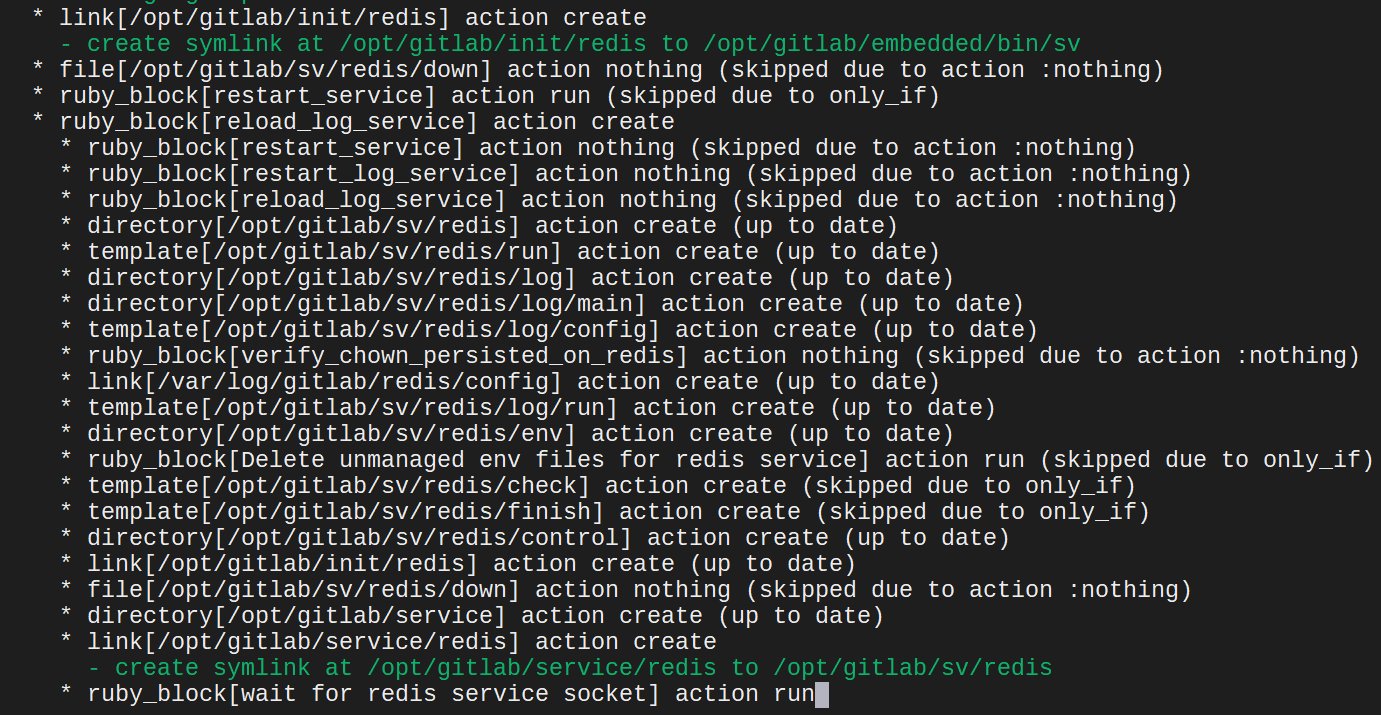

But fear not, for GitLab actually has a gitlab-ctl reconfigure command that should surely aid us, right? Well, no, not exactly, because it seemed to get stuck while waiting for Redis to start up:

Thankfully, this was a more common problem, probably caused by the fact that the reconfigure command doesn't actually launch the background services that it needs inside of the container. Someone had suggested what you should do to get it all running:

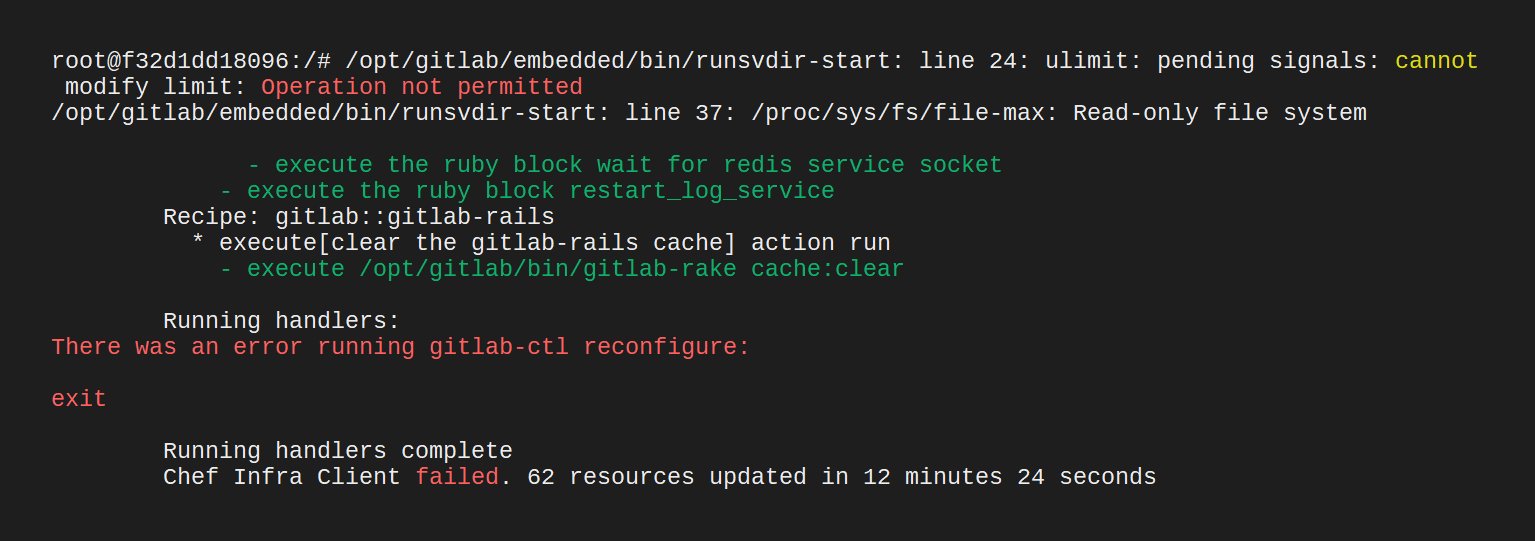

However, the fix was also broken:

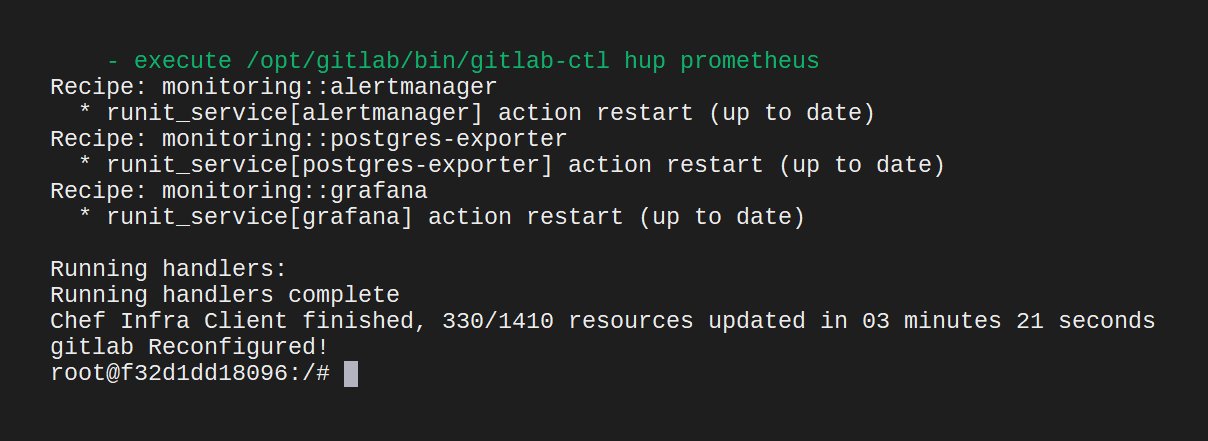

Now, what did i do? Pointlessly try to run it again and again in hopes that something would change, even though the container should be reproducible in its behavior and fail every single time, but it's not like i had any other options. It actually seems like the Omnissiah looked upon me favourably, because all of a sudden things started functioning again and the command succeeded:

However, that's not enough, as the problem wasn't actually solved! Thankfully, when GitLab starts up, it actually offers you other commands that you could run:

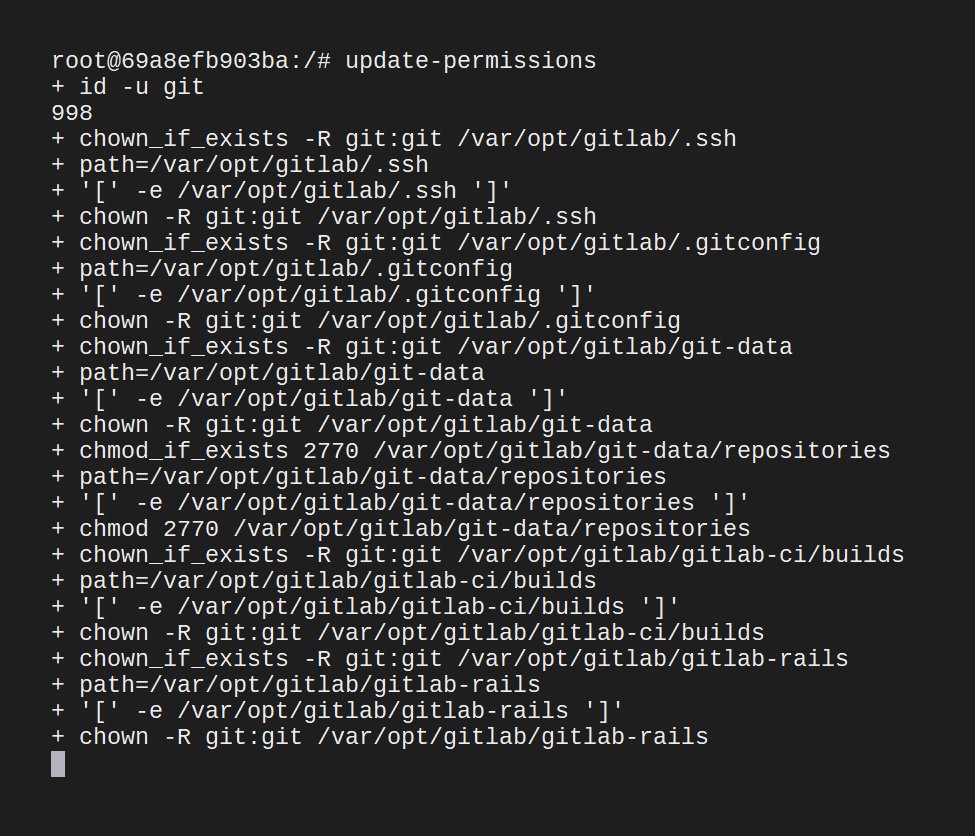

And this command actually seemed to run correctly on the first attempt:

Some time later, it seemed to succeed:

However, unfortunately this also proved to be useless:

You know, i'm not actually sure what i was supposed to do here, since the path for the cgroups that was failing wasn't indicative of bad permissions in some bind mount directory or anything like that, but rather of the container having internal issues. At the same time, however, it's not like the Internet was flooded with mentions of this, hence it must have been a limited problem that just hit me. Now, there definitely was talk of cgroups v2 having problems in certain configurations, but without a concrete post telling me the exact problem, it's kind of pointless to wonder about these things.

I did actually try to jump over all of the broken versions, but it would seem that everything broke with 13.8.8 and remained broken, as indicated by my notes:

Thus, unfortunately my install of GitLab remained broken in any of the newer versions and i had to delete the new install, remove the copied files under the gitlab2 folder and restore the old one, before thinking of what else i could do.

GitLab updates have conflicts with configuration (presumably)

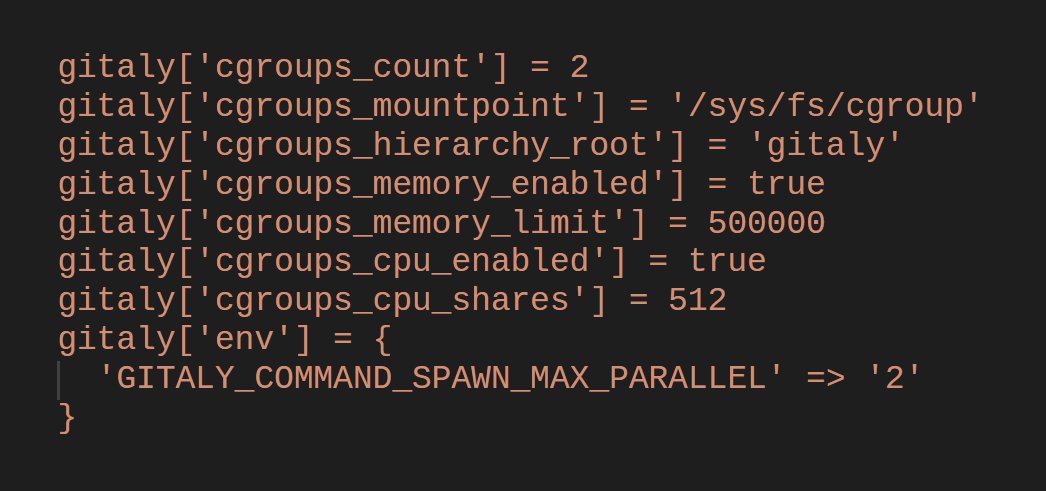

Now, i actually did look into my own configuration to find what could cause these problems and i think i found the culprit:

It's actually an example that GitLab offers on their page about configuring an instance to run on slightly less capable hardware, as is the case with my instance, due to me not being able to afford much at all. After removing that configuration, it seemed like i might finally be on a better path, with things seemingly progressing further in the startup process now!

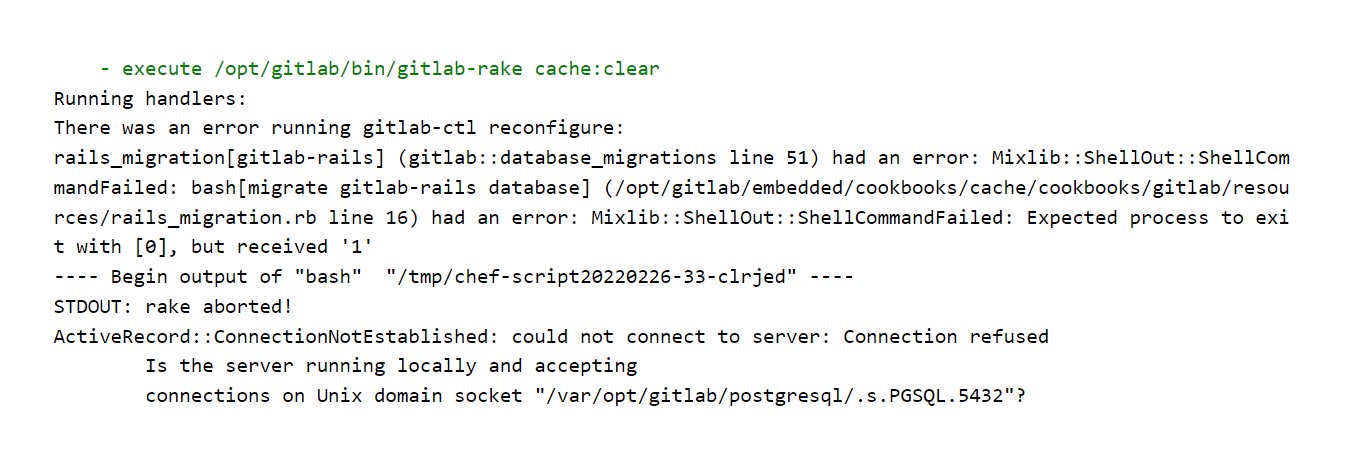

However, at this point, i ran into issues with PostgreSQL within the container refusing to start up, or at least refusing to allow connections to it:

Now, should i have pulled out debugging tools here and had a look at what's going on inside of the container and why? Probably. But there's also another factor to consider here: that by this time i had wasted multiple hours going through this and no longer viewed GitLab as worth the effort. Its updates seemed scary for a reason to me: because most larger and complicated enterprise-leaning packages tend to have incredible amounts of changes involved in their updates, all of which bring brittleness with them.

A while ago i wrote a tongue-in-cheek article called Never update anything and i still stand by it - frequent updates will inevitably lead to problems like this in any non-standard configuration, even one that deviates only slightly from the "happy path". This isn't something that affects just GitLab, of course, but also plenty of other pieces of software out there.

GitLab updates waste space

Another thing that i think deserves mentioning is how large the GitLab images are. For example, going through those 8 different container images would require almost 16 GB of disk space just to hold their contents, not even talking about the actual GitLab data:

Now, when you're running GitLab in your company with RAID arrays, GlusterFS/Ceph clusters, multi-terabyte SSDs/HDDs and whatnot, then it's probably not something you'd care that much about. But as someone who has 40 GB of storage in their VPS in total, this causes difficulties, especially when something fails and i need to run the copied data files on an older image to figure out how it broke and to reproduce the circumstances that i saw.

Summary

In summary, i think that GitLab is still a capable piece of software, but i am rather frustrated by people dismissing someone saying that updates can be problematic, just because they haven't run into any issues:

(to their credit, containers are indeed lovely in many cases for making software run in more predictable ways, as well as in allowing you to preserve the data while not caring about the runtime as much)

If everything works for that person, then good for them, however the Eldritch gods that mediate how computers work clearly hate me and thus i'm stuck dealing with every piece of software breaking in numerous interesting ways as many of the articles in this particular section of my blog clearly demonstrate.

Now, could this have been prevented by the developers of GitLab, perhaps by them picking a simpler architecture? Maybe. But probably not, at least due to how the system has been architected and has developed over time - any sort of significant rewrite would necessitate more resources than they'd be able to allot to it. Plus, with this many moving parts it's hard to say where the problems even lie.

That's precisely why i have since migrated from GitLab, GitLab CI and GitLab Registry over to Gitea, Drone CI and Nexus, about which i'll write a short tutorial later. I will still definitely be using GitLab at my work, but thankfully it's someone else's problem to maintain it there. Let's be honest, GitLab CI is excellent and having all of the tools that you need in a single integrated package is amazing... as long as it works.

Of course, one could make the argument that with a bit more effort, exploration and acquired know-how my instance would once again start working as expected, but it's also a bit bloated for my VPS in regards to the resource usage, which is why i moved over to the aforementioned alternative stack.
