How to add a wildcard SSL/TLS certificate for your K3s cluster

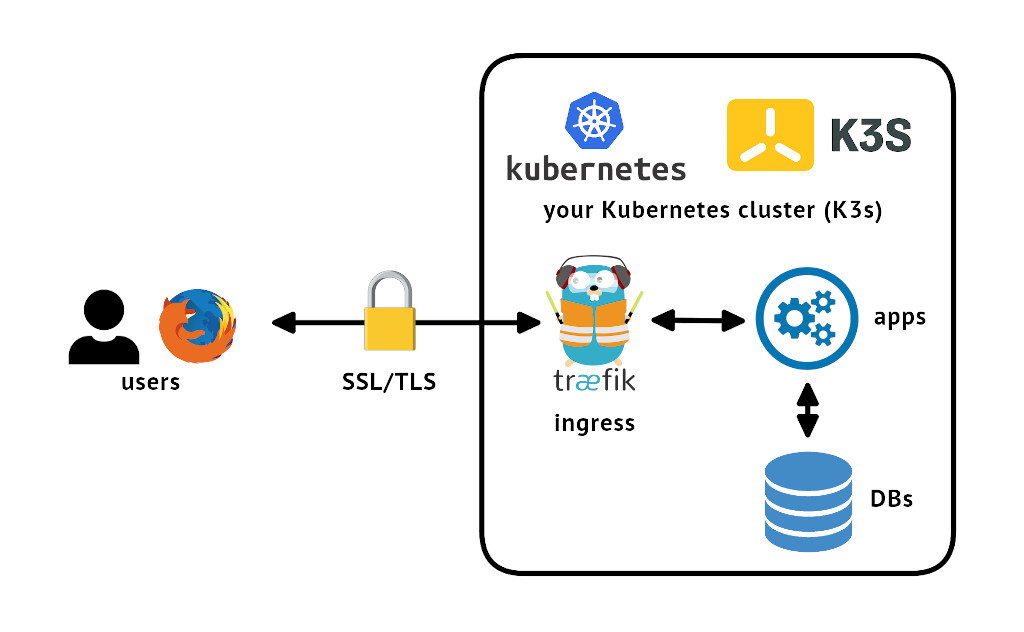

Here's a short tutorial that I felt could be useful for some people, but never got around to writing until now. Suppose that you have a project that uses Kubernetes and you'd like to secure the network traffic for the services that you expose to your users through your ingress.

So essentially what you'd like to do is make it so that any of your services can communicate securely with your users, in this particular case by using a wildcard SSL/TLS certificate, like *.myapp.com. The difference from many other tutorials is that we are NOT going to be using the ACME approach with the likes of Let's Encrypt, but rather our own manually provisioned certificate. Personally, I think that Let's Encrypt is great, but in some enterprise settings you'll instead have commercially bought certificates without such ACME automation behind them.

But first, a few words about when and why someone might choose K3s in particular.

K3s

In this case, you're not running just any Kubernetes distro out there: suppose that you have a cluster that uses the excellent K3s distribution.

It's certified and therefore will work with many of your workloads with few surprises, but at the same time it is wonderfully lightweight and portable across different OSes. I actually benchmarked it against Docker Swarm as a part of my Master's Degree thesis back in the day, and the resource usage was pretty close, which was impressive, considering that most Kubernetes distros are considered pretty wasteful in comparison.

If memory serves, the nodes had 4 GB of memory and 1 CPU each. A full RKE deployment wouldn't even run on them, whereas projects like K3s made it viable to use Kubernetes, in theory even on devices like a Raspberry Pi, if anyone were so inclined.

That said, I wouldn't call the project perfect either. They opted for Traefik as their ingress controller, which is a bit less of a standard option, at least when compared to the likes of Nginx. In practice, this can sometimes result in slightly different behavior than you might expect. Personally, on RPM-based Linux distros I once had a cluster hang while trying to replace Traefik with Nginx for testing purposes (something about resource cleanup).

Also, most of what you're about to see is just my writeup after reading this helpful discussion on GitHub. Note that what worked for people in one setup didn't work for others, so it's likely that my setup is also a bit specific, though even if only parts of it are useful for you, it should set you on the right track. I might have to try reproducing everything from scratch to check what the absolute minimum is in the latest versions.

Creating a ConfigMap

First, I created a ConfigMap to tell Traefik that we want it to provide a default certificate, along the lines of this:

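    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: traefik-config
      labels:
        name: traefik-config
      namespace: kube-system
    data:
      traefik-config.yaml: |
        # https://doc.traefik.io/traefik/https/tls/
        tls:
          stores:
            default:
              defaultCertificate:
                certFile: '/certs/tls.crt'
                keyFile: '/certs/tls.key'

I then copied this traefik-config.yaml file over to the server, where I loaded it into Kubernetes (in the system namespace, in my case):

    kubectl create configmap traefik-config --namespace kube-system --from-file=traefik-config.yaml

Keep in mind that --from-file stores the contents of the file verbatim under the traefik-config.yaml key, so the file that you pass here should contain only the Traefik configuration itself (the tls: block). If your file is the full ConfigMap manifest as shown above, load it with kubectl apply -f traefik-config.yaml instead.

Note that some said that they had to customize the Traefik Helm chart as well, which might go along the lines of this: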

    ---
    apiVersion: helm.cattle.io/v1
    kind: HelmChartConfig
    metadata:
      name: traefik
      namespace: kube-system
    spec:
      valuesContent: |-
        rbac:
          enabled: true
        ports:
          websecure:
            tls:
              enabled: true
        podAnnotations:
          prometheus.io/port: "8082"
          prometheus.io/scrape: "true"
        providers:
          kubernetesIngress:
            publishedService:
              enabled: true
        priorityClassName: "system-cluster-critical"
        image:
          name: "rancher/mirrored-library-traefik"
        tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          - key: "node-role.kubernetes.io/control-plane"
            operator: "Exists"
            effect: "NoSchedule"
          - key: "node-role.kubernetes.io/master"
            operator: "Exists"
            effect: "NoSchedule"
        additionalArguments:
          - '--providers.file.filename=/config/traefik-config.yaml'
        volumes:
          - name: tls-secret
            mountPath: '/certs'
            type: secret
          - name: traefik-config
            mountPath: '/config'
            type: configMap

You would put this file on the server under /var/lib/rancher/k3s/server/manifests/traefik-config.yaml.

This is still not going to be enough, because we'll need to load the actual certificates.

Creating a TLS Secret

To store the certificates themselves, we can use Kubernetes secrets, more specifically, the built-in TLS type of secret.

For this, you will need a .crt file, along the lines of:

    -----BEGIN CERTIFICATE-----
    YOUR OWN WILDCARD CERTIFICATE GOES HERE
    -----END CERTIFICATE-----
    -----BEGIN CERTIFICATE-----
    INTERMEDIATE CERTIFICATE GOES HERE
    -----END CERTIFICATE-----
    -----BEGIN CERTIFICATE-----
    ROOT CERTIFICATE GOES HERE
    -----END CERTIFICATE-----

And a .key file, along the lines of:

    -----BEGIN PRIVATE KEY-----
    YOUR PRIVATE KEY GOES HERE
    -----END PRIVATE KEY-----

These should be familiar to folks who've worked with the likes of OpenSSL in the past, but in my experience the order of the certificates in the .crt file matters a lot, especially if you have intermediate certificates. Since we are only providing one .crt file, you're basically going to concatenate the contents of the files that you have: your own wildcard certificate goes first, followed by any intermediates, with your CA's root certificate last (the root is usually optional, since clients already have it in their trust stores). The first certificate in the file has to be the one that matches the private key, otherwise kubectl create secret tls will typically reject the pair.
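Before loading anything into the cluster, it can be worth checking the two files locally. The following is only a rough sketch using the OpenSSL command line; the function names cert_matches_key and list_chain are my own helpers (not part of any tool), and the file names are the example ones used in this post. The first function compares the public key of the first certificate in the bundle with the one derived from the private key, the second prints the subject and issuer of every certificate in the bundle so you can eyeball the order.

```shell
#!/bin/sh
# Hypothetical helpers, assuming an unencrypted private key and a PEM bundle.

# Succeeds (exit 0) when the FIRST certificate in the bundle carries the same
# public key as the private key file. Exit 2 means OpenSSL could not read a file.
cert_matches_key() {
  cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 2
  key_pub=$(openssl pkey -in "$2" -pubout) || return 2
  [ "$cert_pub" = "$key_pub" ]
}

# Prints subject and issuer for every certificate in the bundle, in file order.
list_chain() {
  openssl crl2pkcs7 -nocrl -certfile "$1" | openssl pkcs7 -print_certs -noout
}

# Example usage with the file names from this post (skipped if they are absent).
if [ -f wildcard.myapp.com.crt ] && [ -f wildcard.myapp.com.key ]; then
  if cert_matches_key wildcard.myapp.com.crt wildcard.myapp.com.key; then
    echo "first certificate matches the private key"
  else
    echo "first certificate does NOT match the private key (check the order)"
  fi
  list_chain wildcard.myapp.com.crt
fi
```

If the check fails even though you are sure the key is the right one, the most likely culprit is the order of the certificates in the bundle.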

To actually load them in Kubernetes, we can do something a bit like the following:

    kubectl create secret tls tls-secret --namespace kube-system --cert wildcard.myapp.com.crt --key wildcard.myapp.com.key

This will create the secret; however, we might also need a TLS store for Traefik in particular.

Creating a TLSStore

This was the last step that I needed to do in my case. I created a TLSStore which specifies which certificate to use by default:

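    apiVersion: traefik.containo.us/v1alpha1
    kind: TLSStore
    metadata:
      name: default
      namespace: kube-system
    spec:
      defaultCertificate:
        secretName: tls-secret

(On newer Traefik releases the CRDs live under the traefik.io/v1alpha1 API group instead, so it's worth checking which one your cluster serves, for example with kubectl api-resources.)

I then copied this traefik-tls-store.yaml file over to the server, where I loaded it into Kubernetes (in the system namespace, in my case):

    kubectl apply -f traefik-tls-store.yaml --namespace kube-system

Testing it out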

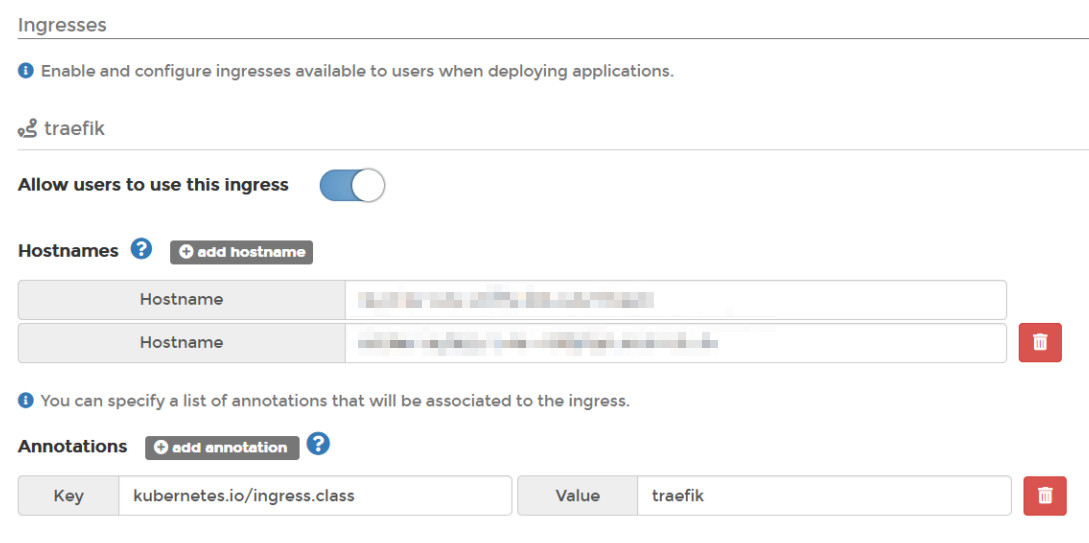

After that, I could specify ingress rules, even when using some graphical cluster management software, like Portainer, such as the domains that will be usable in my cluster namespace:

(note that you can easily add multiple hostnames)
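One thing to keep in mind when adding hostnames: under the usual matching rules, a wildcard like *.myapp.com only stands in for a single label, so it covers my-api.myapp.com, but neither the bare myapp.com nor something nested like a.b.myapp.com. Here's a small sketch of that rule in plain shell; covered_by_wildcard is a hypothetical helper of mine, it ignores upper/lower case differences and it is not what Traefik or browsers literally run.

```shell
#!/bin/sh
# Hypothetical helper: exit 0 if HOST is covered by PATTERN, 1 otherwise.
# Usage: covered_by_wildcard PATTERN HOST
covered_by_wildcard() {
  pattern=$1
  host=$2
  case $pattern in
    '*.'*) ;;                               # wildcard pattern, handled below
    *) [ "$pattern" = "$host" ]; return ;;  # plain name: exact match only
  esac
  suffix=${pattern#\*}                      # "*.myapp.com" -> ".myapp.com"
  label=${host%"$suffix"}                   # "my-api.myapp.com" -> "my-api"
  [ "$label" != "$host" ] || return 1       # host does not end in the suffix
  [ -n "$label" ] || return 1               # nothing in front of the suffix
  case $label in
    *.*) return 1 ;;                        # more than one label, e.g. "a.b"
  esac
  return 0
}

# Try a few example hostnames against the wildcard from this post.
for h in my-api.myapp.com myapp.com a.b.myapp.com; do
  if covered_by_wildcard '*.myapp.com' "$h"; then
    echo "$h: covered"
  else
    echo "$h: NOT covered"
  fi
done
```

So if you also need the apex domain or deeper subdomains, those names have to be present in the certificate separately.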

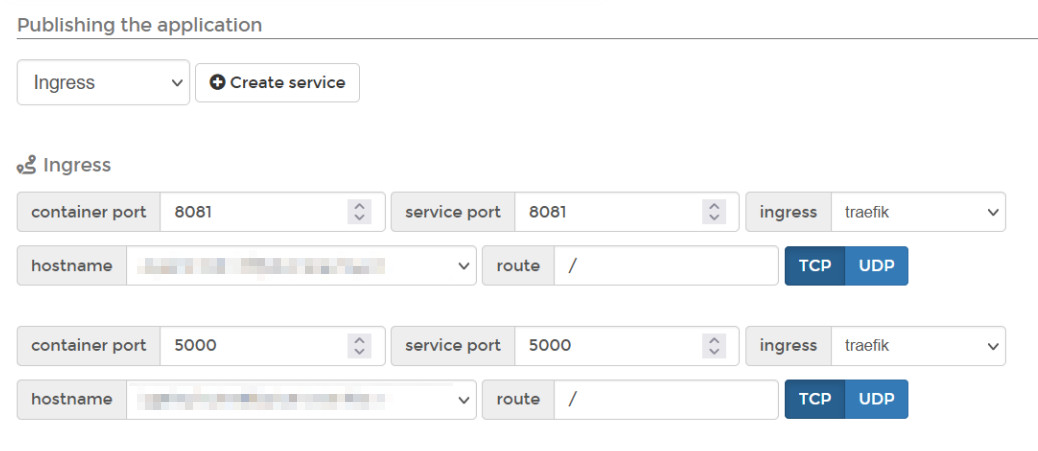

After that, when deploying certain applications, you can also expose your services through the ingress, which Portainer lets you do as well.

Also notice that something like Portainer lets you choose from a dropdown of available hostnames, so it suddenly is even easier to configure things. After I finished that configuration, the certificates were served correctly, both for those particular applications, as well as any that I wished to deploy and expose in the future.
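If you'd rather check from a terminal than trust the browser padlock, something along these lines should work. It's a sketch that assumes the OpenSSL command line is installed on your machine; show_served_cert is a hypothetical helper name and my-api.myapp.com is just the example hostname from this post. It connects with SNI set to the hostname and prints the subject, issuer and expiry date of whatever certificate the ingress hands back.

```shell
#!/bin/sh
# Hypothetical helper: print details of the certificate served for HOST.
# Usage: show_served_cert HOST [PORT]   (PORT defaults to 443)
show_served_cert() {
  host=$1
  port=${2:-443}
  if [ -z "$host" ]; then
    echo "usage: show_served_cert HOST [PORT]" >&2
    return 2
  fi
  # -servername sends SNI; stdin from /dev/null makes s_client exit right
  # after the handshake instead of waiting for input.
  openssl s_client -connect "$host:$port" -servername "$host" </dev/null 2>/dev/null |
    openssl x509 -noout -subject -issuer -enddate
}

# Example (needs network access to your cluster, so it is left commented out):
# show_served_cert my-api.myapp.com
```

If the subject that comes back is Traefik's self-signed default rather than your wildcard, the default certificate most likely didn't get picked up.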

And if you want to do things in YAML instead of a graphical interface like Portainer, the Ingress definition doesn't look too complicated either:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: traefik
      name: traefik
      namespace: my-api
    spec:
      rules:
        - host: my-api.myapp.com
          http:
            paths:
              - backend:
                  service:
                    name: my-api
                    port:
                      number: 8081
                path: /
                pathType: ImplementationSpecific

Once again, you might get by with only doing half of these steps in the newer versions of K3s with Traefik, but this should definitely be the right direction in which to look.

It could all be better, though

However, at the end of the day, I don't think that things are as easy or simple as they should be. For starters, configuring SSL/TLS shouldn't be a process where I have to muck about for hours, possibly ending up with some unnecessary steps (as might be the case above), or where each person has quite different ways of doing things, as other people stated in the linked GitHub discussion.

Contrast all of the actions above with how an equivalent Nginx configuration might look, with some additional configuration:

    server {
        listen 80;
        server_name my-api.myapp.com;
        return 301 https://$host$request_uri;
    }

    server {
        listen 443 ssl http2;
        server_name my-api.myapp.com;

        # Configuration for SSL/TLS certificates
        ssl_certificate /etc/pki/wildcard.myapp.com.crt;
        ssl_certificate_key /etc/pki/wildcard.myapp.com.key;

        # Disable insecure TLS versions
        ssl_protocols TLSv1.2 TLSv1.3;
        ssl_ciphers HIGH:!aNULL:!MD5:!3DES;
        ssl_prefer_server_ciphers on;

        # Proxy headers
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;

        # Reverse proxy for the API
        location / {
            resolver 127.0.0.11 valid=30s; # Docker DNS
            proxy_pass http://my-api:8081/;
            proxy_redirect default;
        }
    }

Even something like Apache, which is sometimes regarded as more cumbersome to operate, has similarly easy configuration:

    <VirtualHost *:80>
        ServerName my-api.myapp.com
        Redirect / https://my-api.myapp.com/
    </VirtualHost>

    <IfModule ssl_module>
        <VirtualHost *:443>
            Protocols h2 http/1.1
            ServerName my-api.myapp.com

            # Configuration for SSL/TLS certificates
            SSLEngine on
            SSLCertificateFile /etc/ssl/private/yourdomain.chained.crt
            SSLCertificateKeyFile /etc/ssl/private/myserver.key

            # Disable insecure TLS versions
            SSLProtocol all -SSLv2 -SSLv3 -TLSv1 -TLSv1.1
            SSLCipherSuite HIGH:!aNULL:!MD5:!3DES
            SSLHonorCipherOrder on

            # Proxy headers
            # Some already included: https://httpd.apache.org/docs/2.4/mod/mod_proxy.html#x-headers
            # Can set additional ones like:
            RequestHeader set "X-Forwarded-Proto" expr=%{REQUEST_SCHEME}

            # Reverse proxy for the API
            ProxyPass "/" "http://my-api:8081/"
            ProxyPassReverse "/" "http://my-api:8081/"
        </VirtualHost>
    </IfModule>

The TLS versions and proxy headers are examples of how you might need to do some additional work that can be abstracted away from you by certain ingress controller defaults at times, though of course you'll still need to check that.

Summary

I will admit that personally I prefer just using a traditional web server like Apache, Nginx or even Caddy running as containers in my clusters and binding them to ports 80 and 443 (as well as any custom ones, if needed), which is pretty easy to do with Docker Swarm, but is harder in Kubernetes (due to default port ranges and ingress controllers typically having some bespoke configuration).

Because of this, I'd still advise people not to go against the grain and instead just use the Ingress abstraction that Kubernetes provides, even if it means doing a bit of extra work. On the bright side, it can mean a standardized approach to things that would otherwise make you deal with web server restarts, validating bunches of configuration and so on. This sort of standardization is actually good, not just because it can abstract certain details away from you (as long as you don't want to see them and the abstraction actually works), but also because it allows tools like Portainer to interface with these formats. The bunch of YAML that Kubernetes gives you is admittedly one of its better aspects, as are many of the built-in resource types.

Of course, in a sense the K3s project opted for not the most popular choice themselves. While Traefik is a good and promising project, not picking Nginx as the default ingress means that there will be slightly less information available about how to use and customize it. For example, as far as I can tell, the whole TLSStore resource type is Traefik specific, a CRD that you wouldn't otherwise even be familiar with. But hey, it's still usable for the most part.
