How to use Nginx to proxy your front end and back end


Recently I split up a monolithic project at work into a separate front end and back end, and decided to containerize them separately. This was all achievable thanks to Nginx, and this article attempts to explain both why and how to do something like that!

I figured that it would be nice to talk about the benefits, as well as list some reasons for doing this in the first place. In my eyes, if you can do something like this at the very start of your project, you'll benefit greatly in the long run, but even in established projects it can bring lots of consistency and convenience across stacks.

But first, let's talk about some of the reasons why I even looked in this direction, and the problems I faced otherwise.

Why make these changes?

Previously, the project was a large monolith, a Java application, that was deployed either as a .war, .ear or .jar file and contained all of the resources needed to run it inside of that application container. Now, this might sound pretty good, since you just have one deployment for any given version.

However, a few problems surfaced over time with this approach, which complicated things:

- first, the build was actually one long and sequential string of operations, since you had to build the front end files for distribution, and only compile the application & include these resources afterwards, which slowed things down noticeably

- furthermore, someone had decided to use the frontend-maven-plugin, which caused certain issues with the toolchain, especially when developers wanted to run Node/npm locally, where version mismatches led to conflicts

- the decision was made to migrate the back end from Spring Boot 1.5 to Spring Boot 2, which changed configuration in places, so suddenly the logic to serve static files broke and needed to be fixed

- not only that, but the implementation for handling the request paths and returning these files was specific to Spring Boot. What if another framework were to be used? What if there was a Ruby project that needed something similar? Simply put, these skills wouldn't carry over, since the implementation of serving the front end files was coupled to the back end technology

- speaking of consistency and ease of use, this also extended to local development, where the app needed to serve the front end resources dynamically (e.g. change a file, see the changes after page reload), which necessitated additional configuration

- by the way, configuring the project directories in most IDEs can be problematic: for example, if you have application files under /src/main/resources/static-web-assets, then ignoring that folder because you want to edit just the Java code with IntelliJ, while editing just the web assets with WebStorm, will take some work

- eventually the decision was made to migrate from AngularJS to Vue, since the former is essentially EOL software; this was problematic, because there was no way to run both side by side and keep the old UI working alongside the new one, while having the same backing API

So, with the need to upgrade various components of the app while in the middle of modernizing it and introducing containers, I also decided to try out an alternative approach: using a separate Nginx instance, instead of trying to push everything into the app and forcing it to manage and serve static files.

The implementation and its implications

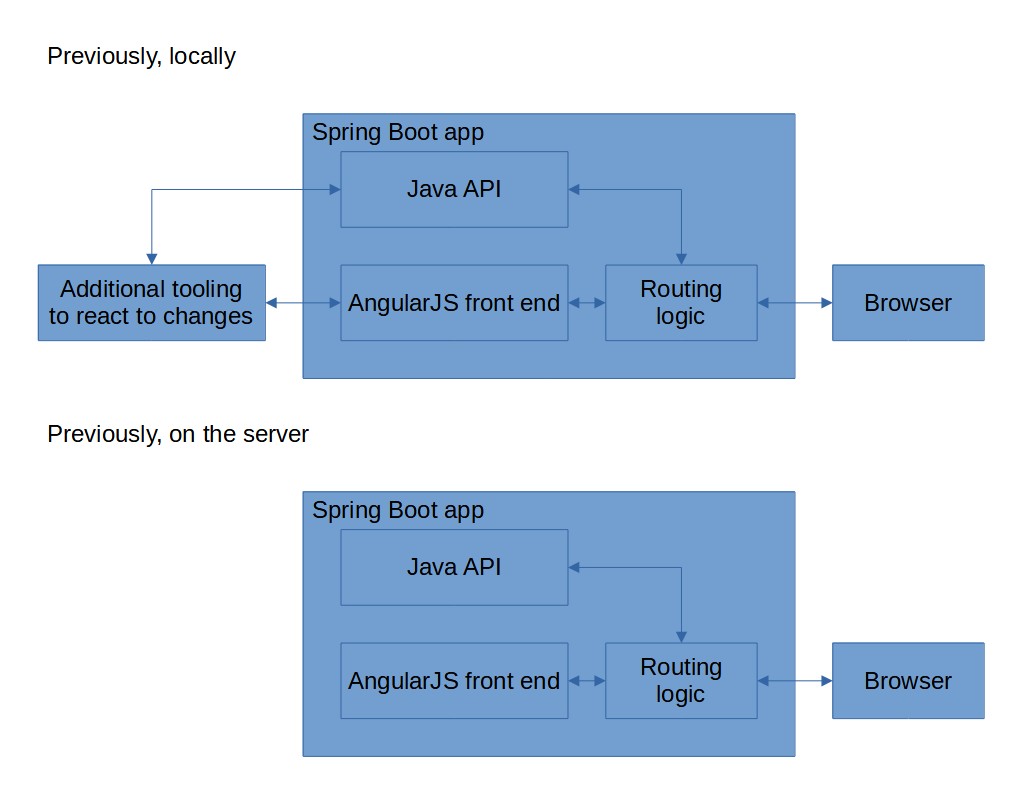

First, let's have a brief look at how things worked previously:

At a glance, it doesn't seem too hard from an ops perspective, actually! As stated previously, you just have one pretty large Java application, which also contains the (slowly) compiled front end resources and allows distributing them. However, as many already know, Java is a fickle mistress and can (and did) cause many problems with doing exactly what we wanted.

In addition, there was also a need for more tooling: for example, if you were to change a SASS file and the corresponding CSS were re-generated as a consequence, surely you'd want to serve it immediately from the file system/unpacked application working directory when the browser reloads the page, right? Well, that's not really the case with the packaged app, where no such recompilation is done and everything is always served out of the app files.

Without even getting into managing SSL/TLS certificates, caching, additional access controls and whatnot, I'll just state that there are plenty of things like this in most frameworks and technologies: things that are doable, but slow to implement, and perhaps better handled by alternatives that solve these problems more efficiently.

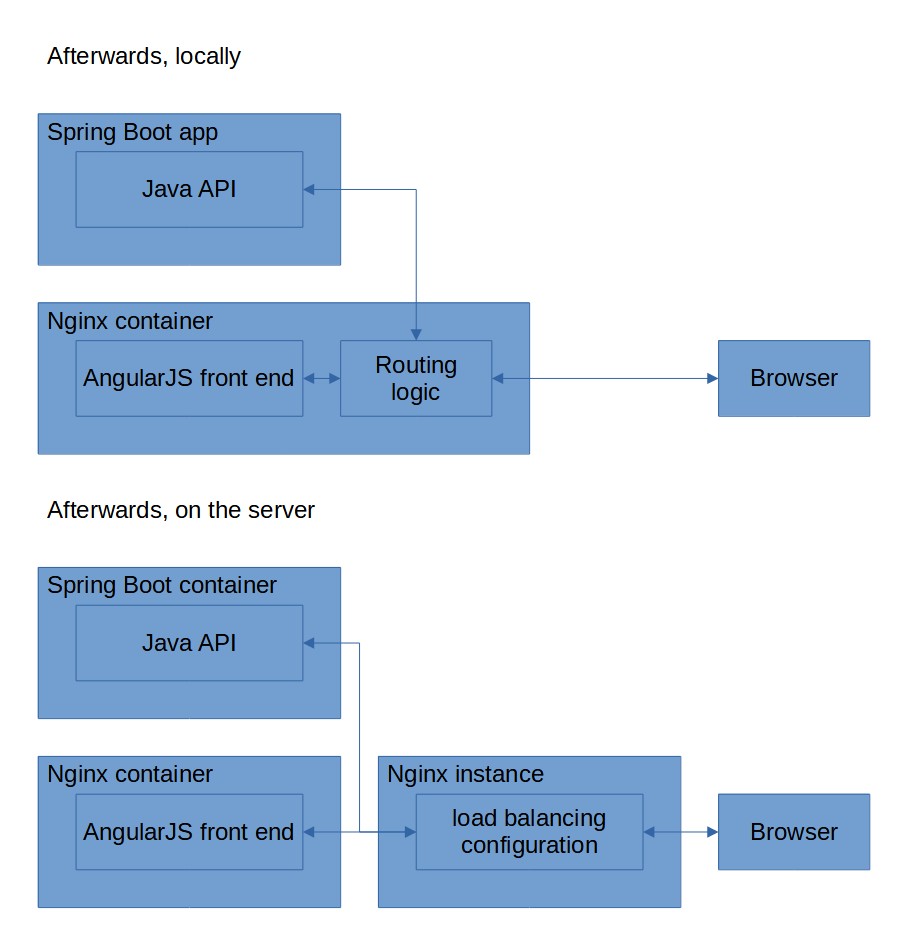

To see what I mean, let's have a look at the topology of everything after my changes:

The biggest change you'll notice is that the additional tooling is now gone, since we no longer need it. Why? Because Nginx lets us serve files straight out of the file system pretty easily (and we can just do that after SASS is processed, as opposed to fiddling around with configuring the same behaviour inside of the app, or redeploying the entire app upon changes, which we really shouldn't do).

Also, not only have we freed ourselves from needing to work with a lot of Java framework configuration, but we can also integrate this very same approach into almost any tech stack, be it Ruby, PHP, .NET, Go, Python or anything else for the back end. Of course, the same also applies to the front end: we can migrate our old AngularJS codebase over to Vue, React, Angular or even Svelte!

The front end doesn't know or care much about the back end and vice versa (well, of course, you still might have to think about CORS and XSS here and there, but that's a tad different). Now both of them can be a bit simpler, and Nginx (or even Apache, if you feel so inclined) can take over the heavy lifting for them. And, in my personal experience, I've found that Nginx largely does one thing and does it well: it's a pretty good web server, and as such isn't an absolute pain to configure and manage!

Of course, you'll notice some peculiarities, such as us using just one Nginx instance locally whereas we have two on the server. That's because we also run Nginx on our ingress, which I felt could also be included in the graph to illustrate the point. Our new architecture allows us to introduce more components as we please: locally we get the ease of use and customizability of a single Nginx container, whereas on the server the container that serves static files stays separate from the one handling load balancing and SSL/TLS termination.

That, in turn, can mirror how things will look in production or in other environments, be it with Nginx or something else. Assume that this is a made up example, though many organizations out there find Nginx more than sufficient as their prod load balancer, while some prefer to go the SaaS/PaaS route here.

Allow me to explain; perhaps some code is finally in order...

Local setup

Locally, all that we need is essentially the following nginx.conf to serve the static assets from our front end project directory and proxy /api/ requests over to the Java app:

```nginx
# Example of front end app container, but with reverse proxy to API.
user nginx;
worker_processes auto;

error_log /var/log/nginx/error.log notice;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    keepalive_timeout 65;
    gzip on;

    server {
        listen 80;

        # Example of reverse proxy, separate front end and back end
        location /api/ {
            resolver 127.0.0.11 valid=30s; # Docker DNS
            proxy_pass http://host.docker.internal:3000/api/; # Local back end
            proxy_redirect default;
        }

        # Serve the built front end assets
        location / {
            index /index.html;
            root /usr/share/nginx/html/;
            try_files $uri $uri/index.html =404;
        }
    }
}
```

Now, this is a fairly basic setup, and you'll find that a lot of it is copied from the Nginx defaults. The interesting bits are inside the server block: there we declare that we'll serve some files from the local file system, but proxy the API over to the app, using host.docker.internal to reach the back end running on the host OS from inside the Nginx Docker container.
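To make the routing in that server block a bit more concrete, here is a rough Python model of what Nginx does with a request path under this config: among the prefix locations, the longest match wins; a proxy_pass that carries a URI part (like /api/ here) replaces the matched prefix with that URI; otherwise the try_files candidates are checked on disk in order. The function names and the dict-based "config" are made up for illustration, and the model ignores regex locations, redirects and query strings, so treat it as a sketch rather than how Nginx is implemented.

```python
import os
import tempfile

# Hypothetical, heavily simplified model of the local server block.
# Only plain prefix locations are modelled (no regex, "=" or "^~" modifiers),
# and paths are assumed to carry no query string.
LOCATIONS = {
    "/api/": {"proxy_pass": "http://host.docker.internal:3000/api/"},
    "/": {"root": "/usr/share/nginx/html/", "try_files": ["$uri", "$uri/index.html"]},
}


def match_location(path: str, locations: dict) -> str:
    """Among the prefix locations that match, the longest one wins."""
    matches = [prefix for prefix in locations if path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no location matches {path!r}")
    return max(matches, key=len)


def proxy_target(path: str, prefix: str, proxy_pass: str) -> str:
    """Build the upstream URL the way proxy_pass does."""
    scheme, rest = proxy_pass.split("://", 1)
    host, slash, uri = rest.partition("/")
    if not slash:
        # proxy_pass without a URI part: the request URI is passed on unchanged
        return f"{scheme}://{host}{path}"
    # proxy_pass with a URI part: the matched location prefix is replaced by it
    return f"{scheme}://{host}/{uri}{path[len(prefix):]}"


def try_files(path: str, root: str, patterns: list):
    """Return the first candidate that exists as a regular file under root, else None."""
    for pattern in patterns:
        candidate = pattern.replace("$uri", path)
        full_path = os.path.join(root, candidate.lstrip("/"))
        if os.path.isfile(full_path):
            return full_path
    return None


def route(path: str, locations: dict = LOCATIONS) -> tuple:
    prefix = match_location(path, locations)
    location = locations[prefix]
    if "proxy_pass" in location:
        return ("proxy", proxy_target(path, prefix, location["proxy_pass"]))
    found = try_files(path, location["root"], location["try_files"])
    # The trailing "=404" in try_files becomes a plain 404 status
    return ("file", found) if found else ("status", 404)


if __name__ == "__main__":
    print(route("/api/users"))  # ('proxy', 'http://host.docker.internal:3000/api/users')
    # Fake document root so the try_files branch can be exercised
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "about"))
        for name in ("index.html", "about/index.html", "app.js"):
            open(os.path.join(root, name), "w").close()
        local = {**LOCATIONS, "/": {**LOCATIONS["/"], "root": root}}
        for path in ("/", "/app.js", "/about", "/missing"):
            print(path, route(path, local))
```

Because location /api/ and proxy_pass .../api/ use the same prefix, the path reaches the back end unchanged; pointing proxy_pass at a different URI would rewrite it.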

Intermission: why run Nginx in a container, but not the Java app? Well, in my experience most development, regardless of tech stack (Java, PHP, all of the others I mentioned), involves quite a bit of debugging with breakpoints, and the container abstraction between you and what you want to debug sometimes gets in the way. Thus, I've found that a good compromise is to run your actual app locally but use containers for all of the supporting software, be it something like our Nginx, or maybe a local database or message queue.

But how to actually run the local Nginx? Well, thanks to Docker, it becomes as easy as running a simple Docker Compose file:

```yaml
# Docker Compose file for Nginx, that lets you proxy front end API requests in a transparent manner.
# Run with:
#   docker-compose -f docker-compose-nginx-local-dev-proxy.yaml up
# Clean up afterwards with:
#   docker-compose -f docker-compose-nginx-local-dev-proxy.yaml down
#   docker rm nginx-local-dev-proxy
version: "3.7"
services:
  nginx_local_dev_proxy:
    container_name: nginx-local-dev-proxy
    image: nginx:1.21.3
    ports:
      - target: 80
        published: 80
        protocol: tcp
        mode: host
    # On Linux, host.docker.internal is not defined by default;
    # with Docker 20.10+ you can map it to the host like this:
    # extra_hosts:
    #   - "host.docker.internal:host-gateway"
    volumes:
      # Here we mount the local configuration for the Nginx instance
      - "./nginx-local-dev-proxy-container.conf:/etc/nginx/nginx.conf"
      # Here we mount the actual source code (or you can mount the build output)
      - "./src:/usr/share/nginx/html"
    # Run the nginx-debug binary for detailed routing debug info
    # (the debug messages only show up if error_log is set to the "debug" level)
    command: [nginx-debug, '-g', 'daemon off;']
```

The most interesting bit here is the bind mounts, which forward our local files and directories into the container (in a manner of speaking), letting the container look at its own file system and see our files: the configuration for Nginx and the resource directory to serve in response to browser requests.
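One gotcha with these bind mounts: with the short host:container syntax, Docker typically creates a missing host path as an empty directory, so a typo in the config file name tends to surface as a confusing "mount a directory onto a file" error rather than a clear "file not found". A small preflight check before docker-compose up can catch that; the function below is a hypothetical helper, and the expected paths are simply the two mount sources from the Compose file above.

```python
from pathlib import Path

# Host-side sources of the bind mounts from the Compose file (relative to the project dir)
EXPECTED_MOUNTS = {
    "nginx-local-dev-proxy-container.conf": "file",
    "src": "dir",
}


def check_mounts(project_dir: str = ".", expected: dict = EXPECTED_MOUNTS) -> list:
    """Return a list of problems; an empty list means every mount source looks right."""
    problems = []
    for rel_path, kind in expected.items():
        path = Path(project_dir) / rel_path
        if not path.exists():
            problems.append(f"missing: {path}")
        elif kind == "file" and not path.is_file():
            # Often the leftover of an earlier run where Docker created a directory here
            problems.append(f"expected a file: {path}")
        elif kind == "dir" and not path.is_dir():
            problems.append(f"expected a directory: {path}")
    return problems
```

Running it from the directory that holds the Compose file and refusing to start when the list is non-empty is one way to use it; a shell check with test -f would do the same job.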

As stated before, this simple setup works for pretty much every tech stack out there. No longer do you need to mess around with Vue proxy configuration either, or anything like that! This is also basically the same setup that will run on the servers, which is excellent from a reproducibility standpoint. Run into problems with file uploads not letting you work with any files larger than 1 MB? Perfect, you can reproduce that locally and develop a fix for it right away!
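The 1 MB figure matches Nginx's default client_max_body_size of 1m: request bodies larger than the limit are rejected with 413 (Request Entity Too Large), and a value of 0 disables the check. The fix itself is a one-line directive in the http, server or location context, such as client_max_body_size 50m; (50m being an arbitrary example value). The Python helper below is only a hypothetical sketch for reasoning about the numbers; it handles plain byte counts and the k/m suffixes, not every size format Nginx accepts.

```python
# Hypothetical helper for reasoning about Nginx's client_max_body_size limit.
UNITS = {"k": 1024, "m": 1024 * 1024}
DEFAULT_CLIENT_MAX_BODY_SIZE = "1m"  # Nginx's documented default


def parse_nginx_size(value: str) -> int:
    """Convert an Nginx-style size such as '1m', '512k' or '2048' to bytes."""
    value = value.strip().lower()
    if value and value[-1] in UNITS:
        return int(value[:-1]) * UNITS[value[-1]]
    return int(value)


def rejected_with_413(body_bytes: int, limit: str = DEFAULT_CLIENT_MAX_BODY_SIZE) -> bool:
    """True if a request body of this size exceeds the limit (0 disables the check)."""
    max_bytes = parse_nginx_size(limit)
    return max_bytes != 0 and body_bytes > max_bytes


if __name__ == "__main__":
    five_mb = 5 * 1024 * 1024
    print(rejected_with_413(five_mb))         # True with the 1m default
    print(rejected_with_413(five_mb, "50m"))  # False after raising the limit
```

Keep in mind that every proxy hop has its own limit: raising it on the ingress alone won't help if the back end or another proxy in between still enforces a smaller one.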

Discover that your app doesn't want to work with load balancers in front of it (we had this with mTLS)? Better now than when trying to deploy to prod: you'll be able to address the issue right away, for example by replacing mTLS with something more modern like JWT.
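Part of why a token plays nicer with proxies is that it travels in an ordinary Authorization: Bearer header, which passes through TLS-terminating hops untouched, whereas an mTLS client certificate is only visible to the hop that terminates TLS unless that proxy forwards it somehow. As a rough illustration only, here is a stdlib-only Python sketch of issuing and verifying an HS256-signed JWT (HMAC-SHA256 over base64url-encoded header and claims); the secret and claims are made up, and a real app would use a maintained JWT library and also validate claims such as expiry.

```python
import base64
import hashlib
import hmac
import json


def _b64url(data: bytes) -> str:
    # JWTs use base64url encoding without padding
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the claims if the signature checks out; raise ValueError otherwise."""
    try:
        header_b64, claims_b64, signature_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Never let the token choose its own algorithm (e.g. "none")
        raise ValueError("unexpected algorithm")
    signing_input = f"{header_b64}.{claims_b64}".encode("ascii")
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    return json.loads(_b64url_decode(claims_b64))


if __name__ == "__main__":
    secret = b"hypothetical-shared-secret"
    token = sign_hs256({"sub": "user-42"}, secret)
    print(verify_hs256(token, secret))  # {'sub': 'user-42'}
```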

But how different are things on the server?

Server setup

It's almost the same, actually! For the actual static asset container, we simply remove the API proxy block (to minimize network hops, not strictly necessary) and the configuration therefore becomes:

```nginx
server {
    listen 80;

    # Serve the built front end assets
    location / {
        index /index.html;
        root /usr/share/nginx/html/;
        try_files $uri $uri/index.html =404;
    }
}
```

But where does the API proxying configuration go, then? To the ingress, because routing requests to the correct endpoint is now its job, at least in lieu of a dedicated service mesh with service discovery. Here's what that looks like:

```nginx
server {
    listen 80;
    server_name app.dev.my-company.com;
    return 301 https://$host$request_uri;
}

server {
    listen 443 ssl http2;
    server_name app.dev.my-company.com;

    # Configuration for SSL/TLS certificates
    ssl_certificate /etc/pki/.../dev.my-company.com.crt;
    ssl_certificate_key /etc/pki/.../dev.my-company.com.key;

    # Disable insecure TLS versions
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_prefer_server_ciphers on;
    ssl_ciphers HIGH:!aNULL:!MD5;

    # Proxy headers
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;

    # Example of Matomo analytics on the same domain
    location /analytics/matomo.js {
        resolver 127.0.0.11 valid=30s; # Docker DNS
        proxy_pass http://matomo:80/matomo.js;
        proxy_redirect default;
    }

    location /analytics/matomo.php {
        resolver 127.0.0.11 valid=30s; # Docker DNS
        proxy_pass http://matomo:80/matomo.php;
        proxy_redirect default;
    }

    # Example of reverse proxy, separate front end and back end
    location /api/ {
        resolver 127.0.0.11 valid=30s; # Docker DNS
        proxy_pass http://app-dev-backend:3000/api/;
        proxy_redirect default;
    }

    # Serve the built front end assets
    location / {
        resolver 127.0.0.11 valid=30s; # Docker DNS
        proxy_pass http://app-dev-frontend:80/;
        proxy_redirect default;
    }
}
```

For brevity's sake, I've excluded the boring Nginx bits like "worker_connections"; they can either be the same across all instances, or you can alter them on a case by case basis.
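The proxy headers are what let the back end see the original request, even though it only ever talks to the ingress. Per the Nginx docs, $proxy_add_x_forwarded_for is the client's X-Forwarded-For header with $remote_addr appended after a comma, or just $remote_addr if the client sent no such header. Below is a small Python sketch of that rule, plus a hypothetical helper for the back end side that picks the client address when a known number of trusted proxies sits in front of it; both function names and the trusted-hop count are assumptions for illustration.

```python
from typing import Optional


def proxy_add_x_forwarded_for(incoming_xff: Optional[str], remote_addr: str) -> str:
    """Mimic Nginx's $proxy_add_x_forwarded_for variable."""
    if not incoming_xff:
        return remote_addr
    return f"{incoming_xff}, {remote_addr}"


def client_ip(xff: str, trusted_proxies: int = 1) -> str:
    """Pick the client address from X-Forwarded-For, trusting only the last N hops.

    Entries further to the left were supplied by the client and can be spoofed.
    """
    hops = [hop.strip() for hop in xff.split(",") if hop.strip()]
    if not hops:
        raise ValueError("empty X-Forwarded-For header")
    return hops[max(len(hops) - trusted_proxies, 0)]


if __name__ == "__main__":
    # A client that tries to spoof its address by sending its own header
    header = proxy_add_x_forwarded_for("6.6.6.6", "203.0.113.7")
    print(header)             # 6.6.6.6, 203.0.113.7
    print(client_ip(header))  # 203.0.113.7
```

Frameworks usually have a setting for this (Spring Boot, for instance, can be told to honour forwarded headers), so a hand-written helper like this mostly serves to understand what that setting trusts.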

But here, we see the beauty of having such an ingress: it will be one of the first bits of request processing logic triggered for any incoming request, for any of the apps behind this ingress.

Thus, we can handle SSL/TLS here in a centralized manner, as well as implement any HTTP --> HTTPS redirects (if your site needs to be able to receive data from users), disable any TLS versions or ciphers that we think aren't safe, or add additional header processing logic.
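For a feel of what the port 80 server block in the ingress does, here is a rough Python stand-in built on http.server: every request gets a 301 to the same host and request URI, just over HTTPS. The class and helper names are hypothetical, the host handling is simplified (Nginx's $host also considers the request line and lowercases the name), and this is meant for poking at the behaviour locally, not as a replacement for the Nginx config.

```python
import http.server
import threading

SERVER_NAME = "app.dev.my-company.com"  # placeholder domain from the config above


class RedirectToHttps(http.server.BaseHTTPRequestHandler):
    """Roughly what `return 301 https://$host$request_uri;` does on port 80."""

    def do_GET(self):
        # Approximate $host: the Host header without its port, else the server name.
        # (Naive port stripping; IPv6 literals are not handled in this sketch.)
        host = self.headers.get("Host", SERVER_NAME).split(":")[0]
        # self.path is the raw request target, i.e. $request_uri including the query string
        self.send_response(301)
        self.send_header("Location", f"https://{host}{self.path}")
        self.send_header("Content-Length", "0")
        self.end_headers()

    # Nginx answers every method this way; GET and HEAD are enough for a sketch
    do_HEAD = do_GET

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def start_in_background(port: int = 0) -> http.server.HTTPServer:
    """Serve the redirect on 127.0.0.1 in a daemon thread; port 0 picks a free port."""
    server = http.server.HTTPServer(("127.0.0.1", port), RedirectToHttps)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Calling start_in_background(8080) and requesting http://127.0.0.1:8080/login?next=/api/me with a Host header of app.dev.my-company.com should return a 301 with Location https://app.dev.my-company.com/login?next=/api/me.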

Also, you'll notice that besides the previously described routing for our API and static resources, there are also paths for Matomo analytics. In practice, you could put additional rules next to them for things like APM solutions (e.g. user browsers sending error messages), session recording, entirely different API endpoints, or even micro front ends!

Even if you're currently not doing micro services, having this sort of flexibility and dead simple configuration out of the box is really nice to have!

Of course, there is another piece of our secret puzzle that I have yet to divulge: what does the actual build for the static assets look like? The contents of my Dockerfile describe it pretty nicely:

```dockerfile
# Dockerfile for a multi-stage build of the app: the first stage uses Node.js to build, the second stores the generated files for Nginx

# ========== BUILDER ==========
FROM registry.my-company.com:5000/my-project/nodejs AS builder
LABEL stage=builder

# Copy the dependency manifests first, so we can install dependencies before the source and let Docker caching speed up builds
WORKDIR /workspace
COPY package.json /workspace/package.json
COPY package-lock.json /workspace/package-lock.json
RUN npm install --registry=https://registry.my-company.com/repository/npm-group/

# Copy the source
COPY . /workspace

# Make sure the target directory for compiled files is empty
RUN rm -rf /workspace/target && mkdir /workspace/target

# Build app
RUN your-stack-cli build

# To make sure that the front end is building correctly
RUN echo "Front end resources after build:"
RUN ls -l target/

# ========== RUNNER ==========
# Simple Nginx image that contains the built front end files (on the server, the ingress routes API requests)
FROM registry.my-company.com:5000/my-project/nginx

# Copy files from builder
WORKDIR /usr/share/nginx/html
COPY ./nginx-generic-front-end-container.conf /etc/nginx/nginx.conf
COPY --from=builder /workspace/target /usr/share/nginx/html

# Health check example, different values can be specified when running
HEALTHCHECK --interval=20s --timeout=20s --retries=3 --start-period=120s \
    CMD curl --fail --silent http://127.0.0.1:80 | grep "Welcome to my app!" || exit 1

# We leave the Nginx run commands unchanged here
# Remember to set up the Nginx config in a bind mount, if your app needs that for any localization JSON files or something!
```

Now, I'm a fan of multi-stage builds to keep containers small, as well as of bits of configuration such as using our own local proxies for dependencies, instead of always downloading them from the Internet or relying on local caches which may or may not be available. Overall, though, it shouldn't be too hard to grok!
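The HEALTHCHECK simply asks whether the page loads with a success status and contains an expected marker string. If you'd rather not depend on curl inside the image, or want to run the same check from outside the container, a rough Python equivalent could look like this. The URL and marker are the placeholders from the Dockerfile, the function names are hypothetical, and the exit codes follow Docker's convention (0 healthy, 1 unhealthy). One difference from curl: urlopen follows redirects, while curl without -L does not.

```python
import urllib.request

URL = "http://127.0.0.1:80"
MARKER = "Welcome to my app!"


def is_healthy(url: str = URL, marker: str = MARKER, timeout: float = 5.0) -> bool:
    """Like `curl --fail URL | grep MARKER`: a success status and the marker in the body."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
    except OSError:
        # URLError/HTTPError (both OSError subclasses), refused connections and timeouts
        return False
    return marker in body


def main() -> int:
    # Docker treats exit code 0 as healthy and 1 as unhealthy
    return 0 if is_healthy() else 1
```

Wired up as a script ending in sys.exit(main()), it could back a HEALTHCHECK CMD, assuming the image has a Python interpreter, which a plain Nginx image may not.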

Summary

In summary, I believe that there is a lot of value in keeping things simple, as far as any single piece of software is concerned. Web servers are generally pretty good at serving files, whereas your APIs shouldn't necessarily attempt to fill that role, and should instead be free to focus on what they do best: bringing your business rules or cool application ideas to life!

In my eyes, this extends to how your services may be structured. You don't always have to do domain driven design (DDD) and have a thousand different services, but developing functionality to generate PDF reports or bills in a separate service can be a good idea, as can splitting your application's service boundaries along the lines of wildly different technical solutions and demands. Otherwise, you could find yourself unable to upgrade your huge monolith, because doing so would break your PDFs, and surely you cannot do that! Thus, 9 out of 10 features would be held back by that one problematic one, whereas with separate services you could just note down that the 10k line PDF service should perhaps be rewritten to work with new tech, and be free to upgrade the other services in the meantime!

This also extends pretty clearly to the front end technologies: after my changes we are free to replace AngularJS (or, you know, jQuery, or any other dated technology you can imagine) with anything else, while still keeping the old UI up should the need arise, for example for the pages that still haven't been ported over. Admittedly, with modern day SPAs that might not always be easy (e.g. data in cookies vs browser memory) and might need code changes, but at least our hands are not tied as far as the topology goes, and if we ever wanted to look at micro front ends, we could do that too!

Oh, and developers no longer need to wonder whether the replacement for an expired SSL/TLS key/certificate should be in a .p12, .pfx, .jks or some other format that a particularly finicky Java app server or framework needs: Nginx has got your back there as well! Hell, if you wanted to, you could even integrate with Let's Encrypt with the help of certbot for free SSL/TLS certs that are automatically renewed for your public applications (or use the DNS-01 challenge for non-public ones). Or even use mod_md with Apache2, if you want.

In short, I'm pretty happy about choosing this approach: introducing a web server (or two) into the mix can have plenty of tangible benefits!

Disclaimer: if some of the project specifics seem inconsistent (e.g. 3000 for HTTP, more indicative of Node than Java) or fake (the domains) that's because they are. This is just an example, but it should still be applicable for most realistic circumstances.
