Increase container build speeds when you use apt


It's no secret that I use containers for most of my workloads, due to the reproducibility and standardization that they provide. I've also gone a step further than most folks, since I base all of my container builds on Ubuntu, actually getting pretty close to the old joke of: "If it works on your machine, we'll just have to ship it." In other words, if I run Ubuntu locally and build my containers by installing packages with apt instead of various "hacks" like adding pre-built binaries, then I can get pretty much the same development setup, even without using containers for local development (remote debugging is sometimes a pain).

However, while I avoid the issue of using something like Alpine Linux (which I still think is great) for my containers and having to "pretend" that it's going to be close enough to a local dev setup until I run into issues, there is one thing that still needs a bit of talking about: performance. So, how do I keep my build times reasonable without optimized distros like Alpine, all in the name of simplicity, consistency and productivity?

It's actually not that hard, so let's jump in.

My current setup

For starters, here's a quick refresher on the setup. Instead of using ready-made images from Docker Hub with a mishmash of various distro versions and customizations, I only need the base Ubuntu image, which I then customize further with some tools of mine and use as the base for all of my other images. Here's an example of the "prod" builds, which contain images that I might deploy directly:

I also have a lot of "dev" images, which contain the runtimes for various languages and other tools that I might want to run, such as PHP running on top of either the Apache or Nginx image, a Java or .NET runtime, maybe Node and Python and so on:

Now, there are some exceptions to this rule, so I don't lose my sanity: for images like MariaDB, PostgreSQL, MinIO, Redis, RabbitMQ and other turnkey pieces of software, I just re-host the excellent Bitnami images for my own needs. This is done because they have pretty sane defaults and similar configuration for all of them.

These builds largely happen in the background, either once per month (for the "prod" and "hosted" images) or once per week (for the "dev" images). The difference comes from my separate cleanup policies for old images: the "dev" images are likely to take up way more space (because they're kept alongside regular CI output), so rebuilding them more often and getting rid of the old versions makes sense in my case:

In total, the setup looks a bit like the following:

What you'll immediately notice is that I use Sonatype Nexus almost as a centerpiece for the whole setup. This has a number of benefits: I can cache remote packages there and thus use all of my server's I/O for my own needs, without worrying about something like Docker Hub rate limits, or about bad performance if I ever need to pull lots of apt packages, npm packages or whatever else:

Not only that, but I also get a bit of added privacy (because I can protect whichever repo I care about) and can also use all of the storage available to my server, without having to pay for hosting these elsewhere. However, the build performance could be improved. Let's look at some of the common things that one could do.
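For apt, the piece that routes everything through such a proxy is the sources.list that the base image copies into place (the `COPY ./ubuntu/etc/apt/sources.list` step in the Dockerfiles below). The post doesn't show that file, so the following is only a sketch: `make_sources_list` is a hypothetical helper, and the URL assumes a Nexus "apt (proxy)" repository exposed at the usual `https://<host>/repository/<name>/` path and set up to serve these suites.

```shell
# Hypothetical helper: print an Ubuntu sources.list that sends apt through a
# caching proxy (for example a Nexus "apt (proxy)" repository in front of
# http://archive.ubuntu.com/ubuntu/) instead of the public mirrors.
make_sources_list() {
  local base_url="${1%/}"           # strip a trailing slash, one is added below
  local codename="${2:-focal}"      # focal matches the base image used in this post
  local components="main restricted universe multiverse"
  local suite
  for suite in "$codename" "$codename-updates" "$codename-security"; do
    echo "deb ${base_url}/ ${suite} ${components}"
  done
}
```

Running something like `make_sources_list https://nexus.example.com/repository/ubuntu-proxy focal > ubuntu/etc/apt/sources.list` before the build would produce the file that the `COPY` step picks up (the host name is a placeholder).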

Disabling fsync when using apt

The other day, someone on Hacker News was saying that apt is a slow package manager; however, someone in the comments pointed out that this is due to its focus on robustness, preventing issues in the case of a power failure as much as possible:

Well, guess what? That's a great feature to have when running the OS directly on a server (or in a VM), but not something that you care very much about when building containers! Because of that, turning off fsync might actually be a good idea for container builds: if a build were to fail, it could always be restarted later and would just report its status as failed, which is no big deal. On Debian and Ubuntu, we can turn fsync off with the interestingly named eatmydata tool.
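eatmydata works by preloading a small library (through `LD_PRELOAD`) that turns `fsync()` and related sync calls into no-ops for the wrapped command and for the child processes it starts, such as the dpkg runs launched by apt-get. If you share install scripts between images that may or may not have it installed, a small wrapper can fall back gracefully. This is a sketch with a hypothetical function name, not something from the post itself:

```shell
# Hypothetical helper: run a command under eatmydata when it is installed.
# eatmydata sets LD_PRELOAD so that fsync() and friends become no-ops for the
# command and its children; without it, the command runs with fsync intact.
without_fsync() {
  if command -v eatmydata >/dev/null 2>&1; then
    eatmydata "$@"
  else
    "$@"
  fi
}
```

In a Dockerfile you'd typically just call `eatmydata` directly, as in the examples below; the wrapper mainly helps scripts that run in images where it might be missing, e.g. `without_fsync apt-get -yq install rsync`.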

So, what does that look like? Here's my base Ubuntu image, where I'll install it. Up until now, its Dockerfile looked a bit like this:

```dockerfile
# Switched from Alpine to Ubuntu, because the EOL is 2025.
FROM docker-proxy.registry.kronis.dev:443/ubuntu:focal-20220801

# Disable time zone prompts etc.
ARG DEBIAN_FRONTEND=noninteractive

# Time zone
ENV TZ=Europe/Riga

# Use Bash as our shell
SHELL ["/bin/bash", "-c"]

# Bootstrap ca-certificates, so we can use HTTPS for our own repositories.
RUN apt-get update && apt-get install -y \
    ca-certificates \
    && apt-get clean && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Use an Ubuntu package mirror
COPY ./ubuntu/etc/apt/sources.list /etc/apt/sources.list

# Run updates on base just to make sure everything is up to date
RUN apt-get update && apt-get upgrade -y && apt-get clean && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Some base software, more to be added
RUN apt-get update && apt-get install -y \
    curl \
    wget \
    nano \
    net-tools \
    inetutils-ping \
    dnsutils \
    lsof \
    unzip \
    zip \
    git \
    gettext \
    supervisor \
    rsync \
    && apt-get clean && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Print versions
RUN cat /etc/*-release
```

But now, I install eatmydata as a package and can use it for all further apt invocations, both in this image and in any images based on it:

```dockerfile
# Switched from Alpine to Ubuntu, because the EOL is 2025.
FROM docker-proxy.registry.kronis.dev:443/ubuntu:focal-20220801

# Disable time zone prompts etc.
ARG DEBIAN_FRONTEND=noninteractive

# Time zone
ENV TZ=Europe/Riga

# Use Bash as our shell
SHELL ["/bin/bash", "-c"]

# Bootstrap ca-certificates, so we can use HTTPS for our own repositories.
# Also install eatmydata for better performance when using apt
RUN apt-get update \
    && apt-get -yq --no-upgrade install \
    ca-certificates \
    eatmydata \
    && apt-get clean \
    && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Use an Ubuntu package mirror
COPY ./ubuntu/etc/apt/sources.list /etc/apt/sources.list

# Run updates on base just to make sure everything is up to date
# Also, use eatmydata to disable fsync
RUN eatmydata apt-get update \
    && eatmydata apt-get -yq upgrade \
    && eatmydata apt-get clean \
    && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Some base software, more to be added
RUN eatmydata apt-get update \
    && eatmydata apt-get -yq --no-upgrade install \
    curl \
    wget \
    nano \
    net-tools \
    inetutils-ping \
    dnsutils \
    lsof \
    unzip \
    zip \
    git \
    gettext \
    supervisor \
    rsync \
    && eatmydata apt-get clean \
    && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Print versions
RUN cat /etc/*-release
```

Some slight formatting aside, it's a very simple change that has the potential to improve your build times when dealing with slow I/O. However, will it actually help us? I have some graphs below that go into detail, as well as a little writeup about them, but it should be said that this is likely to have the biggest impact when you're dealing with slow disks. Because my servers use SSDs for the boot drives and also for the storage that's needed to build and store containers, there is little benefit to be found here, for the most part.

Adding this optimization might make things better in some circumstances and will almost never make things worse, so it's nice to have regardless. How much it helps will depend on the environment you build your containers in.
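Since the benefit depends so much on the environment, it's worth timing the same apt step with and without eatmydata on your own hardware. A rough sketch, assuming GNU `date` (which supports the `%N` nanoseconds format); `elapsed_ms` is a hypothetical helper name:

```shell
# Hypothetical helper: print how many milliseconds a command took, discarding
# its output, and return the command's own exit status.
# Assumes GNU date, where %N expands to nanoseconds.
elapsed_ms() {
  local start end status
  start=$(date +%s%N)
  "$@" >/dev/null 2>&1
  status=$?
  end=$(date +%s%N)
  echo $(( (end - start) / 1000000 ))
  return "$status"
}
```

For example, compare `elapsed_ms apt-get -yq install rsync` with `elapsed_ms eatmydata apt-get -yq install rsync`, each in a fresh container, since installing the same package a second time on one system does nothing. A few repeated runs per variant will tell you more than a single measurement.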

Disabling cache cleanup, at the expense of bigger containers

Another thing that jumps out at me is that I actually have to call apt multiple times when building any of the container images that I need. You see, I might install some packages for Ubuntu, then some packages for Apache and then some more to get PHP working with Apache: while this results in 3 separate container images which I use in different situations, it still means calling apt 3 times. This wouldn't be a problem in and of itself, but up until now I retrieved the package lists each time and cleaned them up afterwards, which has a certain overhead (all so I wouldn't store an extra 50-100 MB in the image).

But what if it's not worth it? Well, I can alter my Ubuntu image further, to not clear up the package cache and other related directories, so my Ubuntu Dockerfile would basically become the following:

```dockerfile
# Switched from Alpine to Ubuntu, because the EOL is 2025.
FROM docker-proxy.registry.kronis.dev:443/ubuntu:focal-20220801

# Disable time zone prompts etc.
ARG DEBIAN_FRONTEND=noninteractive

# Time zone
ENV TZ=Europe/Riga

# Use Bash as our shell
SHELL ["/bin/bash", "-c"]

# Bootstrap ca-certificates, so we can use HTTPS for our own repositories.
# Also install eatmydata for better performance when using apt
RUN apt-get update \
    && apt-get -yq --no-upgrade install \
    ca-certificates \
    eatmydata \
    # Only the package lists for my own repositories are kept, not the public ones
    && apt-get clean \
    && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Use an Ubuntu package mirror
COPY ./ubuntu/etc/apt/sources.list /etc/apt/sources.list

# Run updates on base just to make sure everything is up to date (also pull in proxy)
RUN eatmydata apt-get update \
    && eatmydata apt-get -yq upgrade

# Some base software, more to be added
RUN eatmydata apt-get -yq --no-upgrade install \
    curl \
    wget \
    nano \
    net-tools \
    inetutils-ping \
    dnsutils \
    lsof \
    unzip \
    zip \
    git \
    gettext \
    supervisor \
    rsync

# Print versions
RUN cat /etc/*-release
```

(Note that I still clean the cache for the public packages before adding my own repositories, because I needed to install ca-certificates and eatmydata first. However, unlike in the previous examples, there's no cleanup after installing packages from my own repositories at the end.)

This also applies to any other images that I might build. For example, my .NET image went from the following:

```dockerfile
# We base our .NET 6 image on the common Ubuntu image.
FROM docker-prod.registry.kronis.dev:443/ubuntu

# Disable time zone prompts etc.
ARG DEBIAN_FRONTEND=noninteractive

# Time zone
ENV TZ=Europe/Riga

# Use Bash as our shell
SHELL ["/bin/bash", "-c"]

# Bloated packages for installing dependencies
RUN apt-get update && apt-get install -y \
    apt-transport-https \
    && apt-get clean && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Get MS repos
RUN wget https://packages.microsoft.com/config/ubuntu/20.04/packages-microsoft-prod.deb -O packages-microsoft-prod.deb && dpkg -i packages-microsoft-prod.deb && rm packages-microsoft-prod.deb

# Install .NET 6 finally
RUN apt-get update && apt-get install -y \
    dotnet-sdk-6.0 \
    && apt-get clean && rm -rf /var/lib/apt/lists /var/cache/apt/*

# Print versions
RUN dotnet --version
```

To a shorter and more optimized version:

```dockerfile
# We base our .NET 6 image on the common Ubuntu image.
FROM docker-prod.registry.kronis.dev:443/ubuntu

# Disable time zone prompts etc.
ARG DEBIAN_FRONTEND=noninteractive

# Time zone
ENV TZ=Europe/Riga

# Use Bash as our shell
SHELL ["/bin/bash", "-c"]

# Bloated packages for installing dependencies
RUN eatmydata apt-get -yq --no-upgrade install \
    apt-transport-https

# Get MS repos
RUN wget https://packages.microsoft.com/config/ubuntu/20.04/packages-microsoft-prod.deb -O packages-microsoft-prod.deb && dpkg -i packages-microsoft-prod.deb && rm packages-microsoft-prod.deb

# Install .NET 6 finally
RUN eatmydata apt-get update \
    && eatmydata apt-get -yq --no-upgrade install \
    dotnet-sdk-6.0

# Print versions
RUN dotnet --version
```

Don't get me wrong: I absolutely end up with larger container images this way, but I no longer have to update my package lists with every build, since the ones in the base image should be recent enough anyway. The only exception is when I'm adding third-party repositories, such as the ones Microsoft hosts for their .NET runtime. However, the size increase isn't too big, at least when compared to the actual sizes of the container images themselves.
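Skipping `apt-get update` in derived images only works if the base image actually kept its lists. One way to let a shared install script handle both kinds of base image is to check first; this is a sketch with a hypothetical helper name, and it deliberately doesn't try to judge how old the lists are:

```shell
# Hypothetical helper: succeed if apt package index files are already present,
# e.g. because the base image kept /var/lib/apt/lists instead of deleting it.
apt_lists_present() {
  local lists_dir="${1:-/var/lib/apt/lists}"
  # Index files are named like <host>_<path>_<suite>_<component>_binary-<arch>_Packages,
  # optionally with a compression suffix (for example .gz or .lz4).
  [ -n "$(find "$lists_dir" -maxdepth 1 -type f -name '*_Packages*' 2>/dev/null | head -n 1)" ]
}
```

A step such as `apt_lists_present || eatmydata apt-get update` would then only fetch the lists when they are missing. As noted above, after adding a third-party repository like Microsoft's you still need a real `apt-get update`, because its lists can't be in the base image yet.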

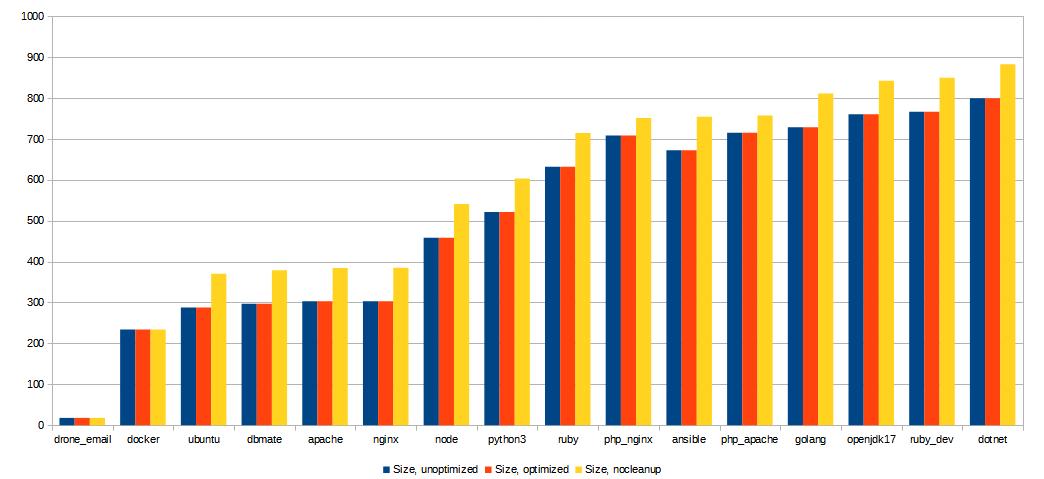

The results, container sizes

So, the first question to answer is: did the container sizes change in any serious way, as a consequence of these optimizations? I'll let the following graph speak for itself:

As you can see, the only images whose sizes didn't change are the ones that I use as-is: a utility image for Drone CI to be able to send me e-mails, as well as the Docker image that I use as a tool for the actual builds. The actual "dev" and "prod" images all ended up close to 100 MB larger, all of which comes from the apt lists (and possibly cache) being included in the Ubuntu base image. Now, is this a problem, though?

I'm inclined to say that it doesn't matter too much for me, because the full Ubuntu runtimes for PHP, Go, OpenJDK, Ruby and .NET are already ridiculously big by container standards, each weighing in at multiple hundreds of MB. If I wanted to optimize for sizes instead of simplicity and convenience, I'd probably go for Debian or Alpine based images with various build hacks. Furthermore, another good aspect is layer reuse: the base Ubuntu image comes in at 300-400 MB (with some dev tools included), which will only need to be fetched once regardless of how many of the other images you'll want to use on any given server - because the base is the same.
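To see how much of an image is taken up by apt's lists and cache (and therefore roughly what stops being deleted), you can measure the two directories that the cleanup step removes. A sketch with a hypothetical helper name, using `du -sk` so the result is in KiB:

```shell
# Hypothetical helper: report how many KiB apt's package lists and cache use.
# With no arguments it checks the two directories the cleanup step in this post
# removes; missing directories count as 0.
apt_cache_kb() {
  if [ "$#" -eq 0 ]; then
    set -- /var/lib/apt/lists /var/cache/apt
  fi
  local total=0 dir kb
  for dir in "$@"; do
    [ -d "$dir" ] || continue
    kb=$(du -sk "$dir" | cut -f1)   # du -sk prints "<KiB><TAB><path>"
    total=$((total + kb))
  done
  echo "$total"
}
```

Inside a running container, `du -sh /var/lib/apt/lists /var/cache/apt` shows the same information at a glance; the function just sums it into one number that is easy to compare between image variants.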

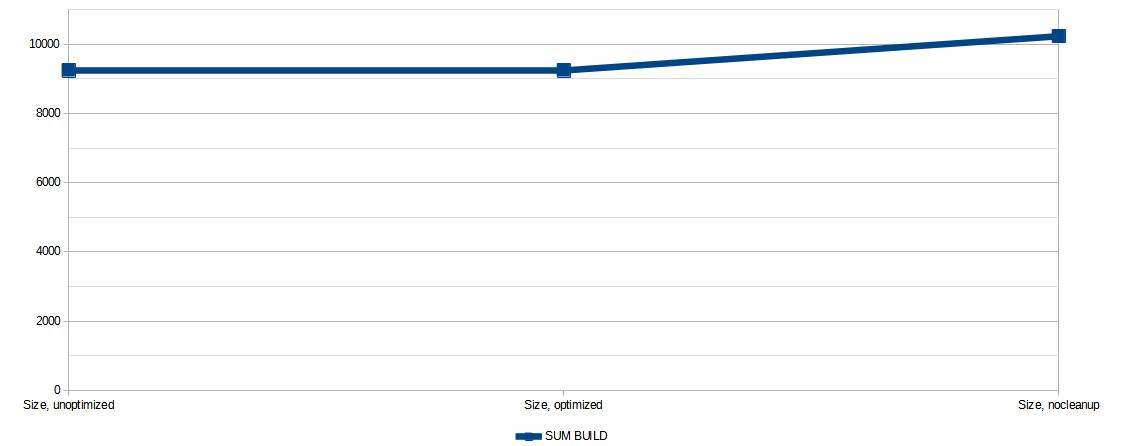

In short, eatmydata had no impact on image sizes, whereas no longer getting rid of the apt caches did add a considerable amount of data to the container images. Thankfully, that doesn't matter too much to me: if you look at the total size (sum) of the images, it's not that big of a change (which is made even less of an issue thanks to the aforementioned layer reuse):

For the folks that disagree, remember to clean up your apt caches when installing something, like so:

```dockerfile
# Bloated packages for installing dependencies
RUN eatmydata apt-get update \
    && eatmydata apt-get -yq --no-upgrade install \
    apt-transport-https \
    # Cleanup apt caches after build, if necessary
    && eatmydata apt-get clean \
    && rm -rf /var/lib/apt/lists /var/cache/apt/*
```

But what about the build times themselves?

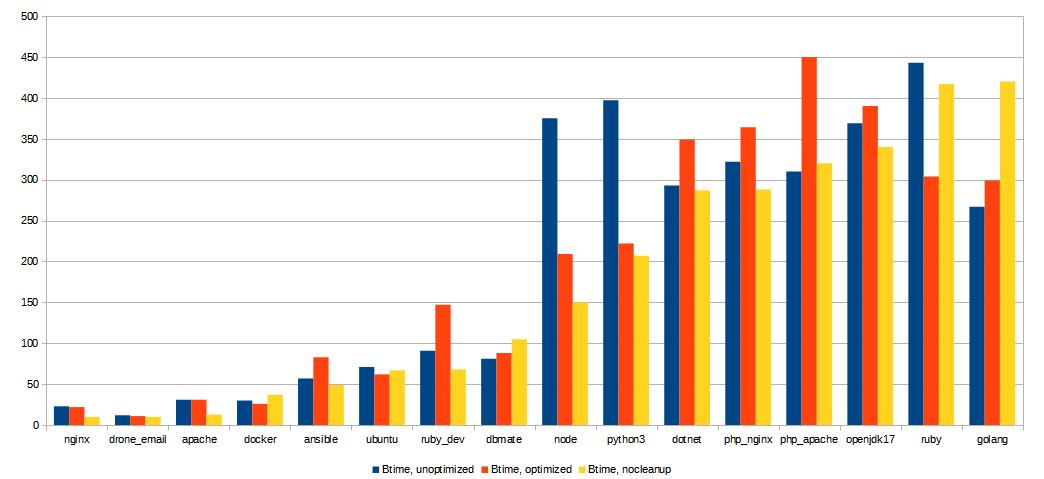

The results, build times

I have to concede that with regard to build times, the results are overall a bit of a mess. If I gave you a graph like the one above, it wouldn't make much sense:

Some of the builds got way faster and some got way slower, so what gives? The answer is simple: I parallelize my builds, but all of my homelab servers share the same Internet connection, which gives me around 60 Mbps down and 12 Mbps up, so it's easy for two container builds to fight over what little bandwidth there is. Normally this isn't a problem, because they can build while I'm still sleeping, but it's pretty horrible for gathering statistics, unless I want to disable parallelization and not do anything online while the data is being gathered.
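When individual timings depend on which parallel build happened to grab the bandwidth, summing them per configuration is the simplest way to compare runs. A minimal sketch, assuming you've exported the durations yourself as `image,seconds` lines (that layout is an assumption, not a Drone CI export format) and using a hypothetical helper name:

```shell
# Hypothetical helper: sum per-image build durations read from stdin as
# "image,seconds" lines. Lines whose second field isn't a whole number,
# such as a header row, are skipped.
sum_build_seconds() {
  awk -F',' '$2 ~ /^[0-9]+$/ { total += $2 } END { print total + 0 }'
}
```

Usage would be along the lines of `sum_build_seconds < with-eatmydata.csv` versus `sum_build_seconds < without-cache-cleanup.csv`, one file per configuration.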

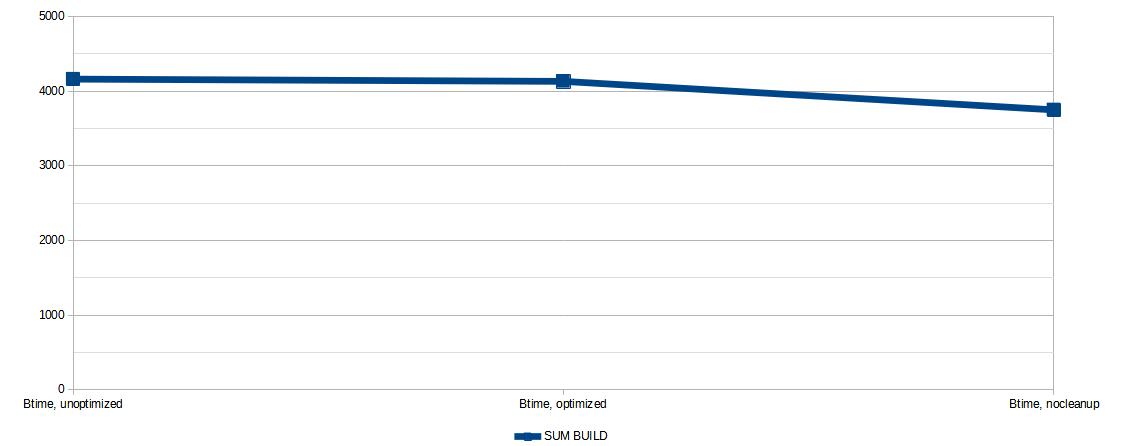

Since I'm lazy, let's just look at the aggregate (sum) build times:

Thankfully, this gives slightly clearer results: the build times weren't affected much by adding eatmydata (mostly because of the SSDs), but the total figures definitely decreased after I stopped cleaning up the caches. Note that I removed the upload overhead from these numbers, because uploading images over a spotty 12 Mbps link definitely messed with the results, so you'd probably want to check whether uploading an additional 100 MB once would cause problems for you.
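To put that extra ~100 MB into perspective on the link described above, here's a back-of-the-envelope estimate. It assumes decimal megabytes, a single transfer getting the whole link, and that the full 100 MB actually goes over the wire; the real amount is likely smaller, since image layers are compressed for transfer and package lists compress well:

$$
t_{\text{upload}} \approx \frac{100\ \text{MB} \times 8\ \text{bit/byte}}{12\ \text{Mbit/s}} = \frac{800\ \text{Mbit}}{12\ \text{Mbit/s}} \approx 67\ \text{s},
\qquad
t_{\text{download}} \approx \frac{800\ \text{Mbit}}{60\ \text{Mbit/s}} \approx 13\ \text{s}
$$

Thanks to layer reuse, this cost is paid roughly once for the shared base layers, not once per derived image.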

Summary

Aside from the realization that I'm bad at statistics due to circumstances mostly outside of my control, it seems like the optimizations did indeed provide benefits; however, they were fairly small. That's why it's always important to benchmark things with your own setup and look into what you actually need to optimize for and where the bottlenecks might be.

That said, there's also an added benefit to leaving in the package lists and cache: whenever I need to install a new package, I don't have to fetch the package index again from wherever the repository might be (the package itself still has to be downloaded). In my case that wouldn't be much of a problem, because I host my own apt proxy on Nexus, but for someone else it might prove to be a bit slow. It's mostly a matter of how often you want to install additional packages in these images (for example, when changing them, or when doing development work that needs native dependencies for building Ruby Gems or Python packages), weighed against the size increase, which might matter more if you had to pay for the bandwidth needed to launch each of your containers.
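If the answer differs between images, the trade-off can be made switchable per build instead of hard-coded. A sketch, assuming a hypothetical `KEEP_APT_CACHE` variable and helper name; the directories are parameters only so the logic can be exercised outside a real image:

```shell
# Hypothetical helper: delete apt's package lists and cache unless
# KEEP_APT_CACHE=true, so one install script can serve both trade-offs.
# It mirrors the post's "rm -rf /var/lib/apt/lists /var/cache/apt/*"; in a real
# image you'd run "apt-get clean" first, as the Dockerfiles above do.
clean_apt_dirs() {
  if [ "${KEEP_APT_CACHE:-false}" = "true" ]; then
    echo "KEEP_APT_CACHE=true, keeping apt lists and cache" >&2
    return 0
  fi
  local lists_dir="${1:-/var/lib/apt/lists}"
  local cache_dir="${2:-/var/cache/apt}"
  # ${var:?} aborts instead of expanding to "" and deleting the wrong path.
  rm -rf "${lists_dir:?}" "${cache_dir:?}"/*
}
```

In a Dockerfile, a build argument such as `ARG KEEP_APT_CACHE=true` is visible to later `RUN` steps as an environment variable, so `docker build --build-arg KEEP_APT_CACHE=false .` would switch back to smaller images, provided the helper is copied into the image first and sourced by each `RUN` step that uses it.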

Another aspect, something that I might otherwise leave out, is that these frequently repeated builds took up more space on my Nexus server's disk, because I needed to re-run all 3 of the build profiles ("prod", "dev" and "hosted") for each of these configurations, but thankfully the automated cleanup will take care of this shortly:

This is precisely why I like building my container base images either once a month or once a week, as well as getting rid of any older ones. I will have relatively new software, while still keeping the amount of storage used reasonable.

At the end of the day, my recommendations would be simple:

- If your container builds make a lot of fsync calls (as apt does), look into trying out eatmydata: it's pretty low risk, but will yield benefits in case you have slow I/O (think HDDs instead of SSDs).
- Decide for yourself what to do with the package cache (for example, if you build public images that someone else will download, you may want to optimize for smaller sizes instead).
