Simple ways to do backups


A while ago, there was a post on Hacker News asking for advice on how to do backups:

If someone is a less able, cognitively impaired, or perhaps very young - It would be good to see what advice HN would give them for protecting their data from ransomware, theft, or disaster.

That actually got me thinking: in software development, we sometimes count on external service providers to handle our backups, other times we expect platforms like VMware to do them for us, and other times we turn to a variety of complex tools, from ones that back up entire file systems to specialized tools for backing up database instances. But what do you do when you just want simple backups?

So, to that end, I shared a few of my personal thoughts and some information about my own backup solutions in that discussion, and also decided to turn it into a blog post of my own. Without further ado, let's get into it!

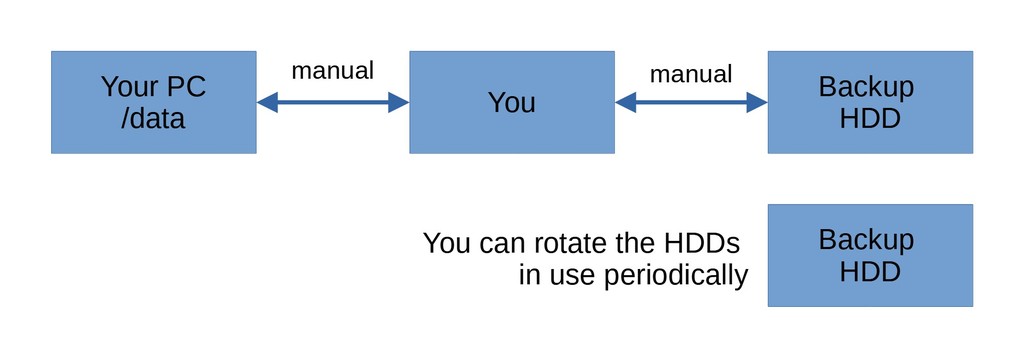

Approach #1: Simple file copies on multiple disks

The absolute simplest option that I can think of: have a few large HDDs or SSDs that you connect to your device with a USB enclosure, and copy over all of your data.

It's also reasonably simple to automate that process with something like cron and rsync. If automating it isn't possible or is too cumbersome, then just do it manually and keep a log somewhere.
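If you do go the automated route, here is a minimal sketch of what it might look like: a Python script that wraps rsync and appends to a log in the same format as the manual log example in this section. It assumes rsync is installed. The source directories, the log file name and the function names are hypothetical, so adjust them to your own setup.

```python
import datetime
import subprocess
from pathlib import Path

# Hypothetical settings: adjust to your own directories and log location.
SOURCES = ["/home", "/data"]
LOG_FILE = Path("backup-log.txt")


def build_rsync_command(sources, destination):
    """Build an rsync call that copies each source directory into destination.

    -a (archive mode) recurses and preserves permissions, timestamps and
    symlinks. Without a trailing slash, /home ends up as <destination>/home.
    """
    return ["rsync", "-a", *sources, destination]


def log_line(day, sources, disk_label):
    """Format a log entry like '2021.10.01 - copied over /home and /data to HDD1'."""
    return f"{day:%Y.%m.%d} - copied over {' and '.join(sources)} to {disk_label}"


def run_backup(destination, disk_label, sources=SOURCES, log_file=LOG_FILE):
    """Run rsync, then append a log line (only reached if rsync succeeded)."""
    # check=True raises CalledProcessError on a non-zero exit code.
    subprocess.run(build_rsync_command(sources, destination), check=True)
    with open(log_file, "a", encoding="utf-8") as log:
        log.write(log_line(datetime.date.today(), sources, disk_label) + "\n")
```

To use it, you would call something like `run_backup("/mnt/hdd1/", "HDD1")` from a script and schedule that script with a crontab entry such as `0 3 1 * * python3 /path/to/backup.py`, which runs at 03:00 on the first day of every month. The path is a placeholder.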

An example of such a log:

2021.10.01 - copied over /home and /data to HDD1

2021.09.01 - copied over /home and /data to HDD3

2021.08.01 - copied over /home and /data to HDD2

2021.07.01 - copied over /home and /data to HDD1

2021.06.01 - copied over /home and /data to HDD3

2021.05.01 - copied over /home and /data to HDD2

...

Pros:

- doesn't take any advanced knowledge of software or software packages, and doesn't even require a specific OS

- the backups are just files that you can view and copy just like you would with any other disk

- if the devices have cloud storage clients installed, the synced cloud data gets backed up transparently as well

Cons:

- somewhat tedious, especially if you don't set up a calendar reminder on your phone or something

- if you want redundant backups (say, on HDD1, HDD2, HDD3), then you'll need to copy the files multiple times

- all of your backups are probably in one place with this approach

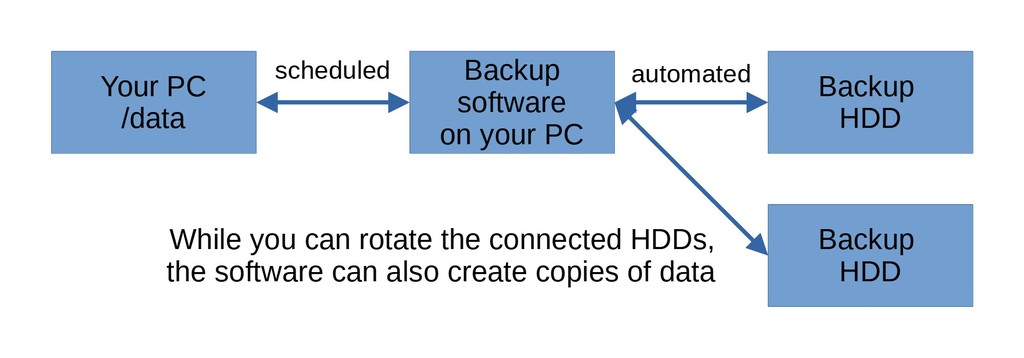

Approach #2: Consumer backup software with multiple disks

If a slightly more complex solution is okay, then you might use some of the software that's out there, built specifically for automating backups. Some of these solutions are paid and others are free, but the general idea is the same: you select the directories you'd like to back up, set a schedule and perhaps some rules on what to back up and how, and then let the software run on its own.
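As a rough illustration of what such software does under the hood, here is a minimal Python sketch. It copies the selected directories to several target disks and applies a few exclusion rules. It doesn't model any particular product. The exclusion patterns and the `back_up` function are hypothetical. Scheduling would still be handled by the OS (cron, Task Scheduler) or by the software itself.

```python
import shutil
from pathlib import Path

# Hypothetical "rules": glob patterns that should never be backed up.
EXCLUDE = shutil.ignore_patterns("*.tmp", "node_modules", ".cache")


def back_up(sources, destinations):
    """Copy every source directory onto every destination disk.

    Each source ends up as <destination>/<source name>. dirs_exist_ok=True
    (Python 3.8+) lets repeated runs overwrite an existing copy instead of
    failing. Files deleted from the source are NOT removed from the copy.
    """
    for dest in destinations:
        for src in map(Path, sources):
            shutil.copytree(src, Path(dest) / src.name,
                            ignore=EXCLUDE, dirs_exist_ok=True)
```

Writing every copy separately is exactly the "copy the files multiple times" cost from the previous approach. The difference is that the software pays it instead of you.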


Pros:

- allows automating backups, so human error is less of a factor

- keeps complexity manageable as the amount of data (or the number of locations) that you need to back up grows

Cons:

- depends on the platform; each OS has its own software for something like this

- still not networked, unless you use a NAS or something similar (which you might consider at this point)

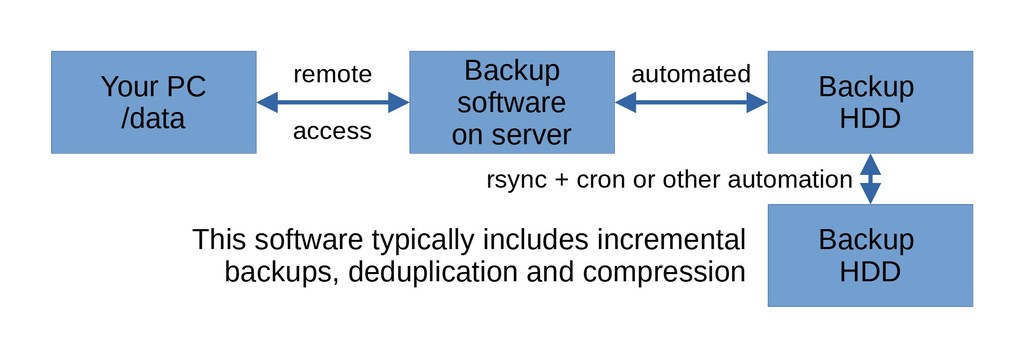

Approach #3: A server for backups over the network

Now, this is a bit more complicated, but since any regular computer can become a server and HDDs are pretty cheap anyway, spending a few days setting up a backup solution can sometimes be worth it, as long as the people involved can spend some time following guides or reading the documentation.

Essentially, you'd set up your own server with an OS of your choice (personally, I'd suggest Debian or Ubuntu LTS) and install a software package that connects to the devices you'd like to back up and pulls data from them on a set schedule. Alerting options are also available, should anything go wrong.
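To make the "pull" model concrete, here is a hand-rolled Python sketch. It is not how BackupPC or any other specific package works internally. The server runs rsync over SSH against each device and collects the hosts that failed, so you have something to alert on. It assumes SSH key access to every device and rsync installed on both ends. The host names, paths and the `HOSTS` inventory are hypothetical.

```python
import subprocess
from pathlib import Path

# Hypothetical inventory: SSH host name -> directories to pull from it.
HOSTS = {
    "laptop": ["/home/user/documents"],
    "desktop": ["/home/user/documents", "/srv/photos"],
}


def pull_commands(hosts, root):
    """Yield (host, command) pairs, one rsync-over-SSH call per directory."""
    for host, paths in hosts.items():
        for path in paths:
            # "host:path" makes rsync fetch from the remote machine over SSH;
            # each host gets its own subdirectory under root.
            yield host, ["rsync", "-a", f"{host}:{path}", f"{Path(root) / host}/"]


def pull_all(hosts, root, run=subprocess.run):
    """Pull from every host and return the set of hosts that had a failure.

    `run` is injectable so the logic can be exercised without a network.
    """
    failed = set()
    for host, cmd in pull_commands(hosts, root):
        (Path(root) / host).mkdir(parents=True, exist_ok=True)
        if run(cmd).returncode != 0:
            failed.add(host)
    return failed
```

A nightly cron job could call `pull_all(HOSTS, "/srv/backups")`. If the returned set isn't empty, the job would send an e-mail or push notification. How you deliver that alert is up to you.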


Pros:

- this can be a proper networked solution, which allows you to host it anywhere, away from your physical location

- there can be some pretty useful deduplication functionality built into the software, as well as support for various connection methods

- this can also give life to your old electronics, as opposed to contributing to e-waste

Cons:

- if the backups work with a "pull" model, you'll need to configure access to all of the devices that you'd like to connect to, which can be troublesome

- furthermore, depending on how all of this is set up, security becomes more of a concern, as it is with any networked solution

- at this point, you're basically maintaining a server of your own
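The deduplication mentioned among the pros usually boils down to content addressing. Each file is identified by a hash of its contents, so identical files are stored only once, for example the same photo backed up from both your phone and your laptop. Here is a simplified Python sketch of that idea. Real backup tools have their own, more elaborate on-disk formats. The `store` function and the flat pool layout are hypothetical.

```python
import hashlib
import shutil
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large files don't fill memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def store(paths, pool):
    """Copy files into a content-addressed pool, one copy per unique content.

    Returns a manifest mapping each original path to its digest; together
    with the pool, that is enough to put every file back later.
    """
    pool = Path(pool)
    pool.mkdir(parents=True, exist_ok=True)
    manifest = {}
    for p in paths:
        digest = file_digest(p)
        target = pool / digest
        if not target.exists():  # identical content is stored only once
            shutil.copy2(p, target)
        manifest[str(p)] = digest
    return manifest
```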

Further thoughts

There are more complicated setups out there, such as file systems with snapshotting, RAID to avoid individual disk failures, storage pools etc., which may or may not be worth it, based on the complexity vs the benefit that they provide.

NAS and cloud storage solutions can also be explored, as long as security isn't forgotten about: for most people, both can be good options and can be combined with any of the alternatives above.

Integrity is surprisingly hard to get right, and as long as you have multiple backups of the same files over time, it's not always worth worrying about too much. Ideally, manually check the files that matter to you in the backups, for example whether your master's thesis was backed up correctly.
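If you do want some automated help with integrity, a common approach is a checksum manifest. You record a SHA-256 hash of every file when the backup is made, then recompute and compare later. A minimal Python sketch follows, with hypothetical function names.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large files don't have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def make_manifest(root):
    """Map every file under root (as a relative path) to its SHA-256 hash."""
    root = Path(root)
    return {p.relative_to(root).as_posix(): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify(root, manifest):
    """Return the files that are missing or whose contents have changed."""
    root = Path(root)
    return [rel for rel, expected in manifest.items()
            if not (root / rel).is_file() or sha256_of(root / rel) != expected]
```

You would save the manifest next to the backup, for instance as JSON, and run `verify` against it every now and then. On Linux, `sha256sum` and `sha256sum -c` do the same job from the command line.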

Version control systems are also surprisingly nice for smaller files, like the aforementioned thesis: combining something like GitLab with the server backups described above adds more redundancy and versioning to the mix, using tools that most developers will already be familiar with.

Restoring backups is important, yet rarely given much thought. If you use something like BackupPC, you absolutely need to test whether you can actually download or restore the files that you've backed up into it; otherwise, the backups are useless.
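One way to make such a test less tedious is to restore into a scratch directory and compare the result with the original files byte for byte. Below is a minimal Python sketch of that comparison. How you perform the restore itself depends on your tool. `compare_trees` is a hypothetical helper.

```python
import filecmp
from pathlib import Path


def compare_trees(original, restored):
    """List files from `original` that are missing or different in `restored`."""
    original, restored = Path(original), Path(restored)
    problems = []
    for src in sorted(original.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(original)
        copy = restored / rel
        if not copy.is_file():
            problems.append(f"missing: {rel}")
        elif not filecmp.cmp(src, copy, shallow=False):  # compare contents, not just metadata
            problems.append(f"differs: {rel}")
    return problems
```

An empty list is what you're hoping for. Files that you legitimately changed after the backup was taken will, of course, show up as "differs".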

Lastly, you should remember the 3-2-1 rule of backups:

The 3-2-1 rule can aid in the backup process. It states that there should be at least 3 copies of the data, stored on 2 different types of storage media, and one copy should be kept offsite, in a remote location.

(from 3-2-1 Rule)

In my experience, that's why file- or archive-based solutions are perhaps the best option: they're easy to carry over to other storage media.
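The 3-2-1 rule is simple enough to write down as a check over an inventory of your copies. Here is a small Python sketch. It assumes the original data counts as one of the three copies, which is the common reading. The `Copy` record and the example media labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    name: str      # where this copy lives, e.g. "laptop SSD"
    medium: str    # type of storage, e.g. "ssd", "hdd", "sd-card", "cloud"
    offsite: bool  # kept away from your physical location?


def satisfies_3_2_1(copies):
    """At least 3 copies, on at least 2 media types, at least 1 offsite."""
    return (len(copies) >= 3
            and len({c.medium for c in copies}) >= 2
            and any(c.offsite for c in copies))
```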

Risk Analysis

Not only that, but the above options actually combine with one another rather nicely. Right now, I manually back up the data that matters to me on my phone (I connect it through USB and transfer the files monthly), use backup software to automatically propagate my files across multiple drives, and then use BackupPC to pull my files to a backup server, which also has multiple mirrored drives that are incrementally synchronized with rsync and cron. I also run a few Nextcloud instances, which copy my local files to my own VPSes, which in turn also happen to be pulled down by BackupPC.

On top of that, I copy some of my keychains and other important files to local storage media (SD cards or memory sticks), and I keep encrypted containers (VeraCrypt) on almost every device that I use. In my eyes, the simplest solutions that don't require specialized hardware are perhaps the best ones.

Thus, my risk analysis looks like this:

- if one of my HDDs/SSDs fails, I can pull a backup off of the spare drive

- if one of my devices fails (power surge etc.), I can pull a backup off of my backup server

- if my backup server fails (power surge etc.), I still have the most important data in Nextcloud, on my cloud VPSes

- if my cloud VPSes fail (banned etc.), I still have the data on various SD cards strewn around the place (as long as I can get VeraCrypt or KeePass working somewhere)

- if my cloud VPSes fail (banned etc.) and all of my local devices and SD cards fail (house burns down etc.), I still have the cached Nextcloud data and encrypted stuff on my smartphone, which I keep with me

And it's also possible to plan further contingencies, if needed:

- if all of the above were to fail, then it'd also be possible to periodically give backed-up drives to a trusted third party

- if no such party is available, then it's possible to just upload encrypted backups to all of the cloud providers that you know of, for redundancy

- if all of the cloud providers have also failed, then you probably have bigger problems and backups are no longer relevant
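The same kind of risk analysis can be written down as data. You list each copy along with the events that would destroy it, then check what survives a given combination of failures. Here is a simplified Python sketch loosely modelled on the setup above. The event names, and which copy each event takes out, are assumptions made purely for illustration.

```python
# Hypothetical inventory: each copy -> the events that would take it out.
COPIES = {
    "primary drive": {"disk failure", "power surge", "house fire"},
    "spare drive": {"power surge", "house fire"},
    "backup server": {"server failure", "house fire"},
    "Nextcloud on VPSes": {"vps ban"},
    "SD cards": {"house fire"},
    "smartphone": {"phone lost"},
}


def survivors(copies, events):
    """Return (sorted) the copies not affected by any of the given events."""
    return sorted(name for name, threats in copies.items()
                  if threats.isdisjoint(events))
```

For example, `survivors(COPIES, {"vps ban", "house fire", "power surge"})` leaves only the smartphone, matching the last scenario in the list. Adding "phone lost" to that set leaves nothing, which is where the further contingencies come in.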
