AI, artisans and brainrot

Date:

Recently, I went to a software development event in Germany, where, at the conclusion of one of the days, I was hanging out in a hotel room with a friend of mine. Earlier, she had been exploring ways to get full VMs running on her Linux system easily, for doing some development work and experimentation with Docker on them.

Another friend had suggested VirtualBox as a starting point.

I looked at what she was trying to do, and suggested that maybe Vagrant might also be a good fit, due to her wanting to make some of the output of her experimentation easily transferable and reproducible elsewhere (the Vagrant files might be a little bit better for that, than just some Bash scripts that talk to VirtualBox), in addition to wanting a bunch of stuff to be executed during startup.

Obviously, both of us were actually kind of new to Vagrant, due to me having found Docker (and OCI containers in general) more suitable for my needs, also using cloud VPSes for anything longer term and also Ansible for automating configuring my boxes as needed, whereas she hadn't worked that much on the infrastructure side and didn't really feel like paying for cloud resources for something like this. There's nothing wrong with VMs and her choice here was nice, especially because she felt like wanting to learn more about various setups and experiment.

That's where we hit our first roadblock. I had also told her about Docker Swarm as a pretty lightweight orchestrator that is simple to set up, use and operate. There is nothing wrong with Swarm itself, but we did hit a snag when trying to get the cluster initialized. What we needed to do was:

- retrieve the correct IP address on the leader node

- use that IP address in --advertise-addr when initializing the cluster

- retrieve and save the join token after the cluster is initialized

- on the other follower nodes, retrieve and use this token to join the cluster
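The steps above map onto three Docker CLI calls. What follows is only a minimal sketch of them in Python, not the script we actually ended up with: the helper names and the example IP address are made up for illustration, and the __main__ part just prints the commands instead of running them, so it works on a machine without Docker.

```python
from __future__ import annotations

import subprocess


def swarm_init_cmd(leader_ip: str) -> list[str]:
    """Leader: initialize the swarm and advertise the given IP address."""
    return ["docker", "swarm", "init", "--advertise-addr", leader_ip]


def join_token_cmd(role: str = "worker") -> list[str]:
    """Leader: print only the join token (-q) for the given role."""
    return ["docker", "swarm", "join-token", "-q", role]


def swarm_join_cmd(token: str, leader_ip: str, port: int = 2377) -> list[str]:
    """Follower: join the swarm whose manager listens on leader_ip:port."""
    return ["docker", "swarm", "join", "--token", token, f"{leader_ip}:{port}"]


def run(cmd: list[str]) -> str:
    """Run a command and return its trimmed stdout (raises on a non-zero exit)."""
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return result.stdout.strip()


if __name__ == "__main__":
    # Dry run only: print what would be executed on each node.
    leader_ip = "192.168.56.10"  # assumed private-network IP of the leader VM
    print(" ".join(swarm_init_cmd(leader_ip)))
    print(" ".join(join_token_cmd()))
    print(" ".join(swarm_join_cmd("<token>", leader_ip)))
```

On real nodes, the leader would pass the first two commands to run() and the followers the third. The hard part is not the commands themselves, but getting the token from one machine to the others.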

She was going through the docs bit by bit and trying things that didn't really work (yet) and that were frustrating. At one point, she had decided to take a pause for a bit and suggested I give it a shot. Admittedly, I didn't care much about Vagrant: I knew what we wanted to do and the exact details of how we'd do that on the line-by-line level felt less important, so I just threw a prompt, a bit like the list above, at one of the AI tools at my disposal.

For this, I needed a few iterations and I got decently far: the AI-generated code used the correct IP address within the script with #{ip}, initialized the cluster correctly AFTER actually waiting for Docker to start up in the container (another snag which I hit along the way, which needed another iteration) and could seemingly retrieve the join token. Where I had gotten stuck was that I'd still need to make it available to the other nodes and they were sitting in a waiting loop in their own script, waiting to retrieve the token but never getting it. I think a good approach here would be to use some shared storage and just watch for changes there, or something along the lines of that. With a bit more iteration and familiarity with her computer (e.g. keyboard layout), I could have had something working in about 15-20 minutes.
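To make the shared storage idea a bit more concrete, here is a rough sketch of what it could look like, under the assumption that every VM can see the same directory (Vagrant's default synced folder would be one candidate, but the token file location is purely hypothetical). The leader writes the token to a file and the followers poll for it with a timeout, instead of waiting forever. The function names are made up for this example.

```python
import time
from pathlib import Path


def publish_token(token: str, token_file: Path) -> None:
    """Leader side: write to a temp file first, then rename it into place."""
    tmp = token_file.with_name(token_file.name + ".tmp")
    tmp.write_text(token + "\n")
    # A rename is atomic on local POSIX filesystems; shared folders may differ,
    # which is why the reader below also ignores an empty file.
    tmp.replace(token_file)


def wait_for_token(token_file: Path, timeout_s: float = 120.0, poll_s: float = 1.0) -> str:
    """Follower side: poll until the token file exists and is non-empty."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if token_file.exists():
            token = token_file.read_text().strip()
            if token:
                return token
        time.sleep(poll_s)
    raise TimeoutError(f"no join token appeared at {token_file} within {timeout_s}s")
```

The timeout is the important design choice here: a follower that gives up with a clear error is much easier to debug than one that sits in a loop forever, which is exactly where I got stuck.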

But that didn't matter. Tragedy had already struck. I had committed a cardinal sin in her eyes, by reaching for the abominable intelligence.

AI and learning

You see, a lot of people out there hate AI. Not dislike, not think that it's a fad, but straight up hate it. Not just because the term AI is more or less misappropriated when describing LLMs, glorified token prediction machines with some emerging agentic capabilities that will probably never directly lead to AGI, but because there are fundamental issues with what they represent - the tools in the image generation space are often trained on others' stolen art, whereas LLMs consume as much data as possible, sometimes resorting to piracy, as well as straining websites out there with annoying crawlers.

The arguments against these technologies also go further, with some studies showing that brain activity decreases when you use tools like ChatGPT for common tasks. The friend herself suggested that she's seen people's development skills deteriorating and that using those tools will eventually lead to developers being worse, yet capable of producing things that seem good enough in the moment, until something does break, at which point they'll be helpless. Her guess was that this would probably hurt software development as a career, as well as take away any artisanal aspects that the craft might once have had.

Personally, it's not like I wholly disagree with her take.

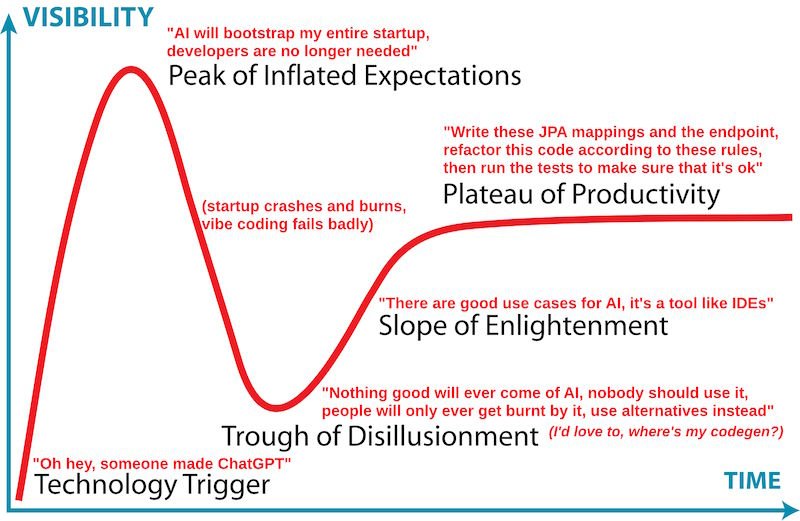

But I don't think it's that simple - the cat is out of the bag in regards to these tools and they'll be adopted more and more, across increasingly wide use cases. I do believe that maybe currently the technologies are near the top of a hype cycle and that eventually we'll settle on a more reasonable take, where their applications won't exceed their capabilities as much, in part due to more mature technology, as well as due to plenty of experience of what they're good at and what not.

She said that it's more of a fundamental change, however. I mentioned how often you don't read all of the underlying code that you're working with (e.g. the framework you use) but will more often read the documentation, something like StackOverflow, or look at other codebases or examples of how to use it, but her stance is that these AI tools represent a more fundamental shift - that GitHub Copilot and similar tools can do the work for you, not making you apply your brain to the problem much at all, in addition to them being crammed in wherever possible. A runaway hype cycle, if you will.

My conclusion from that, however, is that anyone not using these tools will put themselves at a disadvantage - when most developers start using these tools, the effects will be similar to those of someone writing their code in Notepad++ or Vim directly (which they can only do with any degree of success due to learning the language better) vs someone who is similarly smart but is also using a fully featured IDE like some of the JetBrains products, which give you various code inspections and syntax checks and other suggestions live, as well as advanced language aware refactoring capabilities. Suddenly those not using these AI tools will slow themselves down somewhat and be at a disadvantage, especially junior developers, especially with companies that aren't interested in spending a bunch of their money in training them to become better developers eventually.

Essentially, the choice becomes one between gradual brainrot vs falling by the wayside - the same way how someone might use social media as one of the few viable ways of keeping in touch with their friends and the social scene in their location nowadays, while also being at the risk of a shortened attention span, comparing themselves to the highlights of the lives of others and the many other risks that social media can present (including, but not limited to, disinformation).

There are people who suggest that you can use the AI tools responsibly and not use them to write all of your code for you, merely to help you discover some perspectives or edge cases that you might not have considered yourself. However, I remain unconvinced - while I don't deny any utility that these tools have, I've noticed that the more you rely on resources that give you a closer approximation of a solution, the less you need to dig into a given problem to understand what's going on. For example, consider someone giving you instructions on how to import certificates into a Java keystore (like cacerts), vs going off on a little research exercise by yourself and learning more about TLS and PKI along the way.

I do wonder, what will be faster - the gradual decline of our cognitive abilities due to using the tools, or their integration within software development and other industries to the point where those not using them can't compete. If things align, maybe those avoiding them will keep their wits about them while also remaining more productive on average. The thing, however, is that we don't have limitless time and in the real world not everyone is as curious. Most people just care about solving whatever problem they are paid to solve and not much more beyond that.

The real world and balls of mud

I wanted to express that nuance to her, the perspective that she perhaps wasn't considering.

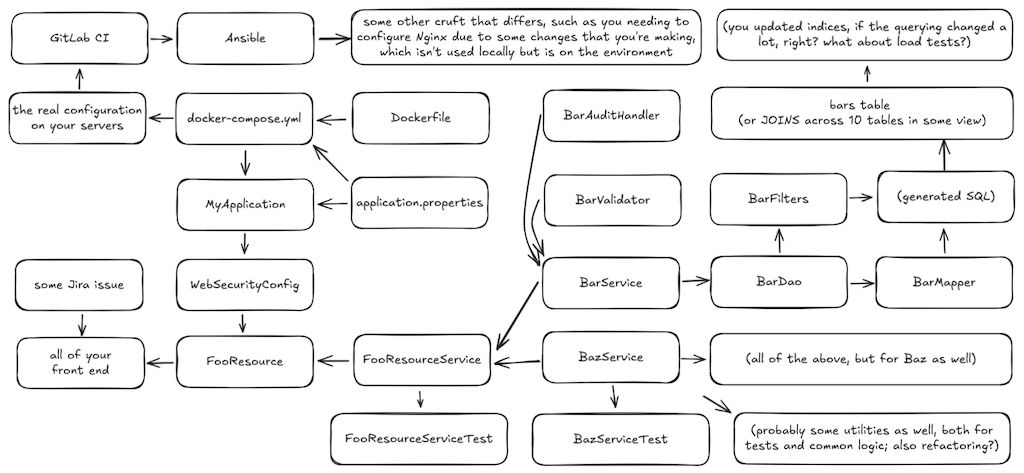

That there are plenty of projects out there that these technologies would still be useful for and would ensure better outcomes - if I have a large monolithic codebase that spans a million lines of code or thereabout, there's no way I'll be able to easily review all of it, or even be sure that a simple code search will reveal everything about it that I might need to know, compared to what something more advanced could achieve.

Suppose that I'm your average developer for a second, and that I'm working on introducing some auditing logic within such a large codebase. I might have done a cursory code search but didn't quite catch that there are 3 other similar bits of functionality under different names, that should either be refactored or that my implementation should just use one of the ones that already exist there. Agentic tools have caught similar things in the past, either when using proper embeddings across the whole codebase, or just searching through it with models that allow for hundreds of thousands of tokens in the context. Why? Because these tools can quickly process a bunch of information and have more working memory than the human brain does at any given time. Maybe not the ability to reason properly, but that's not always what things hinge on.

Similarly, for various refactoring tasks, I might already be holding 20-30 files in my head and the more I pile on, the more fuzzy everything becomes. Unless I make task lists of everything I might want to do, I'll quickly lose track of certain tasks or details that are important, because of which I might not want to do additional refactoring or risk changes that could be breaking. The AI tools I've used don't seem to have such issues and will happily hold upwards of 50 files in their context and be able to reason about them and do refactoring - and reviewing the resulting changes is easier than having to take the step by step manual approach.

These tools have also caught edge cases that I wouldn't have thought of (e.g. when doing data validations), are really good for writing boilerplate code (because nobody can be bothered to set up codegen for everything, just ask how many of the people out there can fully generate JPA mappings from your live DB schema and from your versioned migrations), things like utility scripts and one off data transformations, as well as a bunch of other stuff.

I make no strong claims about any of these. If you're curious you can see for yourself, though of course the more you stray from the popular approaches and technologies out there, the worse your personal experiences will be. My friend, however, had a different response to me talking about the other side of the coin. She said that codebases like that should never exist in the first place, that code should be written in such a way where it fits in the working context of the human brain. Or that the developers in question should get better.

The issue is, that we don't live in that world, even though I'd love to have a memory that I'd be able to call "good". Or not work on complex codebases. Saying that bad or even complex codebases shouldn't exist and therefore that the idea of having to maintain them doesn't need time put into it is like saying that jobs like transcription or translation shouldn't exist because we should be able to automate them (yes, both with formal methods and all sorts of forms of AI) - while the statements themselves aren't wholly wrong, they're also quite ignorant of reality.

Imagine saying that to someone, which doesn't resolve any of their issues and just puts down tools that might help them in some ways, in addition to proceeding to call either their codebase, their job or both, shit. Then, what do you think are your chances of being able to somehow convince them of the point you want to make without making them feel offended? It doesn't work that way. It's also not like they can go to their place of work the next week and rewrite a codebase that has been around for a decade to suit whatever is the present best understanding of sensible software development.

Nor is that particular argument something anyone should make from a position of authority - splitting everything up into smaller pieces has drawbacks of its own, such as introducing unreliable networks in the middle and a bunch of cruft to push data around, some domains are just huge, and almost none of the languages that we use treat unreliable network calls as first class citizens.

By that reasoning, should planes run on microservices? In some loose sense, maybe - with systems having modularity and redundancy and something like ARINC being used to push data around, albeit it does not look like the Wild West in web development and might work closer to a microkernel system, except we also all know how GNU Hurd worked out (or rather, didn't), so at that point it's talking about theory and something that gets increasingly detached from what you'll be doing next Monday.

While the line about the large codebase was said to me verbatim with the profanity, the thing is, that I don't even fully disagree - there's lots of arguably bad codebases, architectures, entire teams and projects out there, none of which should have existed or at least ended up in the form that they did. I've seen plenty. However, you shouldn't dismiss the constraints or the processes that led to those circumstances just because they shouldn't exist.

When someone is stuck in a hole, you don't grandstand about how they should have never fallen in there, or how there should actually be an escalator out of the hole - you give them a fucking shovel.

I'm not actually upset or mad at her, more like the entire industry that brought us to this point, that these AI tools are even necessary, that more formal methods never materialized that much outside of academia, otherwise we'd all design our systems with ample help of UML and C4 models, estimate what needs to be done with COCOMO 2 and even treat our infrastructure as a bunch of boxes to be dragged around, putting as many low code or no code tools as possible in there.

Come to think of it, it doesn't sound right either. I think my problem is that there are so many tools that try to get rid of writing code, instead of making writing code easier and more bulletproof. Writing bad code should be hard in the first place, it's not that you shouldn't write any at all. So maybe more along the lines of various code inspections, linter rules, automatic code formatting and codegen, instead of additional abstractions.

Artisans and engineering

That is why I kind of took issue with something else that she said: the claim that software development is a craft of artisans.

From where I stand, it seems like every next week, there is some new approach to writing front end components, some new DB technology, some new back end framework, only a few of which will survive for a decade and many of which will leave people in deep mud once the support runs out. Even technologies like AngularJS that were once backed by a big company like Google lost support and caused lots of headaches when people needed to migrate to something else. The same happened with React when the paradigm shifted from class based components to hooks, albeit one could understand some of the advantages as well. There will also be issues once JDK 8 hits EOL, if there's ever a serious CVE in it (or anything written on top of it that doesn't support anything newer), then lots and lots of people will need to scramble.

At the same time, people still use languages that result in various vulnerabilities and unsafe memory access all the time, regularly mess up with public S3 buckets, implement everything from data validations to authn/authz poorly, write convoluted code that often is underdocumented, or happily write badly optimized code that results in N+1 problems all over the place, just waving their hands at the end and saying that optimization is on the TODO list. The very same people who'll look at a list of requirements for some Jira work item and say that anything more than 8 hours of work to get it done is unrealistic, whereas in practice the amount of time you should spend on the thing is anywhere between 8 and 40 hours.
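In case the N+1 problem mentioned above is unfamiliar, here is a small illustration using SQLite from the Python standard library. The schema and data are invented for the example. Both functions return the same result, but the first one runs one query for the parent rows plus one more per parent, whereas the second one does it all with a single join.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with a made-up schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE posts (
            id INTEGER PRIMARY KEY,
            author_id INTEGER NOT NULL REFERENCES authors(id),
            title TEXT NOT NULL
        );
        INSERT INTO authors VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cy');
        INSERT INTO posts VALUES (1, 1, 'A1'), (2, 1, 'A2'), (3, 2, 'B1');
        """
    )
    return conn


def titles_n_plus_one(conn: sqlite3.Connection):
    """One query for the authors, then one extra query per author."""
    queries = 0
    result = {}
    authors = conn.execute("SELECT id, name FROM authors ORDER BY id").fetchall()
    queries += 1
    for author_id, name in authors:
        rows = conn.execute(
            "SELECT title FROM posts WHERE author_id = ? ORDER BY id", (author_id,)
        ).fetchall()
        queries += 1
        result[name] = [title for (title,) in rows]
    return result, queries


def titles_single_join(conn: sqlite3.Connection):
    """The same data with one LEFT JOIN, regardless of the number of authors."""
    rows = conn.execute(
        "SELECT a.name, p.title FROM authors a "
        "LEFT JOIN posts p ON p.author_id = a.id "
        "ORDER BY a.id, p.id"
    ).fetchall()
    result = {}
    for name, title in rows:
        result.setdefault(name, [])
        if title is not None:  # authors without posts yield a NULL title
            result[name].append(title)
    return result, 1


if __name__ == "__main__":
    conn = make_db()
    print(titles_n_plus_one(conn))
    print(titles_single_join(conn))
```

With three authors that is 4 queries against 1, which nobody will notice. With ten thousand rows and a network between the application and the database, it's the sort of thing that ends up on the TODO list.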

You might say that it's just a particular company or a particular project, but when the tax system in my country falls over when it's time to submit your yearly tax statements, when the e-health system in my country falls over pretty regularly and doesn't have proper ETL with whatever the local hospitals are using, when the vaccination system used during COVID was an unoptimized mess, you start seeing a pattern. Even Windows 11 that I have on my computer right now just refuses to install updates, due to complaining about their web view components not being present - after I've checked that they are, in the OS that they themselves developed. Imagine not being able to just patch your OS because the people developing it messed up that badly.

Because of all of that, these two are both simultaneously true in my mind:

- we're moving ahead at a rapid pace

- without ever having gotten the basics down

Software engineering shouldn't be an artisanal craft that reminds us of the Wild West - a lawless place that sometimes looks like a wasteland of mismatched incentives and padded CVs. It should be actual engineering, with proper regulation, certifications, best practices, down to the very last line of code, down to the methods used, the programming languages themselves.

We will never live in that world.

In such a world, you'd have deeply researched and tested, as well as formally proven frameworks and languages for developing your webapp. You'd learn one front end framework in school and that's what all the professionals would be using. The same for the back end frameworks and databases. The fewer options, the better methods around using them you can introduce. At the very least, no database would be in use without something like thorough Jepsen testing. Every line of code that you write would undergo a lot of analysis, every line would need tests written to cover it, those tests would then also be inspected. The operating systems in use would be more like the versions of BSD instead of Linux - engineered instead of grown. Every cloud technology would be certified with no less scrutiny than the databases.

The pace of development would be slower, the tooling would (eventually) be better, the confidence in the code written would be higher, there would be fewer outages and less uncertainty and impostor syndrome - because you could actually master a single stack from bottom up and use it for decades, more than any T shaped individual of the current age. Innovation would be slower, but often that is directly opposed to reliability and stability, at least when new technologies and practices are used before they're ready.

Nobody really wants that, unless they're building an airplane.

Programming languages and ecosystems

People want code that is quick to write and don't care about the fact that their expectations of reliability in the current landscape are unreasonable.

That's also how we got to the "cattle not pets" attitude towards servers and accepted that they'll just fail every now and then, and everyone moved onwards as if that's a normal take in what should be a highly deterministic system. That's the unfortunate reality of computers and operating systems being far beyond the capability of any individual to fully understand, when compared to the computers of the olden days.

The good news is that you can pick any stack and gain a pretty deep understanding of it anyways, in a decade or two.

The bad news is that the suggestion for one very powerful language above would only lead to something like C++, where you very much have a design by committee situation with every feature under the sun being crammed into the language and making it much harder to fully learn, understand and use... versus just doing less.

I look at the software landscape and it feels like there are too many languages out there that do too many things with meaningless differences between them. For example, if I have a collection of some sort, which one of these will be the correct way to get its length: len, len(), .size, .size(), .count, .count(), .length, .length(). Of course, the answer depends on the language in question, but maybe it shouldn't? Maybe we should have certain conventions that transcend the languages we use, for example, common ways of working with data types, validations, testing and so on, across all languages.
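To show how silly the situation gets, here's a tongue in cheek sketch of the glue you'd have to write to paper over just this one difference. The two collection classes are hypothetical stand-ins for how other ecosystems name things, not real library types.

```python
class JavaStyleList:
    """Hypothetical collection that exposes its length as a size() method."""

    def __init__(self, items):
        self._items = list(items)

    def size(self):
        return len(self._items)


class JsStyleArray:
    """Hypothetical collection that exposes its length as a .length attribute."""

    def __init__(self, items):
        self._items = list(items)
        self.length = len(self._items)


def length_of(collection) -> int:
    """Return the item count, whichever naming convention the object follows."""
    try:
        return len(collection)  # Python's own convention (__len__)
    except TypeError:
        pass
    for name in ("size", "count", "length"):
        attr = getattr(collection, name, None)
        if attr is None:
            continue
        value = attr() if callable(attr) else attr
        if isinstance(value, int):
            return value
    raise TypeError(f"don't know how to get the length of {type(collection).__name__}")


if __name__ == "__main__":
    for c in ([1, 2, 3], JavaStyleList("ab"), JsStyleArray(range(4))):
        print(type(c).__name__, length_of(c))
```

Nobody should have to write this, which is sort of the point: the underlying concept is identical everywhere, only the spelling differs.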

If in a given week I have to work with Bash, Java, .NET, Python, Ruby, Go and JavaScript, then by the end of it things tend to get a bit fuzzy and even language constructs like loops (or Stream API and LINQ) will seem to fade into the background as mostly cruft that stands in the way of me just wanting to accomplish a particular task. The same very much applies to all the web frameworks out there, or even how every stack has a different view on how an ORM should function, even if at the end of the day they all still talk SQL.

It might seem silly, but even in regards to front end, PrimeVue, PrimeReact and PrimeNG are trying to do something very similar to what I'm suggesting: components that work across Vue, React and Angular respectively, with knowledge transfer that's better than any other methods of writing front ends in those, even if it's not perfect. Maybe then it wouldn't feel like some of those are different just for the sake of being different.

To me, it feels like every problem should have one proper solution that you wouldn't stray from that far. And yet, everything outside of RFCs like how HTTP(S) works is still fragmented chaos. Let me give you an example of how getting some CSS ready worked in a project that I once was on:

- you'd have SASS with its SCSS files

- those would need to be transpiled to CSS, but for that you'd need some tooling

- that particular project used Gulp, because that's what was trendy at the time

- because npm was slow at the time, the project also used Yarn

- because the devs didn't want to manage Node.js versions manually, that was installed through a Java plugin with Maven

- for whatever reason, I think node-gyp was also needed there

- that, in turn, needed Python 2 and anything newer would break

- also throw in Grunt for some tasks like watching for file changes, because of course it was there as well

Imagine having to maintain that setup for years. Do you understand better why I'm not a fan of overcomplicated toolchains that start looking like Rube Goldberg machines after a while?

But it's not all bad:

- I like languages like Go, they're easier to reason about than something like Java with codebases that have dynamic class loading and reflection.

- I like SQL databases like PostgreSQL and MySQL/MariaDB (somewhat) and SQLite, because they've been tried and tested and seem like a good fit for the problem space.

- I like web servers like Apache2, Nginx and even Caddy 2, because they're good at what they do and take some of the tasks (like TLS) away from the back end components.

- I like front end solutions like Vue and PrimeVue, because they actually seem simpler than React in some cases (compare Pinia with Redux) without sacrificing too much functionality, even though maybe I'd be happier with Alpine.js, or HTMX, or something of that variety.

- I like technologies like Docker because they allow providing pretty much everything your application needs to be deployed, in a consistent manner.

- I like Linux when used with containers, because it's mostly a good base operating system, especially when you opt for distributions like Ubuntu LTS due to them being predictable (though they have their quirks).

- I enjoy tools like RabbitMQ, Redis, S3 compatible object stores and so on - tools that solve a particular problem and do it well, without meaningless complexity.

- I like that we have web standards like HTTP(S) and also conventions around developing APIs such as REST (even if people don't care much about HATEOAS), as well as the likes of OpenAPI for some common ground.

- Same for the likes of Git for version control and CI/CD tools built around it, like Drone CI, Woodpecker CI, or even GitLab CI, especially when they get out of your way and work well.

What I also like are tools that make using any and all of the above easier and give me confidence that things will work, like I think they should. If some of those tools happen to be AI because we don't have anything better, I won't pass judgement on that.

Humans in the loop and drowning in agile

Something my friend said also made me pause and think:

AI will give you solutions that look correct, but aren't.

Hearing that made me not want to nod my head and agree, but rather question why we even can have solutions that look correct in the first place, but aren't? Your IDE should be screaming at you if that's the case, so should your language server, your code analysis tools, or maybe your AI tools that you can also feed the feature requirements/specifications into, because there's no way people are going to ever write those in a format that a formalized tool understands.

The bottom line is that humans have written and will keep writing code - and the track record so far is that while there are a few geniuses out there that get things right and advance entire industries (like John Carmack, for example), the rest of us absolutely need any and all help that we can get. Would you want your average developer to attempt to validate data pertaining to money transfers with RegEx, for example, without using any sort of a search engine to find what others have used in the past? I wouldn't.

Now, obviously, the problem is that while AI tools will make life easier, the brainrot will still set in - less exploration will be done on our own and more reliance will happen on those tools, to the point of just copying and pasting in code snippets and expecting the magic machine to do everything for you. While the technology has the potential to make me waste less time writing JPA mappings and give me more time to work on an interesting project like a voxel renderer, the reality is that I might as well spend that newly acquired free time just scrolling memes online instead of studying anything.

At the same time, if that applies to a lot of people out there, many of whom would rather talk to a lady that they're interested in rather than spend a weekend learning what monads are or how to use Haskell for some problems instead of Python, then it would be perhaps a bit silly to attempt to hold everyone to such a high standard, where they have to know every language that they use by heart, as well as be able to recall details about the frameworks in those languages minutes after being woken up.

If we say that every single engineer that writes code should be an absolute master at their craft, then there are almost no true engineers out there. Though the few that exist, you probably want more of those people writing the software for your plane.

Summary

So where did the conversation go? Not that far, since it felt too argumentative to get much utility out of it - especially since it won't exactly change the world, or even present that much of a better alternative to AI tools, short of just not using them and getting better on our own, gaining no utility out of them in the process, albeit avoiding their shortcomings.

I will admit that my conversational approach could be better. When considering implementing something or introducing some practice, I tend to focus on the potential drawbacks and issues that might arise with adopting a certain position without questioning them. In practice, that can clash with the strongly held views of others - it seems like I'm questioning their very views with a somewhat long string of gotchas, which can come across like "Here's exactly why your views aren't valid."

Obviously I don't use that sort of phrasing and don't believe that, but the important lesson here is not to treat conversations with people like engineering problems, the outcomes of which matter to a degree where I NEED to get down to some base truth. "Winning" an argument shouldn't be the goal, even in a somewhat factual discussion, if it ends up making someone frustrated. If someone says something that I feel is incomplete or flawed in some way, or ignores various real world intricacies, but they care about it strongly, then the correct thing for me, unless not exploring these would get them hurt (e.g. food safety and such), is to consider really well whether I want to pick that topic up.

As for the topic itself, I guess only the future will show what happens to AI and our brains as a consequence of it. Personally, I feel that they're just tools and both the utility and risks depend on how they're used - though it's impossible to ignore the fact that most people might very well misuse them. I think my friend is definitely right about being distrustful of AI and I have to admit that going in blind when learning something is probably more conducive to remembering it long term, unless the docs suck or are hard to navigate like is the case with Vagrant, as far as I'm concerned.
