More PC shenanigans: my setup until 2030


Previously, I explored why having two Intel Arc cards in the same PC is a bit of a mess: Two Intel Arc GPUs in one PC: worse than expected

A little bit later, I discovered that Windows 11 fixes some of the issues and that most games and programs, but not all, mostly work: What is ruining dual GPU setups

Now that I had an OS that's a little bit more cooperative, I decided to go from my setup with the Intel Arc B580 and AMD Radeon RX 580 to a dual Intel Arc setup, with both the A580 and B580 in the same computer, and see if I can fix the problematic software myself.

So, without further ado, let's get into it!

A slightly cursed setup

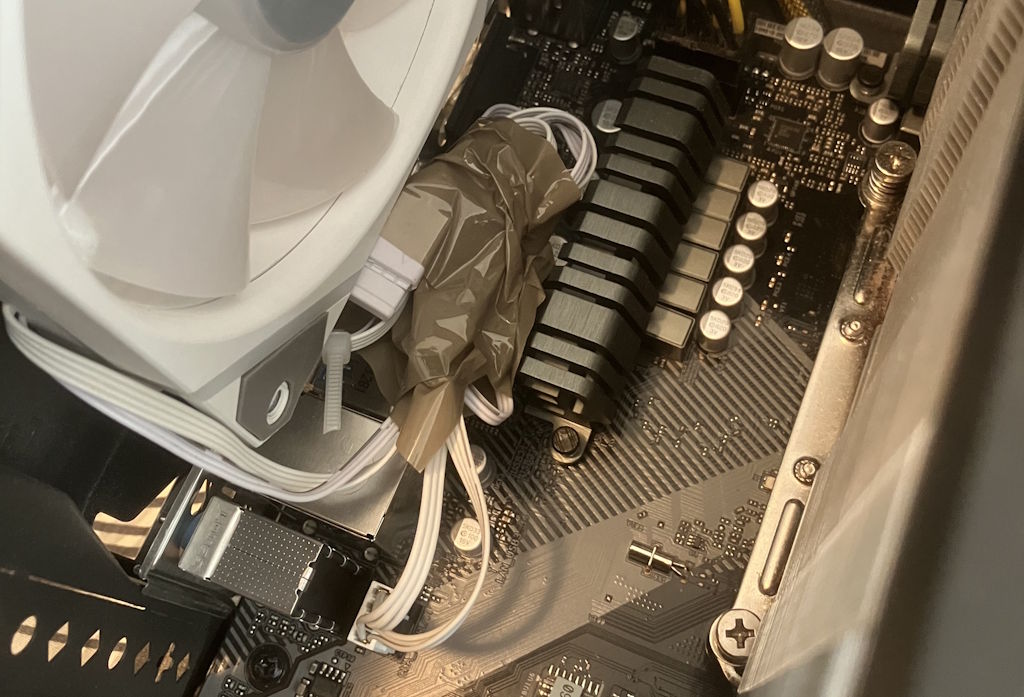

You might have noticed in the previous examples that the inside of my case was quite messy. While I did attend to it later and managed to mostly improve everything, for running two GPUs I needed to make sure that all of the cables at the bottom of the case stay there, otherwise they risk hitting the fans, which would both create noise and prevent the fans from spinning:

At that point I looked at some of the other places where I have cables that are too long and decided to tape them down as well. It's quite unlikely that the tape would melt and it doesn't conduct electricity either, so there are very few risks related to doing that:

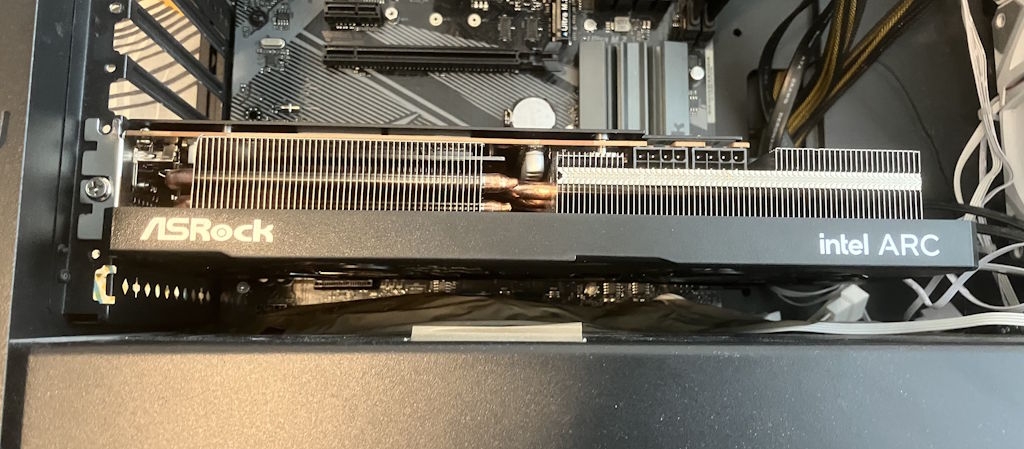

As for the GPU itself, you can see how little clearance there is and why using tape might not have been the worst solution:

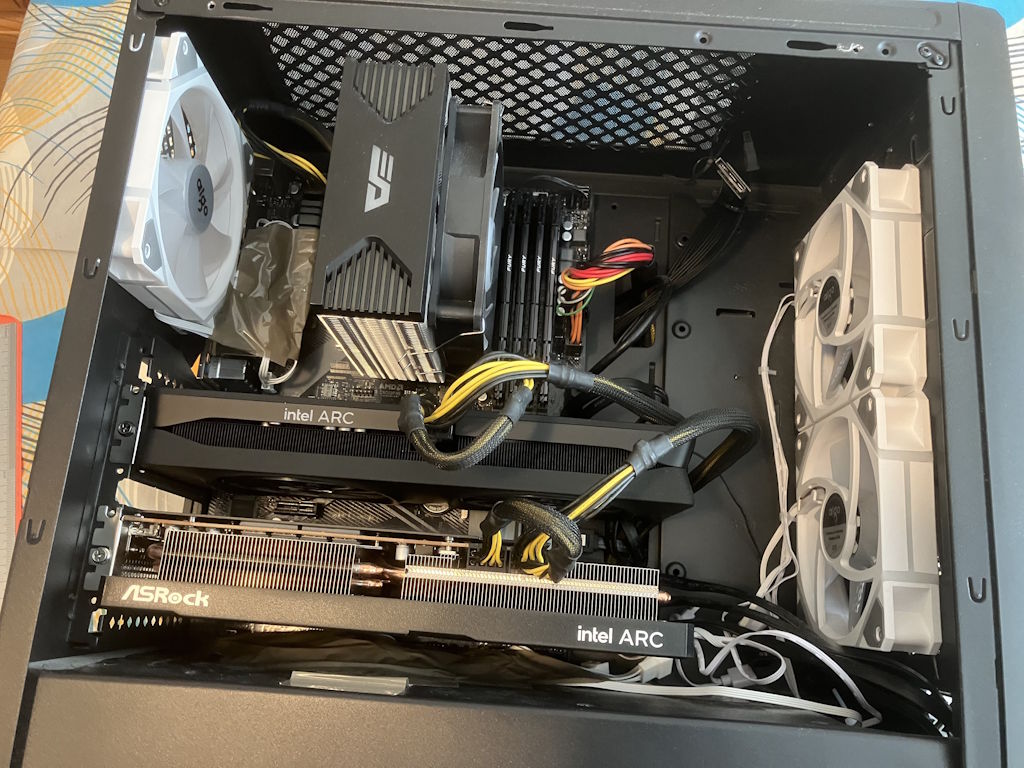

Of course, when two of those are added in the same case, things do get pretty crowded, which makes me think that perhaps I'd need a bigger case for this setup to truly work:

The good news is that the setup itself works and a little bit better than before: Windows 11 lets me choose which games and programs should run on what GPU and since there is no AMD/Nvidia GPU in there, games like Delta Force also cannot decide on their own whim that they don't want to run on an Intel GPU, since those are the only ones that are available!

Aside from that, you will notice that I got rid of the top case fans, because those were pretty messy and it turns out that the CPU cooler is not good enough either way:

I would have actually stuck with this setup, if not for some rather interesting problems down the road...

There is some quite cursed software out there

The first issue was that even when all of the monitors are plugged into the main card, the framerate of videos (on YouTube, for example) is really bad when the browser is running with hardware acceleration on and is using the secondary GPU, my old A580. The fact that this doesn't work well kind of defeats the whole point of the setup: if I can't play a game on the B580 while watching a video that has the decoding done by the A580, why even bother?

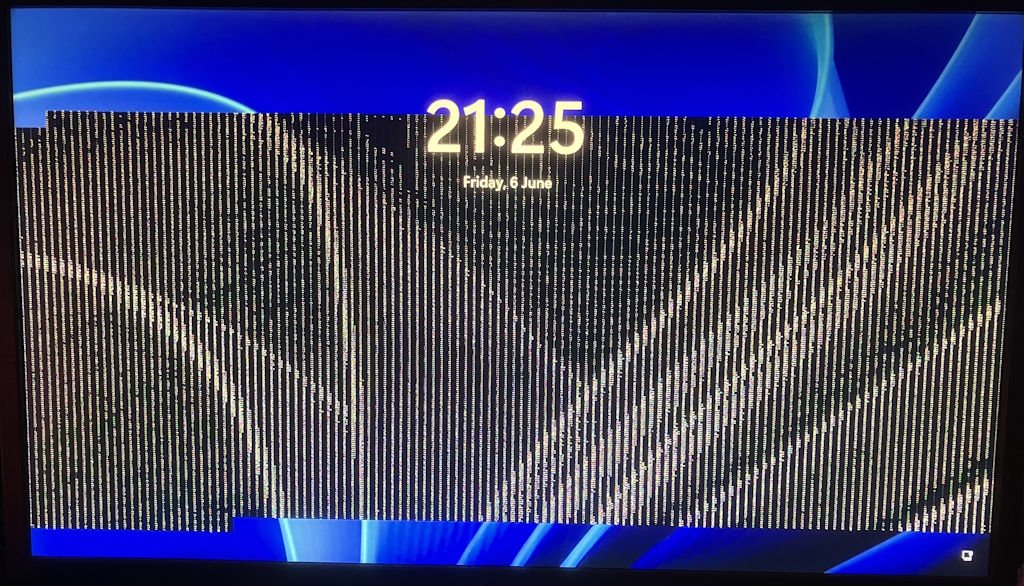

Secondly, I noticed intermittent corruption of what's on the screen, for example when resizing windows, when their contents are redrawn and even once on the lock screen:

Thirdly, I decided to look into whether I could fix OBS and make it allow me to choose which GPU it uses for encoding, since the setup already works in the form of Intel iGPUs and Intel Arc cards, right? Wrong.

Adding the UI controls was admittedly pretty easy, as would be retrieving the value from the OBS UI, similarly to how Nvidia does that:

```c
// default value
obs_data_set_default_int(settings, "gpu", 0);

// UI element
obs_properties_add_int(props, "gpu", obs_module_text("GPU"), 0, 8, 1);

// retrieving the value later
int gpu = (int)obs_data_get_int(settings, "gpu");

// passing it to the encoder, Nvidia specific, but you get the idea
av_opt_set_int(enc->ffve.context->priv_data, "gpu", gpu, 0);
```

With a bit of vibe coding, that part worked fine. Now initially I thought that all I'd have to do is provide a specific device ID to the QSV encoder internals, since by default it seems to be hardcoded:

```cpp
sts = MFXCreateSession(loader, 0, &m_session);
```

Here's the docs for the corresponding bit of code, if anyone is curious: MFXCreateSession

Here's a GitHub issue talking about how it seems to be the correct way to do this: How to select different devices with MFXLoad and MFXCreateSession API

And yet, it didn't seem to work. If a game is running on the first GPU and I tried encoding with the second GPU, then I'd get the whole of OBS just freezing. With a little bit of additional error handling added to the code, I'd just get encoder errors, but never the desired output, a bit like how there are also issues with capturing games if you run OBS itself on the secondary GPU, not just the encoder.
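The UI control above accepts values from 0 to 8, while a machine like this one only has two adapters, so one small piece of that error handling is rejecting an index that doesn't exist before it ever reaches the encoder. A minimal sketch of that check: `resolve_gpu_index` and its parameters are hypothetical names, not part of OBS or the QSV plugin, and how the number of available adapters is obtained is deliberately left out:

```cpp
// Hypothetical helper, not part of OBS or the QSV plugin.
// Returns the requested index if it refers to an existing adapter,
// or -1 so the caller can report a clear error instead of freezing.
constexpr int resolve_gpu_index(long long requested, int available)
{
    return (requested >= 0 && requested < available)
               ? static_cast<int>(requested)
               : -1;
}

// Compile-time examples for a system with two adapters.
static_assert(resolve_gpu_index(1, 2) == 1, "second adapter is selectable");
static_assert(resolve_gpu_index(5, 2) == -1, "out-of-range request is rejected");
```

This obviously wouldn't fix the cross-GPU encoding itself, it only turns a bad setting into an explicit error early on.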

Since I don't really work with C/C++ or other low level languages, I did try a bunch of vibe coding (since the task itself didn't seem like it should be that difficult, so an LLM that's trained on a bunch of that code did seem to be a good bet), but even its attempts to copy the texture through the CPU didn't succeed, because despite using a pretty basic setup of NV12 color format, the stuff that came out of the GPU was not recognized.
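For what it's worth, one common gotcha when copying NV12 through the CPU is the row pitch: an NV12 frame is a full-resolution luma plane followed by a half-height plane of interleaved U/V bytes, and the rows coming out of the GPU are often padded to be wider than the visible width. Whether that was the actual problem here is only a guess. The sketch below is not OBS code; `pack_nv12` is a hypothetical helper and it assumes that the chroma plane directly follows the luma rows and shares the same pitch:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: copies an NV12 frame whose rows are `pitch` bytes
// apart into a tightly packed buffer where every row is exactly `width` bytes.
// Assumes even dimensions and that the UV plane starts at src + pitch * height.
std::vector<std::uint8_t> pack_nv12(const std::uint8_t *src, std::size_t width,
                                    std::size_t height, std::size_t pitch)
{
    assert(pitch >= width && width % 2 == 0 && height % 2 == 0);

    // `height` rows of luma plus `height / 2` rows of interleaved U/V,
    // each of which is `width` bytes of real data.
    const std::size_t rows = height + height / 2;
    std::vector<std::uint8_t> out(width * rows);

    for (std::size_t row = 0; row < rows; ++row)
        std::memcpy(out.data() + row * width, src + row * pitch, width);

    return out;
}
```

The packed result is `width * height * 3 / 2` bytes, whereas the padded source is `pitch * height * 3 / 2` bytes, so treating one as the other produces exactly the kind of frame that a decoder or encoder won't recognize.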

Ergo, I abandoned that idea, because frankly looking at the codebase and also the error messages didn't fill me with the feeling that with a few more days/weeks of work I could get something working out of it - a bit like how seeing old enterprise projects in an outdated version of Java with outdated dependencies and no documentation makes you want to look elsewhere. If anything, that just made me have more respect for the people who maintain OBS and work on it, albeit I did see some curious historical code, like this for example:

```c
/* this function is a hack for the annoying intel igpu + dgpu situation. I guess. I don't care anymore. */
EXPORT void obs_internal_set_adapter_idx_this_is_dumb(uint32_t adapter_idx)
{
	if (!obs)
		return;

	obs->video.adapter_index = adapter_idx;
}
```

I feel like my expectations for a lot of the production code out there are a bit too high - oftentimes it working is probably good enough and there's not that much going on behind the scenes, albeit I'm starting to think that the particular bit of code under libobs-d3d11/d3d11-subsystem.hpp could have been worth a look as well, if not for all of the other issues that made me look away from using two Intel Arc GPUs in the same system.

Let's just solve what we can and look past what we can't

A lot of people told me that dual GPU setups aren't really good. In some ways, they were right, even if the reasons for that are quite unfortunate. It's like a Catch-22: dual GPU setups suck because nobody writes code with them in mind, because nobody uses them, because when they try, the setups don't work, because the code is not written with them in mind. Unfortunate status quo, but I can't exactly do much about this myself.

Ergo, I decided to look into a slightly different direction that would still let me do recordings/streams, without the encoder getting overloaded, but first... fixing other issues with the PC! You see, for the most part, the setup has been in a state of "good enough for now", however clearly the Ryzen 7 5800X is also struggling a bunch, hitting 90 °C under full load, which probably won't exactly melt the CPU, but does mean that its clock frequency gets dialed back quite a lot.

Behold, an AIO:

This particular one is from Aigo and I got it from AliExpress for about 50 EUR, which is about as good as the prices get, even on discount. That said, it should provide better thermals and easier installation (no bulky heatsink like on a CPU air cooler), as well as reduce the total number of fans, because I can replace two case fans and the CPU fan with it, hopefully meaning a bit less noise.

I don't really do sponsored content and this is no exception, I just went with this particular model because it was about 10-20 EUR cheaper than the stuff available in the online retailers in my country, in addition to me having had decent experiences with other Aigo coolers in the past (you can see that the case fans also come from them, even if they're not quite as beefy as the AIO ones). Another nice thing about the overall setup is that I can also replace my old PSU that has a bit of a rattle to it - since I don't intend to see what happens when its cooling gives out due to bad ball bearings.

Long story short, installing the AIO wasn't all that bad, I swapped out the PSU and could clean up at least some of the cabling along the way, albeit the back of the case is still somewhat messy, mostly thanks to me having 6 SATA drives connected in a case that definitely can't handle that many:

I did end up disabling the front RGB strip on the case itself because it's quite annoying and did install the radiator in the front of the case instead of the top, which honestly might be a bit of a mistake from the cooling and airflow perspective (the front panel of the case doesn't have that many air vents, while the top has a mesh panel with a dust filter), though it would get a bit too crowded if I installed the AIO on the top, so this is good enough for now:

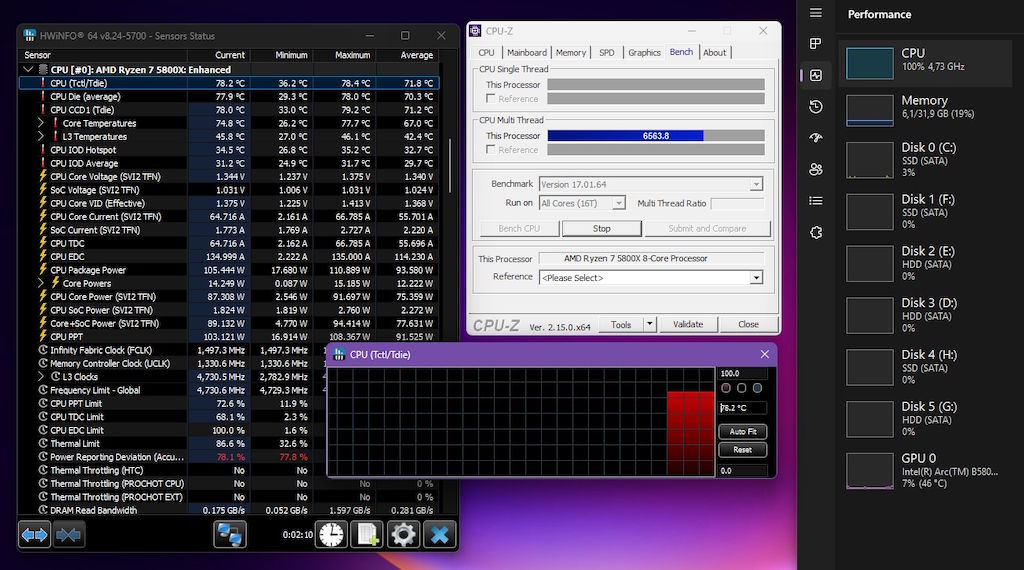

Some quick tests without the panels installed also reveal that the temperatures are way better too, even under full load. I will admit that the AIO fans are a bit more beefy than the previous case fans I had, so while the pump itself is pretty quiet all things considered, under full load things can get pretty noticeable with my fan curves, but the thing is that most of the time I have headphones on anyways and the CPU not throttling itself into oblivion is the preferable thing here:

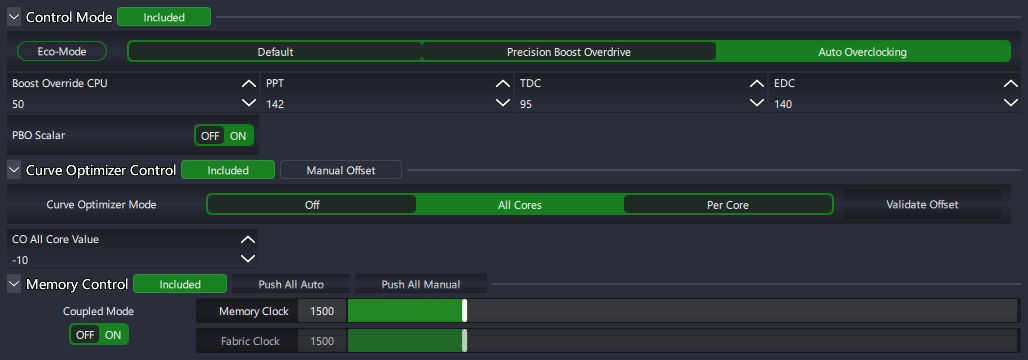

After that, I messed around a little bit more with Ryzen Master to have the PPT, TDC and EDC values at stock so the chip can pull enough power, as well as dialed back the Curve Optimizer value from -15 back to -10, also hoping that this could prevent any instability issues, since at this point I should have had a bit more thermal headroom, right? I also made the memory run a bit faster, from the previous value of 1333 MHz to 1500 MHz, since those particular RAM sticks seem to be good enough for that.

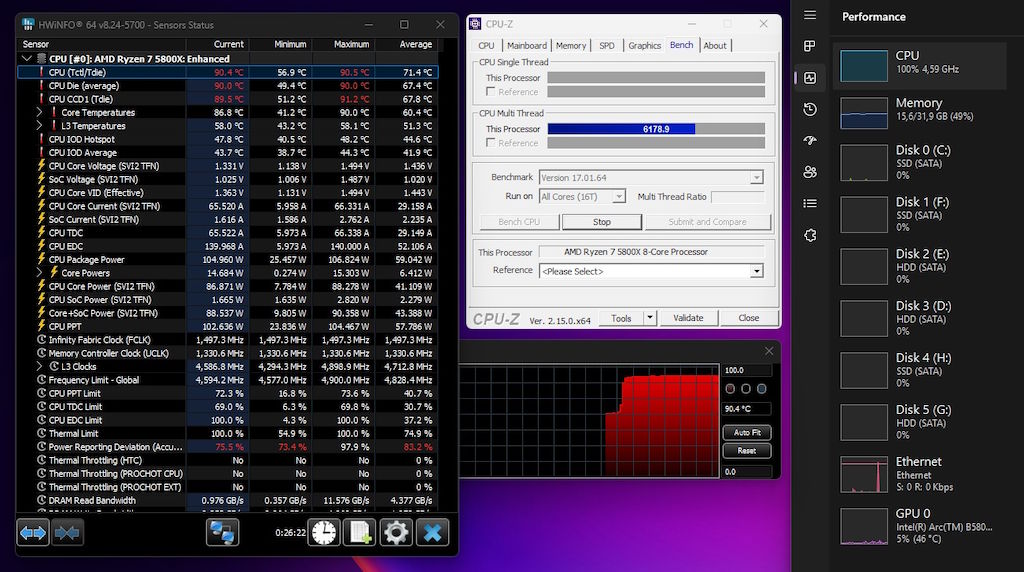

Unfortunately, putting the side and front panels back on, coupled with the CO value that's closer to stock and slightly less aggressive fan curves means that under synthetic load it's still very much possible to make the CPU thermal throttle, albeit it's a little bit better than before:

This still wouldn't usually happen: even when compiling software, the CPU won't typically be pinned at 100% load all the time, plus I could probably mess around with the PPT/TDC/EDC/CO values a bit more if I wanted to. Honestly though, having an all-core sustained clock speed of 4.6 GHz under full load is good enough for me: it doesn't throttle as much as before and under normal loads the temperatures are at least 5-10 °C lower than before.

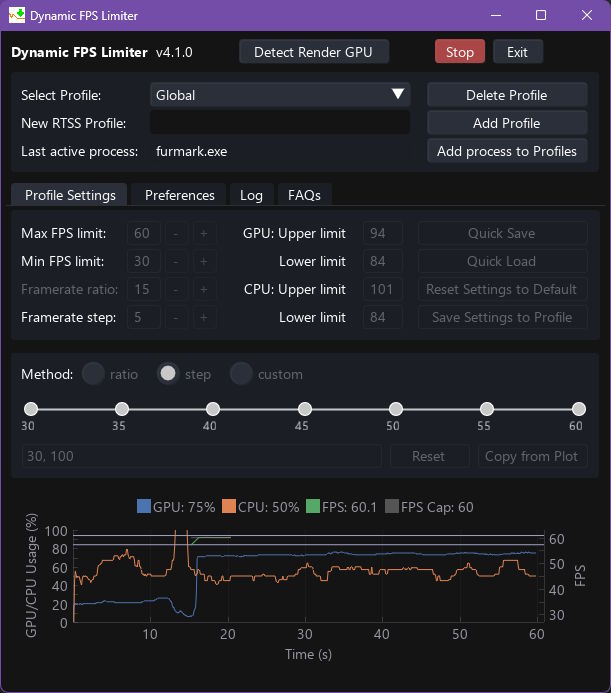

With all of that out of the way, what's my secret weapon for preventing the GPU from getting utterly overwhelmed and stuttering, either the games themselves, or the encoder? A program that hooks into RTSS and lets me limit the framerates in games and programs based on the GPU load: Dynamic FPS Limiter

While my earlier comment on a lot of software out there being a bit jank if not outright broken stands, it's also immensely cool when people write something like this, to build upon good software that already exists and add new features that more or less solve my exact problem:

You see, the encoding stutters when the GPU load hits 100% for prolonged periods of time, so instead of the GPU dedicating all of its power to the game with no way for me to control that (process priority setting in Windows basically does nothing), now I can limit the maximum framerate that the game can run at when it's becoming too demanding, meaning that for example in intense sections I can drop from 60 FPS to 30 FPS until the situation improves, which is way better than either the framerate being very inconsistent or the recorded video stuttering altogether.
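The idea can be sketched in a few lines. To be clear, this is not how Dynamic FPS Limiter is actually implemented and it doesn't talk to RTSS: `FpsLimiter`, its thresholds and the step size are made-up values for illustration, with only the 30 and 60 FPS bounds taken from the example above:

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical load-based framerate cap, for illustration only.
struct FpsLimiter {
    int min_fps = 30;         // never limit the game below this
    int max_fps = 60;         // never allow more than this
    int step = 5;             // how much the cap moves per sample
    double high_load = 0.95;  // lower the cap above this GPU load
    double low_load = 0.80;   // raise the cap below this GPU load
    int cap = 60;             // current framerate cap

    // Feed one GPU load sample in the range [0, 1]; returns the new cap.
    int update(double gpu_load)
    {
        assert(gpu_load >= 0.0 && gpu_load <= 1.0);
        if (gpu_load > high_load)
            cap = std::max(min_fps, cap - step);
        else if (gpu_load < low_load)
            cap = std::min(max_fps, cap + step);
        // Between the two thresholds the cap is left alone.
        return cap;
    }
};
```

Feeding it a GPU load sample every second or so would walk the cap down from 60 towards 30 while the load stays above 95% and back up once it drops below 80%. The gap between the two thresholds is there so that the cap doesn't flap up and down when the load hovers around a single value.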

So now what?

I have to admit that AIO coolers have a reputation for having a bit more of a limited life span compared to air coolers, but it will probably be fine: if everything goes okay, it should last me around 5 years, at which point I can probably look into other options, or just refill/clean it myself, since at that point I would no longer need to worry about a warranty of any sort. Heck, I might consider putting some car coolant in there, to see how that performs, since at that point the system will probably have run its course and won't matter that much.

Speaking of which, I intend to run this computer setup until 2030 at least, maybe swapping out some drives along the way: the CPU itself is good enough and I am quite sick of the modern trend of UE5 games coming out very, very unoptimized which feels like an attempt by the Big GPU to sell more GPUs. Humor aside, I think it's easier for me to just choose not to play those titles and not give those developers money, if they can't release a product that runs well on a plethora of hardware.

There basically is no good excuse not to do that, because most of the game engines out there in current use can scale all the way back to a Nintendo Switch and most of the graphical effects they try to push on people should also be toggleable off if the player so chooses. I will admit that I really enjoy upscaling: FSR/DLSS/XeSS and even the kind that Lossless Scaling has, all of which alongside frame generation, in theory, should make it so that you don't have to buy a new GPU for the upcoming decade but can just gradually dial those settings back for more demanding (but optimized) games and still get some enjoyment out of it.

Clearly, that's not what's actually happening in the gaming industry though, where you buy a modern AAA game and need both upscaling and framegen as a crutch just to get to 30 FPS, and oftentimes not even that, in beloved franchises that are otherwise great. It's not much better elsewhere either, seeing how demanding even basic software now is, shipping full browser instances just to render a UI, in part because cross platform solutions for UI aren't very good or easy to work with, compared to webdev technology.

For example, have a look at this video: GTX 1050 Ti: Oblivion Remastered LAG FEST! (Far Cry 4 Sanity Check)

(just an image if you don't want to look at the video right now, I'm not doing full embeds)

It's similar to how some people like Threat Interactive are speaking the truth about modern game development, regardless of the status quo.

At the same time, even on my slightly older A580 some other games that don't use UE5, like Arma Reforger, can both run really well with a lot of the settings turned off on comparatively budget builds, in addition to letting you turn on whatever you want to get most out of your hardware if you want to go in the other direction. Here's a few screenshots, when I did that comparison myself:

As you can see, the game looks pretty good in most cases, except you can feasibly make it run really well even on a somewhat sub-optimal setup (I think I had the A580 combined with a Ryzen 5 4500 back then), which is what I think the future of gaming should be like. What's more, the lower graphical settings do not make the game any less amazing and wonderful than it would otherwise be, you can still have plenty of enjoyment out of it! Whereas if you can't turn the settings down, the game running really badly definitely would ruin it for anyone!

Basically, think more along the lines of Doom 2016, instead of Doom: The Dark Ages, which forces ray tracing to be on with no way to turn it off. It's not that ray tracing and similar expensive methods in and of themselves are the issue, but just the fact that you don't have the ability to disable them.

Anyways, I digress.

Summary

It's unfortunate that dual GPU setups come with so many issues, even though I still think that they should be the default, to avoid e-waste with old GPUs and offload a whole bunch of tasks to secondary cards. Yet, currently, trying to do that with two Intel Arc cards leads to even video playback not really working that well, whereas my old RX 580 actually had stability issues and some games would pick the AMD card instead of the Intel card, probably thinking that it's an iGPU, instead of following the Windows 11 settings.

Either way, I'm happy that I could clean the case up a little bit. The AIO should also help the CPU work better long term, and the dynamic framerate limiter together with RTSS should give the setup a slightly longer lifespan, hopefully with more games in the future also supporting XeSS, although Lossless Scaling will surely help and step in when the games don't. I've no idea what the future of gaming looks like, but I'll definitely try to support the developers that care about optimizing their games and making good use of the hardware.

If all goes well, this setup should last me until around 2030 before I need a significant new upgrade, maybe aside from replacing some of the HDDs with less bulky SSDs (those are still a bit expensive over here, at least the 1 TB and larger versions) or just replacing drives when they do fail. I still have a backup HDD sitting in a closet, so that I may replace a failed one with it and restore any data from backups.

I have to admit that people who said that multi GPU setups don't work well were ultimately right, but I'm still happy that I looked into it all, even if it took a bunch of exploration and blog posts to get here. This will probably be the last hardware focused post for a little bit, I'll most likely get back to writing more about programming and software development after sorting all of this out.
