Building brittle software


For most of the week, my blog, homepage and some other sites have been down, so let's talk about brittle software!

It all started when I wanted to have a quick look at the font stack on my homepage, since I'd forgotten exactly what it was and there's this one lovely site that gave me some inspiration. Unfortunately, when I went to open the site, my browser refused to load it:

(you'll notice that a lot of the details in this post are redacted; I got a bit curious about how many environment details I'd still accidentally let slip if I tried redacting them for a post, so let's see)

It wasn't the site loading slowly, a database connection failing, or even Apache2 failing to reverse proxy requests to the Docker container responsible for that particular site. Instead, the whole thing was just down.

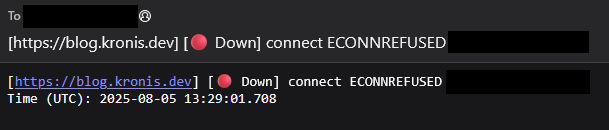

That's quite odd, since I do have monitoring set up: Uptime Kuma, which has generally worked pretty well. It previously sent alerts to Mattermost, but since I no longer run my own Mattermost instance (so I'd have less software to keep patching constantly), it was hooked up to my mail server instead.

Was the mail server also down, so that the notifications never made it to my inbox? Aside from the fact that in the future I might need to hook the monitoring up to some 3rd party mailbox (rate limits and privacy be damned), opening the monitoring site itself on another server showed that everything had been down more or less since the start of the month:

Normally, that'd be pretty terrible! It's like most of my online presence had been wiped out for a little bit, but the good news is that none of it is particularly important: it's not like people can't receive healthcare or other essential services during this downtime. It mostly just hosts this blog, my homepage and a few side projects here and there.

In that sense, not having SLAs is freeing, but on the other hand, it's also annoying. You see, I purposefully pick software and stacks that are quite boring, with the idea that I'll have fewer surprises along the way.

Docker Swarm issues

Unfortunately, there are still plenty of those, regardless of what I do:

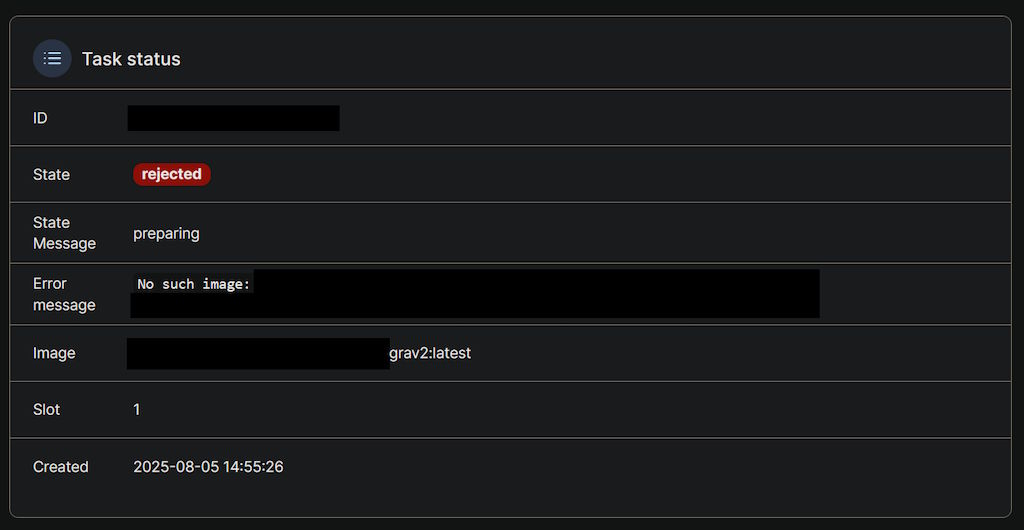

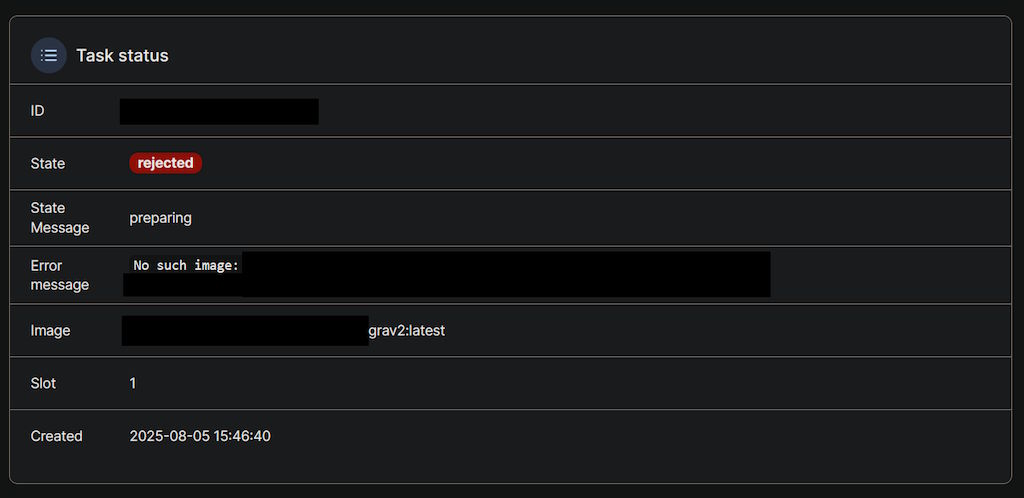

So, it was complaining that the Docker images for my software could be found neither locally nor in my custom Docker Registry. That should never happen: even if the registry is down, there's no reason for the local image to disappear.

Yet here I was. Digging around in the logs, I could also see this for some of the services:

network sandbox join failed: subnet sandbox join failed for "10.0.2.0/24": error creating vxlan interface: file exists

For what it's worth, folks online seem to have run into similar issues; it appears to be one of those things you hit if you run a cluster for long enough. Not exactly a point in favor of using Swarm in my eyes, but I've seen similar issues with Kubernetes in the past as well, so I can't complain that much.

Either way, I cleaned everything up... and the issue didn't disappear.

Build issues

It seems that what had happened was this: since the containers couldn't start, the images were considered unused. That's when the daily/weekly/whatever container cleanup (docker system prune) picked up on them and wiped them from the local nodes, so they simply weren't there anymore.
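To make that failure mode concrete, here's a minimal Python sketch that models it, assuming a prune treats an image as unused whenever no existing container references it (roughly what an aggressive `docker image prune -a` does). The image names are hypothetical. The second function shows the kind of guard that would have helped: never pruning an image that a Swarm service spec still wants, even if none of its tasks are currently running.

```python
def prune_candidates(local_images, container_images):
    """Images a naive prune would delete: present locally, used by no existing container."""
    return sorted(set(local_images) - set(container_images))


def safe_prune_candidates(local_images, container_images, service_images):
    """Same as above, but keep anything a Swarm service spec still references,
    even if its containers keep failing to start."""
    return sorted(set(local_images) - set(container_images) - set(service_images))


if __name__ == "__main__":
    # Hypothetical state: the blog's tasks keep failing, so no container uses its image.
    local = ["registry.example/blog:latest", "registry.example/homepage:latest"]
    running = ["registry.example/homepage:latest"]
    wanted_by_services = local

    print(prune_candidates(local, running))  # ['registry.example/blog:latest']
    print(safe_prune_candidates(local, running, wanted_by_services))  # []
```

With the real CLI, a comparable guard is to label the images you care about and prune with `docker image prune -a --filter "label!=<your-label>"`, which only removes images that don't carry that label; the label name is whatever you choose.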

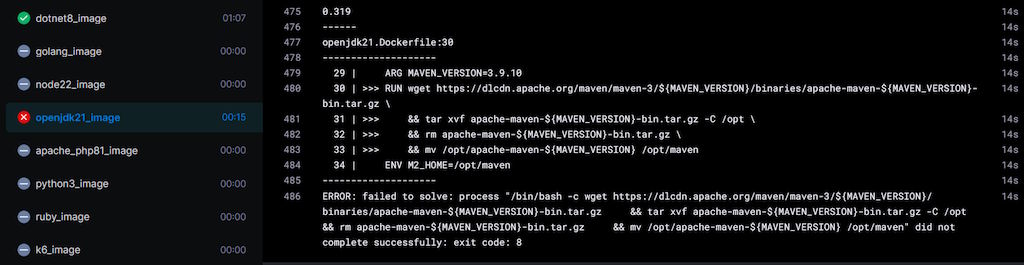

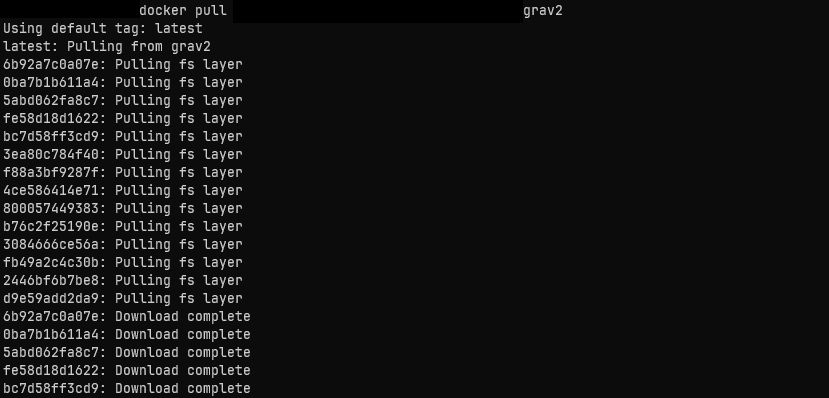

Well, why not just pull them from the registry, then? Because they're not in the registry either! You see, since I don't want to manually recreate the blob stores or clean them by hand, the registry has a cleanup schedule as well. While the images are also set up to be rebuilt automatically so that the latest versions are always there (but not old builds from months ago), clearly that wasn't working:
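A purely age-based cleanup has exactly this weakness: once the rebuilds stop, every tag eventually ages out and the repository ends up empty. Here's a minimal Python sketch of a retention rule with a floor, assuming tags are described as hypothetical `(repository, tag, pushed_at)` tuples. It isn't what Nexus or any particular registry implements, just an illustration of the rule.

```python
from datetime import datetime, timedelta


def images_to_delete(tags, now, max_age, keep_newest=1):
    """Age-based retention with a safety floor.

    tags: list of (repository, tag, pushed_at) tuples.
    Deletes tags older than max_age, but always keeps the keep_newest most
    recent tags of every repository, so a broken rebuild can't empty it.
    """
    by_repo = {}
    for repo, tag, pushed_at in tags:
        by_repo.setdefault(repo, []).append((pushed_at, tag))

    doomed = []
    for repo, entries in by_repo.items():
        entries.sort(reverse=True)  # newest first
        for pushed_at, tag in entries[keep_newest:]:
            if now - pushed_at > max_age:
                doomed.append((repo, tag))
    return sorted(doomed)
```

With `keep_newest=0` this degrades into the plain "delete everything older than N days" policy, which is the variant that leaves nothing behind when the builds have been broken for long enough.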

The blog runs on Grav, so it needs a PHP base image to build. Since I build my own (for layer reuse and consistency's sake), the build tries to get it from the registry... and fails.
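Building your own base images turns the CI job into a dependency graph: if the PHP base image doesn't get built, nothing on top of it can be built either. A minimal Python sketch using the standard library's `graphlib` (Python 3.9+) to compute a build order and the blast radius of a single failed build; the image names and the graph itself are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical image graph: each image maps to the base images it is built FROM.
IMAGE_DEPENDENCIES = {
    "ubuntu-base": set(),
    "php-base": {"ubuntu-base"},
    "openjdk-base": {"ubuntu-base"},
    "blog-grav": {"php-base"},
}


def build_order(deps):
    """Return an order in which every base image is built before its dependents."""
    return list(TopologicalSorter(deps).static_order())


def affected_by_failure(deps, failed):
    """All images that can't be built if `failed` fails (transitive dependents)."""
    affected, frontier = set(), {failed}
    while frontier:
        current = frontier.pop()
        for image, bases in deps.items():
            if current in bases and image not in affected:
                affected.add(image)
                frontier.add(image)
    return affected
```

Failing the whole pipeline loudly when `affected_by_failure` returns something non-empty is one way to make a broken base image hard to miss.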

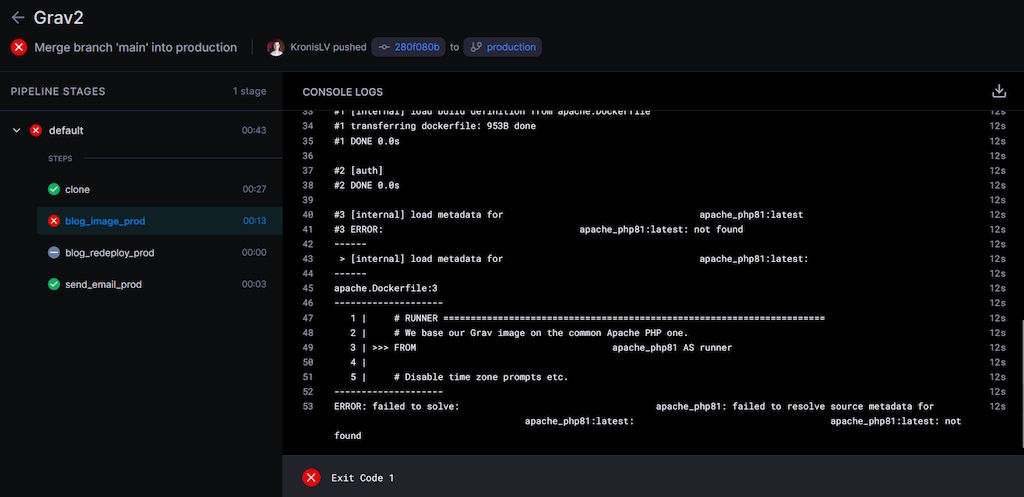

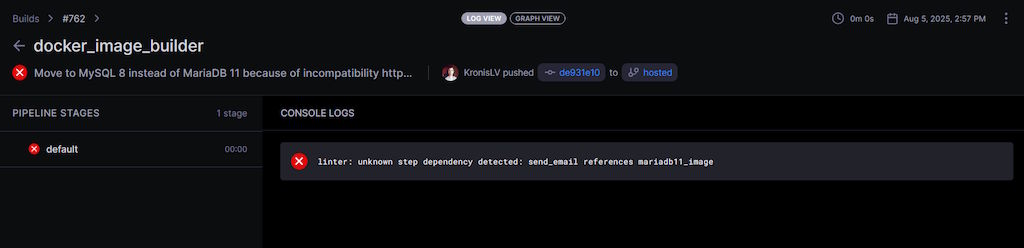

So why wasn't it there? Because the task that builds the images was failing due to some missing dependencies, left over from some refactoring I'd been doing:

You see, I had previously set things up around some MariaDB 11 based images, but because some MySQL drivers have issues with it, I figured that I'd probably have to go back to a regular MySQL 8 image (or whatever the LTS is). MySQL has more drivers available, and the instructions for some stacks even say that you should just use the MySQL driver for MariaDB, which is obviously no longer viable.
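A cheap guard against this class of breakage is a pre-build check that every `FROM` line points at something that is either built in the same pipeline or an explicitly allowed upstream image. A minimal Python sketch, assuming one instruction per line and no `ARG`-templated base images; the image names in the usage example are hypothetical.

```python
import re

# "FROM [--platform=...] image[:tag] [AS stage]", one instruction per line.
FROM_LINE = re.compile(
    r"^[ \t]*FROM[ \t]+(?:--platform=\S+[ \t]+)?(\S+)(?:[ \t]+AS[ \t]+(\S+))?",
    re.IGNORECASE | re.MULTILINE,
)


def strip_tag(ref):
    """'registry:5000/php-base:8.3' -> 'registry:5000/php-base' (digests dropped too)."""
    ref = ref.split("@", 1)[0]
    last = ref.rsplit("/", 1)[-1]
    if ":" in last:
        return ref[: len(ref) - len(last)] + last.split(":", 1)[0]
    return ref


def missing_bases(dockerfiles, built_here, allowed_external):
    """FROM references that are neither built by this pipeline nor allowlisted.

    dockerfiles maps an image name to its Dockerfile contents; references to
    earlier build stages ("FROM x AS builder" ... "FROM builder") are not reported.
    """
    known = set(built_here) | set(allowed_external)
    problems = []
    for image, text in dockerfiles.items():
        stages = set()
        for ref, stage in FROM_LINE.findall(text):
            name = strip_tag(ref)
            if name not in known and name.lower() not in stages:
                problems.append((image, ref))
            if stage:
                stages.add(stage.lower())
    return problems
```

For example, a leftover `FROM registry.example/mariadb11-base:latest` after the MariaDB images were removed would show up as a problem before any build starts, rather than as a failed nightly job.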

Okay, fine, so I fixed the issue and everything should now be okay, right? Wrong: now that same task also couldn't build the OpenJDK image, because the Maven binaries were no longer available on the site I downloaded them from, leading to more failures:

Why download them from the Apache site directly, instead of just using the OS package manager? Well, the thing is that I tried doing that initially, but the version included with the OS (at least back then) was too old, meaning that some dependencies would fail to install properly and some plugins and such would fail to work.

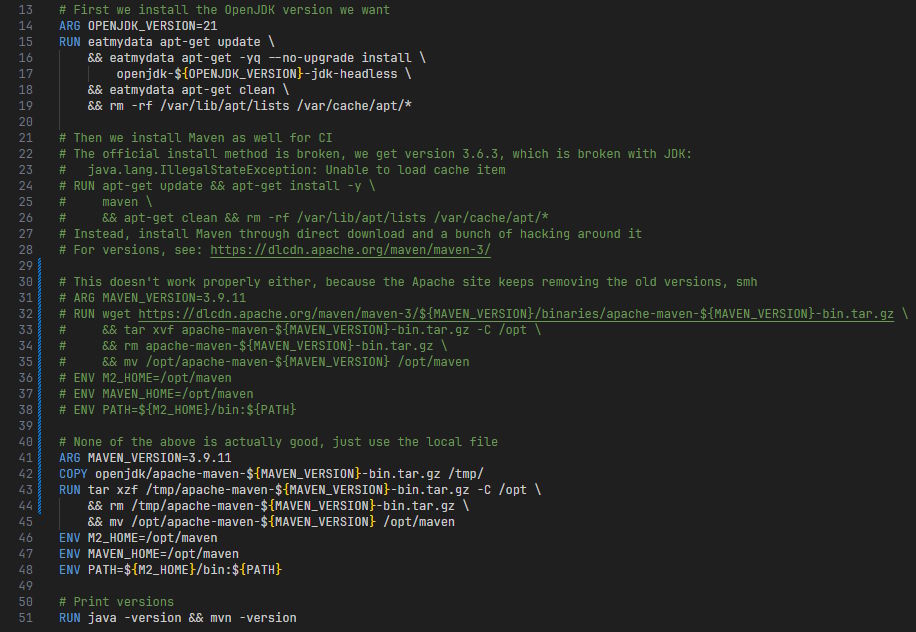

So instead of hoping that they packaged the correct version in the latest Ubuntu LTS, I just decided to outright copy the .tar.gz into my Git repo and to use it directly, giving me even more control over what I want to include in my containers:
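One nice side effect of vendoring the archive is that its integrity can be pinned as well. Apache publishes a `.sha512` file next to each Maven release, so the expected value can be committed alongside the tarball. A minimal Python sketch that checks a vendored archive against it before the image build uses it (file names are placeholders):

```python
import hashlib


def sha512_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large archives don't have to fit in memory."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_vendored(path, expected):
    """Raise if the vendored archive doesn't match the recorded checksum.

    `expected` may be a bare hex digest or a "digest  filename" line.
    """
    expected_hex = expected.split()[0].lower()
    actual = sha512_of(path)
    if actual != expected_hex:
        raise ValueError(f"checksum mismatch for {path}: got {actual}")
    return True
```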

That finally seemed to fix the builds, and while I was waiting for them to finish, I noticed some e-mails about failures in my mailbox after all:

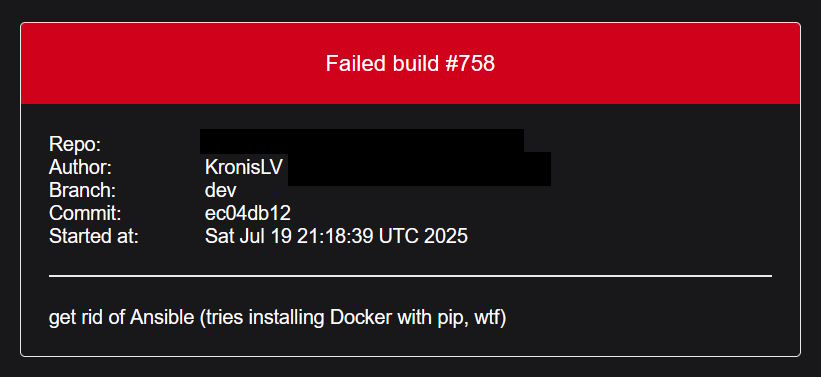

This example mail was from when I removed my own Ansible image as well. For whatever reason, it seems I needed Docker installed inside the containers alongside it (probably to use the Docker or Swarm tasks), but after an Ubuntu LTS version upgrade it was failing with PEP 668 related issues, and frankly I wasn't using it that much outside of work, so it got cut.
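For context, PEP 668 lets a distribution mark its system Python as "externally managed" by placing an `EXTERNALLY-MANAGED` file in the standard library directory, after which pip refuses to install into it outside of a virtual environment. A minimal Python sketch of that check, based on the marker file the PEP describes; it approximates what installers do rather than reproducing pip's own code.

```python
import os
import sys
import sysconfig


def is_externally_managed():
    """Rough check for a PEP 668 'externally managed' interpreter.

    The marker is ignored inside a virtual environment, which is why
    creating one is the usual way to install packages on such systems.
    """
    if sys.prefix != sys.base_prefix:  # running inside a venv
        return False
    stdlib_dir = sysconfig.get_path("stdlib")
    return os.path.isfile(os.path.join(stdlib_dir, "EXTERNALLY-MANAGED"))
```

In a container image, the usual workaround is to create a virtual environment (`python3 -m venv /opt/venv`) and install Ansible and its dependencies into that instead of the system interpreter.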

There were also a few more messages closer to the exact issues pictured here, but you get the idea: the cause was forgetting a line or two in the CI/CD config, probably late some evening, with no second set of eyes to look over my personal changes (nor did I bother feeding it all into an AI for code review), and then probably being a bit too swamped with work the next morning to notice the e-mail about the failure.

And since failed Docker Swarm deploys don't have any alerting either, this whole thing just kept getting worse. I have no idea why my uptime monitoring didn't alert me properly; maybe something was going on with the mail server as well? It's probably a good example of why hosting the monitoring for your self-hosted apps on the same servers isn't a good idea, but I'm in no position to pay for more servers, so it's not like there's a good solution for this.
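Swarm itself won't notify anyone when a service sits at 0/1 replicas, but the data is there. A minimal Python sketch that parses the output of `docker service ls --format '{{.Name}} {{.Replicas}}'` and reports every service running fewer tasks than it wants. Running it from cron and sending the result by e-mail or webhook is an assumption about how you'd wire it up, and is left out.

```python
def degraded_services(service_ls_output):
    """Parse `docker service ls --format '{{.Name}} {{.Replicas}}'` output.

    Returns (name, running, desired) for every service running fewer
    tasks than it wants. Extra text after the replica count, such as
    "(max 1 per node)", is ignored.
    """
    degraded = []
    for line in service_ls_output.splitlines():
        parts = line.split()
        if len(parts) < 2:
            continue
        name, replicas = parts[0], parts[1]
        running, _, desired = replicas.partition("/")
        if running.isdigit() and desired.isdigit() and int(running) < int(desired):
            degraded.append((name, int(running), int(desired)))
    return degraded
```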

But at least now everything would be finally solved, right?

The images were building correctly once more, and the Swarm stack was about to be redeployed with them as soon as they finished downloading from the registry:

Eureka! Or at least it seemed so...

More issues with networking

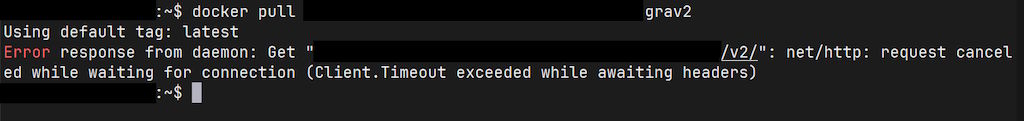

Except of course they wouldn't get fully downloaded, because I can't have nice things:

Essentially, it once more complained that the image didn't exist, even though the build logs very much told me that it had been uploaded! Not only that, but from my local PC, I could pull the image with no issues whatsoever:

Yet, trying to pull it on the server would just return timeout errors:

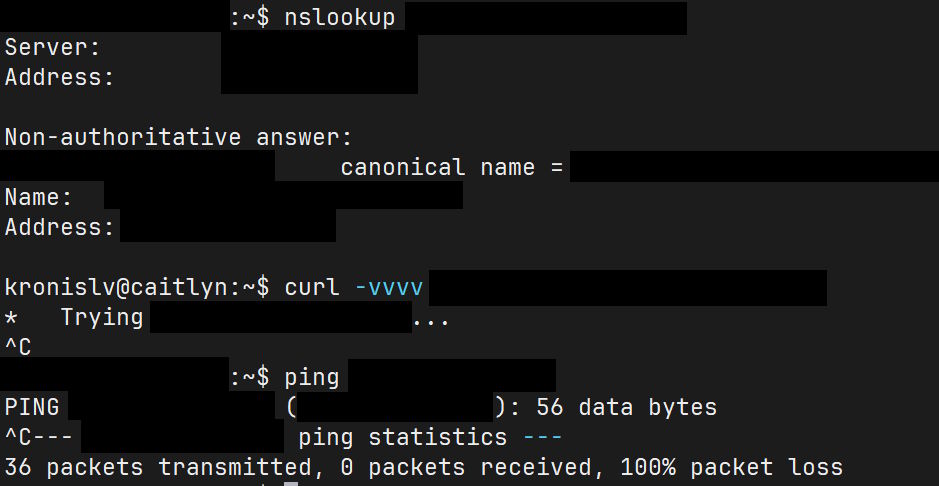

Increasing those timeout values didn't help at all. So I started debugging, one thing led to another, and eventually I got to the root of the issue. Admittedly, this looks like something out of declassified NSA documents, but here you go:

What's visible here is that I can get the DNS records for the domain (now served by INWX, since I migrated from NameCheap because I also wanted EU based alternatives to the US services I had been using up until now, as much as possible). However, when I try to actually ping the server, or get data from it, nothing happens...

It's not a connection that's refused, it's not a port that's closed, it just... stalls. I did a bunch of exploration, and from what I could tell, Contabo VPSes had suddenly decided NOT to be able to talk to one another over their public IPs. For example, if server A reaches out to server B to retrieve some data, that would just no longer work. I could reach the servers from my PC as normal, but they couldn't talk to one another.
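The "it just stalls" part is the useful clue: a closed port normally answers with a reset straight away, whereas silently dropped packets only ever show up as a timeout. A minimal Python sketch for telling the two apart from each node; the host and port are whatever you're testing.

```python
import socket


def probe(host, port, timeout=3.0):
    """Classify a TCP connection attempt as 'open', 'refused', 'timeout' or an error.

    'refused' means something actively rejected the connection (host reachable,
    port closed); 'timeout' means packets are silently dropped somewhere on the way.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "refused"
    except socket.timeout:
        return "timeout"
    except OSError as exc:  # e.g. network unreachable, DNS failure
        return f"error: {exc}"
```

Running it from server A against server B's public IP and from a home PC against the same address makes the asymmetry visible: the same port comes back `open` from outside and `timeout` from the neighbouring node.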

This behavior is all the more odd since I shouldn't need a private network for this (public IPs should be fine in a zero trust environment), and I couldn't find any odd iptables rules, regular firewall rules or anything like that either. Because I don't have as much free time these days, I just ended up throwing Tailscale at the problem and more or less called it a day.

Of course, that doesn't quite solve all of my issues. You see, I need to access my services through URLs like https://my-registry.my-domain.com/some/path, and the exact domain matters, because that's what I'm getting a certificate for through Let's Encrypt. Arguably, I should probably use a wildcard certificate so that certificate transparency logs don't advertise every subdomain to attackers, but at the same time Apache2 doesn't support wildcards with mod_md, so that will have to wait.

Either way, I could specify the Host header directly even when talking to a node through its Tailscale IP, a bit like:

curl -H "Host: my-registry.my-domain.com" http://<tailscale_ip_or_name>/some/path

But clearly I can't do that for every web request from every piece of software that I use. So, short of running my own DNS server (which also adds a bunch of complexity and misconfiguration risks), I decided to patch the /etc/hosts file as a good enough solution, since I don't have that many nodes.

There's another problem there, though: I use CNAME records to give my services human-friendly names that point at the DNS records of the servers they run on. For example, for the registry example domain, I'd have something like:

my-registry.my-domain.com --CNAME--> my-server-5.my-domain.com --A--> 10.110.0.5

This is also sometimes helpful when I want to move software between servers, since I can just change the CNAME to point to another server and don't have to think about IPs at all.

The problem is that it doesn't seem to be enough to add hosts entries for just the servers' A records; I also need entries for the CNAME names, otherwise some software won't resolve them to the Tailscale node IP addresses I want. So basically I'm replicating my DNS zones in the hosts file, with the added disadvantage that it doesn't support anything like a CNAME, so it all ends up being quite awkward.
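Since the hosts file only understands name-to-address mappings, the CNAME layer has to be flattened by hand. A minimal Python sketch that generates the entries from the two kinds of records, using the example names above and a placeholder address from the 100.64.0.0/10 range that Tailscale hands out; in practice the input would come from your actual zone data.

```python
def hosts_entries(a_records, cnames):
    """Flatten CNAME chains into /etc/hosts lines, since hosts files have no CNAMEs.

    a_records: name -> IP (e.g. the Tailscale addresses of the servers)
    cnames:    alias -> target name
    """
    lines = [f"{ip}\t{name}" for name, ip in sorted(a_records.items())]
    for alias in sorted(cnames):
        target, seen = cnames[alias], {alias}
        while target in cnames:  # follow multi-level chains
            if target in seen:
                raise ValueError(f"CNAME loop at {alias}")
            seen.add(target)
            target = cnames[target]
        if target not in a_records:
            raise KeyError(f"{alias} points at {target}, which has no A record")
        lines.append(f"{a_records[target]}\t{alias}")
    return lines


if __name__ == "__main__":
    a = {"my-server-5.my-domain.com": "100.64.0.5"}  # placeholder Tailscale IP
    c = {"my-registry.my-domain.com": "my-server-5.my-domain.com"}
    print("\n".join(hosts_entries(a, c)))
```

Regenerating the file from one source of truth keeps the "move a service by changing its CNAME" workflow, at the cost of redistributing the output to every node.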

While that did solve my immediate issue of nodes not being able to communicate (since the traffic is now routed through Tailscale and works correctly), it doesn't feel like a proper solution. It also feels like I shouldn't have had to mess around with this in the first place, since there's no good reason (other than maybe a lot of attacks originating from their servers) for Contabo to restrict traffic like that. I'm not saying that they're doing that; maybe there's some other weirdness going on, who knows. Running traceroute just threw me into a void after a few hops.

What's worse, this doesn't really apply to my Docker containers: they don't inherit the /etc/hosts contents of the host node, so if they ever run into the same issues communicating with any of my software through the public IPs (though I generally just use internal networks within the Swarm, after patching the MTU settings and getting everything working with Tailscale), I'd either need a lot of entries in extra_hosts or have to look for other options.
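If it ever comes to extra_hosts, the same records can at least be reused instead of maintained twice. A minimal Python sketch that turns the hypothetical A and CNAME mappings from the hosts-file example into Compose-style `hostname:ip` entries, with every CNAME resolved to the address of the server it points at:

```python
def extra_hosts(a_records, cnames):
    """Build Compose-style extra_hosts entries ("hostname:ip") for every name,
    resolving CNAMEs to the address of the server they point at."""
    entries = []
    for name in sorted(set(a_records) | set(cnames)):
        target, hops = name, 0
        while target in cnames:
            target = cnames[target]
            hops += 1
            if hops > len(cnames):  # more hops than aliases means a cycle
                raise ValueError(f"CNAME loop at {name}")
        entries.append(f"{name}:{a_records[target]}")
    return entries
```

The resulting strings could be pasted under a service's `extra_hosts:` key in the stack file, though it still means redeploying services whenever a mapping changes.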

By now, I had received some monitoring e-mails, first about the site going down and later about it coming back up:

How we got here

By now, the service is largely restored. As for how it got this bad?

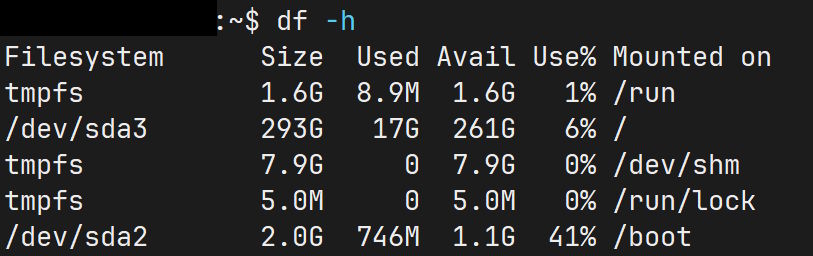

Hard to say. Part of it is probably having historically run into issues with not enough storage for container builds (Ubuntu LTS based ones, because Alpine had some compatibility issues despite its smaller image sizes), which led to overly aggressive cleanup policies. On top of that, Sonatype Nexus and similar software sometimes just kinda fails to clean up container images, given that the layer system seemingly isn't the most trivial thing in the world.

Luckily, at least with Contabo, it's not as much of an issue, especially since I opted for a few bigger servers instead of multiple smaller ones:

Regardless, that's quite the tally for one day:

- monitoring and alerting issues

- container cluster issues

- dependency issues

- automated process issues

- container build issues

- container deployment issues

- networking issues

The silver lining is that I had invested enough time to be able to rebuild all of the images that had been cleaned up. Within a few hours, everything was back up, at least until the next time something like this happens. So what's my plan to prevent such situations?

Largely nothing.

Sure, I'll look into better alerting, maybe something like unregistry for pushing images directly to the servers, maybe even a way to have custom rules for cleaning up containers, or a bare registry image with some auth in front of it (because Harbor would be overkill and Nexus kind of sucks)... but all of these issues happened in the first place because my hands are largely tied.

Unregistry wouldn't work when I need to build images that have prerequisites, unless I want to send all of my OpenJDK images and Python images and everything else to every single CI server every time I rebuild them (though with storage being cheap, maybe that is more viable than it sounds, especially since I have just a few CI nodes).

The registry choice itself was just based on what I could set up easily enough for free, and even the lack of tooling around cleanup policies and such is just a matter of not having enough time and resources to sit down, integrate with Docker or other APIs directly and write code to handle it like any other automation problem. The same goes for Docker Swarm not alerting when containers fail to start up: I had to get rid of Mattermost because it also takes time and effort to manage, so I'm probably stuck with e-mail alerting, which is easier to overlook for whatever reason (and might also need an external mailbox).

The same goes for hosting the monitoring infrastructure on the same servers: it helps in cases where a site or two refuse to work, but not when network weirdness brings everything down. Then again, it's not like I'm getting another node on another cloud just for that, since literally all servers out there are kind of expensive and I earn nothing from these.

It just feels like a lot of the software out there is going to have edge cases and will end up brittle, no matter what you do.

The perfect world

I had this old post of mine: Never update anything

It was written to be a bit absurd, and since then the post has had a few updates, my blog has had a few updates and pretty much every piece of software or hardware that I use has had a few updates. The exception is a cheap notebook I have lying around somewhere: its Wi-Fi breaks with any update, and I'd need to re-compile some random GitHub repo I found that makes it work again, although at this point its battery is also kinda dead, to the point where it dies within an hour off the plug.

But I digress. I was going to say that everything around me has had updates... and that that's also very much the problem. With updates come changes, and with changes, things break, oftentimes in ways that are hard to anticipate ahead of time, and all of which take time and effort as you fight against the churn.

In a perfect world, things would go more like this:

- you get a CD/DVD/HDD/SSD/USB memory stick with installation media

- you install the OS, all of the first party packages are also available on the media

- updates, if any, are security oriented and tested (as thoroughly as SQLite is) to not be breaking

- if anything breaking needs to happen, there are fully automated install scripts that carry things over to a known good sane configuration for moving forwards (possibly with approval if done interactively)

- there is a set of packages that are well tested and well documented and proven to work together: a web server, a container runtime, a database solution, something for key-value storage and queues, maybe something for document storage, something for blob storage etc.

- there's also a hypervisor and/or a container runtime so everything you run is more or less isolated from the core system and won't mess it up

- the experience is somewhere between Docker, AppImage and Flatpak

- everything is generally geared towards just working together and keeping working

- you set up software in 2025, it runs pretty well until 2050, then you spend a day doing updates and are good until 2075

Honestly, 25 years of software running well should be the starting point, not the end goal. But alas, try running Windows XP in this day and age and connecting it to a network, and see what happens: you just can't do that, unless you like being part of a botnet. Even RHEL is only supported for about 10 years before EOL makes you upgrade your distro.

Summary

It's not that updates are bad per se, but that dealing with them leads to churn and things breaking, which more or less puts an upper limit on the amount of software you can run yourself, given however much free time you have.

Honestly, keep a system running for long enough and everything that can go wrong probably will anyway, so for now, the best idea is to just try to isolate components as much as possible, make them easy to carry over to another node (yes, this includes backups) and generally err on the side of boring and simple tech choices.

I've linked Service as a Software Substitute before, and while it still rings somewhat true, the people paying for SaaS or PaaS solutions are probably spending less time on things like these and are out there running awesome software and shipping more often.

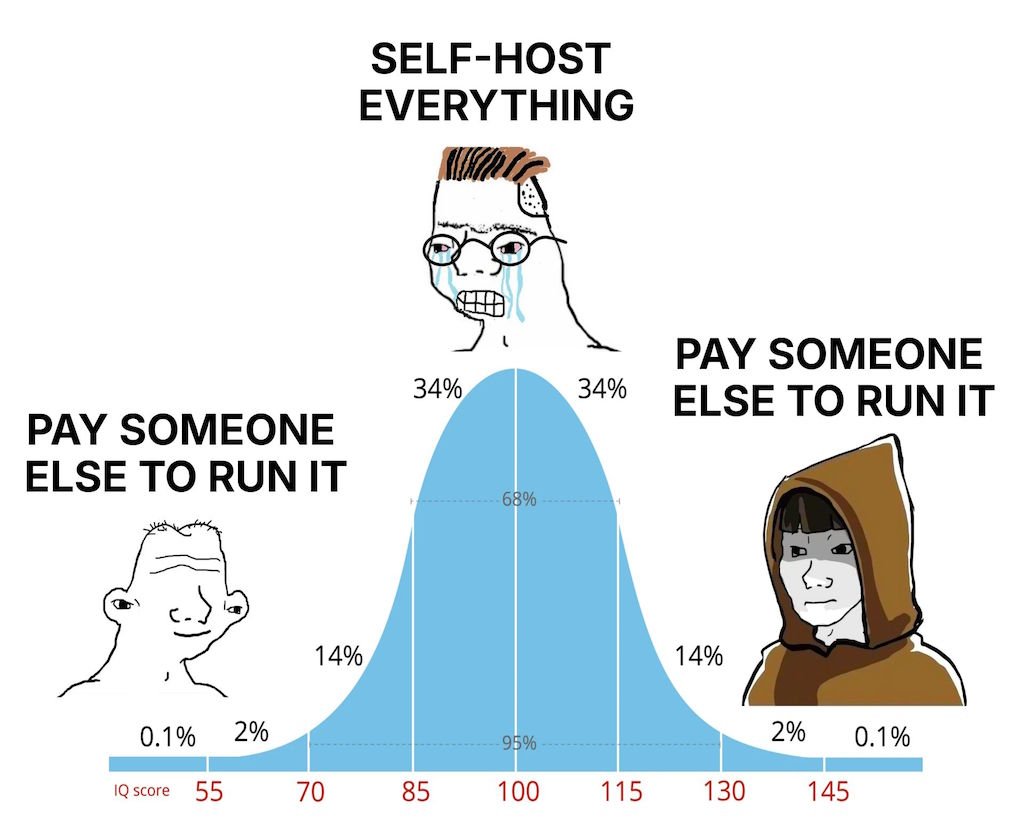

If I had to summarize everything with a slightly vulgar meme, I'd go for this one:

Of course, maybe the meme above doesn't quite work either, because it seems like most people would indeed just reach for something like Railway, Render, Fly.io or one of those other platforms. But after self-hosting for a while, I do have more appreciation for services that trade away some of your freedoms for ease of use and fewer frustrations (until they raise their prices).

You do learn a bunch by self-hosting stuff, but it can be a bit tiring, even in simpler setups without something like Kubernetes, because of how brittle things can be, even when you try to follow the happy path as much as possible. I do realize that I'm basically saying that I need something like FreeBSD, but the thing is that the DX of Docker has also kinda spoiled me. I guess there is no silver bullet.

Oh well.
