The great container crashout


At the start of this month, I had an outage where more or less everything I host went down. It was partly due to failing builds, partly due to bad networking and partly due to broken cloud dependencies, alongside software just being plain finicky. The good news is that I resolved most of the issues and got everything back up and running. Eventually I even figured out that you need to set up static routes if you want servers on Contabo to be able to talk to one another directly.

Unfortunately, this weekend, everything broke again. My homepage was down. My blog was down. I couldn't even connect to some of my servers. For a second I thought that maybe it was finally my turn to get hacked, with someone stealing all of my data and taking over the servers... but nope, it was just more of software being obtuse garbage. Today, I'll tell you a bit more about the perils of self-hosting, though admittedly situations like this make me write increasingly profanity-laden posts, which I don't normally do.

Tailscale is broken

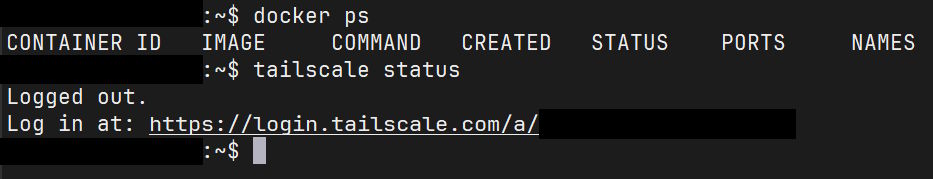

Either way, allons-y, everything is down:

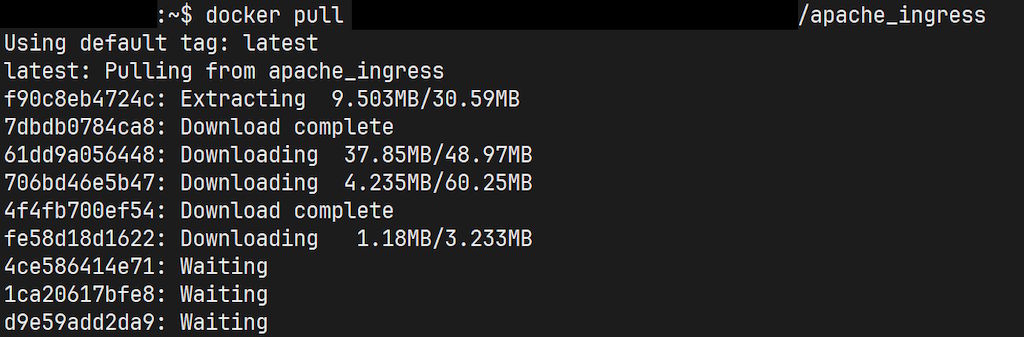

No containers are running, quite possibly because the servers can't reach the leader node of the Docker Swarm setup, and Tailscale shows that we're logged out, which explains that. I've also got host records set up so that nodes can access sites hosted on each other directly through the Tailscale IP addresses (so that things would keep working if I ever decide to cut off public access to anything I host), which is a bit of a problem, because suddenly they also can't pull new container versions.

Why are we logged out? The most I've done is update the Tailscale version a few times, but it's kind of horrible when such a base networking component is pulled out from under your feet, not unlike someone doing that to a rug that you're standing on. It seems like there are a few people experiencing similar issues with the latest version, but I honestly couldn't tell you the cause at the moment.

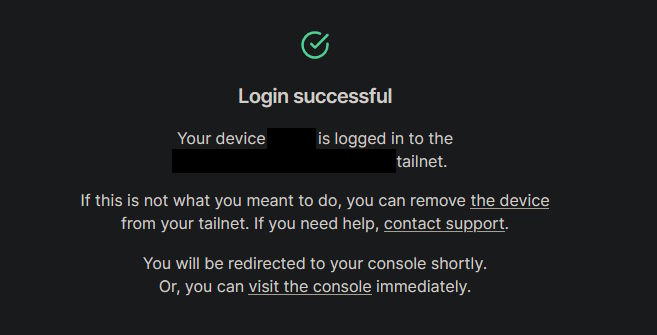

Either way, we log in:

Great! Except it would be nice if a service that's supposed to be fairly automated wouldn't randomly need human intervention. Nothing changed about the nodes in question, yet I was still logged out. I didn't even get an e-mail along the lines of: "Hey, nodes A, B and C have been disconnected from the tailnet due to reason X, please log back in if necessary." Nor could I even connect to some of the nodes, because I primarily use Tailscale to access them in the first place.

I did work around all that, but it's pretty clear that Tailscale can't be the backbone of all my networking.

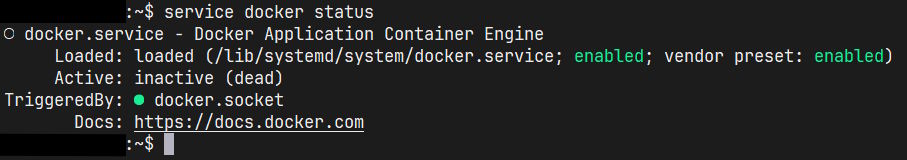

Docker is broken

Except even after a server restart (just in case), the Docker service shows inactive (dead) under its status. It's not even that the containers won't run, but rather that the thing meant to run them is dead. Another server restart did eventually help in all of those cases across multiple servers, though I've no idea why another one was needed:

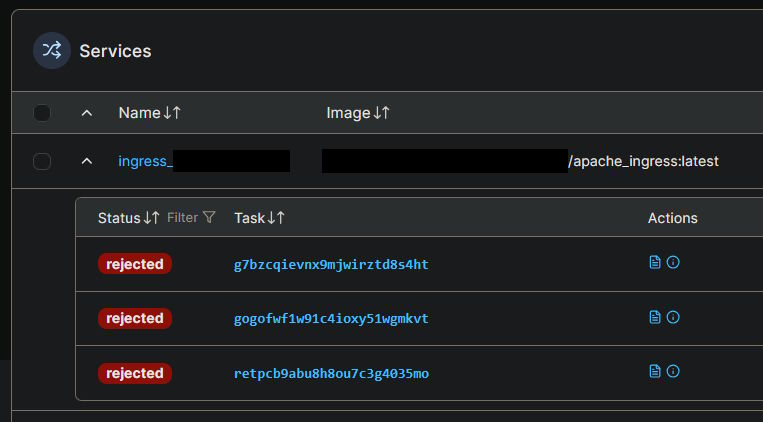

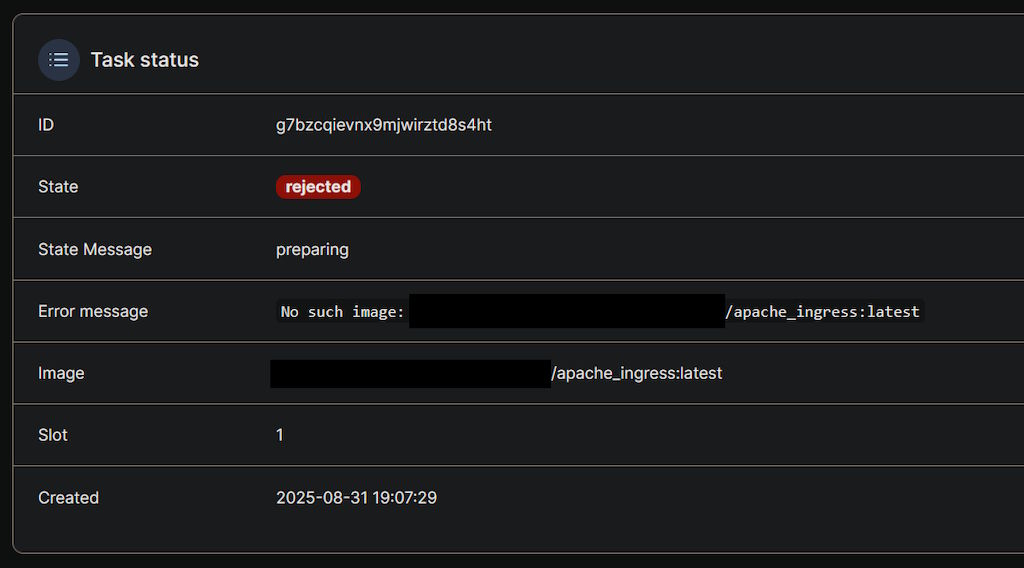

Once I got Docker up and running, it was time to run into our beloved old friend, tasks getting rejected on the servers:

The good news is that the nodes can talk to one another and actually try to schedule the tasks, so the Swarm network itself is okay after fixing Tailscale. The bad news? Well, the fact that it's complaining about containers not existing:

This is an issue that has been pestering me for years at the most inopportune of times. Sometimes an aggressive Docker cleanup removes an image that should be on the system and as a consequence the container can't be run. Other times the registry that you try to pull the image from is down and you can't download it. I've also seen cases where the image is present locally, but running it is still refused because the remote registry is unreachable.

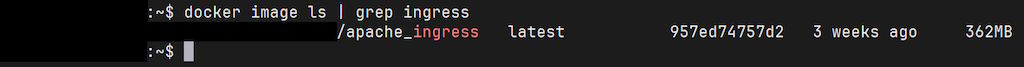

Actually, it looks very much like this:

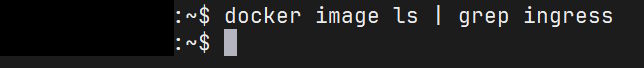

Which is funny, because we also get the other class of issues right alongside that:

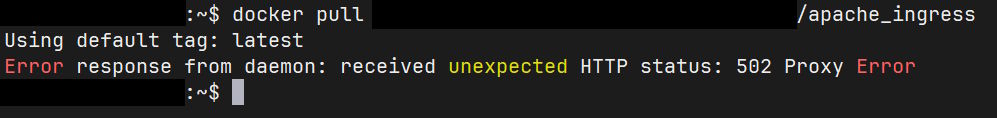

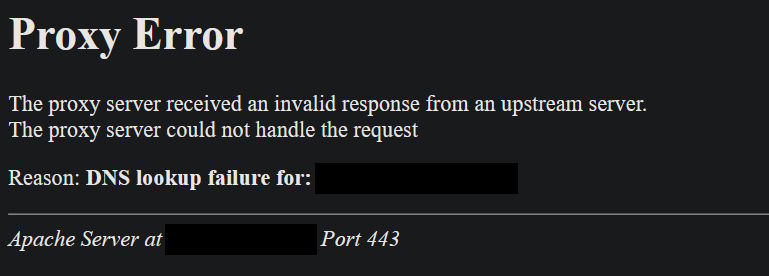

In such circumstances, the reasonable thing is to at least try to pull the latest version from said registry, except that we get a proxy error when we try to do that:

Now, why would there be a proxy error?

It's always DNS (and web servers)

My worst fear was that it'd be some sort of a byzantine error, where the ingress image has gotten wiped due to an automated cleanup and can't be pulled from the registry, because such an ingress is needed in front of the registry, meaning that none of it can be brought up as it is. One solution is to use a public Nginx/Apache2/Caddy image in front of the registry, though that's problematic when you want to use the same images in front of 20-30 sites across all your servers and build them yourself.

Another alternative is just to export the image as an archive (docker save writes a tarball) that you can re-import into the system manually if ever needed, instead of relying on those automated cleanups not ruining things for you sooner or later (you're kind of forced to run them, because if you build containers often, without them you'll run out of disk space fairly soon, at least on your average VPS). You can even name the image something like 127.0.0.1/my-project/my-image so that Docker wouldn't try to reach out to your registry at all, in addition to perhaps deploying the images through SFTP/SCP as a push, instead of a pull.
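The export and re-import idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the image tag and archive name are placeholders, the helper names are made up, and the copy step between the two machines is left out. It only builds and prints the commands; the run() helper is there for when you actually want to execute them.

```python
import subprocess
from pathlib import Path


def save_command(image, archive):
    """Build the argv that exports an image (layers and tag) to a tar archive."""
    return ["docker", "save", "--output", str(archive), image]


def load_command(archive):
    """Build the argv that imports a previously saved archive on the target host."""
    return ["docker", "load", "--input", str(archive)]


def run(argv):
    """Run a command and raise if it exits with a non-zero status."""
    subprocess.run(argv, check=True)


# Placeholder names, nothing here is taken from a real setup.
image = "127.0.0.1/my-project/my-image:latest"
archive = Path("my-image.tar")

print(" ".join(save_command(image, archive)))  # run where the image was built
print(" ".join(load_command(archive)))         # run on the server after copying the file
```

Since the archive carries the tag with it, the server ends up with the image under the same name without ever talking to a registry.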

I'm actually doing something similar at work, which works nicely, but haven't gotten around to fixing my own setup. The good news (somewhat) is that this time it was a plain DNS error inside of the Apache2 container:

This is also puzzling. I got the registry image working BUT it wasn't being resolved inside of Apache2 despite it existing. My best guess is that either the web server container cached the fact that no such container name could be resolved (internal domain name, such as my-registry), or that expecting to be able to "hot plug" new containers doesn't always work that well, in circumstances that are both unclear and inconsistent, because that usually works.
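One way to narrow this sort of thing down is to check whether the name resolves at all from inside the container that complains. Below is a minimal sketch of such a check, assuming Python is available in (or can be copied into) that container. The resolves() helper is made up for this post and my-registry is just the example name from above.

```python
import socket


def resolves(name, resolver=socket.getaddrinfo):
    """Return True if `name` currently resolves to at least one address."""
    try:
        return len(resolver(name, None)) > 0
    except socket.gaierror:
        # Raised when the lookup fails, e.g. the name is unknown to the resolver.
        return False


# Hypothetical usage from inside the web server container:
# print(resolves("my-registry"))
```

If this says the name resolves while the web server still reports a lookup failure, then the stale answer is probably held by the web server process itself, which would at least be consistent with a restart fixing it.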

Regardless, after restarting the web server, everything suddenly started working:

It's a bit unfortunate that so often it boils down to:

Have you tried turning it on and off again?

because I'm quite sure that for a long time people were talking both about graceful degradation, as well as being able to bring systems up after failures, so that they'd be more self-healing. This experience right here proves that software is still quite brittle and that that line of reasoning, in practice, sometimes ends up being plain lies.

What to do about it

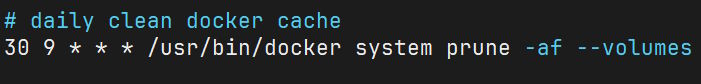

The first step is to acknowledge that I can't fully automate everything. Not with the time and resources (or lack thereof) that I'm dealing with at the present moment. The simplest step is to disable the overly aggressive Docker cleanup:

The command above essentially resolves to:

- remove all unused containers, networks and images that are dangling (untagged and not in use)
- -f: skip confirmation prompt, which is what we want when we automate things
- -a: remove all unused images as well, basically the ones that do have tags, but aren't in use
- --volumes: do the same for any volumes that might be left over after the containers that used them are gone

The problem with the above is that it's lies. You saw it yourself, the ingress containers were removed even though they were very much scheduled to run on the nodes and just weren't up for whatever reason. The fact that there were no running containers doesn't mean that the Swarm cluster should suddenly forget what should run where, or even the fact that when it was LAST CONTACTED something was supposed to be running on the node and therefore no removals should be allowed.

But they were. This has happened multiple times in the past. I don't even care whether it counts as a bug or some badly described behavior - it's stupid and I want it gone. So now, if an image is tagged, I'll only ever remove it manually.

Actually, I could even disable the untagged cleanups and just clean up manually, when there are too many intermediate build images or what have you. But first, this should be a pretty decent compromise to hopefully break the setup less often.
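To make the difference concrete, here's a minimal sketch that builds both variants of the cleanup command. It assumes the cleanup is a docker system prune call with the flags described above, which is my reading of those flags rather than a copy of the actual job. The prune_command() helper is hypothetical.

```python
def prune_command(include_tagged=False, include_volumes=False):
    """Build a `docker system prune` argv; without -a only dangling images are removed."""
    argv = ["docker", "system", "prune", "-f"]
    if include_tagged:
        argv.append("-a")  # also removes tagged images that no container uses
    if include_volumes:
        argv.append("--volumes")  # also removes volumes that no container uses
    return argv


aggressive = prune_command(include_tagged=True, include_volumes=True)
conservative = prune_command()

print(" ".join(aggressive))    # docker system prune -f -a --volumes
print(" ".join(conservative))  # docker system prune -f
```

The conservative variant still clears out stopped containers and untagged leftovers from builds, it just leaves anything with a tag alone.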

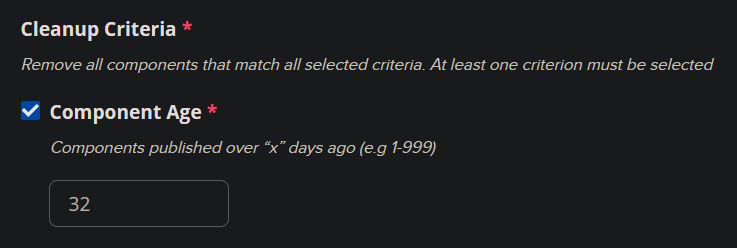

Secondly, I also disabled the automated Nexus cleanup:

That mechanism never worked well. I've seen Nexus instances gleefully ignore the fact that there are image layers stored in the blob stores and even when the cleanup pattern is *, nothing is cleaned up (with all of the correct tasks for cleanup run in order, multiple times).

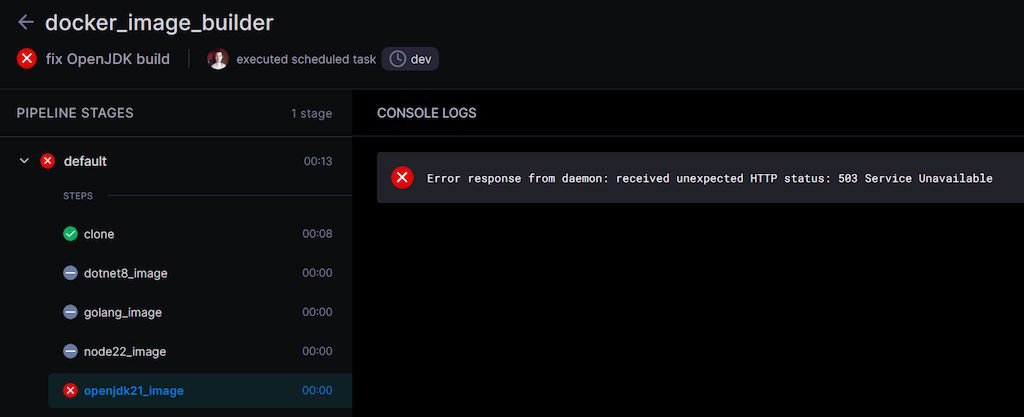

On the other hand, if there's a cleanup policy to delete images older than a month and you try to run a build just slightly ahead of that cleanup schedule, you're not exactly leaving yourself a lot of leeway if the builds decide to fail due to Tailscale rugpulling you:
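The leeway here is easy to put into numbers. The sketch below uses made-up values (a 30 day retention and a rebuild every 28 days), so treat it as an illustration of the arithmetic rather than my actual schedule.

```python
from datetime import timedelta


def cleanup_leeway(retention, build_interval):
    """How long a scheduled rebuild may be delayed before the old image is cleaned up."""
    return retention - build_interval


def image_survives(retention, build_interval, outage):
    """True if the old image is still within retention when the delayed rebuild happens."""
    return outage < cleanup_leeway(retention, build_interval)


# Hypothetical numbers: images are deleted after 30 days, rebuilds happen every 28 days.
retention = timedelta(days=30)
build_interval = timedelta(days=28)

print(cleanup_leeway(retention, build_interval).days)                # 2
print(image_survives(retention, build_interval, timedelta(days=1)))  # True
print(image_survives(retention, build_interval, timedelta(days=3)))  # False
```

With those numbers, builds that stay broken for three days are enough for the registry to delete an image before its replacement exists.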

It's the same rugpull that also prevents e-mails about those failures from going out, as well as those from the uptime monitoring system. I expected my sites or the web server to fail, not the stupid Docker setup. It seems like my choices are to fork over money for an external monitoring solution, to just accept the fact that my monitoring setup will never be perfect, or to just run it against an e-mail address that belongs to Google or some other 3rd party.

Back to my previous point, Nexus is also garbage, don't use it. Except you don't get the choice, because there aren't that many alternatives out there. You either pay Docker Hub to host your private repos, set up something a bit more complex like Harbor, or just struggle. We need a version of the regular Registry image, but with a decent auth and management solution around it. I'll just keep dreaming.

The timeline

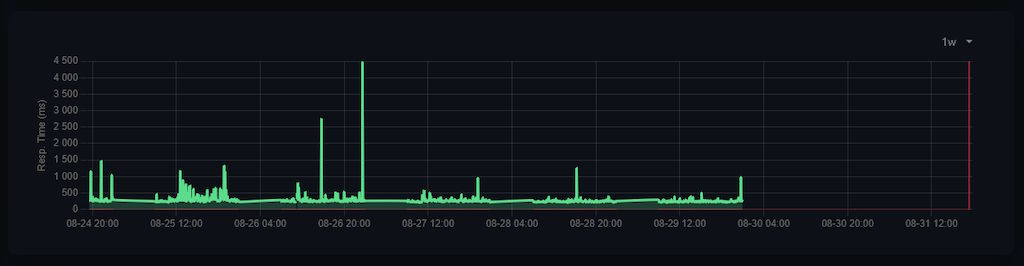

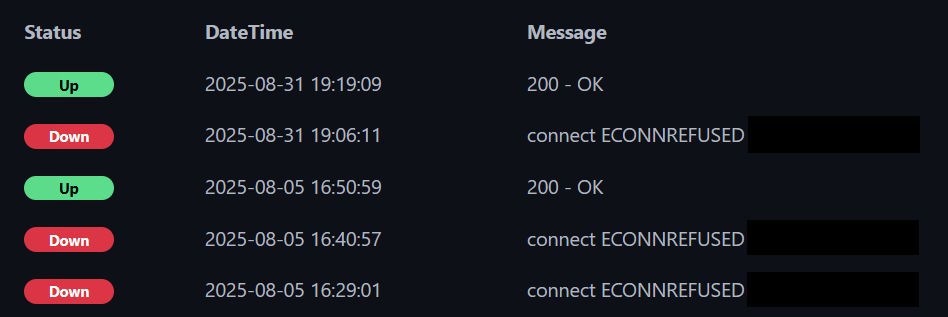

This time around, the issues weren't present for all that long, but once more I feel like it was a combination of factors and brittle software that just shouldn't have happened in the first place:

Even the timeline itself is incomplete, because the uptime monitoring software was in the same cluster that went down, hence you see the difference between there being no data about the last day whatsoever and then suddenly errors appearing:

Alas, it cannot ever be truly self-hosted, if I don't have enough nodes for that and want to run everything as a part of the same cluster.
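A cheap middle ground would be a tiny probe that runs on some machine outside of the cluster, for example from cron on a homelab box. What follows is a minimal sketch using only the Python standard library. The function names and the example URLs are placeholders, and how you get notified about the result is left open.

```python
import urllib.error
import urllib.request


def http_probe(url, timeout=10.0):
    """Return True if the URL answers with a 2xx or 3xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Covers HTTP error statuses, refused connections, DNS failures and timeouts.
        return False


def failing_sites(urls, probe=http_probe):
    """Return the URLs for which the probe reports a failure, in the given order."""
    return [url for url in urls if not probe(url)]


# Hypothetical usage on a machine that is not part of the cluster:
# down = failing_sites(["https://example.com/", "https://blog.example.com/"])
# if down:
#     print("DOWN:", ", ".join(down))
```

It won't replace a proper monitoring system, but it also can't go down together with the thing it's supposed to watch.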

Summary

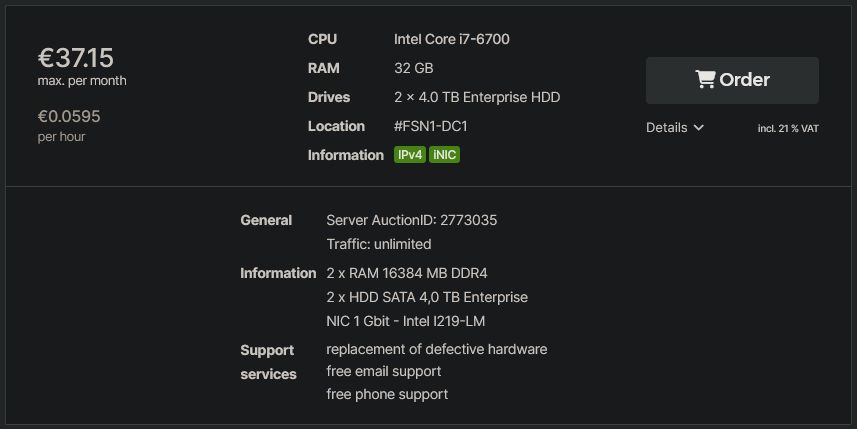

In summary, this sucks. It's simple enough for me to understand on a surface level and fix, but I just wanted to write some code over the weekend, not debug stupid things like that all over again. At this rate, I'll probably have gray hair before I hit the age of 30. I think the clearest solution here is to simplify things further, until such issues become more unlikely. What's my biggest step towards that? Well, I recently looked at the Hetzner server auctions and noticed that there are some pretty good offerings on dedicated servers:

The fact of the matter is that I don't want something hyper-scalable for my personal setup. I just want a large box somewhere with a stable OS on it, alongside a pretty good backup setup that pulls data back to my homelab boxes. Something in the cloud that has good networking and can run 24/7 unlike my own servers in the apartment which sleep roughly when I do. Then, inside of that one larger dedicated server, I can run a few VMs that will represent prod and dev "servers", just so that me messing with the ingress or any software on either won't break everything.

In that regard, having a single dedicated server will actually help keep the underlying infrastructure pretty simple, while also giving me a decent amount of bang for the buck and plenty of storage, so I can tell those cleanup policies to frick off and do stuff manually once every month or two, alongside still being able to limit the blast radius of me messing things up.

Also, since I'm not sponsored by Hetzner or anything, I can tell you that the internal UI for those dedicated servers absolutely sucks, but the prices and the hardware seem okay. In that regard, they're not unlike Contabo, though they do have a better reputation, presumably due to their support and track record. Either way, I also look forward to the day when I get rid of the pull approach for getting Docker images and can maybe even throw Nexus out into the trash and just send images directly to the server over SCP/SFTP, maybe even use something like unregistry.

But until then, I'm crashing the heck out - the weekend is kinda ruined and I didn't even learn much that's useful, outside of the fact that Tailscale has the odd failure mode of sometimes just disconnecting your servers and asking you to reconnect manually. I love that Tailscale exists, but WTF. Oh and also that I was not at all wrong in calling a lot of the software out there brittle. Well, okay, maybe the thing that's simplest for me would be to open my wallet and make all of the technical bits someone else's problem and just focus on building software, but that's slightly beyond my means for now, so I learn, I tinker and sometimes I suffer.

Who knows, maybe I'm damned to experience the darker side of Murphy's law, like how the protagonist in the game Receiver 2 exists in a world where anything that can go badly, will. I don't really blog about video games, but if I did, I'd definitely do a post about that game - because the writing and worldbuilding in it is pretty interesting. The counterpoint, of course, is that I've self-selected for trying to get my jank setup working with minimal resources.

Update

Turns out that Tailscale has a documentation page that explains the key expiry, although I'm not sure whether that was the cause or something similar to the issue mentioned above, since there was no messaging about any expiry to me. I get that it's a security feature, but still, some notifications about that would be nice. A bit like how Let's Encrypt used to do certificate expiry reminders over e-mail, but for whatever reason discontinued that service, which I find to be a bummer.
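Since no reminder arrives on its own, the expiry could be checked locally instead. The sketch below assumes that the JSON printed by tailscale status --json contains a Self object with a KeyExpiry timestamp. That is how I understand the output, but it's worth verifying against your own version. The sample data is made up.

```python
import json
import re
from datetime import datetime, timezone


def parse_rfc3339(value):
    """Parse an RFC 3339 timestamp, ignoring fractional seconds."""
    value = re.sub(r"\.\d+", "", value)
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"
    return datetime.fromisoformat(value)


def days_until_key_expiry(status_json, now):
    """Return the days left until this node's key expires, or None if no expiry is reported."""
    status = json.loads(status_json)
    expiry = (status.get("Self") or {}).get("KeyExpiry")  # assumed field names
    if not expiry:
        return None
    return (parse_rfc3339(expiry) - now).total_seconds() / 86400


# Made-up sample; real input would come from the Tailscale CLI on the node.
sample = '{"Self": {"HostName": "node-a", "KeyExpiry": "2025-03-01T00:00:00Z"}}'
now = datetime(2025, 2, 20, tzinfo=timezone.utc)
print(days_until_key_expiry(sample, now))  # 9.0
```

Run on a schedule with a threshold of, say, two weeks, something like this could send the warning that I never got.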

Oh and fun thing: it seems that Portainer not only decided to upgrade to a new version (since that's one of the few containers where I just went with the LTS tag, expecting updates for something so fundamental to be quite important), but also wiped my defined webhooks, so I had to update the CI configuration for redeploying this very blog as well. Issues are just piling on, it seems.
