Docker error messages are pretty cryptic sometimes

With the new version of the blog up and running, let's complain about technology again for a little bit!

I use Docker a lot for development. Nowadays, most of the software I make and want to deploy on servers, whether for personal projects or at work, is containerized. I take a Git repository, build a container image from it, and can then launch that image wherever I need, with the exact versions of dependencies that it was built with. There are quite a few benefits to this:

- no more issues with slightly different runtime versions, which have caused me problems in the past

- no more human-factor risks when setting up the app instance

- the ability to set resource limits (e.g. CPU, RAM), so that the app plays nicely with others and can't bring the whole server down

- a common way of configuring the environment variables, storage, networking and a lot of other things

You don't always need containers per se (and I shipped some stuff running directly in the OS as a systemd service last week), but when you do, the DX (developer experience) is generally quite pleasant. I would also say that this was one of the selling points of Docker in particular, even if containers existed long before it, and even if technologies like Kubernetes are sometimes more trouble than they are worth.

But this isn't about Kubernetes, not at all. It's about something a bit more fundamental breaking down, since yesterday I started randomly seeing some errors in the logs, when building some containers:

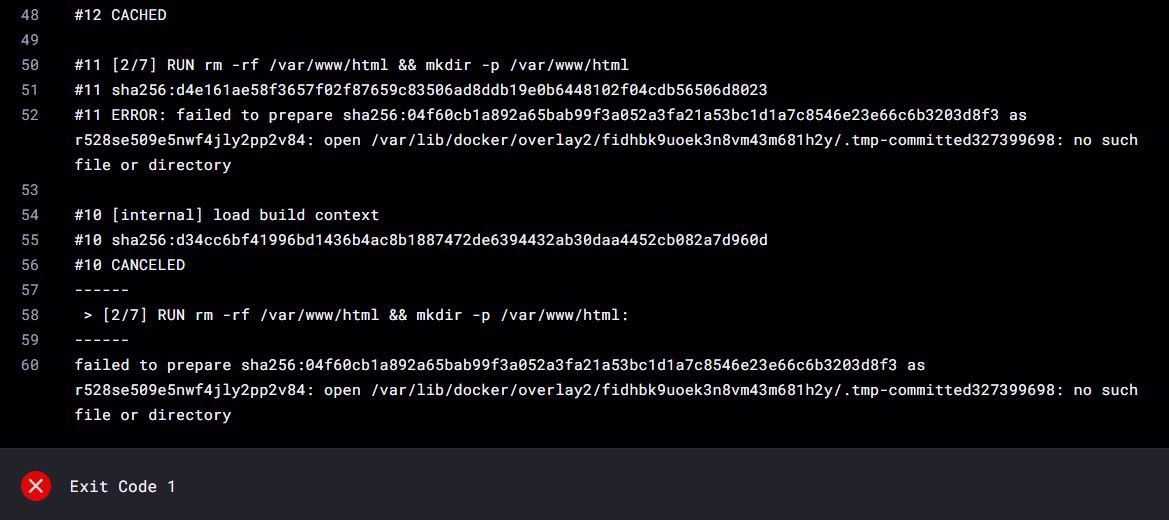

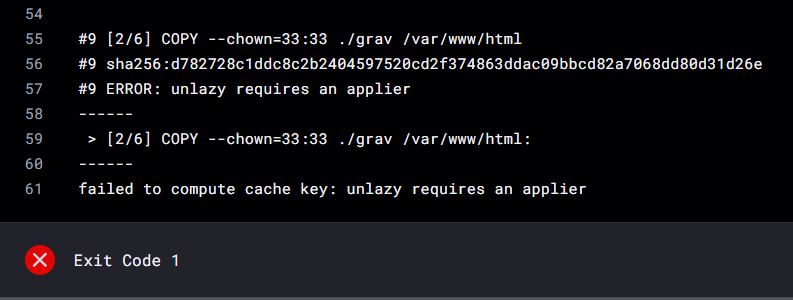

So, I basically got:

    no such file or directory

That'd suggest that I've messed up something in the Dockerfile, right? Well, not really, not in this case, because the very same pipeline would other times throw this:

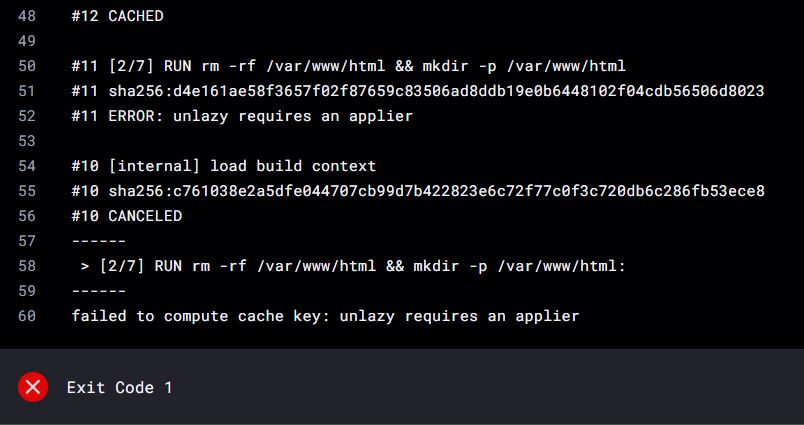

Here's the other error (hey SEO):

    failed to compute cache key: unlazy requires an applier

Now, the obvious question is:

What am I even looking at?

I have no idea what unlazy is or why it'd break the Docker caching mechanism. Even the Dockerfile was very simple; there's literally not a lot that could go wrong there:

FROM ...

# Disable time zone prompts etc.

ARG DEBIAN_FRONTEND=noninteractive

# Time zone

ENV TZ=Europe/Riga

# Use Bash as our shell

SHELL ["/bin/bash", "-c"]

# We don't need the default files, just the directory

RUN rm -rf /var/www/html && mkdir -p /var/www/html

# Copy over Grav files

COPY --chown=33:33 ./grav /var/www/html

# Copy over config that we will use for Grav by default

COPY ./apache/etc/apache2/sites-enabled/000-default.conf /etc/apache2/sites-enabled/000-default.conf

# Setup entrypoint

COPY ./apache/docker-entrypoint.sh /docker-entrypoint.sh

RUN chmod +x /docker-entrypoint.sh

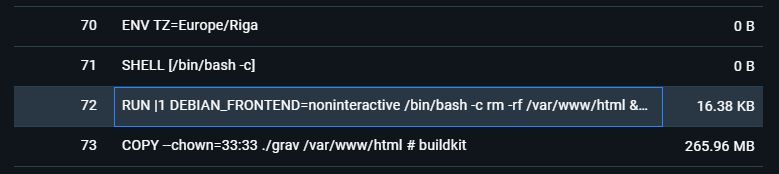

CMD "/docker-entrypoint.sh"The base image that I use does have some files under /var/www/html for the purposes of testing out whether the configuration has been setup correctly. Having to remove those for production builds isn't very efficient because it creates a spare Docker layer that we don't really need, but isn't necessarily the worst thing ever, either:

But why would that break, especially when building everything locally is perfectly okay and similar setups have worked with no issues in the past? My first idea was to take the Dockerfile and temporarily remove the cleanup and see if things start working better without it:

# We don't need the default files, just the directory

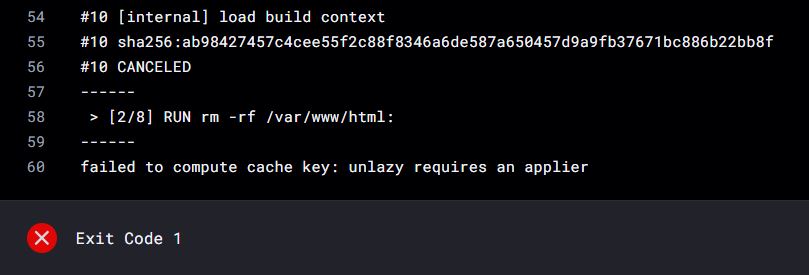

RUN rm -rf /var/www/html && mkdir -p /var/www/htmlIf that's the part that's causing the issues, then surely this should fix it? Well, no, it didn't:

So after the changes, we get the same issue but in a different location. The same happens if I remove further instructions, which is really odd. There simply isn't a lot that could go wrong with the simple COPY instruction, which more or less confirms that the issue lies with the Docker environment itself (or perhaps the cache in particular), instead of what's in the file:

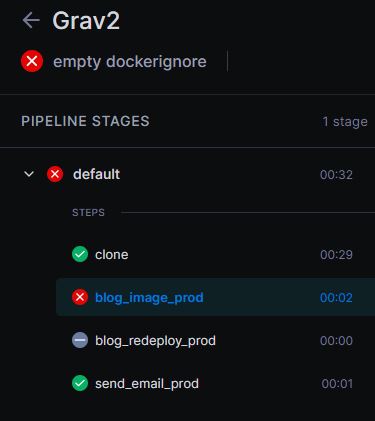
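To rule out the Dockerfile itself a bit more systematically, one option is to check that every COPY source really exists in the build context. The following is only a rough sketch: the function name `check_copy_sources` is made up for this example, and it deliberately handles just the simple case seen above (single-line COPY with one source), ignoring wildcards, `--from=` copies and .dockerignore rules.

```shell
#!/usr/bin/env bash
# check_copy_sources DOCKERFILE CONTEXT_DIR   (hypothetical helper)
# Prints each COPY source that does not exist in the build context and
# returns 1 if any are missing, 0 otherwise.
# Deliberately simplistic: only single-line COPY instructions with one
# source are handled; flags such as --chown are skipped, and --from=
# copies, wildcards and .dockerignore rules are ignored.
check_copy_sources() {
  local dockerfile="$1" context="$2" missing=0
  local instruction rest word src
  local -a words

  # The "|| [ -n ... ]" part also picks up a last line without a newline.
  while read -r instruction rest || [ -n "$instruction" ]; do
    [ "$instruction" = "COPY" ] || continue
    case "$rest" in *--from=*) continue ;; esac

    src=""
    read -r -a words <<< "$rest"
    for word in "${words[@]}"; do
      case "$word" in
        --*) ;;                   # skip flags like --chown=33:33
        *) src="$word"; break ;;  # first non-flag word is the source
      esac
    done

    if [ -n "$src" ] && [ ! -e "$context/$src" ]; then
      echo "missing: $src"
      missing=1
    fi
  done < "$dockerfile"

  return "$missing"
}
```

Calling it as `check_copy_sources ./Dockerfile .` from the repository root would list any missing sources. If it prints nothing while the build still fails with "no such file or directory", that points away from the Dockerfile and towards the build environment.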

Some comments online suggested that it could be related to the .dockerignore file, but it still fails even after removing the file altogether:

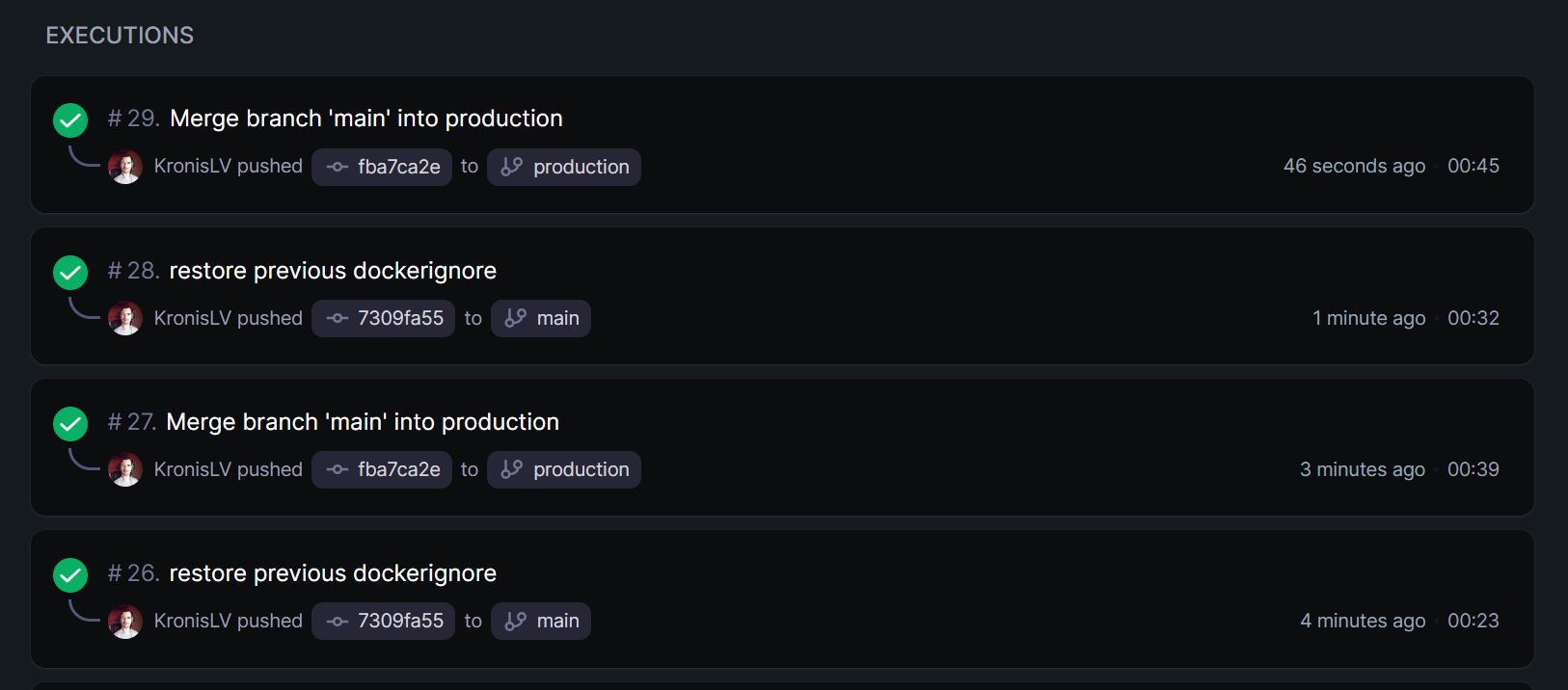

What did seem to help was not running multiple builds in parallel, but instead running them one at a time:
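On a single build host, one way to force builds to queue up like that is a lock file. This is a minimal sketch, assuming `flock` from util-linux is installed; the lock path and the `run_serialized` name are made up for illustration, and CI systems usually have their own concurrency settings that would be the better place for this.

```shell
#!/usr/bin/env bash
# Run a command while holding an exclusive lock, so that concurrent
# invocations on the same host wait for each other instead of running
# in parallel. LOCK_FILE and run_serialized are hypothetical names.
LOCK_FILE="${LOCK_FILE:-/tmp/docker-build.lock}"

run_serialized() {
  # flock creates the lock file if needed, waits until the lock is
  # free, runs the command, and exits with the command's exit status.
  flock "$LOCK_FILE" "$@"
}

# Example (not executed here):
#   run_serialized docker build -t my-image .
```

Every pipeline job that wraps its build in `run_serialized` with the same lock file ends up waiting for the previous build to finish before starting its own.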

Except that's also a lie, because the very same commit sometimes works and sometimes doesn't:

Frankly, I don't really know what to do here.

Summary

I think the most annoying thing in cases like this is that we don't really know exactly what the problem is. Some troubleshooting can help us narrow it down somewhat, but at the end of the day, it's still pretty much like the absolutely useless "Unspecified error" messages that you can get from Windows or other software, where the developers didn't really care that much about helpful error messages.

I fear that the real solution might just involve wiping the server and setting it up anew. Best case, I'll have to wipe the cache and that'll help it, but because that's done automatically and didn't seem to help either (the problem persists today as it did yesterday), I wouldn't count on it. There were also some networking changes that I did, but honestly those really shouldn't be at fault.
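For reference, wiping the build cache by hand usually comes down to something like the following. It's a sketch under assumptions: that the build cache is actually the culprit and that the builder's cache is the one managed by `docker builder prune`. The `DRY_RUN` switch and the function names are made up for this example, while `docker builder prune` and `docker build --no-cache` are regular Docker CLI commands.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (the default here) prints commands instead of running them.
# DRY_RUN, run, wipe_build_cache and rebuild_without_cache are all
# hypothetical names for this sketch.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

wipe_build_cache() {
  # Remove all build cache, not just dangling entries, without prompting.
  run docker builder prune --all --force
}

rebuild_without_cache() {
  # Rebuild an image while ignoring any cached layers.
  # $1 is the image tag, $2 the build context (both placeholders).
  run docker build --no-cache --tag "$1" "$2"
}
```

Running `DRY_RUN=0 wipe_build_cache` would actually delete the cache, so the next build starts from scratch and takes correspondingly longer.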

Otherwise, I have no idea because it's not like the software is trying to be helpful.
