Gitea isn't immune to issues either


It's a little bit amusing that some of my first posts on the new blog setup are about how software is broken, but here we are. Regardless of whether I'm just quite unlucky, or just plain cursed, let's explore the fact that Gitea also has some pretty unfortunate issues.

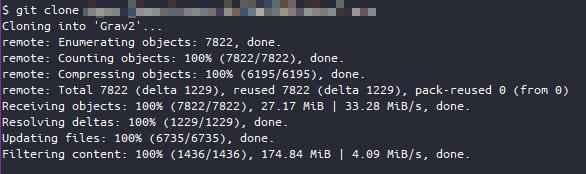

I previously moved to self-hosting Gitea from my older GitLab setup, because it's much easier to update and administer, in addition to needing fewer resources to run well. Overall, I'm quite happy with it, but it's not bulletproof either. When I was migrating the blog, I decided to check how much storage Git LFS was using. Much to my surprise, that figure was 0 KB, which made no sense.

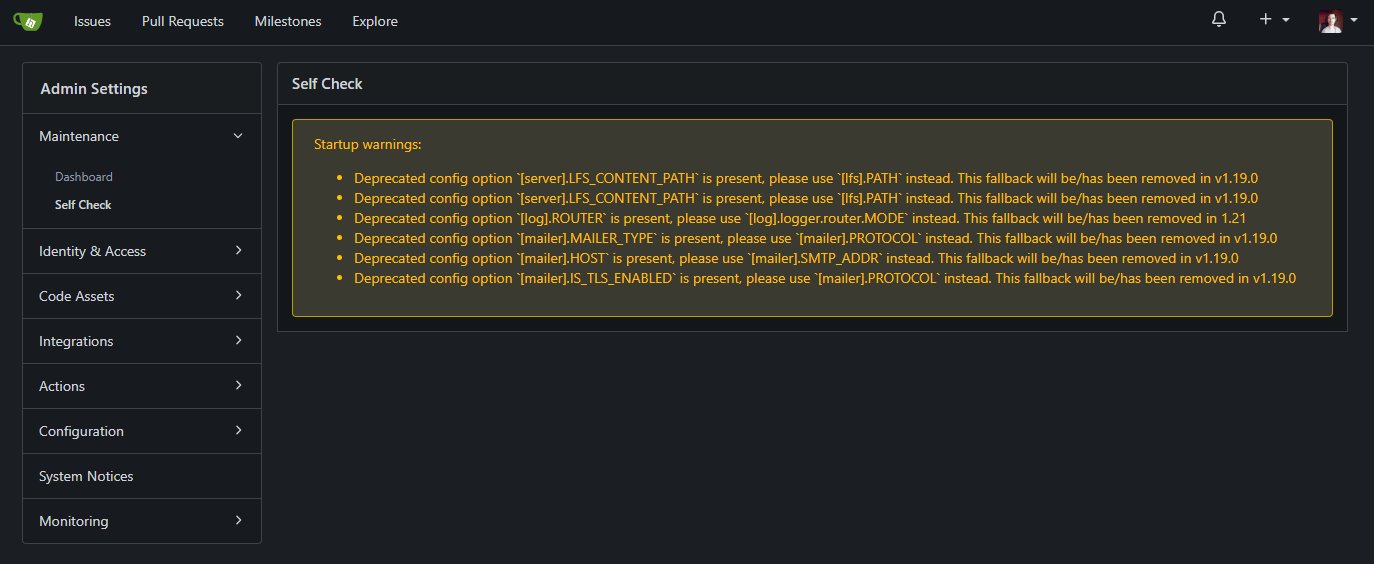

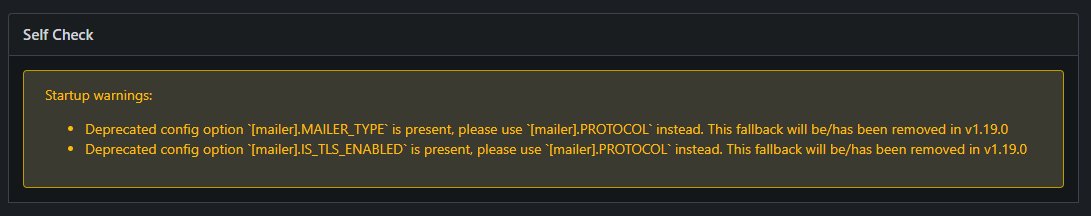

Trying to see why that was, I came across a bunch of notices in the Self Check section of the admin pages:

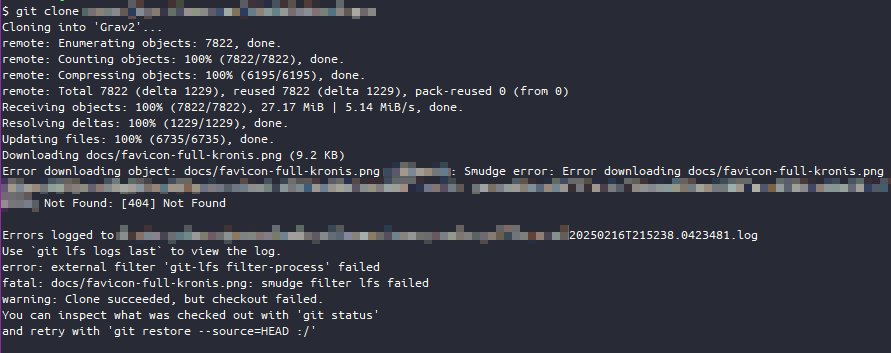

This is where the first issues became apparent: there was a bunch of supposedly deprecated configuration being used, which might break things, but this was only visible in the admin dashboard. I wanted to verify whether this is really the issue, so I tried cloning the repository fresh and it did indeed have problems with binary files that are managed by Git LFS:

It's extremely alarming, especially when you consider that I saw no errors when initially pushing the resources, either from the Git client or in Gitea. In other words, I might have been pushing code that would never work properly, because only an incomplete set of changes ended up on the server while Gitea failed silently. But it's not just a matter of the configuration; rather, the way it's managed is bad.

Bad ways to handle configuration

Suppose that you have an application that needs some configuration to be changed, which, if not done, can have catastrophic consequences like the above. If you want to use version control, then it makes sense to expect that things will be versioned correctly, and that not happening is as bad as it gets.

Now, which of the following do you think is the best approach for managing such circumstances:

- describing the new configuration in your changelog or docs and letting things silently break for like 90% of your userbase

- also adding some sanity checks and making the old bad configuration be logged in the app itself somewhere, bringing that number down to maybe 70%

- showing the configuration warnings and the possible impact of those in the application itself (or even a generic message like "There is deprecated configuration in use, please see the admin Self Check section for more information"), making that number a more manageable 40%

- also sending e-mails out to the administrative users on startup or other important events, so that even if they don't look at the Web UI for a bit, they'll still be notified of what they need to do, making that number maybe 20%

- deciding to reject Git pushes when the broken configuration is used, so that things cannot fail silently and so that users see exactly what's going on, essentially making that figure 0%

- or perhaps, if the configuration values are pretty straightforward and what you've done is essentially some reorganization, then migrate the old values on startup, failing fast in the case that can't be done, also making that figure be 0%

In other words, it's possible to go from approx. 90% of your userbase having issues due to the changes that you've made, down to that figure essentially being 0%, by progressively making the problem more apparent, regardless of whether it's done just for the repositories that use Git LFS or all of them. Letting the code silently fail, on the other hand, is the worst possible thing that you can do. It doesn't end there, though; the rabbit hole goes deeper.
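To make the fail-fast option a bit more concrete, here's a minimal sketch in Python. The `DEPRECATED_KEYS` map and the function names are made up for illustration and are not how Gitea implements its self checks; settings are assumed to already be flattened into `section.KEY` names.

```python
import sys

# Hypothetical map of deprecated "section.KEY" names to their replacements.
DEPRECATED_KEYS = {
    "server.LFS_CONTENT_PATH": "lfs.PATH",
}


def find_deprecated(settings: dict[str, str]) -> list[str]:
    """Return one human-readable message per deprecated key that is still set."""
    return [
        f"{old} is deprecated, use {new} instead"
        for old, new in DEPRECATED_KEYS.items()
        if old in settings
    ]


def check_or_exit(settings: dict[str, str]) -> None:
    """Refuse to start when deprecated keys are present, instead of carrying on."""
    problems = find_deprecated(settings)
    if problems:
        for message in problems:
            print(f"config error: {message}", file=sys.stderr)
        sys.exit(1)
```

Calling `check_or_exit` as the very first thing on startup means that a stale setting stops the process with a message in the logs, rather than surfacing months later as missing data.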

You see, I run my instance of Gitea in a Docker container; they even have official instructions on how to set it up. If most of the configuration can be, and is, done through the Docker environment variables, then it'd make sense for all of the critical parameters to be correctly set there, or at least for the underlying files to be updated to a good state, right? Well, no, because I didn't have many of the deprecated values anywhere in the description of my environment:

This is actually a mistake that a lot of software makes, I'm not just being critical of Gitea here in particular, but rather the overall trend. If you're going to containerize your software, you more or less owe it to your users to have a docker-entrypoint.sh script that maps ALL of the allowed configuration values to the files upon every startup and also has sane defaults for the values that aren't set. Defaults, which could technically change or be migrated across releases, all done in a way where the user doesn't have to think about them.
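As a rough illustration of what such an entrypoint step could do, here's a sketch in Python (rather than shell, purely for readability). The `APP__section__KEY` naming scheme and the `DEFAULTS` table are assumptions made up for this example, not something taken from a real image. The precedence is: environment variables override the existing file, which overrides the defaults.

```python
import configparser
import io

# Hypothetical defaults, used when neither the file nor the environment sets a value.
DEFAULTS = {("lfs", "STORAGE_TYPE"): "local", ("lfs", "PATH"): "/data/git/lfs"}
PREFIX = "APP__"  # hypothetical naming scheme: APP__section__KEY=value


def render_config(existing_ini: str, environ: dict[str, str]) -> str:
    """Merge defaults, the existing file and environment variables into one INI text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key case exactly as written
    parser.read_string(existing_ini)

    def set_value(section: str, key: str, value: str, overwrite: bool) -> None:
        if not parser.has_section(section):
            parser.add_section(section)
        if overwrite or not parser.has_option(section, key):
            parser.set(section, key, value)

    # Defaults only fill in gaps; they never replace a value from the file.
    for (section, key), value in DEFAULTS.items():
        set_value(section, key, value, overwrite=False)

    # Environment variables with the prefix always win.
    for name, value in environ.items():
        if name.startswith(PREFIX):
            parts = name[len(PREFIX):].split("__", 1)
            if len(parts) == 2:
                set_value(parts[0], parts[1], value, overwrite=True)

    out = io.StringIO()
    parser.write(out)
    return out.getvalue()
```

In a real entrypoint you'd pass in `dict(os.environ)` and write the result back to the configuration file before starting the application. Note that `configparser` drops comments when writing, so this is a sketch of the idea and not a drop-in tool.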

I know why it doesn't happen: people often use Docker as just a packaging format for their pre-existing software and don't really want to invest too much in the tooling around it, such as having a formal schema of all of the allowed configuration values, iterating through it, and checking it against both the incoming values and the ones stored in the configuration files. It's difficult, and I mostly only do that for my private software, because getting the buy-in for that at work is slow, although there is finally some promise there.

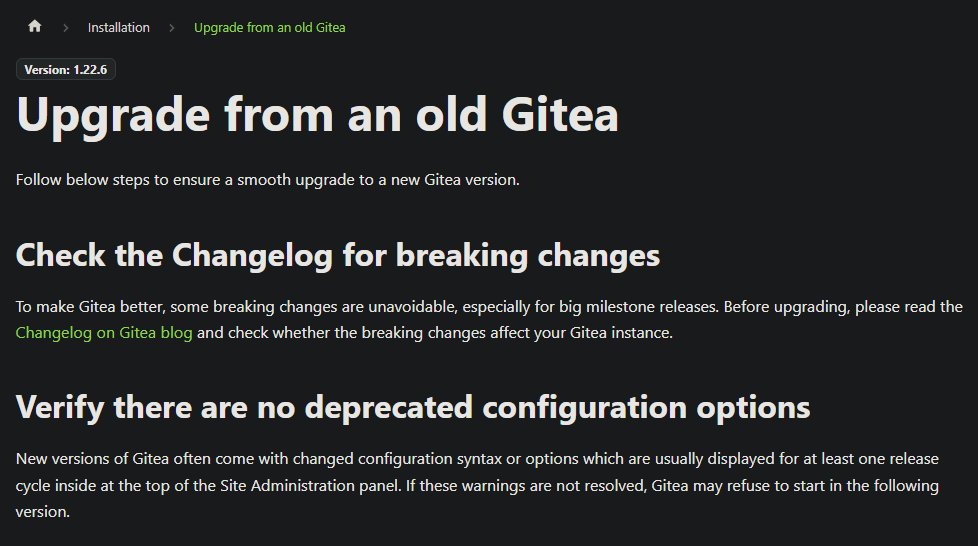

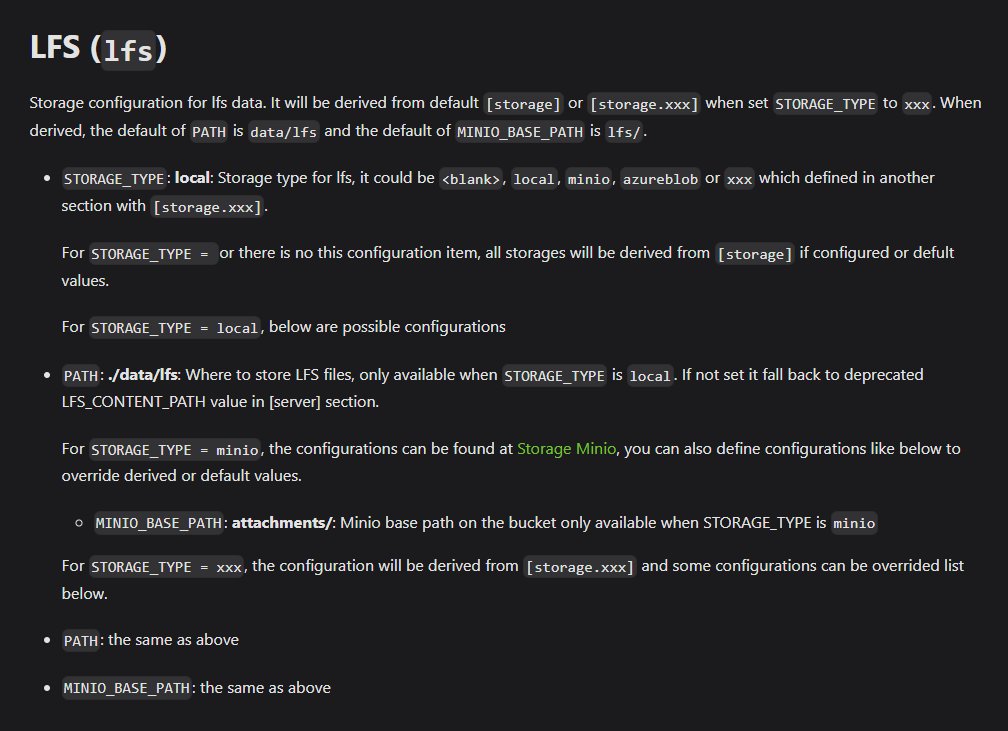

To Gitea's credit, they have at least done some of the things needed to inform you about what you need to do, even if you're still on the hook yourself for checking the Self Check dashboard after bumping a version, or for even knowing that it exists. They're setting you up for failure, because human beings are fallible, but at least they document what changes you might need to make:

Oddly enough, the page mentions that the app shouldn't start with bad config, yet it does and just trudges along. It's also quite unfortunate, because the actual configuration that I needed to change was very trivial in pretty much all of the cases, to the point where you could easily migrate it over with a Bash script or a Python script (or even in Go), just iterating over the old config and fixing it:

For example, couldn't you just take server.LFS_CONTENT_PATH and transform that into lfs.PATH? And if there is no STORAGE_TYPE set, then just set it to local, which corresponds to the previous configuration. You can be doubly sure, if you see that the actual path value is the default value of /data/git/lfs, meaning that you're unlikely to ever need to consider whether migrating it could cause further issues:
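Here's a minimal sketch of that migration in Python, under a few stated assumptions: the configuration is plain INI that `configparser` can read (so no keys before the first section header), comments don't need to survive, and a conflict between the old and new value should stop the migration instead of being guessed at. The function name is made up for this example.

```python
import configparser
import io


def migrate_lfs_settings(ini_text: str) -> str:
    """Move server.LFS_CONTENT_PATH to lfs.PATH and default lfs.STORAGE_TYPE to local."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # preserve upper-case key names
    parser.read_string(ini_text)

    if parser.has_option("server", "LFS_CONTENT_PATH"):
        old_path = parser.get("server", "LFS_CONTENT_PATH")
        if not parser.has_section("lfs"):
            parser.add_section("lfs")
        if parser.has_option("lfs", "PATH") and parser.get("lfs", "PATH") != old_path:
            # Fail fast rather than guess which of two conflicting values wins.
            raise ValueError("both server.LFS_CONTENT_PATH and lfs.PATH are set")
        parser.set("lfs", "PATH", old_path)
        parser.remove_option("server", "LFS_CONTENT_PATH")
        # The old setting implied local storage, so only fill this in if it's missing.
        if not parser.has_option("lfs", "STORAGE_TYPE"):
            parser.set("lfs", "STORAGE_TYPE", "local")

    out = io.StringIO()
    parser.write(out)
    return out.getvalue()
```

A file that doesn't contain the old key comes out with the same settings it went in with, so running this on every startup would be safe to repeat.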

Of course, there were some other issues that refused to go away, even after I set the correct parameters. Apparently, my mail configuration was all wrong, even though the values mentioned here were set NEITHER in the Docker environment variables, nor in the actual configuration file:

That's a bit silly, especially given that the actual e-mail functionality kept working with no issues:

Of course, fixing the configuration wasn't enough, because by now the damage was already done.

Software silently failing is the worst

If any software fails silently, such as MySQL quietly truncating values in the older versions, or even something like running non-ECC memory and getting corruption issues in your backup archives, then by the time you realize that something is wrong, it might already be too late. I guess in this case I got pretty lucky, because I caught it all early on, but this more or less meant that I had to go through my local repositories and push all of the Git LFS files again to make sure that they're actually on the server, since I did bump the server version a while back.

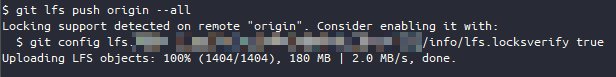

Luckily, Git LFS actually has a command for that: git lfs push [remote] --all

It actually works pretty well, which is unlike 90% of my experiences with Git LFS:

After doing that, I can clone the repository correctly, meaning that the data is on the server:

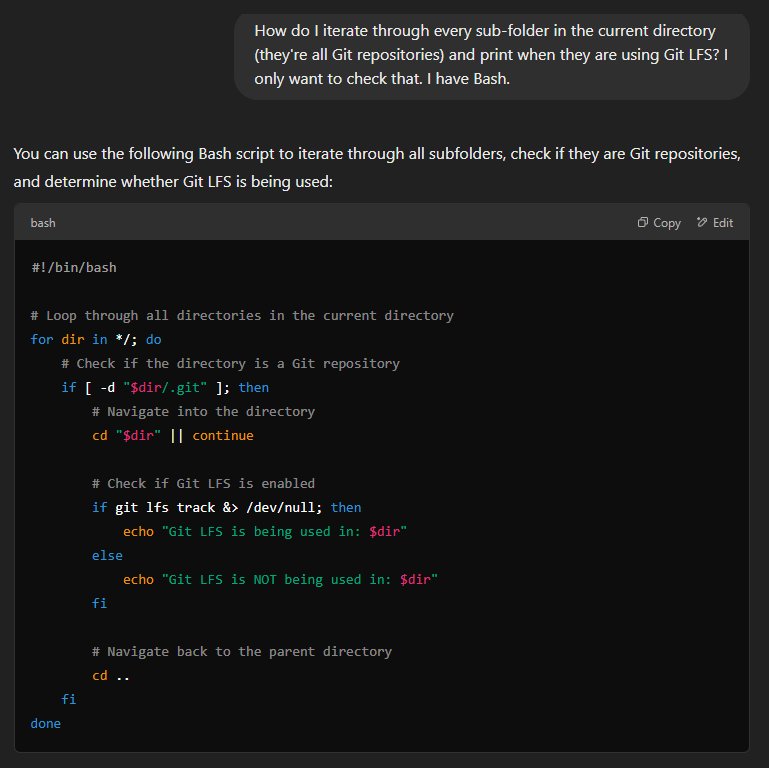

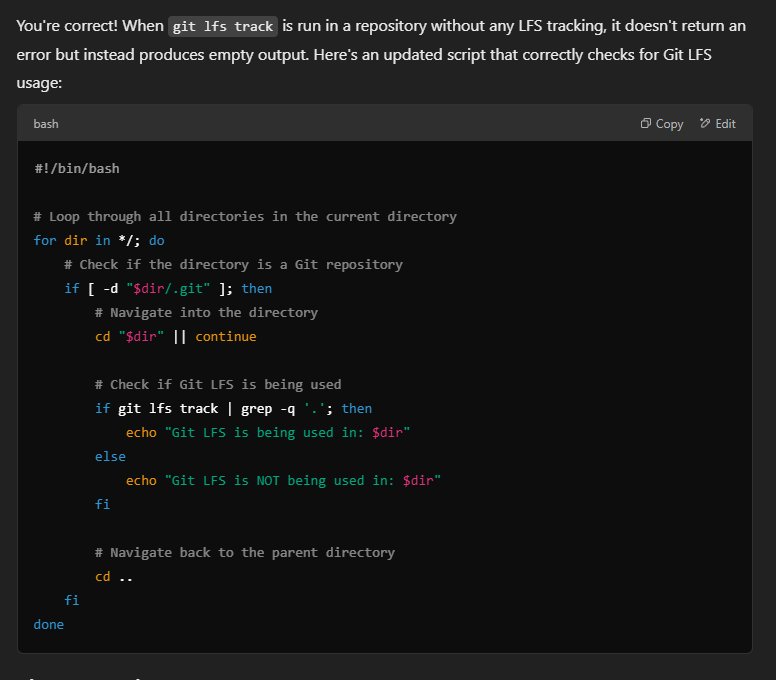

The actual problem is knowing which repositories need to be pushed again. The first step is probably to find out which ones use Git LFS in the first place. I actually ended up just turning to ChatGPT for this, to give me a script to iterate through each of my project folders and tell me how widespread the damage is. I do recall using Git LFS for some binary assets like images (supposedly a best practice) and some GameDev projects, but I really don't want this to be a case of data loss due to me forgetting something:

Unsurprisingly, the first iteration actually didn't work, so I had to ask for some more specific changes, such as checking for whether the output is empty. Since the task itself is simple, but some of the valid solutions, such as git lfs track | grep -q '.' (due to it printing the tracked file types) feel not immediately obvious, this was actually a really good use case for an LLM:
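For reference, the same idea can be sketched in Python. This is not the script from that conversation, just a rough equivalent: it assumes every project is a direct subdirectory of one root folder, and the function names are made up. The `uses_lfs` check mirrors `grep -q '.'`, so any non-empty line of output counts.

```python
import subprocess
from pathlib import Path


def lfs_track_output(repo: Path) -> str:
    """Run `git lfs track` inside a repository and return whatever it prints."""
    result = subprocess.run(
        ["git", "lfs", "track"], cwd=repo, capture_output=True, text=True, check=False
    )
    return result.stdout


def uses_lfs(output: str) -> bool:
    """Equivalent of `grep -q '.'`: true if any line has at least one character."""
    return any(line for line in output.splitlines())


def find_lfs_repos(root: Path, run=lfs_track_output) -> list[Path]:
    """Return the direct subdirectories of root that are Git repos tracking LFS patterns."""
    repos = []
    for child in sorted(root.iterdir()):
        # Only look at folders that are actually Git repositories.
        if (child / ".git").exists() and uses_lfs(run(child)):
            repos.append(child)
    return repos
```

Calling `find_lfs_repos` with the path of a projects folder returns the repositories worth a closer look. The `run` parameter only exists so that the directory walk can be tried out without Git LFS installed.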

In other words, we pick up on the output in the following format:

$ git lfs track

Listing tracked patterns

*.png (.gitattributes)

*.jpg (.gitattributes)

*.jpeg (.gitattributes)

*.gif (.gitattributes)

*.mp4 (.gitattributes)

*.ico (.gitattributes)

*.xcf (.gitattributes)

*.phar (.gitattributes)

grav\bin\* (.gitattributes)

*.jpg (.gitattributes)

*.jpeg (.gitattributes)

*.png (.gitattributes)

*.gif (.gitattributes)

*.mp4 (.gitattributes)

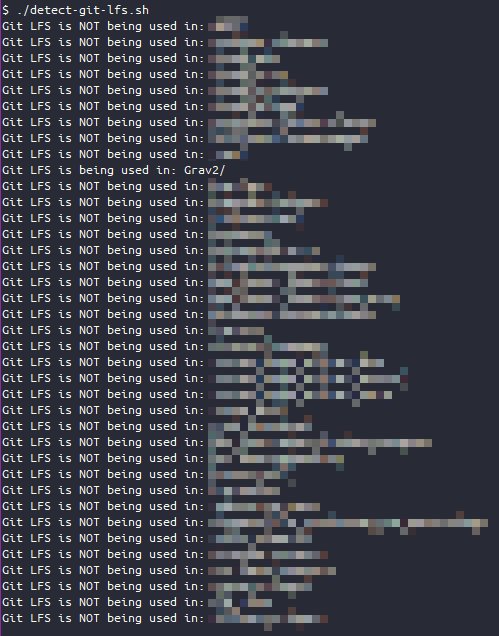

*.ico (.gitattributes)The script worked pretty nicely and soon I knew that none of the other projects in that directory needed further actions, but I did find a few in the GameDev project directory:

That was all I needed to do, to solve this issue.
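If there are more than a handful of repositories to re-push, the `git lfs push [remote] --all` step can be looped as well. Here's a small Python sketch that assumes the remote is called `origin` in every repository (an assumption, adjust as needed) and that reports failures instead of swallowing them. The function names are made up for this example.

```python
import subprocess
from pathlib import Path


def push_command(remote: str) -> list[str]:
    """Build the `git lfs push <remote> --all` invocation."""
    return ["git", "lfs", "push", remote, "--all"]


def repush(repos: list[Path], remote: str = "origin", run=subprocess.run) -> list[Path]:
    """Push all LFS objects for each repository; return the ones where the push failed."""
    failed = []
    for repo in repos:
        result = run(push_command(remote), cwd=repo, check=False)
        if result.returncode != 0:
            failed.append(repo)  # keep track of failures so nothing fails silently
    return failed
```

An empty return value means every push exited cleanly. Anything else is a list of repositories to look at by hand.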

Summary

Overall, I'm pretty happy that I could figure this out now, instead of realizing that something is very wrong like a year down the line, when the local files would no longer be present on my PC (especially because I do intend to reinstall the OS soon). Still, it's rather unfortunate that I had this issue in the first place, because I definitely shouldn't have. I still like Gitea, but this just goes to show that almost no software puts that much care into configuration management.

Truth be told, people won't always care about the details, which is inevitable, so you should just bite the bullet and make the machine care: put that care into the code that will be run regardless of who runs it and how.

Also, I've had issues with software like Nextcloud in the past where it would refuse to update properly, but at least in those cases it failed fast and printed exactly what was wrong in the logs. Not that it wasn't annoying, because it was and it inspired the Software updates as clean wipes post, but I will take that instead of silent data corruption any day of the week.

I will keep using Gitea because it's pretty good for my needs, but this is definitely something to keep in mind, especially for the software that we write ourselves.
