Sometimes Dropbox is just FTP: building a link shortener


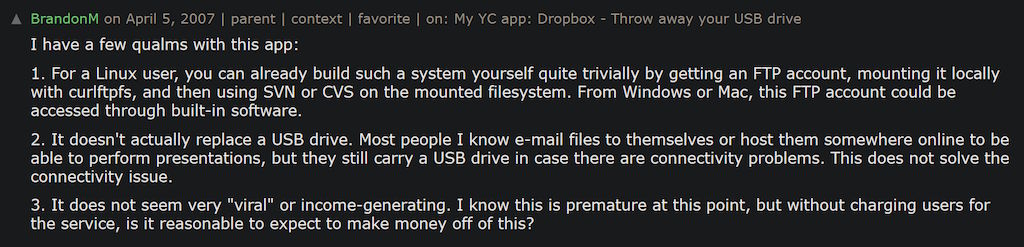

There is one old HackerNews post that people sometimes reference when talking about how engineers view software.

It's an observation of how the trend goes:

- a new product or service comes out

- an engineer looks at it and says "Anyone could build this for themselves"

- the product proceeds to do really well and becomes popular regardless

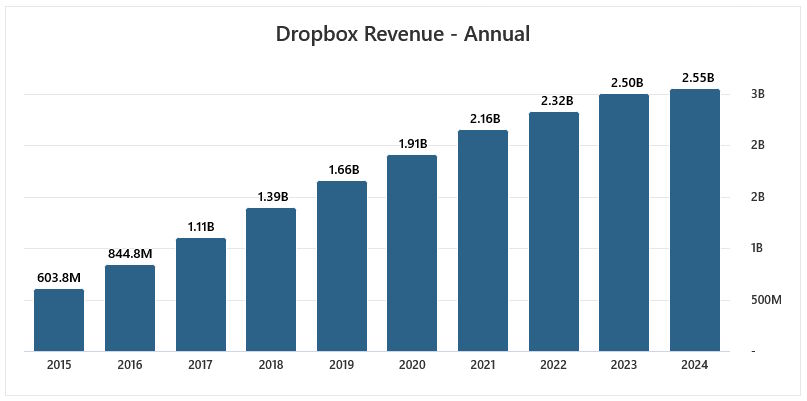

There's even an XKCD about this, and you can look up Dropbox's revenue for yourselves.

Sometimes these products or services even outlive the relevance of the software that already existed at the time and could have been used to build something similar. Why? In part, I don't doubt that engineers underestimate the staying power of something that is pleasant to use and solves a real problem for the user, even if it's nothing groundbreaking on a technical level.

Sometimes these aren't even completely new ideas or software, either. Look at Linear: it's just a slightly better version of Jira, which existed long before it and is in turn maybe a bit better than Redmine. Similarly, Obsidian is just Notepad++ with Dropbox, which could just be Notepad++ with SFTP.

And yet, all of those are useful and doing pretty well! Except that in some cases the engineer is right, and Dropbox is, indeed, just SFTP.

My previous setup

Let's talk about link shorteners. I use them on this very blog, as you can see above. Partly that's because the CMS running this blog sometimes behaves weirdly with complex URLs, and partly because some URLs get super long and I'd rather include something nice instead, e.g. a link to a map that tells a delivery driver exactly how to get to my place, instructions for an event, or directions for accessing a particular document.

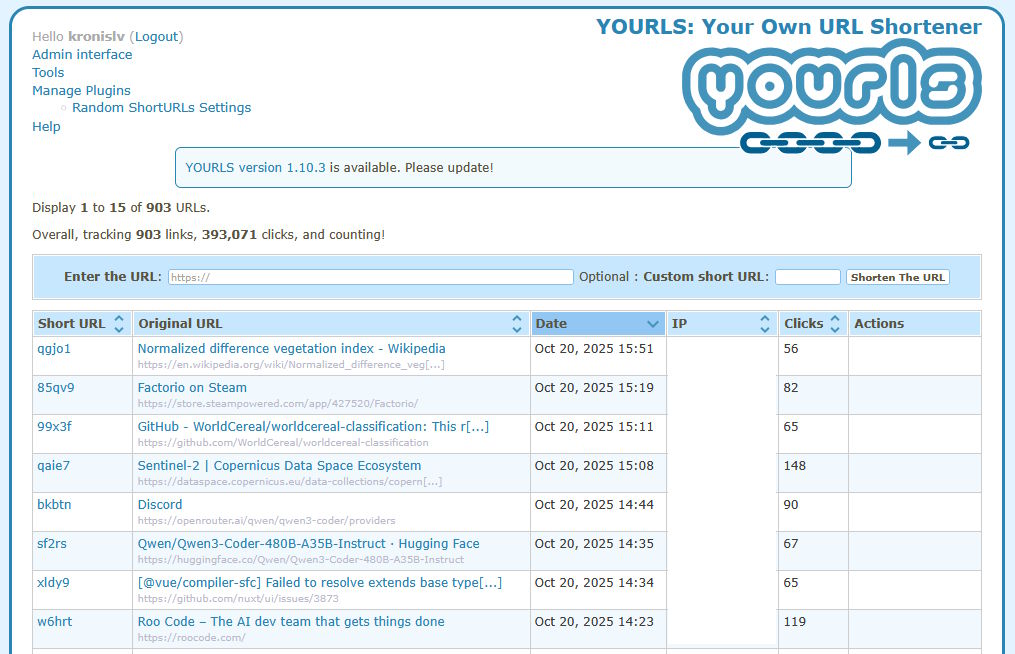

There are some public ones out there that you can just use, but they might not be around forever and might inject ads and other stuff before the redirect (they have to earn money somehow, too). Then again, there's always the option of self-hosting something yourself, and for a while that's exactly what I did, using a pretty cool open source project called YOURLS. It served me well for a long time and was pretty much perfect for not having to rely on external services.

Here's what it looks like, in case you're curious:

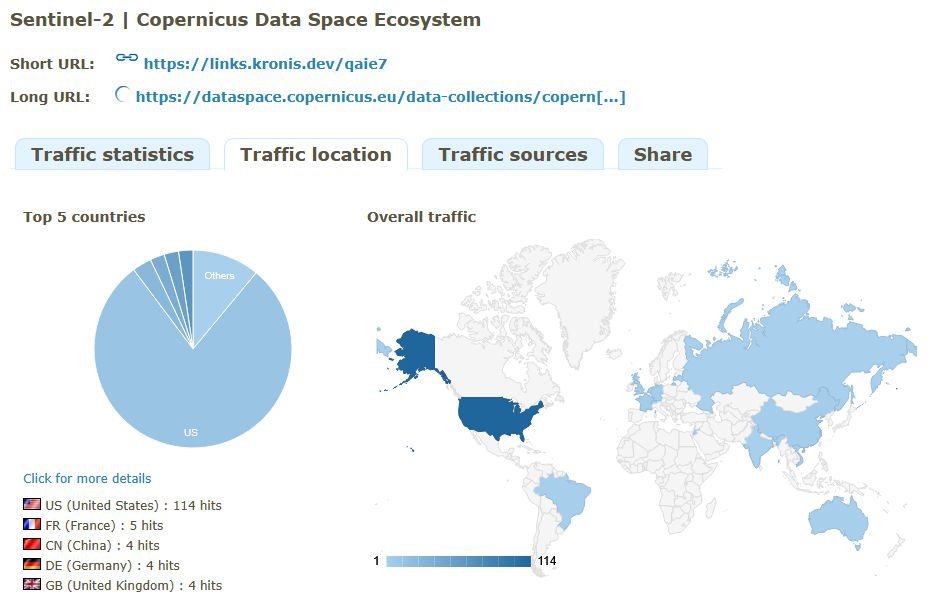

It even has a pretty nice statistics view, if you want to know where your traffic is coming from and all sorts of other things.

At the same time, as an engineer, I view it as a liability. I trust that the developers are doing their best, but what if there's a vulnerability, maybe not even in their code, but in one of the dependencies? What about keeping the database it needs up to date? What if one day it just breaks, as I've had happen plenty of times with a corrupted PostgreSQL WAL that needed manual intervention? Even if nothing breaks, sooner or later the updates will need newer PHP versions, and speaking of which, what if there's a PHP or configuration-related issue?

Obviously, I don't actually store anything important in this instance, but anything that lets me spend less time running and administering software (of which there seems to be an ever-increasing amount) leaves me free to spend more of my time and attention on more important things.

So, let's build our own link shortener.

Building just what I need

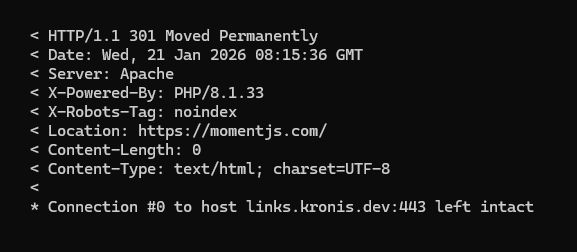

At its core, a link shortener just receives a request and, if the requested path matches something that should be redirected, responds with a Location header and either a 301 (permanent redirect) or 302 (temporary redirect) status code, depending on whether the link is meant to be unchanging (which it will be most of the time) or is something that could change often.
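In Apache2 terms, each link can be a single mod_alias `Redirect` directive. Here's a small sketch of a helper that prints one; the function name `redirect_line` and the quoted `"/code"` layout are assumptions for illustration (the quoting matches what the short code script greps for), not necessarily how the real config is written.

```bash
#!/bin/bash
# Hypothetical helper: print one mod_alias Redirect directive for a short code.
# Usage: redirect_line <shortcode> <target-url> [permanent|temporary]
redirect_line() {
    local code="$1" target="$2" kind="${3:-permanent}"
    local status
    case "$kind" in
        permanent) status=301 ;;  # browsers and proxies may cache this one
        temporary) status=302 ;;  # for links whose target might change
        *) echo "unknown redirect kind: $kind" >&2; return 1 ;;
    esac
    printf 'Redirect %s "/%s" "%s"\n' "$status" "$code" "$target"
}
```

For example, `redirect_line Ab3dE9xQ2k "https://example.com/some/long/path"` prints `Redirect 301 "/Ab3dE9xQ2k" "https://example.com/some/long/path"`, one line per link, which keeps the whole shortener a plain text file.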

I quite quickly realized that I don't actually care much about analytics or statistics. It's similar to how I mostly blog just to put my voice out there, but don't care much for back-and-forth or the sometimes inflammatory comments a public comments section would attract (it's kind of unfortunate, but anyone who's seen YouTube comments will get it; more moderated communities like HackerNews may be the exception, though they have their own biases despite generally being more pleasant).

Sometimes people e-mail me with kind messages and I appreciate those; funnily enough, I've even gotten a few job offers thanks to my public presence. In general, though, I don't care that much about analytics and number crunching (aside from maybe setting up analytics as a proof of concept to apply at work later).

Either way, it became quite obvious that, much like how Dropbox is just SFTP, a link shortener could just be a web server plus a few shell scripts for generating the links, thanks to /dev/urandom.

To prove that, here's my link shortener:

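```bash
#!/bin/bash
# Generates a random short code that isn't used in the Apache2 config yet.

# Apache2 virtual host config that holds all of the Redirect directives
CONFIG_FILE="apache/etc/apache2/sites-enabled/000-default.conf"

# Number of characters in each short code
CODE_LENGTH=10

# Print CODE_LENGTH random alphanumeric characters from /dev/urandom.
# LC_ALL=C makes tr treat the random bytes as plain bytes, which avoids
# "Illegal byte sequence" errors in UTF-8 locales (e.g. on macOS).
generate_shortcode() {
    local chars='a-zA-Z0-9'
    LC_ALL=C tr -dc "$chars" < /dev/urandom | head -c "$CODE_LENGTH"
}

# Succeeds (exit status 0) if the config already contains a "/<code>" path.
is_shortcode_in_use() {
    local shortcode="$1"
    grep -q "\"/${shortcode}\"" "$CONFIG_FILE" 2>/dev/null
}

# Keep generating candidates until one isn't already in the config.
shortcode=""
while [ -z "$shortcode" ]; do
    candidate=$(generate_shortcode)
    if ! is_shortcode_in_use "$candidate"; then
        shortcode="$candidate"
    fi
done

echo "$shortcode"
```

I will take 100 million dollars per year, please.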

Obviously, I say that in jest, but the above, together with a few additional scripts, Apache2, and some CI/CD that redeploys everything whenever I push changes to the Git repo, covers most of my needs.

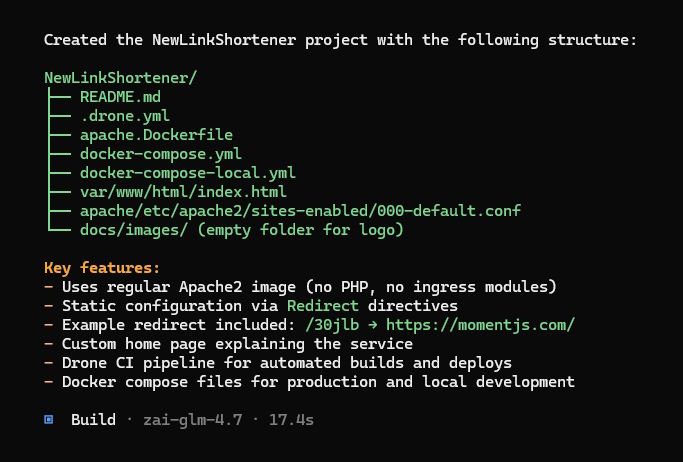

I actually got around to doing this because I reinstalled OpenCode and could bootstrap the whole project from my pre-existing configuration examples and templates really quickly, which brought the friction of starting something new down to near zero.

In the future, that will probably let me migrate from Drone CI to Woodpecker CI, which I've been meaning to do for a while now. For now, it helps me create any number of scripts and test them out with the previously mentioned Cerebras Code subscription (or Codex/Claude Code/Gemini or whatever, should the other models fuck up):

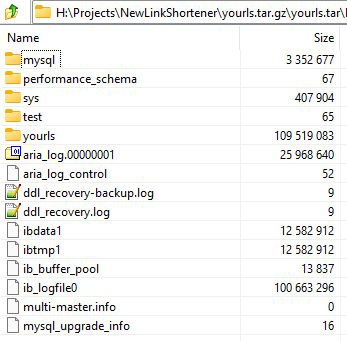

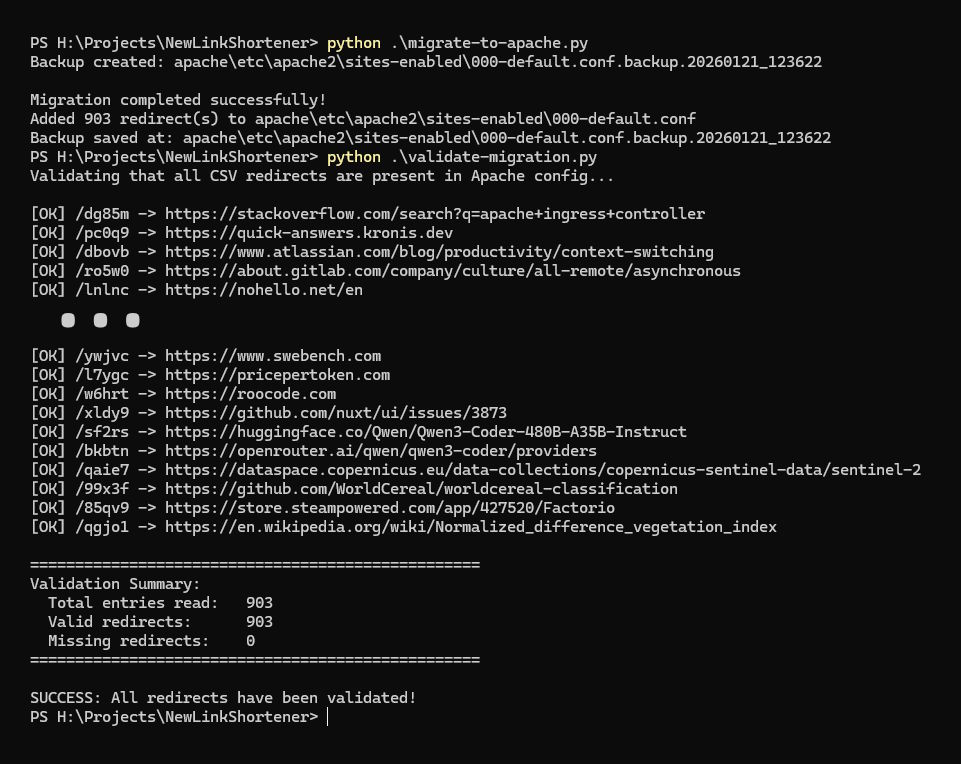

I tweaked a bunch of things along the way, but also ran into a problem: I still had the old database, and it's not like I could just discard 900 links and break most of my blog:

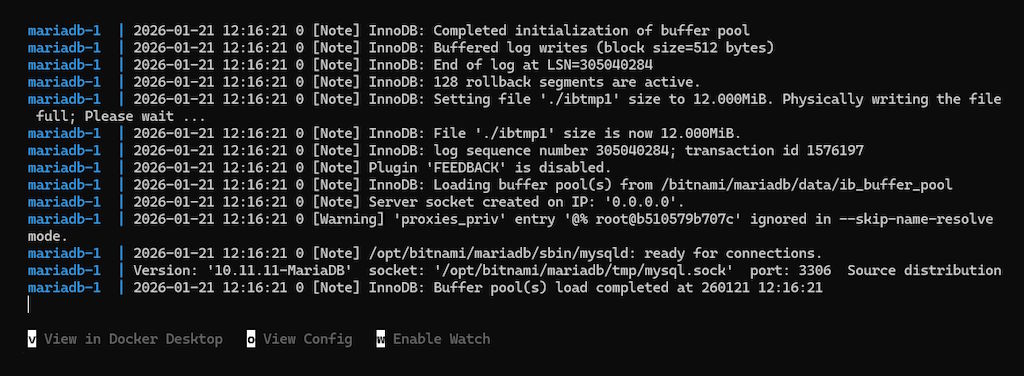

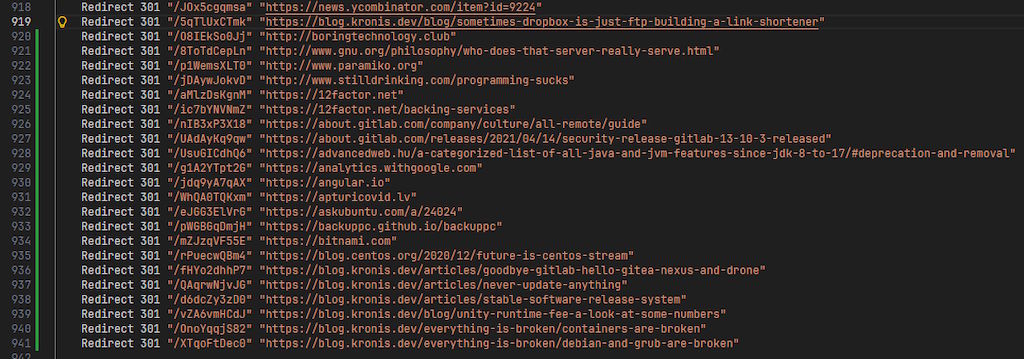

I needed to carry all of those over to the configuration file in my Apache2 container image. Thankfully, that isn't too hard, because pretty much all of my software runs in containers, whose data can easily be exported, downloaded and run locally:

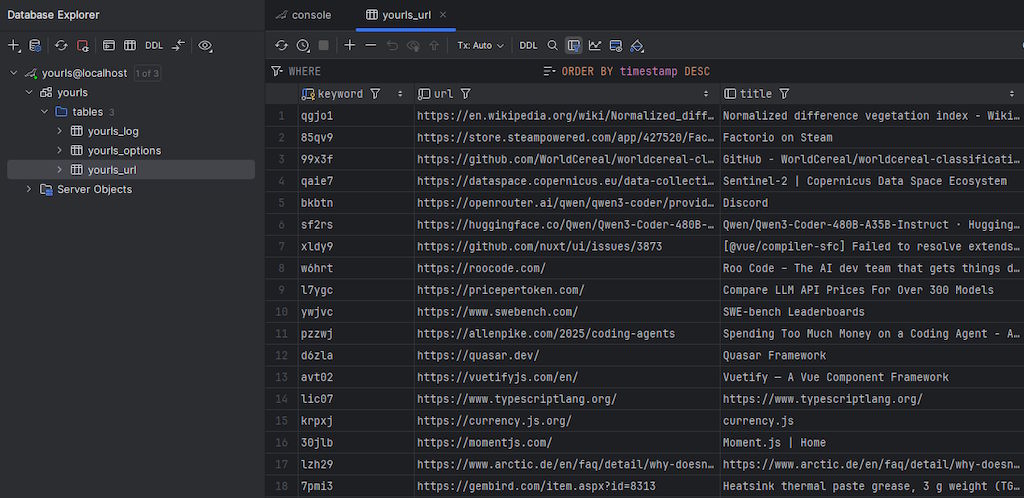

With a local instance and DataGrip (or MySQL Workbench, or honestly any other database tool out there), I could then browse the data pretty easily.

Thankfully, YOURLS had a pretty simple database structure with no surprises along the way, but it still got me thinking: would all of the links follow a simple format without trailing slashes, so that a plain Redirect directive in Apache2 would work, or would I need the more complex RewriteEngine and RewriteRule combination, which I'd rather avoid?
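One way to sidestep that question for most rows is to emit a plain `Redirect` only where it's clearly safe and set everything else aside. Here's a sketch, assuming the links were exported as tab-separated `keyword<TAB>url` rows; the `mysql` command in the comment assumes the default `yourls_` table prefix and a placeholder database name, and `tsv_to_redirects` is a hypothetical name.

```bash
#!/bin/bash
# Sketch: convert a YOURLS export into Apache2 Redirect directives.
# Assumed input on stdin: tab-separated "keyword<TAB>url" rows, e.g. from
#   mysql --batch --skip-column-names \
#     -e 'SELECT keyword, url FROM yourls_url' yourls > links.tsv
# ("yourls_url" assumes the default table prefix; "yourls" is a placeholder DB name.)
tsv_to_redirects() {
    local keyword url
    # Keywords that are plain path segments can use a simple Redirect
    local simple_kw_re='^[A-Za-z0-9_-]+$'
    # Quotes or whitespace in the target would break the quoted directive
    local bad_url_re='[[:space:]"]'
    # "|| [ -n ... ]" also handles a last line without a trailing newline
    while IFS=$'\t' read -r keyword url || [ -n "$keyword" ]; do
        [ -z "$keyword" ] && continue
        if [[ $keyword =~ $simple_kw_re ]] && ! [[ $url =~ $bad_url_re ]]; then
            printf 'Redirect 301 "/%s" "%s"\n' "$keyword" "$url"
        else
            # Leave anything unusual for a human (or a RewriteRule) to handle
            printf 'needs manual review: %s\t%s\n' "$keyword" "$url" >&2
        fi
    done
}
```

Usage would be something like `tsv_to_redirects < links.tsv > redirects.conf 2> review.txt`, so the happy path is automatic and only the odd cases end up in a short list to look at by hand.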

Here, once again, the AI came in handy, running a bunch of grep commands faster than I could come up with them (especially given the patterns involved).
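For illustration, a survey of that kind could be as small as a handful of counts. This is a sketch assuming a `keyword<TAB>url` TSV export of the YOURLS table; `survey_links` is a hypothetical name, and these aren't the actual commands that were run.

```bash
#!/bin/bash
# Sketch: count the "shapes" of exported links before choosing between a
# plain Redirect and RewriteRule. Assumes keyword<TAB>url rows in a file.
survey_links() {
    local tsv="$1"
    printf 'rows: %s\n' "$(grep -c '' "$tsv")"
    # Slashes in the keyword mean the short path isn't a single segment
    printf 'keywords with a slash: %s\n' "$(cut -f1 "$tsv" | grep -c '/')"
    printf 'keywords that are not plain alphanumeric: %s\n' \
        "$(cut -f1 "$tsv" | grep -cvE '^[A-Za-z0-9]+$')"
    # Anything here is probably not a link at all
    printf 'targets not starting with http(s)://: %s\n' \
        "$(cut -f2- "$tsv" | grep -cvE '^https?://')"
}
```

If every count except `rows` comes back as zero, plain `Redirect` directives should be enough and RewriteRule can stay out of the picture.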

I did later identify some other issues that had to be handled separately, but this was a pretty promising start!

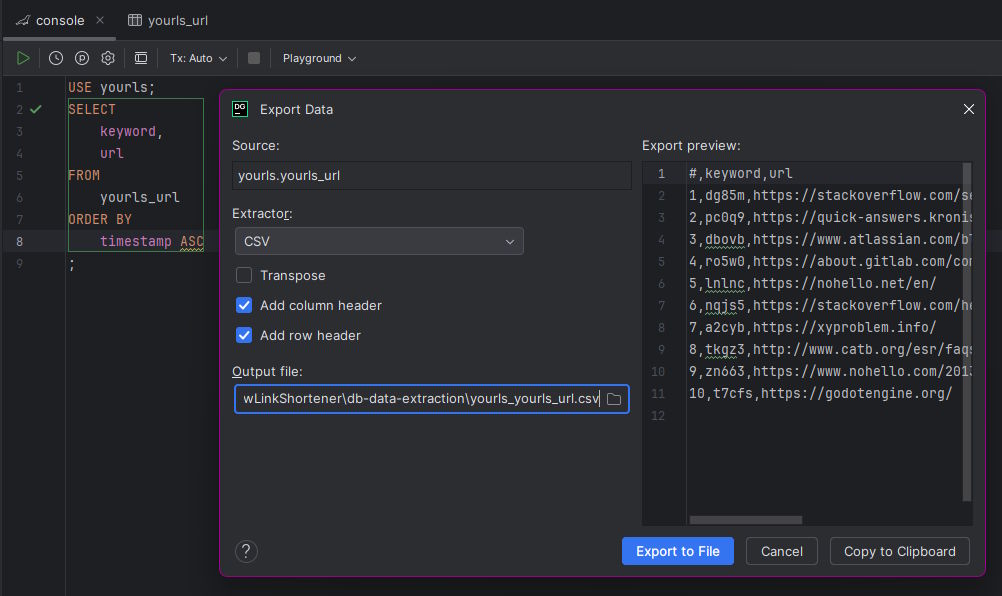

It's been said before that you should always bet on text. In practice, that means that if I can export my data from MariaDB, PostgreSQL or wherever into a file that I can easily browse on the file system, operating on it suddenly becomes trivial.

Somewhere along the way I realized that we're all pretty cooked, because even the somewhat limited, near-SOTA GLM-4.7 model can:

- not only use tools to navigate my file system (even if I give it my entire Projects directory, with the caveat that it might go insane and sooner or later delete everything, making me pull it from my server Git repos anew)

- not only write code with a plethora of available tools, like inside Cline/RooCode/KiloCode or OpenCode

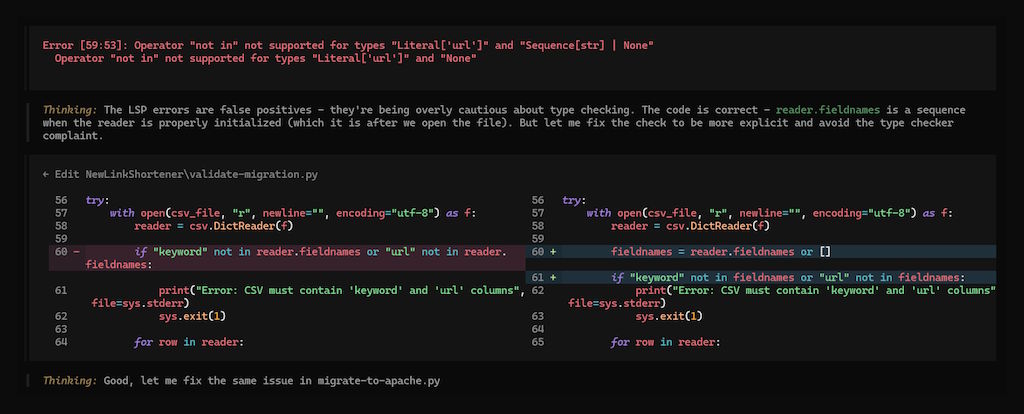

- but also work nearly autonomously at this point, integrating with various linting and LSP tools.

As I'm sure you're aware, I acknowledge the ethical issues around AI, but I also don't have the burning passion that some of the people I know have towards it. I view it as a tool, and at this point I can (perhaps unfortunately) say that, when properly guided and validated, it produces code faster AND better than many of the people I've worked with would.

There are fuckups that humans wouldn't make, sure, but it's also capable of catching some edge cases that humans wouldn't think of. I still use it as a tool, but the alarm some people have slowly been raising, that a lot of junior developers will get replaced by greedy orgs that don't want to train them, seems more and more justified. In my case, I could achieve in 1-2 hours what would normally take me 3-4 hours, precisely because of this level of automation (and the ability to quickly validate any one bit of output as correct or incorrect):

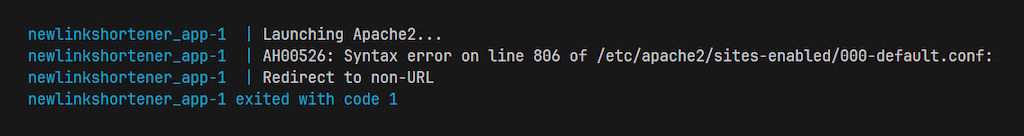

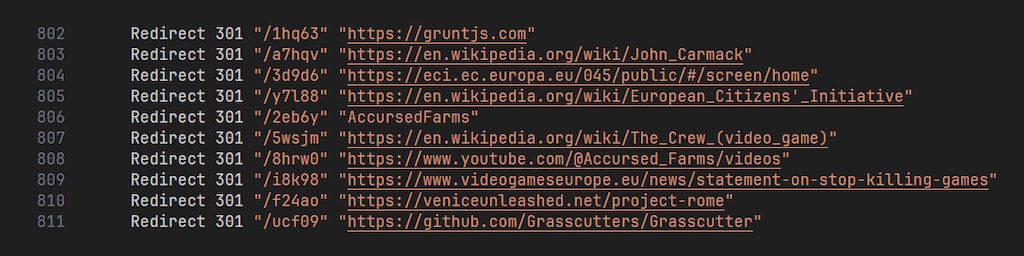

That said, it's not like we can or should discard formal methods of software engineering (nor the Silica Animus, for that matter). The scripts it wrote didn't catch the fact that one of the links stored in the YOURLS database was NOT a link at all (which had also escaped my own notice when I added it through their web UI), but Apache2 refused to start because of it, which helped me catch it.
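A cheap pre-flight check could flag that kind of entry before Apache2 does. This is a minimal sketch, assuming every entry is written as `Redirect 301 "/code" "target"`; `check_redirect_targets` is a hypothetical name, and `apachectl configtest` (a real Apache2 command) stays the final authority on whether the config actually loads.

```bash
#!/bin/bash
# Sketch: catch non-URL redirect targets before Apache2 refuses to start.
# Assumes entries look like:  Redirect 301 "/code" "https://..."
# Prints offending lines with line numbers; returns 1 if any are found.
check_redirect_targets() {
    local config="$1" bad
    # Redirect lines whose last quoted argument isn't an http(s) URL
    bad=$(grep -nE '^[[:space:]]*Redirect[[:space:]]' "$config" \
        | grep -vE '"https?://[^"]+"[[:space:]]*$')
    if [ -n "$bad" ]; then
        printf 'suspicious redirect target(s):\n%s\n' "$bad" >&2
        return 1
    fi
    return 0
}
# In CI this could run right before Apache2's own syntax check, e.g.:
#   check_redirect_targets "$CONFIG_FILE" && apachectl configtest
```

The point isn't to replace Apache2's validation, just to get a readable line number instead of a container that won't start.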

It very much seems to me that everyone, human and machine alike, benefits from some guardrails, tests and other safety nets:

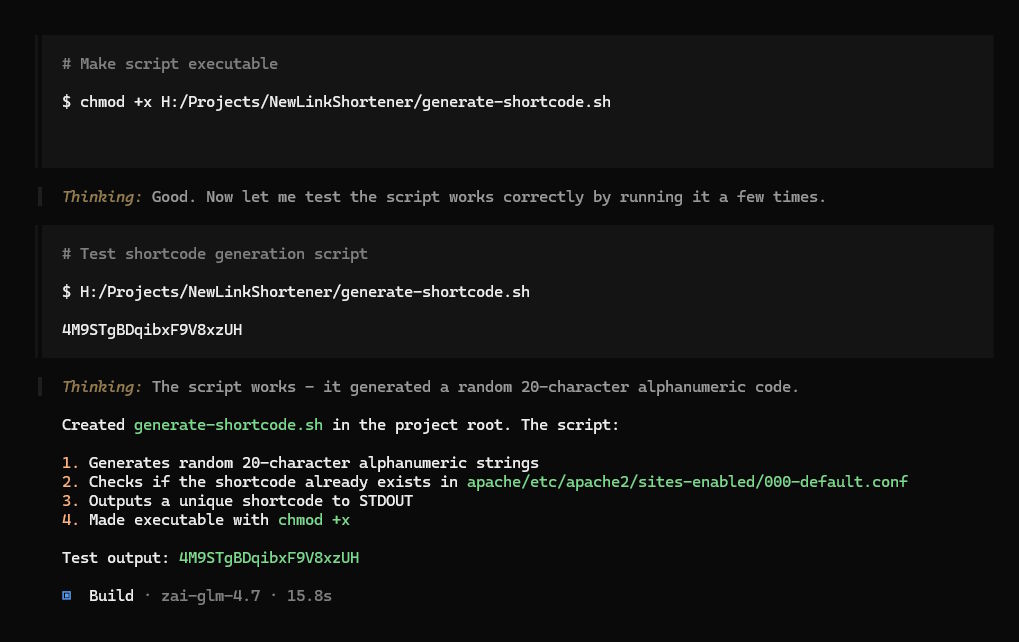

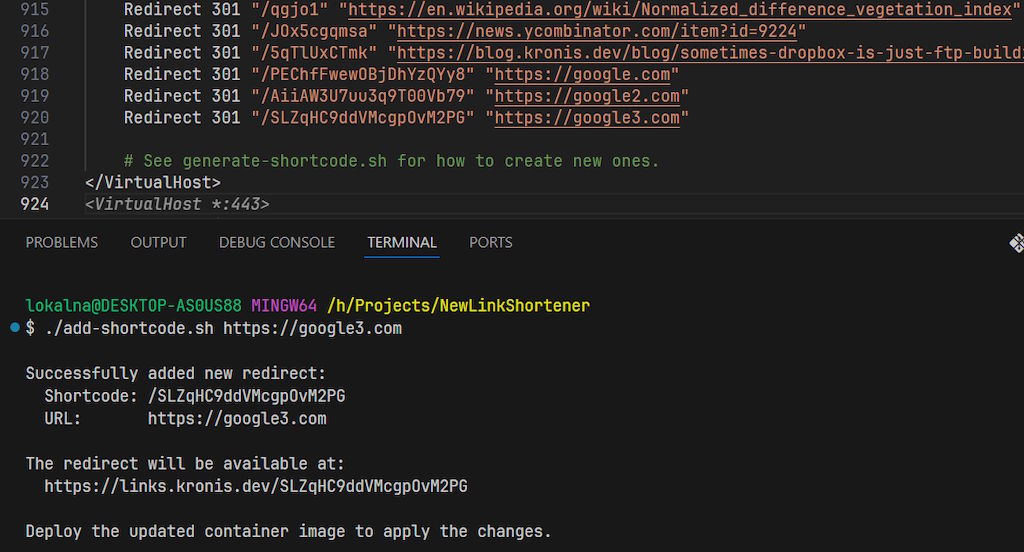

Now, obviously the file can get quite long, and under normal circumstances I won't want to edit it manually. Not that it's hard to do, but convenience scripts are so much nicer:

(I still played around with the link length a bit; I think 10 characters is more than enough, especially given that I can easily check for duplicates already in the file.)
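A rough back-of-the-envelope check on why 10 characters is plenty, assuming each character is drawn uniformly from the 62 symbols in `a-zA-Z0-9` (which is what filtering `/dev/urandom` through `tr -dc` gives you) and using the roughly 900 migrated links as n; counting every existing link as a possible clash gives an upper bound:

```latex
\begin{aligned}
N &= 62^{10} = 839\,299\,365\,868\,340\,224 \approx 8.39 \times 10^{17} \\
P(\text{new code already taken}) &\le \frac{n}{N} = \frac{900}{8.39 \times 10^{17}} \approx 1.07 \times 10^{-15}
\end{aligned}
```

So the retry loop in the generator should essentially never need a second iteration; the duplicate check is cheap insurance rather than a necessity.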

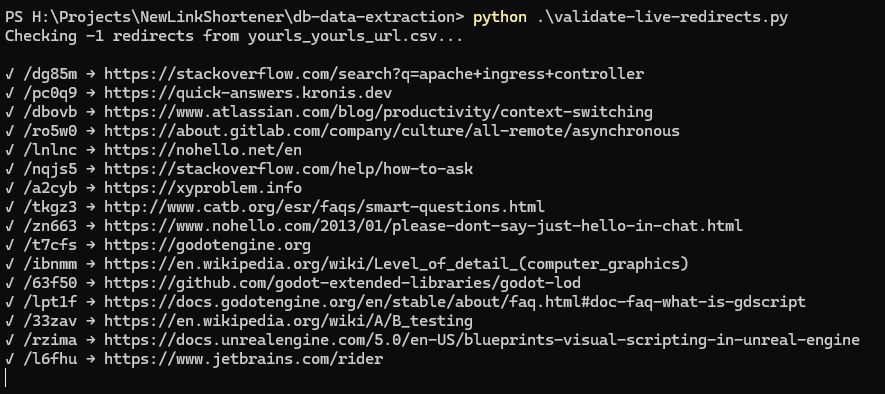
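A convenience script along those lines might look like the sketch below. It assumes the same config layout and the same 10-character `a-zA-Z0-9` codes as the generator script; `add_link` is a hypothetical name, and the URL check is a deliberate simplification.

```bash
#!/bin/bash
# Hypothetical "add link" helper: generate an unused code the same way the
# short code script does, then append a Redirect directive to the config.
# Usage: add_link <config-file> <target-url>   (prints the new short code)
add_link() {
    local config="$1" target="$2" code
    # Refuse anything that isn't an absolute http(s) URL without spaces/quotes
    local url_re='^https?://[^[:space:]"]+$'
    if ! [[ $target =~ $url_re ]]; then
        echo "not an http(s) URL: $target" >&2
        return 1
    fi
    # Draw 10 alphanumeric characters until the code isn't already in the file
    while :; do
        code=$(LC_ALL=C tr -dc 'a-zA-Z0-9' < /dev/urandom | head -c 10)
        grep -qF "\"/${code}\"" "$config" 2>/dev/null || break
    done
    printf 'Redirect 301 "/%s" "%s"\n' "$code" "$target" >> "$config"
    echo "$code"
}
```

Rejecting non-URLs at insertion time would also have kept the "link that wasn't a link" out of the config in the first place.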

Not only did I migrate all of the links over, but I also got a Python script running that goes through every single line in the CSV file and checks whether curl gets the expected Location header back.
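For anyone who'd rather stay in shell, a rough equivalent of that check could look like this. It is a sketch, not the Python script itself: the `shortcode,target_url` CSV layout, the placeholder `BASE_URL` and the function names are all assumptions. It relies on curl's `-w '%{redirect_url}'`, which reports where a redirect would go without following it.

```bash
#!/bin/bash
# Sketch of a redirect checker (a shell stand-in, not the original Python).
# Assumed CSV layout: shortcode,target_url  (no header, no quoted commas).
# BASE_URL is a placeholder for wherever the shortener is served from.
BASE_URL="${BASE_URL:-https://links.example.com}"

# Print the redirect target curl sees for one short code (empty if none).
# Note: curl may normalize URLs slightly, so expect the odd false positive.
location_of() {
    curl -s -o /dev/null -w '%{redirect_url}' "${BASE_URL}/$1" < /dev/null
}

# Check every row; print mismatches and return 1 if there are any.
check_csv() {
    local csv="$1" code target actual failures=0
    while IFS=, read -r code target || [ -n "$code" ]; do
        [ -z "$code" ] && continue
        actual=$(location_of "$code")
        if [ "$actual" != "$target" ]; then
            printf 'MISMATCH %s: expected %s, got %s\n' \
                "$code" "$target" "${actual:-<none>}"
            failures=$((failures + 1))
        fi
    done < "$csv"
    [ "$failures" -eq 0 ]
}
```

Running something like `BASE_URL=https://your-shortener check_csv links.csv` after each deploy would turn "did I break any links?" into a single exit code for CI.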

But wait, there's more! I had a bit of technical debt lying around. You see, before YOURLS, I used a bunch of regular, direct links. Although those didn't tend to break, since I had only run into simpler ones back then, I decided that migrating them as well might not be too hard, so after a bunch of parsing, preparation and updates, every single URL in my blog (unless I messed up here and there) now uses this new link shortener.
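The first step of such a migration is just finding the direct links. Here's a sketch, assuming the posts are Markdown (`*.md`) files, as Grav pages are, and that short links live on a placeholder `SHORT_HOST`; `find_direct_links` is a hypothetical name and the URL regex is intentionally rough.

```bash
#!/bin/bash
# Sketch: list external URLs in blog posts that don't use the shortener yet.
# Assumptions: posts are *.md files under one directory, and short links
# live on SHORT_HOST, which is a placeholder domain here.
SHORT_HOST="${SHORT_HOST:-links.example.com}"

find_direct_links() {
    local posts_dir="$1"
    # Rough URL match: stop at whitespace, quotes, brackets and parentheses,
    # which covers Markdown links like [text](https://...).
    grep -rhoE --include='*.md' 'https?://[^][()<> "]+' "$posts_dir" \
        | grep -vF "://${SHORT_HOST}/" \
        | sort -u
}
```

Pointed at something like Grav's `user/pages` directory, the output is a deduplicated list that can be fed, line by line, into whatever script adds links to the shortener config.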

It's actually immensely useful to have both repos locally and to let scripts in one repo call scripts in the other: that's how I iterated through my blog posts and automatically added whatever was needed to the link shortener configuration.

What's next?

So, the link shortener is up and works pretty well! It did take me a while, but that's because I ran into a slight, yet manageable, amount of scope creep, as sometimes happens. The good news is that this setup should be good enough to last me another decade without too many issues along the way, probably longer, given the manageable number of links my blogging (and other stuff) creates.

Oh, also, the container image builds and deploys in about 5-10 seconds. It's kind of the sweet spot of KISS and YAGNI.

Looking to the future, I might also consider moving away from Grav altogether, if it ever becomes a problem. For now, the current setup is good enough: I get the benefits of a CMS while normally treating it as something headless (it's built and deployed from my Git repo, and I'm writing this post in Visual Studio Code), yet it's hard to beat the security and simplicity of a bunch of HTML files.

For some documentation at work we've already moved to mdBook, and in practice it works very well! Maybe it's better suited for wiki-style sites, but part of me also daydreams about getting rid of PHP and the dynamic logic Grav currently needs, until some day the whole site is built on my server and just sent off for deployment.

Or I could go down the rabbit hole of writing my own CMS from scratch, because even if I made something perhaps more horrible than Grav, it would still be a cool experience. Either way, even if I wouldn't hold my head high and confidently tell people that "Dropbox is just FTP", sometimes you can indeed build something that's good enough for you with relatively few issues along the way.
