The SSH tunnel is dead, long live Tailscale


I ended up reworking how my hybrid cloud does networking.

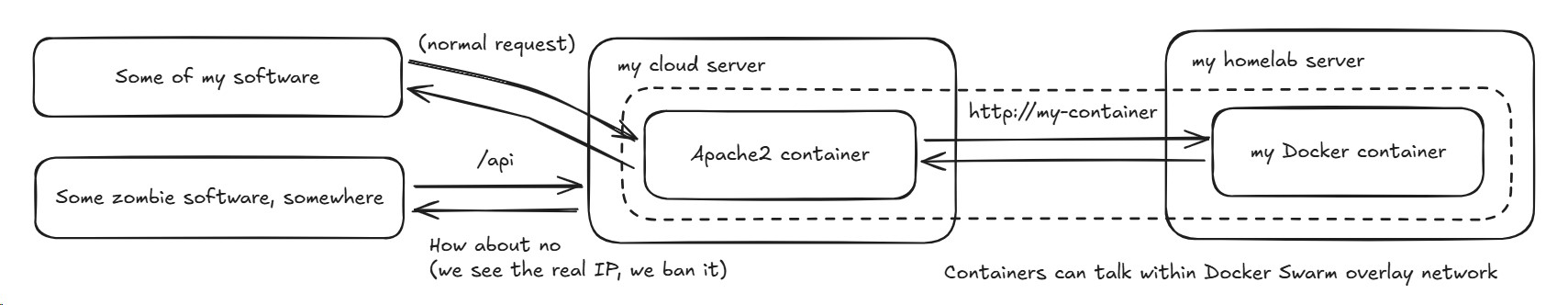

In the previous post, it became apparent that my web servers should work as a proper point of ingress: the kind that sees the IP addresses of incoming traffic, so it's easier for me to drop anything I don't like. The simplest way to achieve that is to run the web servers directly on the nodes that receive the traffic, which makes more sense than trying to use SSH tunnels or having me mess around with iptables rules.

Furthermore, if I don't want to write a bunch of manual rules about what traffic should be forwarded where, or mess with IP addresses directly in my web server configuration, I can just use my Docker Swarm cluster for that. I can use it to tell the web server that's running on the public VPS that it needs to act as a reverse proxy for a container that's running on my homelab, behind NAT. While I could previously forward traffic to ports 80 and 443 over an SSH tunnel, the limitations of that quickly became apparent.

Remember this image from the previous post?

Trying to use Docker overlay networks doesn't work when you can't make the nodes communicate with one another over UDP ports as well, not just TCP. That could be achievable with SSH tunnels, but would involve some unpleasant hacks. In addition, you need a bunch of ports, so the configuration for that quickly becomes unwieldy.
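For reference, here is a small sketch of the ports that the Docker documentation lists for Swarm nodes to reach one another, which shows where the UDP requirement comes from. The function names are made up for illustration; only the port numbers come from Docker's docs.

```shell
# Ports that Swarm nodes need to reach on one another,
# as listed in the Docker documentation.
swarm_ports() {
  cat <<'EOF'
2377/tcp cluster management
7946/tcp node-to-node communication
7946/udp node-to-node communication
4789/udp overlay network (VXLAN) traffic
EOF
}

# How many of those cannot be covered by a plain TCP tunnel.
swarm_udp_port_count() {
  swarm_ports | grep -c '/udp'
}
```

Half of the list is UDP, which is exactly the part that a plain SSH port forward does not handle.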

Not only that, but with how Docker Swarm works, it expects an IP address and port combination for each node, instead of just random ports exposed on 127.0.0.1. In other words, I needed a proper networking setup to allow my servers to talk with one another, otherwise the containers are somewhat useless:

At this point, it's probably useful to point out that some of the details in the post will be a bit blurry, because I finished doing all of this around 3 AM and even now, as I write this, the sleep deprivation still hasn't quite worn off. The joys of trying to improve things and running into more and more and more issues, I guess.

Regardless, if I didn't want to stay up until 5 AM instead of 3 AM, there was one solution that made everything easier!

Tailscale

Enter Tailscale, a piece of software that promises to make establishing private networks easy and has client software for Linux, Windows, Mac and a bunch of other platforms. There's even a Docker container, but it did seem a tad unwieldy. I hadn't actually needed to use Tailscale before, because for the most part WireGuard had worked for getting around NAT as well, when I had just a few nodes.

Yet now, I wanted a virtual network where every single node could reach out to every other one. Not just for containers, but also things like monitoring with Zabbix or some backup software, to make things just a little bit more safe, in addition to any other auth mechanisms that the software has.

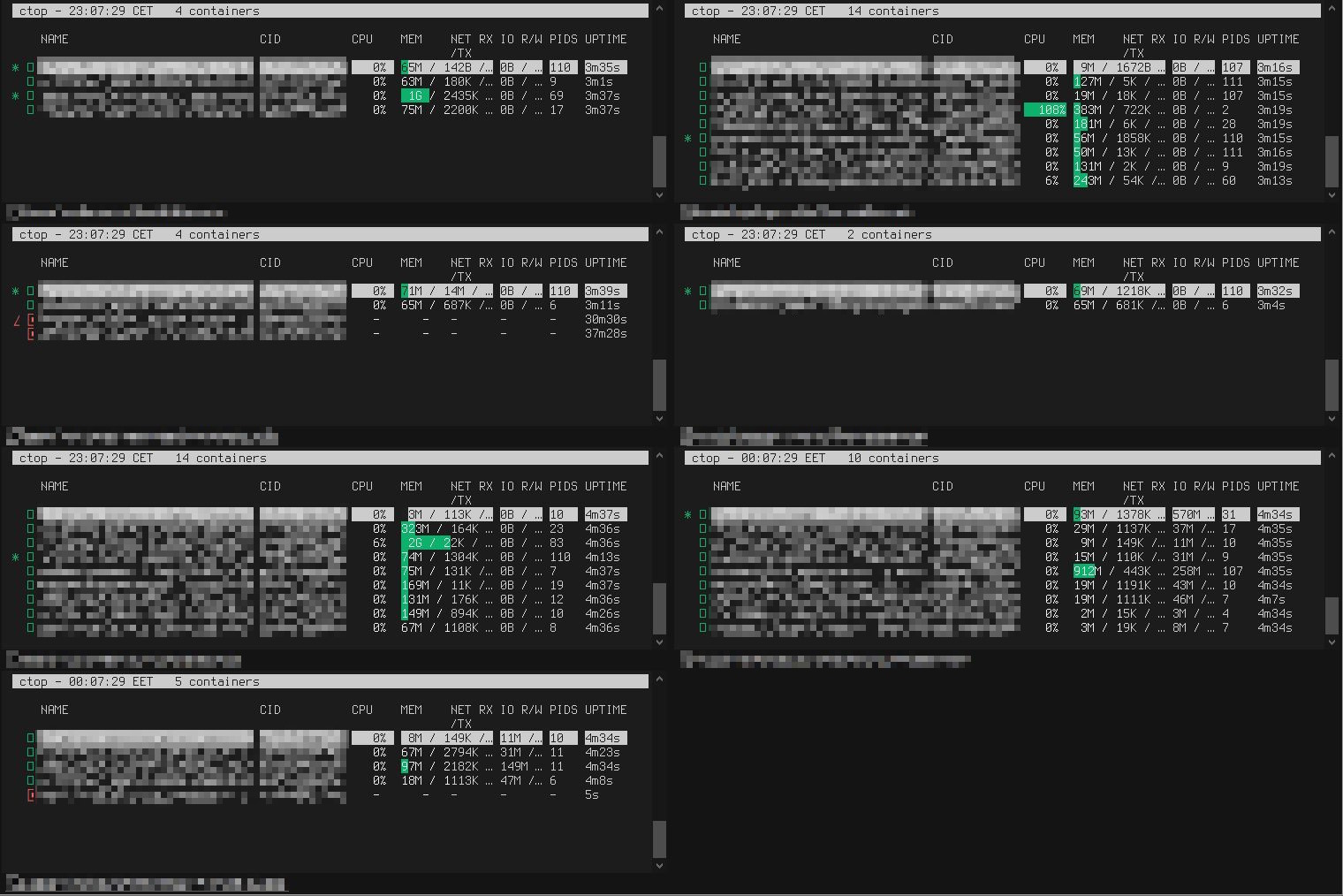

With WireGuard, I'd need to manage the private and public keys for each of the servers. For example, servers 1-6 would need to know the public key of server 7, and servers 1-5 and 7 would need to know the one for server 6. You can imagine how the configuration would become a little bit unwieldy. Tailscale solves all of that for me. I just install their software, join the nodes to a network and don't have to worry too much about it:

(notice how I try to blur some information here; sometimes I'll get a bit overzealous with that, but that's because right now everything is a blur. Also, to any state actors or other people motivated enough to recover the information from some pixelation by knowing what the font in question is: please don't steal my very valuable meme stash on the servers. Adding black bars would break up the text too much.)
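To put a rough number on the WireGuard bookkeeping mentioned above: in a full mesh, every node lists every other node as a peer. A tiny sketch of that arithmetic, with a made-up function name:

```shell
# Peer entries needed for a full mesh of N nodes:
# every node lists every other node, so N * (N - 1) in total.
mesh_peer_entries() {
  echo $(( $1 * ($1 - 1) ))
}

mesh_peer_entries 7   # 7 nodes with 6 peers each, prints 42
```

Adding an eighth node would mean touching the configuration on all of the existing seven as well, which is the part that gets old quickly.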

Tailscale also has pretty decent pricing, where the free tier, at the time of writing, gives you the ability to connect up to 100 devices. I'm actually surprised that they're not a bigger corporation, because their offering is simple to use and very useful. Not a paid ad or anything, I don't really do those, I just like what they have. Of course, it's a bit naughty to give data about my internal network to some corporation, but then again, if they ever became truly evil, I could just drop them and do the grunt work to switch to WireGuard directly.

But alas, every node is now connected, there is transparent NAT traversal and life is good. All that's left is to make the Swarm cluster use it.

First, I make the worker nodes leave the Swarm, with:

docker swarm leave

followed by the manager node. This does bring everything down, but thankfully I don't care that much about the downtime in my case.

I then re-create the cluster, specifying the virtual network addresses to use, for example:

docker swarm init --advertise-addr <manager-tailnet-ip> --listen-addr <manager-tailnet-ip> --task-history-limit=3

and then join the nodes to the cluster, like:

docker swarm join --advertise-addr <worker-tailnet-ip> --listen-addr <worker-tailnet-ip> --token <swarm-worker-join-token> <manager-tailnet-ip>:2377

The added bonus here is that all Swarm traffic will be routed through this network, meaning that it's also a little bit more secure. After a few minutes of work, the cluster seems to be working okay. I can then redeploy my stacks and it all should just work, right?
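Since the whole point of the commands above is to advertise the Tailscale address rather than a public or LAN one, a small guard can catch the wrong address being pasted in. This is only a sketch: the function names are hypothetical, the check relies on Tailscale handing out IPv4 addresses from 100.64.0.0/10, and the command is printed rather than executed.

```shell
# Succeeds if the argument looks like an IPv4 address in 100.64.0.0/10.
# This is a loose shape check, the last two octets are not range-checked.
is_tailnet_ip() {
  case $1 in
    *[!0-9.]*|*.*.*.*.*|*..*|*.) return 1 ;;
  esac
  case $1 in
    100.6[4-9].*.*|100.[7-9][0-9].*.*|100.1[01][0-9].*.*|100.12[0-7].*.*) return 0 ;;
  esac
  return 1
}

# Prints (does not run) the init command for a given manager address.
swarm_init_cmd() {
  if ! is_tailnet_ip "$1"; then
    echo "refusing: $1 is not in 100.64.0.0/10" >&2
    return 1
  fi
  echo "docker swarm init --advertise-addr $1 --listen-addr $1"
}
```

On a node that has already joined the tailnet, something like `swarm_init_cmd "$(tailscale ip -4)"` would print the command to review before running it.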

We can't have nice things

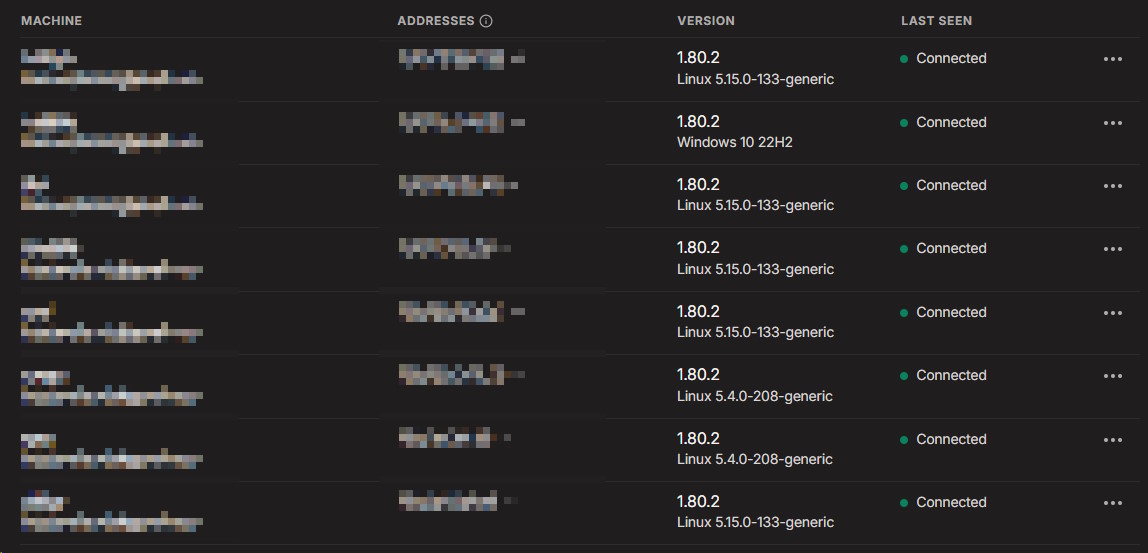

No, not really. Initially, things seem okay. But as I redeploy a bunch of web servers and a bunch of containers, suddenly I'm getting networking issues.

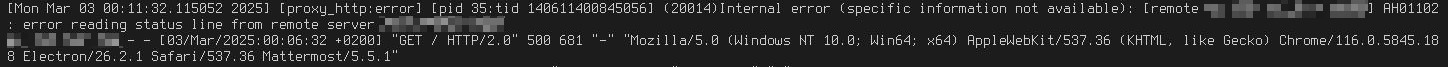

For example, there are errors along the lines of:

[proxy_http:error] ... (20014)Internal error (specific information not available): [remote ...] AH01102: error reading status line from remote server ...

in the web server logs when I make requests that cross server boundaries:

and

At first, I thought that maybe it's due to issues with the web servers themselves or the containers running behind them, but it got even weirder.

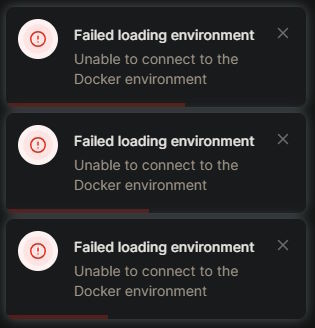

For example, Mattermost in the native client doesn't work:

Mattermost when opened in Chromium based browsers like Edge doesn't work.

Mattermost when opened in Firefox seems to kind of work, some files loading but not others.

curl seems to always return correct responses, at least for the files that I checked.

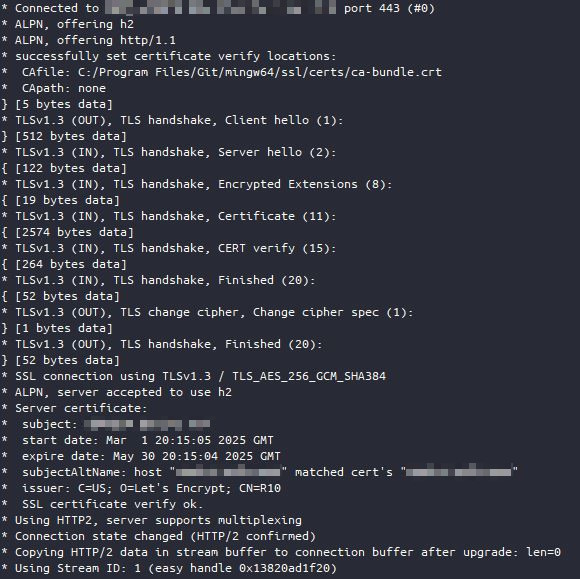

There's also nothing wrong with the TLS certificates either:

It's just your average flaky connection situation, except that it doesn't work like 90% of the time and works 10% of the time. Highly confusing and hard to track down. At this point I was wondering about whether this was related to the amount of traffic going through the network and whether the issue was on Tailscale's end or my end, since there's no way their service would be that bad.

My suspicions were somewhat confirmed, because initially Portainer had worked pretty fast, but now I couldn't even load the list of deployed stacks:

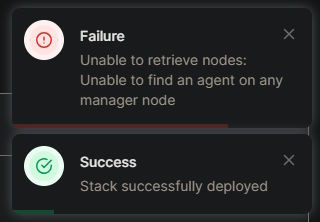

It's even weirder, though. Sometimes I could deploy new stacks, but trying to get their status immediately afterwards would fail, leading to things like this in the web interface:

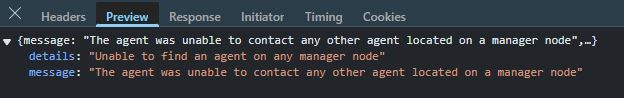

The error itself would be pretty consistent:

Unable to find an agent on any manager node

The agent was unable to contact any other agent located on a manager node

which I could see both in the browser:

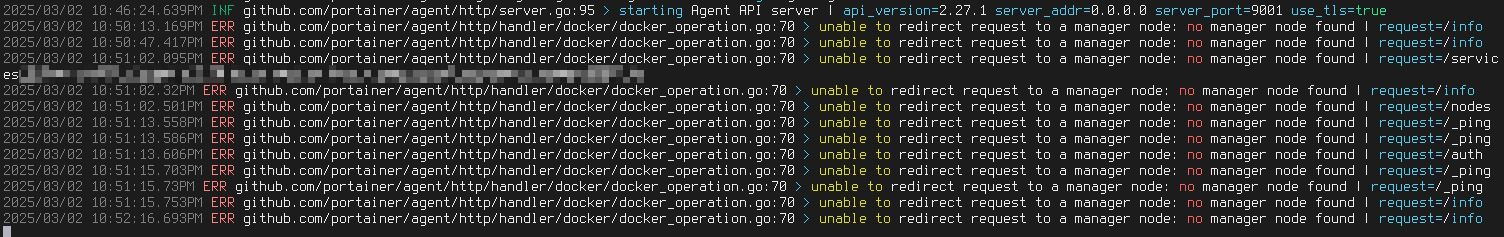

and in the logs for Portainer itself:

This definitely means that something was broken with how things are networked at a lower level, because to even get that error in the browser, the web server needs to work correctly, at least for some requests, whereas if I see that message in the Portainer logs, then the issue is present with traffic that doesn't go through the web server.

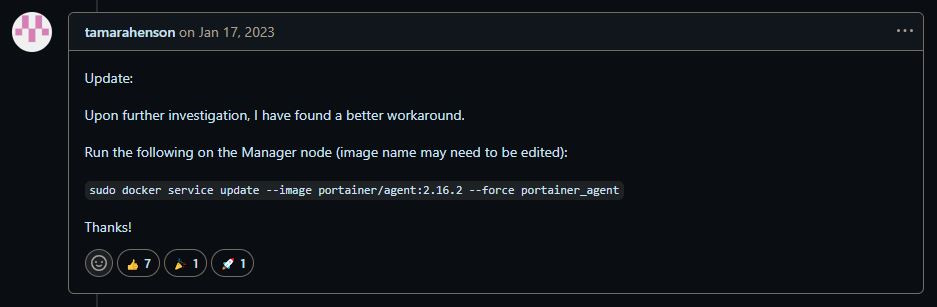

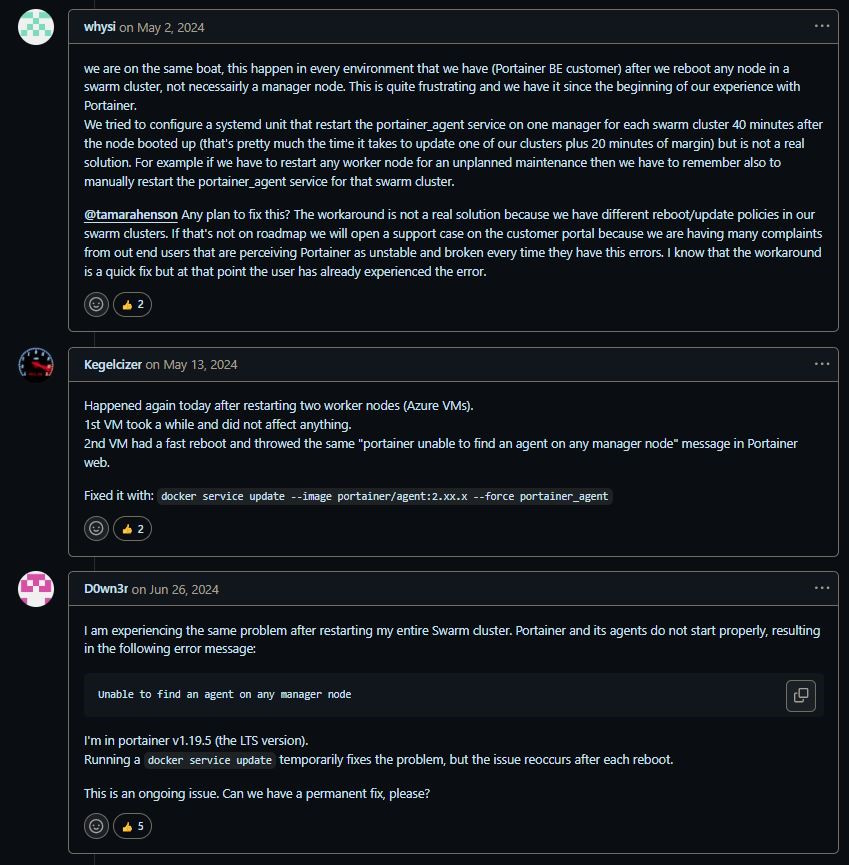

It could have still been something else, like a Portainer misconfiguration and someone actually had a temporary workaround for something like that:

Sadly, that's not an actual fix and people complained that such issues have been around for a long time:

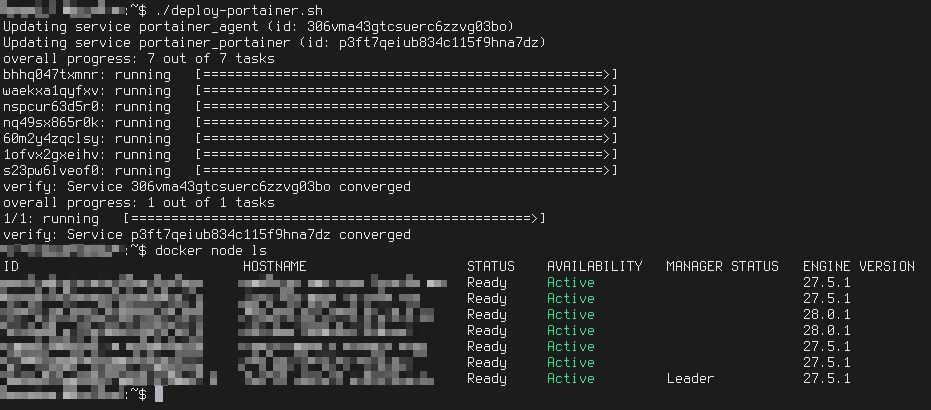

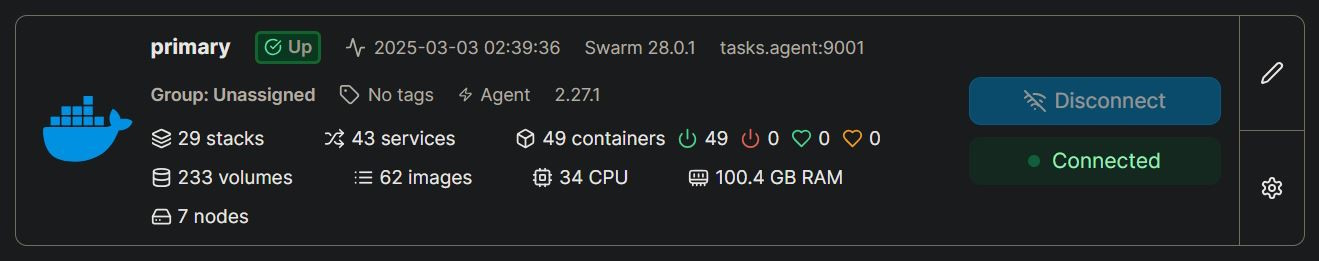

Unfortunately, that didn't help me. I wrote a script to do the workaround more easily, even do full redeploys of the Portainer stack. It would run successfully, all of the nodes would still show up as reachable:

After that, things would be great for about a minute and the network condition would then deteriorate severely once more. This led me to believe that the network itself was definitely to blame, especially when it's hit by more traffic. This would explain why everything worked with a bare cluster, but got much worse once I had ~50 containers running across the nodes.

The actual issue

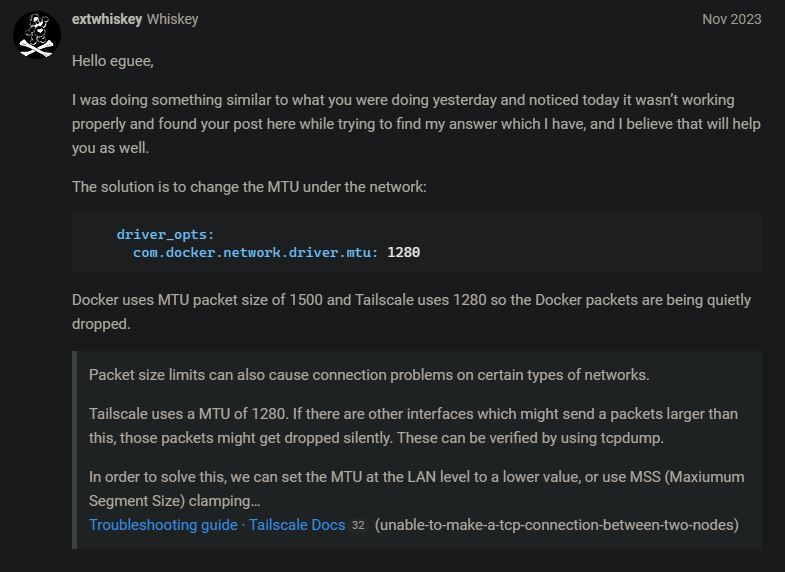

I stumbled upon someone talking about how the maximum transmission unit (MTU) configuration had been a problem for them, specifically when trying to run Tailscale and that lowering that value resolved their issues.

Again, I'm not a networking engineer, so all I can do is spitball. It's along the lines of the network doing the equivalent of trying to put large luggage cases into containers that don't quite fit them, and when the cases are fully packed, they sometimes fall off and make a mess. The linked Wikipedia page is actually both highly technical and quite vague, but you get the idea:

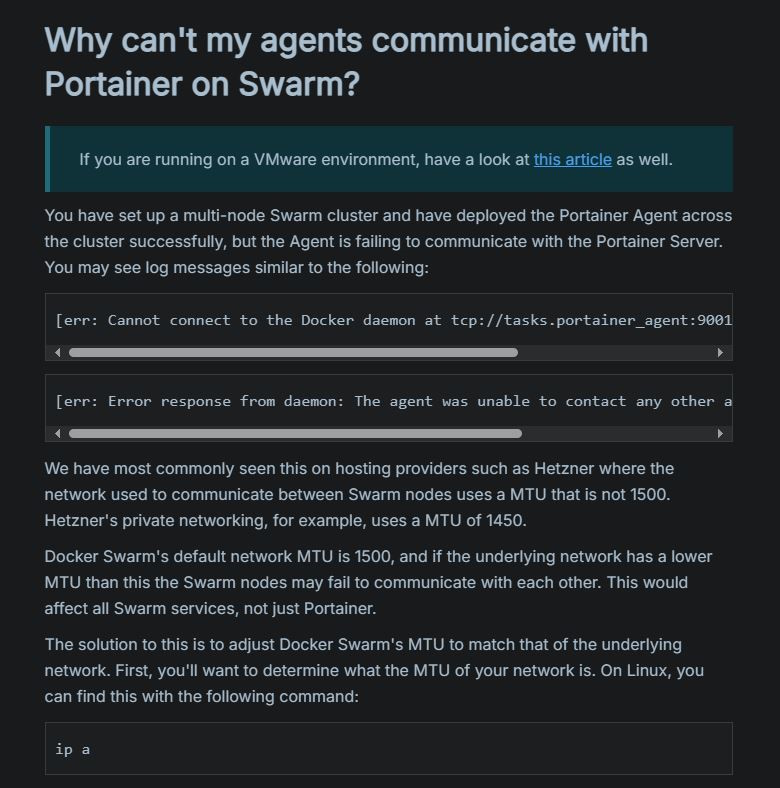
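The luggage analogy can be made a bit more concrete with some arithmetic. The sketch below assumes plain IPv4 without options (a 20 byte IP header) and an 8 byte ICMP header; the function names are made up.

```shell
# Largest ICMP payload that fits in one unfragmented IPv4 packet:
# the MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header.
ping_payload_for_mtu() {
  echo $(( $1 - 28 ))
}

# Succeeds if a packet of the given size fits within the given MTU.
fits_mtu() {
  [ "$1" -le "$2" ]
}

ping_payload_for_mtu 1280   # prints 1252
```

On Linux, `ping -M do -s 1252 <peer-tailnet-ip>` sends a packet of exactly 1280 bytes with fragmentation prohibited, so it is one way to see whether a given size actually makes it across. A full 1500 byte packet, by contrast, does not pass `fits_mtu 1500 1280`.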

Upon digging a bit deeper, even Portainer has similar suggestions, so it would appear that I wasn't the only person who has run into such issues:

The command to actually check the MTU sizes for each interface is pretty simple, though not the one that you'll commonly see mentioned:

ifconfig -s

This shows the problematic 1500 value, whereas 1280 was suggested, the same MTU that Tailscale uses for their networking:

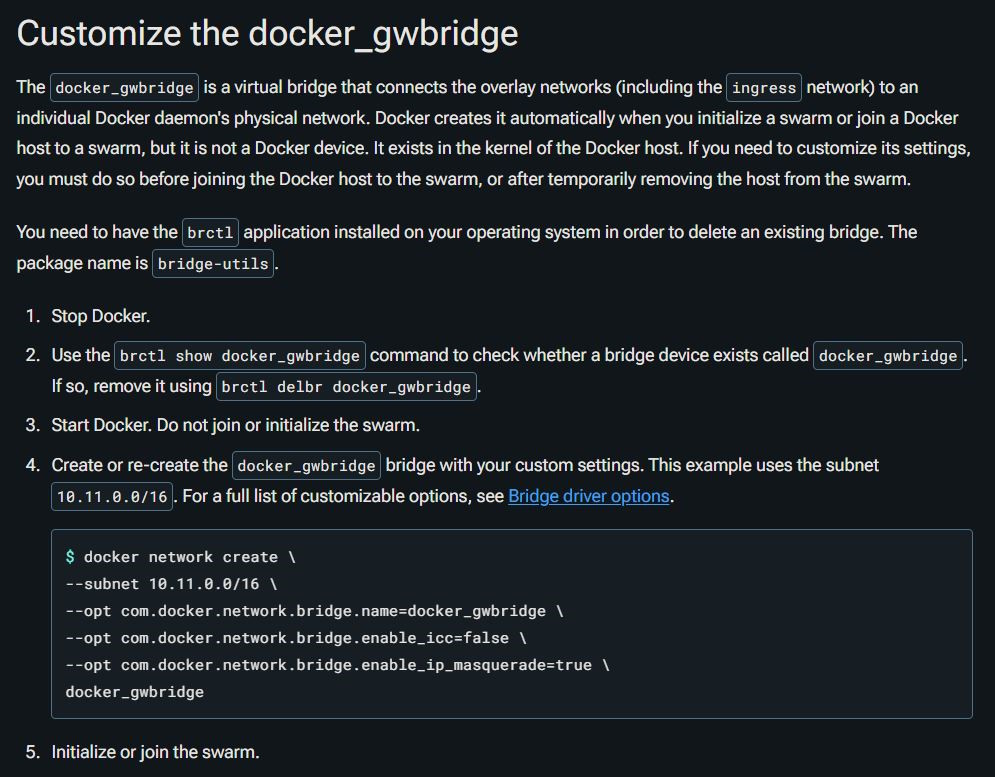
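As an alternative to eyeballing the table, Linux also exposes each interface's MTU as a file under /sys/class/net. A rough sketch that lists every interface above a given limit follows; the function name is hypothetical, and the optional second argument only exists so that it can be pointed at another directory.

```shell
# Prints "<interface> <mtu>" for every interface whose MTU is above the limit.
# Reads /sys/class/net/<interface>/mtu unless another root is given.
ifaces_above_mtu() {
  local limit=$1 root=${2:-/sys/class/net} dir mtu
  for dir in "$root"/*; do
    [ -r "$dir/mtu" ] || continue
    mtu=$(cat "$dir/mtu")
    if [ "$mtu" -gt "$limit" ]; then
      echo "$(basename "$dir") $mtu"
    fi
  done
}
```

Running `ifaces_above_mtu 1280` will also list the loopback interface, which usually has a very large MTU and is not a concern here.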

There's a bit of a problem with all of that, though. You see, if I want to change that value, then according to the Docker documentation I need to:

- destroy the whole Docker Swarm (including all of the deployed stacks, just like when initially leaving it)

- install the additional bridge-utils package

- stop Docker, delete the resource and recreate it

- then start Docker, re-create the whole cluster, re-deploy the stacks and hope it all works

Those instructions kind of suck! If it's needed for technical reasons, I guess I have no other options, but even if the tasks are simple, they're still quite time consuming and having an iteration of experimenting with a new configuration taking 15 minutes is never good.

Have a look for yourself:

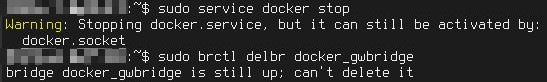

What's worse, the instructions don't actually work, I just get an error along the lines of:

bridge docker_gwbridge is still up; can't delete it

even when Docker is stopped, which is quite unfortunate:

At least I knew approximately what I'd need to do and thankfully I was on the precipice of finding the solution I needed.

The light at the end of the tailnet

I ended up doing a few more things along the way, such as putting the following in /etc/docker/daemon.json:

{
  "mtu": 1280,
  "ipv6": false,
  "experimental": true
}

The instructions for handling docker_gwbridge ended up looking like this for me:

# Check the status

ifconfig -s

brctl show docker_gwbridge

# Leave the swarm (apparently didn't even need to shut off Docker)

docker swarm leave

# Remove the interface and then re-create it, with the default configuration BUT with the smaller MTU of 1280

docker network rm docker_gwbridge

docker network create --subnet 172.18.0.0/16 --opt com.docker.network.bridge.name=docker_gwbridge --opt com.docker.network.bridge.enable_icc=false --opt com.docker.network.bridge.enable_ip_masquerade=true docker_gwbridge --opt com.docker.network.driver.mtu=1280

# Restart Docker anyways, just in case

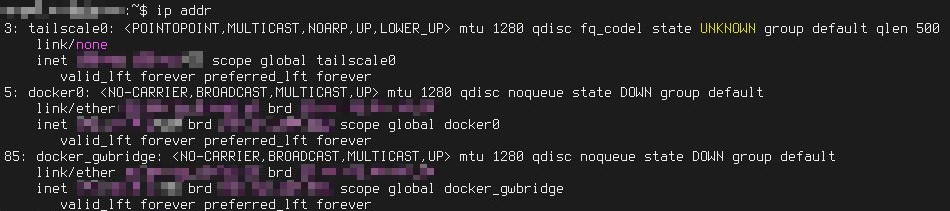

sudo service docker restartI have to say that at least the options you can use for the networks are documented pretty well, which gave me at least a bit more confidence. Finally, it seemed that the MTU values for both the Docker and the bridge interfaces matched that of the Tailscale one:

The other command that I mentioned previously also seemed to confirm this:

And just like that, Portainer started working nicely again, there were no more weird traffic issues, the web servers also properly did their job and I can finally forward traffic from the cloud VPSes to containers running on my homelab, without any custom iptables rules, nor do I need to manage a WireGuard install manually at the present time.

It just works:

Furthermore, the aforementioned benefits of having a virtual network that's private for the most part are also apparent. For example, I can now run Zabbix traffic over it and don't have to risk a misconfiguration exposing too much information to the world, because none of this traffic is publicly routed either. I don't have to worry about NAT. I can also use this same setup for some backup software as well:

I will still keep using mTLS and a custom CA for some of the sites that I'd prefer to keep more private and sit behind my reverse proxy, but it's yet another useful layer in my security model, which already looks like Swiss cheese, so it's nice to at least pretend that any of this is remotely secure:

Notice how I keep layering on technological solutions, even though I'll probably accidentally push an SSH key somewhere where it shouldn't be some day, the kind that doesn't have a passphrase protecting it. Oh, also, the image is more or less borrowed from this blog post, which talks about cybersecurity a little bit more.

Summary

I do have a lot of appreciation for the likes of WireGuard and Tailscale, even SSH tunnels are useful for specific use cases. Networking still sucks, that's the opinion that hasn't changed though.

For example:

- why doesn't the MTU drop to 1280 automatically if that's all that's supported over the Tailscale interface, isn't that the whole point of exposing that value?

- why are there no better mechanisms for detecting MTU related issues, instead of my web server just throwing Internal error (specific information not available)?

- why do the official instructions say that I have to wipe my entire container cluster, just to change one networking value? You're all about automation, you do it.

- why does Tailscale support 1280 in particular, instead of the more standard 1500? If it's so messy, why doesn't Linux also just opt for 1280 out of the box?

To me, it feels like there's something missing. There's tooling for working with most of this stuff, but not enough automation to avoid making us do the work. For example, Docker Swarm or Tailscale or whatever could just do a quick connection test, figure out what MTUs are supported and at what values things start failing, output the results in the logs and do some auto configuration for me. Fin.
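The kind of connection test described above could look roughly like a binary search over packet sizes. This is a sketch and not something Docker or Tailscale ship: the function names are hypothetical, it assumes that if one size gets through then every smaller size does too, and the ping based probe relies on the Linux iputils `-M do` flag.

```shell
# Binary search for the largest size in [lo, hi] for which the probe succeeds.
# The probe is any command or function that takes the size as its last argument.
# Prints 0 if no size in the range gets through.
find_max_size() {
  local lo=$1 hi=$2 best=0 mid
  shift 2
  while [ "$lo" -le "$hi" ]; do
    mid=$(( (lo + hi) / 2 ))
    if "$@" "$mid" >/dev/null 2>&1; then
      best=$mid
      lo=$(( mid + 1 ))
    else
      hi=$(( mid - 1 ))
    fi
  done
  echo "$best"
}

# Hypothetical probe: a single ping to host $1 with payload size $2
# and fragmentation prohibited.
probe_ping() {
  ping -M do -c 1 -W 1 -s "$2" "$1"
}
```

Something like `find_max_size 1200 1472 probe_ping <peer-tailnet-ip>` would print the largest ICMP payload that got through, and adding 28 bytes of headers to that gives the usable MTU for that path.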

That said, now my web servers see the proper IP addresses and it's become easier to ban whoever tries to spam me with requests for that dead domain from the previous post:

#!/bin/bash

INPUT_FILE="blocklist.txt"

# Drop port 80 traffic from every IP address listed in the file, one per line
while IFS= read -r ip; do
    echo "Blocking IP: $ip"
    iptables -A INPUT -p tcp --dport 80 -s "$ip" -j DROP
done < "$INPUT_FILE"

Or maybe I should just put fail2ban inside of my Apache2 container and set up some additional rules, go figure.
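If the blocklist is edited by hand, a slightly more defensive variant of the loop might be worth having. The sketch below only prints the iptables commands instead of running them, skips blank lines and comments, and rejects anything that does not look like an IPv4 address or CIDR range. The function name and the comment convention for the file are assumptions, not part of the original script.

```shell
# Prints one iptables command per usable line of the given blocklist file.
# Blank lines and lines starting with '#' are skipped; anything that does not
# look like an IPv4 address (optionally with a /prefix) is reported on stderr.
blocklist_rules() {
  local line
  while IFS= read -r line || [ -n "$line" ]; do
    case $line in
      ''|'#'*) continue ;;
    esac
    if printf '%s\n' "$line" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}(/[0-9]{1,2})?$'; then
      echo "iptables -A INPUT -p tcp --dport 80 -s $line -j DROP"
    else
      echo "skipping invalid entry: $line" >&2
    fi
  done < "$1"
}
```

The output of `blocklist_rules blocklist.txt` can be reviewed first and then piped into a root shell once it looks right.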
