Zombie software and haunted tunnels


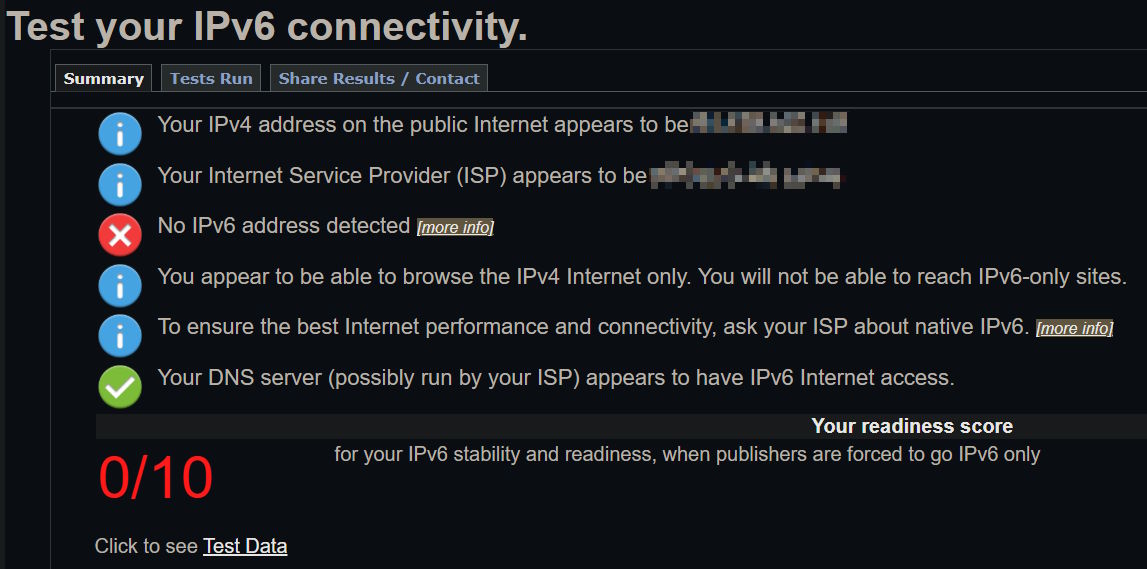

So here's a fun one, and by fun I mean absolutely frustrating. To start off, there's very little IPv6 support in my country (which seems to be the case for a lot of the world), meaning that I can't really use it at all:

IPv6 promised to be a great technology that would solve the shortcomings of IPv4 and simplify networking, which had become a bunch of workarounds for the limited number of available addresses. It seemed to be a pretty sound design and made a bunch of promises... which, judging by the above, are nowhere to be seen.

That leaves us with IPv4. Because there aren't enough addresses, nobody is going to give every device its own publicly accessible address (infrastructure in the middle willing), so running a bunch of servers in my room isn't easily doable. That wasn't the case at the university I went to, where I could connect a few boxes running Debian to the network and shortly after get addresses for them that I could reach from anywhere. That was a pretty nice setup while it lasted!

Now, before I continue, let me make something clear: I suck at networking. With every passing year, I suck at it a bit less, and perhaps some day I'll consider myself okay at it. Yet, as someone whose day job is primarily writing software and doing run-of-the-mill web development, my comfort zone only reaches so far. Configuring TLS? Sure. Configuring a load balancer? Okay. Making sure that the firewall plays nicely with things? No problem. Troubleshooting simple network-related issues? Doable. Having a tunnel here or there? Fine. Split VPN or split DNS? Sure thing.

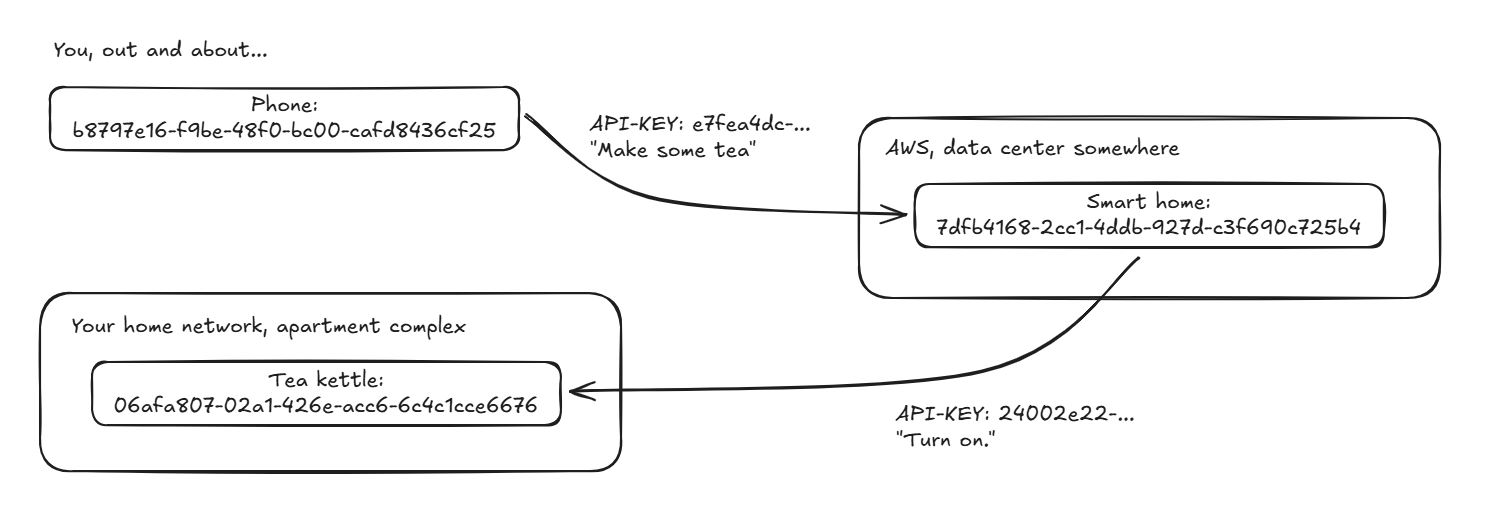

Yet the lower the level I need to look at, the murkier things get, and the entrenched technologies often feel like designs that aren't good, but also aren't bad enough to force us to move on to something new, so things just trudge along. In my eyes, in a perfect world every piece of hardware would be uniquely addressable. Pretend for a second that the OSI model doesn't exist and that every NIC has a single address, much like we already have MAC addresses. Essentially, a UUID that you could use as a publicly routable address:

Now, whether your PC, laptop, phone, fridge, tea kettle or light bulb would pick up when something tries to reach it is a different question. Whether they even need to be connected to any kind of network is also not something that I'll get into here. But if the world were built around such a concept, things could be really simple. Services like VPNs would surely still exist to tunnel your traffic through for privacy reasons, but if you wanted to reach your homelab server or your public cloud server, it'd all be one address and a few network hops away. I wouldn't hate networking then.

But that's not the world that we live in.

Haunted tunnels

In my case, my personal devices sit behind NAT. Even in the best case where my router is reachable from the outside, that still gives me one IP address that I can't exactly split between two of my homelab servers (e.g. two separate web servers) if I want to use standard ports and don't want any trickery.

I could probably pay for an additional static IP address, but that's outside the scope here, because ISPs love to charge you a bunch of money for it. Come to think of it, I can't even find static IP pricing on my current ISP's homepage, but I know that my previous ISP wanted 6 EUR per month per address. I can get a whole VPS, address included, for that amount.

To cope with that, I previously made my homelab accessible through a WireGuard network, forwarding the traffic through a publicly accessible VPS. So, even if my homelab can't be reached directly, it can reach out to the cloud server instead, and the cloud server can forward the traffic to it. Plus, if I ever move or change ISPs, I just need any sort of Internet connection and the homelab servers can still join the same network with the same configured IP addresses, so it's a pretty stable setup.

The actual configuration is also pretty simple, even if the blog post is a bit dated at this point. The problems started with actually routing the traffic, since I needed to mess around with iptables, which I really dislike. It's essentially software that's not very approachable and gives you ample opportunities to mess up. It's actually surprising that I haven't locked myself out of any servers while poking around in the dark. Either way, the setup was a bit of an interplay between WireGuard and some additional routing. It worked, but was perhaps a bit too complex.

So, wanting to keep my setup simple, I looked in another direction: SSH tunnels.

Since I already run Linux distros and use SSH, it sounded pretty much perfect! I could use tools I already have to expose specific ports of local services (e.g. Apache2) on the remote server, without any additional software. Just a bit of configuration, along the lines of:

```
AllowTcpForwarding yes
GatewayPorts yes
```

in /etc/ssh/sshd_config and then either:

```
Host my-server.com
    HostName my-server.com
    User myuser
    # HTTP
    RemoteForward 80 127.0.0.1:80
    # HTTPS
    RemoteForward 443 127.0.0.1:443
```

in ~/.ssh/config, or just pass the forwards directly on the command line:

```shell
ssh -i ~/.ssh/whatever -R 0.0.0.0:80:127.0.0.1:80 -R 0.0.0.0:443:127.0.0.1:443 myuser@my-server.com
```

There's actually a pretty nice article that goes into more detail, which could be useful to anyone who wants to learn a bit more.
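When a tunnel like this looks healthy but nothing answers from the outside, it helps to check where sshd on the cloud server actually bound the forwarded ports. Per the OpenSSH manual pages, sshd binds remote forwards to the loopback address unless GatewayPorts says otherwise, and privileged ports such as 80 and 443 can only be forwarded when logging in as root on the remote machine; sshd -T prints the effective server settings if in doubt. Below is a minimal sketch with a hypothetical helper, listeners_on_port, that filters ss -Htln output for one port, assuming the local address:port sits in the fourth column (which is how current iproute2 versions print it).

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `ss -Htln` output on stdin and prints the
# "address:port" of every listening TCP socket bound to the given port.
# Assumes the 4th whitespace-separated column is the local address:port.
listeners_on_port() {
  local port="$1"
  awk -v suffix=":${port}" '
    # Compare the end of the local address with ":<port>", so that
    # port 80 does not also match 8080 or 180.
    substr($4, length($4) - length(suffix) + 1) == suffix { print $4 }
  '
}

# Usage on the cloud server while the tunnel is up:
#   ss -Htln | listeners_on_port 80
# "0.0.0.0:80" or "[::]:80" means the forward is reachable from outside;
# "127.0.0.1:80" means the port is only bound to loopback.
```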

Of course, there are a few caveats:

- you can only tunnel TCP, not UDP, at least without involving a bunch of other hacks

- it sometimes doesn't work, good luck figuring out why

It might not be super descriptive, but guess what: I still don't know why the connection would drop every now and then. Enter the next piece of software in the puzzle: autossh.

At this point, I still hadn't lost faith in getting this to work; it still seemed simpler than going back to WireGuard and shuffling traffic around with iptables (I hate it that much). After all, I just needed the following to get things working:

```shell
autossh -M 0 -o "ServerAliveInterval 20" -o "ServerAliveCountMax 3" -o "ExitOnForwardFailure=yes" -N "$SERVER_URL" > "$LOG_FILE"
```

Except that also didn't work: same issue. No amount of messing around with the monitoring ports, the timeout values or whatever else seemed to help. Eventually, I decided to get a hacky solution working, along the lines of:

```shell
#!/bin/bash

# Define paths and variables
PID_FILE="/some/path/autossh.pid"
LOG_FILE="/some/path/autossh.log"
SERVER_URL="my-server.com"

# Check if the PID file exists
if [ -f "$PID_FILE" ]; then
    # Read the PID from the file
    PID=$(cat "$PID_FILE")

    # Check if the process is running and kill it
    if ps -p "$PID" > /dev/null 2>&1; then
        echo "Killing existing process with PID $PID"
        kill "$PID" || { echo "Failed to kill process $PID"; exit 1; }
    fi

    # Remove the PID file
    rm -f "$PID_FILE"
fi

# Start autossh in the background and log both stdout and stderr
# (for more output, replace -v with -vvv, which is ssh's maximum verbosity)
autossh -v -M 0 -o "ServerAliveInterval 20" -o "ServerAliveCountMax 3" -o "ExitOnForwardFailure=yes" -N "$SERVER_URL" > "$LOG_FILE" 2>&1 &

# Save the PID of the background process
echo $! > "$PID_FILE"

echo "autossh started with PID $(cat "$PID_FILE") for server $SERVER_URL"
```

It still fits within one page. I can understand what the script does. I am totally not sinking deeper into the sunk cost fallacy. Not at all. Especially since the whole thing is a single file that cron can run on every server restart, or even periodically, if I accept that there are going to be occasional interruptions while I still can't resolve the connection just dying:

```
@reboot sleep 30 && /root/ssh-tunnel-to-cloud-server.sh
```

This, of course, isn't ideal. What if I decide to use some backup solution that needs an S3 bucket on my homelab, but it fails to upload backup files because the connection resets every now and then? I decided to look a bit more deeply into what was going on. After a cursory look at the logs, it seemed that the server having issues was actually getting way more traffic than the others (I have a few homelab servers).

So what's the cause?

Zombie software

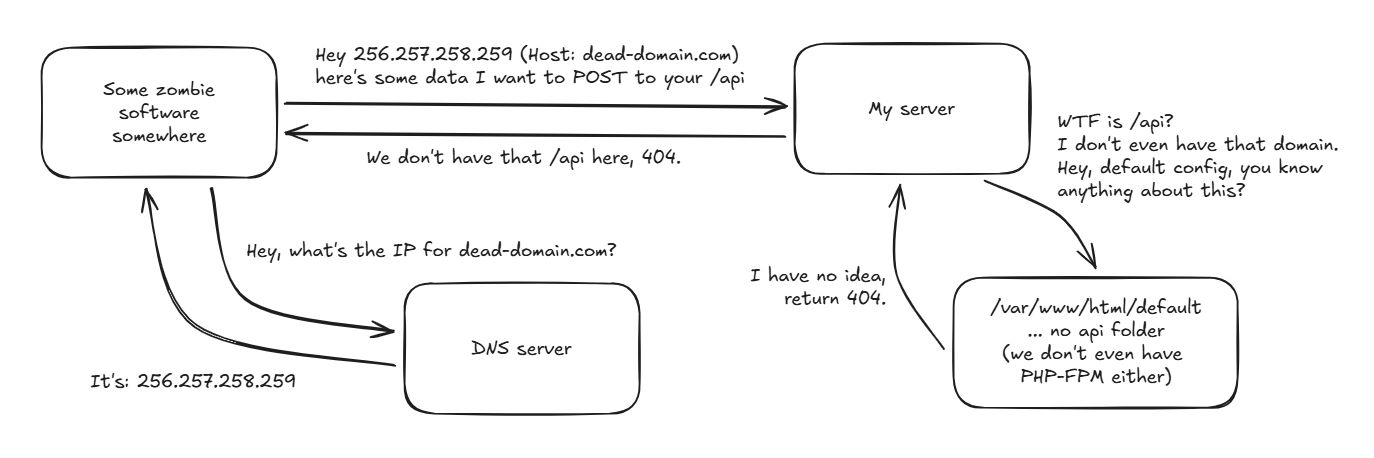

At first, I thought that maybe it was Mattermost or Nextcloud acting up and requesting data more often than they should. But nope, those were actually external requests coming in from across the world, asking for things that they shouldn't. Next, I figured that it was an attempt at finding vulnerabilities, just like how, if you have anything reachable over SSH without disabling password auth, within minutes you'll probably start getting (hopefully futile) attempts at cracking your password.

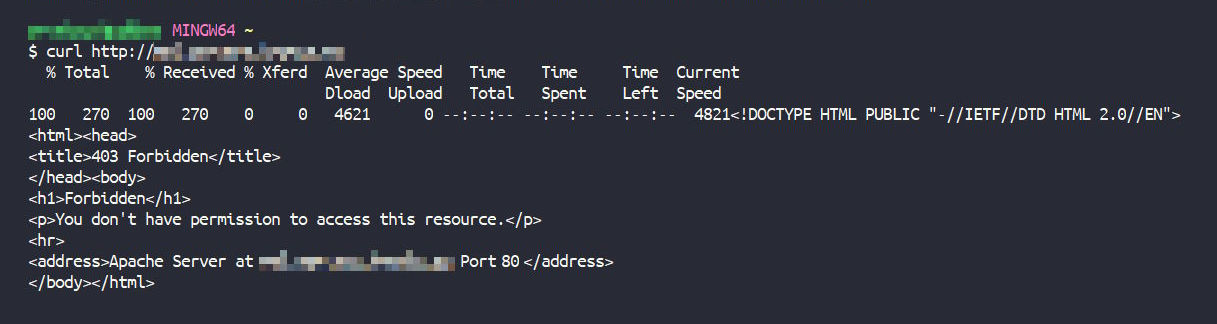

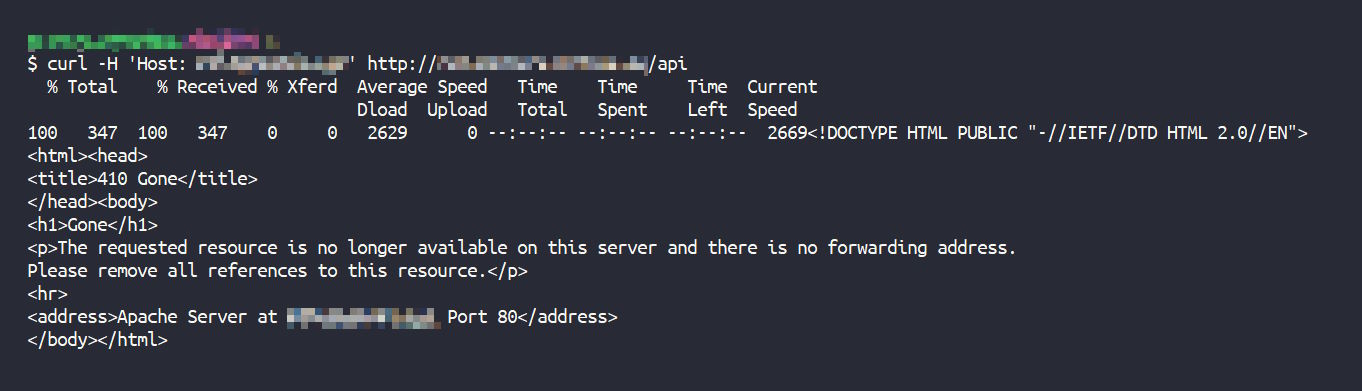

Yet it wasn't really an attack: the requests were periodic and always hit the same endpoint, /api, on the default virtual host and even without TLS (no HTTPS), meaning that they weren't even attempting to target a particular piece of software on my servers. Instead, they'd get this placeholder page baked into my Apache2 container images, which I'd left there for debugging purposes:

I'm a bit of a web developer myself, with a passion for graphic design.

As far as I'm concerned, though, if it was an attempt at hacking, it was a pretty bad one. Since I had recently migrated over to Contabo for cost reasons, my best guess was that someone still had their domain pointing at the IP address that had now been assigned to my server. Because my server doesn't actually have any of the content they were looking for, they were fruitlessly hitting the default page, along the lines of:

It was odd that the software wouldn't give up. I thought that maybe returning 403 (Forbidden) or 410 (Gone) might change its mind, so I made a quick change to remove the default page, along the lines of:

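```
<VirtualHost *:80>
    # Access control directives aren't allowed directly in <VirtualHost>, hence the <Location>.
    # "Require all denied" is the Apache 2.4 equivalent of the older "Deny from all".
    <Location "/">
        Require all denied
    </Location>
</VirtualHost>
```

Which would return the following to anyone attempting to access the resources: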

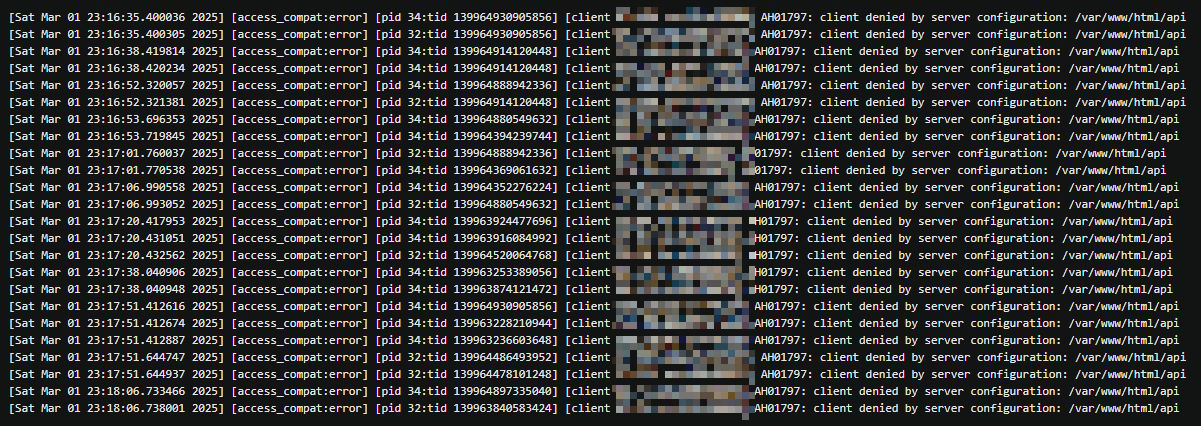

I thought that it might prevent similar issues in the future, but of course, nothing is ever so easy and oftentimes the software out there is rather badly behaved, so the requests still kept coming in from a handful of IP addresses:

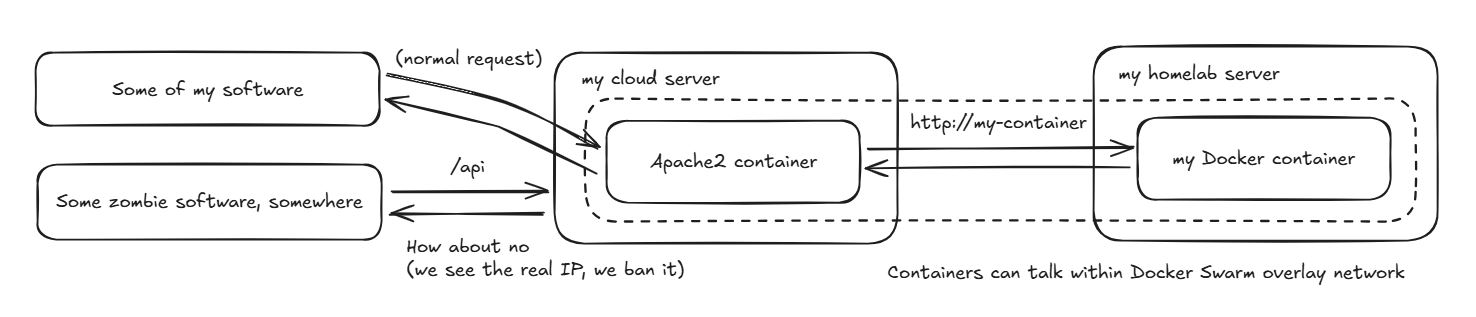

You know what the worst part is? If I run the web server on my local server and the cloud server pushes the traffic to it through the SSH tunnel, then there's no way for me to tell the real IP address. I can't really ban the badly behaving IP addresses outright, because the web server only sees the address of my cloud server as the source. This is also pretty bad for anything you might want to call a serious deployment from a security standpoint, yet perhaps isn't such a big deal for me at the moment.

Normally, you'd have an upstream load balancer pass you an X-Forwarded-For request header or something like that, but my whole setup was built on the idea that I wouldn't have to run two separate servers and coordinate between them - that'd be wholly counterproductive to my goal of reducing the number of components involved.

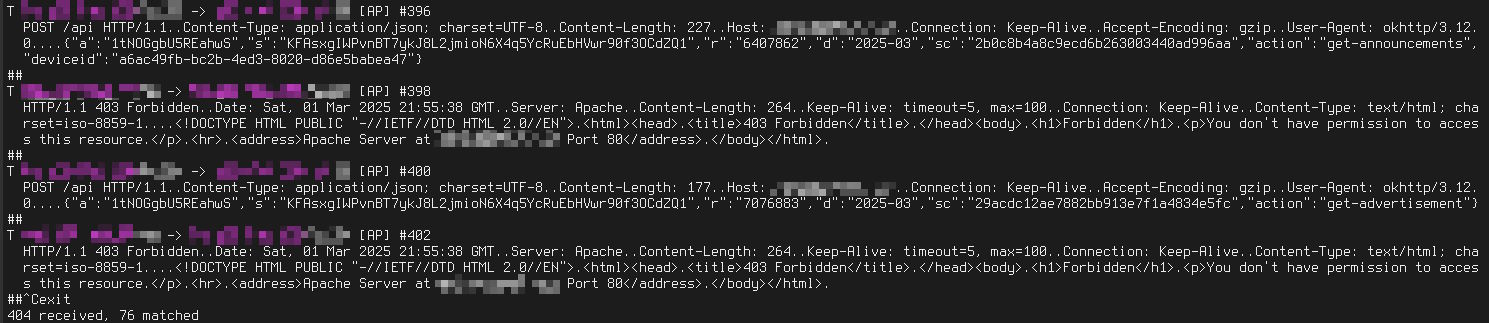

Looking at the exact contents of the requests with ngrep also didn't reveal much useful information, aside from the Host header and the domain that they were trying to use. You'll notice that I haven't really bothered scrubbing the data much, because it's sent over plain HTTP in the first place, to a server that they don't own. Oh, and they attempted to implement something like SOAP in a JSON API:

Heresy. Looking at the domain itself, it seems that it will expire at the end of this year unless they decide to renew it. If they do, I guess I'll have to reach out to them, but because they're located somewhere in the Caribbean, I didn't really feel like trying to contact them just yet and instead reached for a technical solution. Well, maybe also because it's 3 AM and I doubt I could compose a polite e-mail right now.
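For anyone who wants to do the same kind of digging, here's a minimal sketch for tallying which Host headers the stray plain-HTTP requests carry. It assumes ngrep's byline mode (-W byline), which prints each payload line on its own line and may show the carriage return at the end of each header as a trailing "."; the count_hosts name is hypothetical, and the capture itself needs root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads captured HTTP requests on stdin (e.g. the output
# of `ngrep -W byline`) and prints how often each Host header value appears,
# most frequent first. Trailing carriage returns (or the "." ngrep may print
# in their place) are stripped before counting.
count_hosts() {
  grep -i '^[[:space:]]*Host:' \
    | awk '{ print tolower($2) }' \
    | tr -d '\r' \
    | sed 's/\.$//' \
    | sort | uniq -c | sort -rn
}

# Usage (as root): stop after 200 matching packets, then print the tally.
#   ngrep -q -i -d any -W byline -n 200 'host:' 'tcp port 80' | count_hosts
```

Since sort only prints once its input ends, the -n limit matters here: without it, the tally would only appear after interrupting ngrep.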

Now, I did have an idea about how I could fix this...

Losing my sanity

What if I just ran the web server on the cloud server directly and let the Docker Swarm overlay network talk to my homelab node and the containers running on it? It might mean putting some of my TLS certificates and other information on the cloud server instead of keeping them within the confines of my homelab, complicating things further, but on the surface it seemed like it could work:

It would also mean that the cloud servers would take the majority of the incoming traffic, and if anything happened to them, the impact on my homelab would be lessened. Not only that, but I could also see the real IP addresses of incoming requests and block whatever I didn't like; integrations with software like fail2ban should also work nicely, as would any rate limits and whatnot.

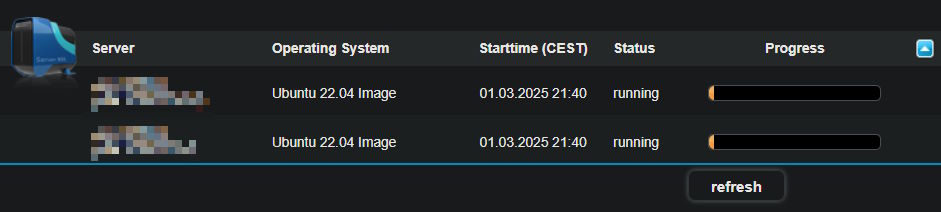

The first step was shutting down the Apache2 containers on my homelab and disabling the SSH tunnels. That was pretty easy to do.

The next step was connecting the public servers to the Docker Swarm cluster, so that I could schedule services (container instances) to be run on them and so that they could partake in the overlay networks. Done.

Then, I'd just need to launch the same Apache2 instances, after carrying over the necessary configuration. Took me a few minutes to drag a few .tar.gz archives around, but nothing too bad.

Except it didn't work. You see, the nodes showed up in the Docker Swarm cluster. The containers were running. Doing curl http://localhost:80 returned the contents of the web server container, just as expected. However, when I made the request from any external server or even my own web browser, it would just show Connection refused. The port was open, yet the connection would just be refused.

I checked that server against a few others that had no such issue. Same container. Same type of configuration. Same host binding against the ports on the server and the same overlay networks for communication with the other containers, even ones on the same node. The same iptables rules. The same configuration for SSH, with no SSH tunnel ports left open. The same privileges and user running the containers. Even launching a container with docker run --rm -p 81:80 ..., so on another low-numbered port right next to the main one, would work.

What on earth was wrong with it!?

I tried using tcpdump, I tried digging around in every possible log that I could think of, I tried every type of configuration I could, and I inspected every Docker container and network configuration as well as the node configuration. Everything seemed the same and should have worked, but didn't. I felt like I was going more or less insane, so in the end I did the only thing that made sense.

Wiping everything

Most of the data on my servers is pretty well isolated and I'm working on getting a new backup system working, so it's pretty safe to wipe servers whenever the need arises. And now was definitely the time to do so:

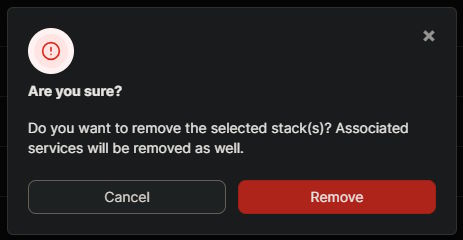

This also meant that I'd need to do a bit of cleanup on the Docker Swarm side, removing the networks and containers in question (which I would later recreate as necessary) just to avoid any dangling stuff being left over. At this point I was taking no risks:

About 30 minutes of setting everything up later (I could spend a day or two automating everything with Ansible, but it's not really worth it yet), I had the servers back up and running and the issues were gone! It was all looking pretty good, even though I felt a bit sour about not being able to pinpoint the cause. I suspect that it might have been some rogue iptables rule, but then it would be very odd that the rule never showed up; that'd make no sense!
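One possible explanation for a rule that never shows up, offered as a guess rather than a diagnosis: iptables -L lists only the filter table unless told otherwise, while Docker publishes ports through DNAT rules in the nat table, and rules created through the other iptables backend (legacy vs. nft) or directly with nft in their own tables don't appear in iptables listings at all. Swarm's routing mesh also does part of its work inside a separate network namespace (ingress_sbox), which host-level listings don't cover. A minimal sketch of a hypothetical helper, rules_for_port, that searches a full iptables-save dump instead:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `iptables-save` output on stdin (which covers
# every table, unlike `iptables -L`) and prints each rule matching the given
# destination port, prefixed with the table the rule lives in.
rules_for_port() {
  local port="$1"
  awk -v p="$port" '
    /^\*/ { table = substr($0, 2) }   # "*nat", "*filter", ... start a new table
    # Rules look like "-A CHAIN ..."; the trailing space keeps 80 from matching 8080.
    /^-A / && index($0 " ", "--dport " p " ") { print table ": " $0 }
  '
}

# Usage (as root):
#   iptables-save | rules_for_port 80
#   iptables-legacy-save | rules_for_port 80    # if both backends are installed
#   nft list ruleset | grep -nE 'dport 80( |$)'  # native nftables rules, if any
```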

So, with the new web servers in place, everything finally started working:

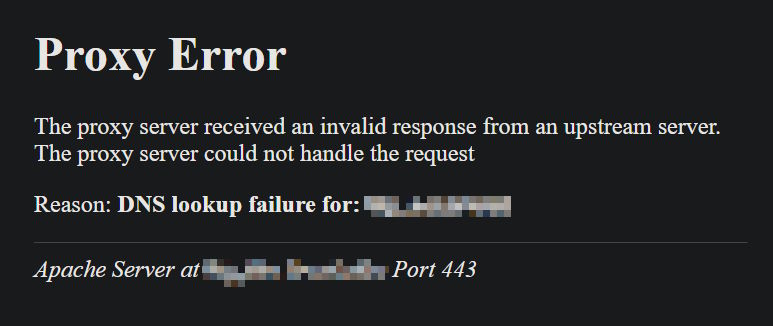

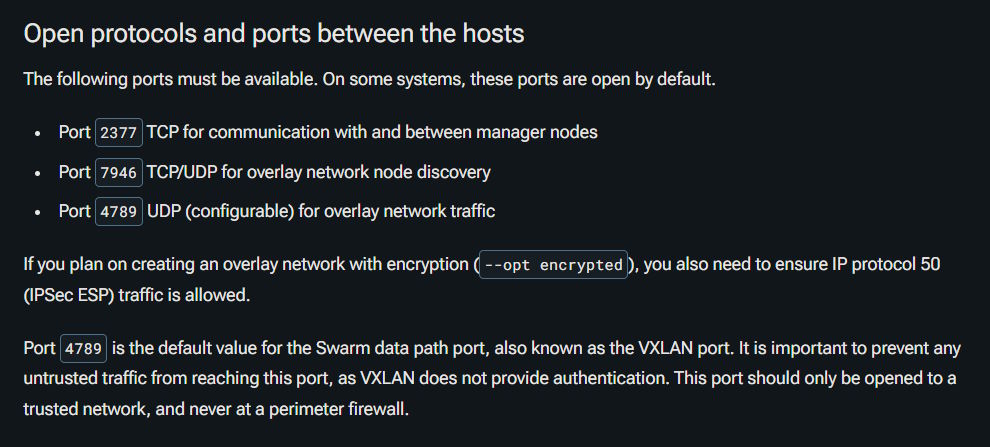

Oh wait, it didn't. The web server itself was up and running, and accepting traffic. The problem was that it couldn't reach any of my homelab servers. Now, I was thinking that I could just update the SSH tunnel configuration to include whatever Docker Swarm needs... except that what Docker Swarm needs is UDP ports:

Which I cannot tunnel easily. Some of these ports can't even be remapped to others, and there are pretty strongly worded reminders about not exposing them in the wrong places. In other words, it became painfully apparent that I should have stuck with my WireGuard setup, and perhaps even joined all of my nodes to a WireGuard VPN and let them talk through that, for the added security. Trying to simplify things just got me burnt pretty badly on all of the above.
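For reference, Docker's documentation lists the ports that Swarm nodes use to talk to each other: TCP 2377 for cluster management, TCP and UDP 7946 for communication between nodes, and UDP 4789 for overlay network (VXLAN) traffic. If the nodes were joined over WireGuard instead, one way to keep those ports off the public interface would be to accept them only on the tunnel interface. Below is a minimal sketch of that idea; ufw as the firewall, wg0 as the interface name and the swarm_ufw_rules helper are all assumptions for illustration, and the helper only prints the rules so they can be reviewed before being applied.

```shell
#!/usr/bin/env bash
# Ports Docker Swarm nodes use to talk to each other ("port/protocol"):
#   2377/tcp  cluster management traffic (to manager nodes)
#   7946/tcp  communication between nodes
#   7946/udp  communication between nodes
#   4789/udp  overlay network (VXLAN) data traffic
SWARM_PORTS="2377/tcp 7946/tcp 7946/udp 4789/udp"

# Hypothetical helper: print (not apply) ufw rules that accept the Swarm
# ports only on the given interface, e.g. a WireGuard interface like wg0.
swarm_ufw_rules() {
  local iface="$1" entry
  for entry in $SWARM_PORTS; do   # unquoted on purpose: split on spaces
    # ${entry%/*} is the port, ${entry#*/} is the protocol
    printf 'ufw allow in on %s to any port %s proto %s\n' \
      "$iface" "${entry%/*}" "${entry#*/}"
  done
}

# Usage: review the output, then apply it as root if it looks right.
#   swarm_ufw_rules wg0
#   swarm_ufw_rules wg0 | sudo sh
```

On the Docker side, docker swarm init and docker swarm join accept --advertise-addr (and --data-path-addr), which is how the nodes could be told to use their tunnel addresses for cluster and overlay traffic.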

A learning experience, sure, but an unwelcome one.

Giving up

So what did I do in the end? I settled on letting the zombie software just do its thing, but at least telling it that it's unwelcome here:

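```
<VirtualHost *:80>
    ServerName dead-domain.com

    RewriteEngine On
    # Answer every request for this host with 410 Gone ([G]); [NC] makes the match case-insensitive.
    RewriteRule ".*" "-" [G,NC]
</VirtualHost>
```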
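To double-check that the rule actually matches, a request carrying the dead domain's Host header should now get 410 Gone back, which is what mod_rewrite's [G] flag returns. A minimal sketch, where check_gone is a hypothetical helper name and /api is the endpoint the zombie software kept hitting:

```shell
#!/usr/bin/env bash
# Hypothetical helper: send a request with the given Host header to the
# given server and succeed only if the response status is 410 Gone.
check_gone() {
  local server="$1" host="$2" path="${3:-/api}" code
  # -s: silent, -o /dev/null: discard the body, -w: print only the status code
  code=$(curl -s -o /dev/null -w '%{http_code}' -H "Host: ${host}" "http://${server}${path}")
  [ "$code" = "410" ]
}

# Usage:
#   check_gone my-server.com dead-domain.com && echo "zombie gets 410"
```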

Enough for me to sleep at night, not at all enough to actually solve the damn problem:

It feels like in the future I'll just need to go back to using WireGuard and hopefully figure out a more manageable way of routing traffic over it.

Summary

I fully accept that I might still have years to go until I'm good at networking, but literally none of this makes me feel good about the state of configuring anything or trying to make anything work, when nothing does, certainly not for a lack of trying.

Here's a list of grudges:

- WireGuard not having its own simple syntax for routing traffic; something like SSH tunnels would be great

- iptables, all of it, though I guess nftables is a bit nicer (not really using it myself for now)

- needing autossh in the first place, and not being able to figure out much from the logs (what is debug3: send packet: type 96 and hundreds of lines like that, use your words)

- debugging anything being hard, even with tcpdump and ngrep, though I'm happy that at least they exist

- again, I'm happy that Docker Swarm is as usable as it is (compared to something like Kubernetes), but its failure mode being "It sort of works on the same node, but suddenly will throw DNS resolution issues at you without clearly telling you that the nodes can't communicate to use the overlay network" is really bad. Even worse because there's no good way to diagnose that. If they figured out something closer to the WireGuard configuration, where everything is pretty obvious (e.g. if you could choose who makes the connection and in which direction), then it'd be great, maybe aside from missing the ability to share volumes over the network (though I guess NFS exists, even if it has its own issues).

- this whole situation: why can't I just use a single command to route traffic from ports 80 and 443 of my cloud server to my homelab server, while preserving the real IP addresses? It's not that niche of a use case!

And a few takeaways. Unless you hate getting things done in a timely manner or have already been doing this for years, don't try doing all of this yourself. Just use a Cloudflare Tunnel, or, if you really need something that runs on your own servers, look at some of the tools here, like sshuttle, frp or even WireGuard. But for the most part, seriously, just look into giving in to the centralized nature of professionally developed services, trading away some control over how you run things; that will save you a lot of time.

Otherwise, it's going to feel a lot like that one meme from Rick & Morty:
