What is ruining dual GPU setups


Recently I posted about the woes of trying to run two Intel Arc GPUs in the same system and how I couldn't easily use Windows 10 because it doesn't let me split tasks between them properly, nor use one of the cards for encoding in OBS, because the support for choosing which GPU to use for encoding just isn't there for Intel Arc cards (I'd need an Nvidia card for that, or wait until someone writes that feature).

Since then, I ended up moving to Windows 11 and some things got better, but others not so much. Since I have more information to share, I figured that I might as well make another blog post. For what it's worth, Windows 11 actually seems more stable than Windows 10 for me: I haven't had a GPU crash or system freeze since installing it, the resource usage is okay and with Rufus you still can make a local user account easily.

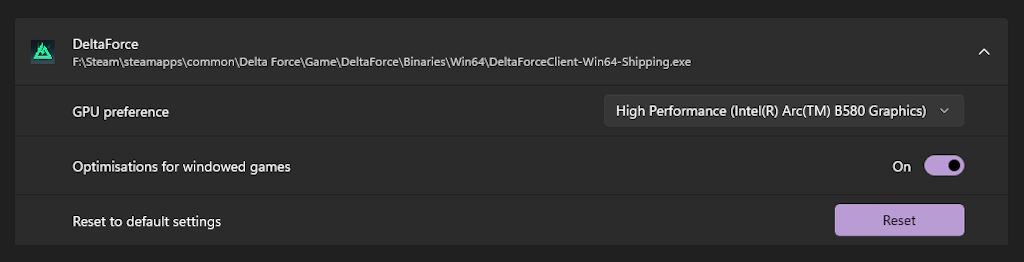

I'm surprised to say this, but it genuinely might replace Linux Mint as my daily driver distro, especially because it has compatibility with all of my games and a lot of the software I use anyways. Or rather, it should, even in my dual GPU setup, though things aren't always as simple. You see, there is a menu under System > Display > Graphics which lets you easily choose which program will be handled by which of your GPUs:

I love that menu. Most of the time Windows is smart enough to decide which GPU to use for what (though they totally should have backported that feature to Windows 10), which typically would be used to make games or other demanding software run on your dedicated card, leaving things like your browser or video players etc. running on the integrated GPU, if your CPU has one in it. At the same time, it works perfectly fine for my dual GPU setup, where my Intel Arc B580 is the gaming card and my old RX 580 is the secondary card for browsers and things like video encoding.

There are some exceptions: for example, you can run the OBS window on whatever GPU you want, but you configure which GPU to use for the encoding inside of the program. Similarly, for video editing, I might run DaVinci Resolve on the RX 580 but use the encoder in the B580, because at that point I don't need its computing power for anything else (unlike when I'm trying to play/stream/record a game). For most software and games, however, it's far simpler: the OS tells the process which GPU to use and it does so.

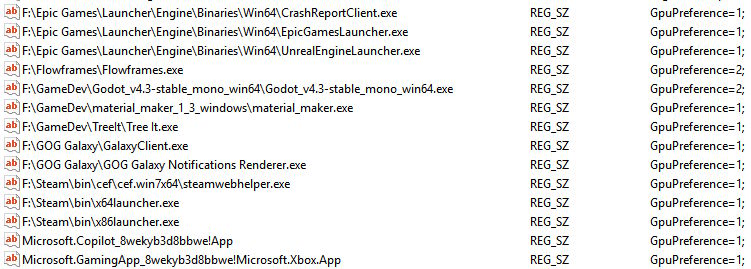

Under the hood, the Windows preferences are stored in the registry, under Computer\HKEY_CURRENT_USER\Software\Microsoft\DirectX\UserGpuPreferences, with the following data format:

- Name - path to the executable, like: C:\Program Files\Mozilla Firefox\firefox.exe

- Type - string value, so: REG_SZ

- Data - preference value (see below)

Implied default value for Let Windows decide:

GpuPreference=0;

Value for default power saving GPU:

GpuPreference=1;

Value for default high power GPU:

GpuPreference=2;

It can also refer to a specific GPU, not just the role that can be filled by different GPUs:

SpecificAdapter=<some_id>;GpuPreference=<some_id>;

Here's how it looks in the Registry Editor:

It's not exactly perfect, but it's simple enough that you can write some software to handle more advanced use cases yourself. For example, some programs out there (like Microsoft Edge) have the executable change based on the installed version, with different paths for each version:

C:\Program Files (x86)\Microsoft\EdgeCore\136.0.3240.50\msedge.exe

C:\Program Files (x86)\Microsoft\EdgeCore\136.0.3240.51\msedge.exe

C:\Program Files (x86)\Microsoft\EdgeCore\136.0.3240.52\msedge.exe

and so on.
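As a side note, the Data strings themselves are trivial to build and parse. Here's a minimal Python sketch, assuming only the format described above; the function names and the "default"/"energy"/"power" labels are made up for illustration and aren't part of Windows:

```python
# Sketch only: builds and parses the Data strings described above.
# The names below are hypothetical, chosen for this example.

ROLE_VALUES = {
    "default": 0,  # Let Windows decide
    "energy": 1,   # default power saving GPU
    "power": 2,    # default high power GPU
}


def build_preference(role):
    """Return the registry Data string for a role such as "energy"."""
    return f"GpuPreference={ROLE_VALUES[role]};"


def parse_preference(data):
    """Split a Data string like "A=1;B=2;" into a dict of string values."""
    fields = {}
    for part in data.split(";"):
        if not part:
            continue  # skip the empty piece after the trailing semicolon
        key, _, value = part.partition("=")
        fields[key] = value
    return fields


if __name__ == "__main__":
    print(build_preference("power"))
    print(parse_preference("SpecificAdapter=<some_id>;GpuPreference=<some_id>;"))
```

Keeping the values as strings in the parser means the SpecificAdapter form passes through untouched, whatever the ID looks like.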

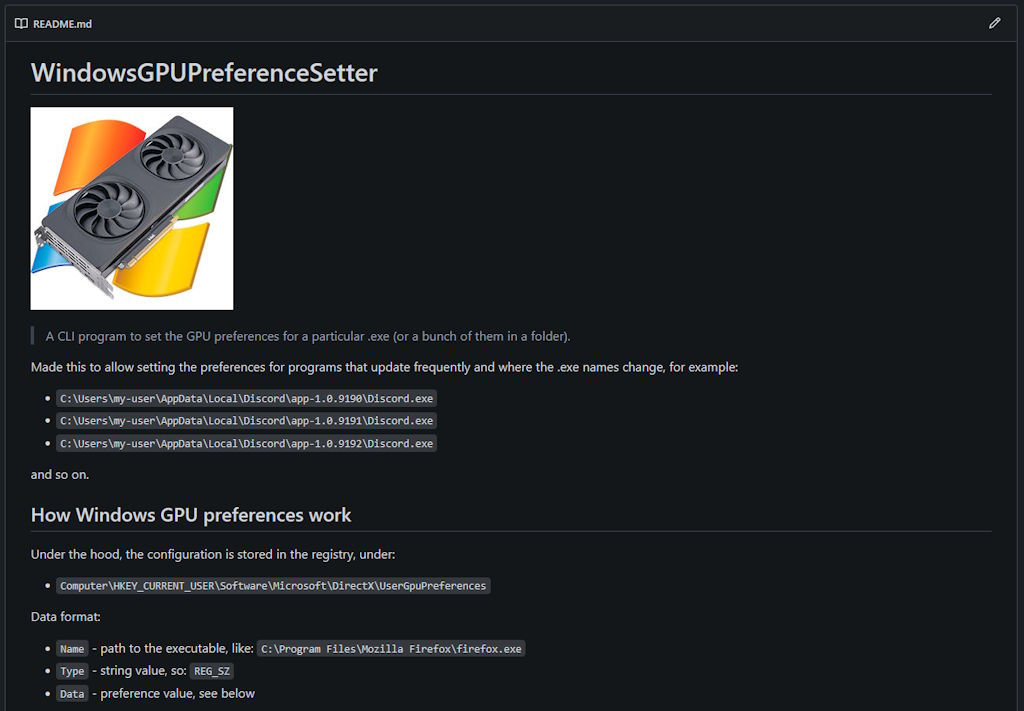

I did write a program for myself to automatically set preferences for any globbed paths, like:

WindowsGPUPreferenceSetter.exe energy "C:\Program Files\Mozilla Firefox\firefox.exe"

WindowsGPUPreferenceSetter.exe power "C:\Program Files\VideoLAN\VLC\vlc.exe"

WindowsGPUPreferenceSetter.exe energy "C:\Program Files (x86)\Microsoft\EdgeCore\*\msedge.exe"

I'm not really releasing it to the public yet, but it took me about an evening to write (.NET is actually quite lovely), so I'm sure that it's within the reach of most people that need something like it:
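To give an idea of how little is involved, here's a rough Python sketch of the same idea. It's not the actual .NET program: plan_entries and apply_entries are names made up for this example, and it assumes the registry key and value format described above. The registry write only works on Windows, so that import is kept inside the function.

```python
import glob
import sys

# Registry location and Data strings as described earlier in the post.
KEY_PATH = r"Software\Microsoft\DirectX\UserGpuPreferences"
MODES = {"energy": "GpuPreference=1;", "power": "GpuPreference=2;"}


def plan_entries(mode, pattern, expand=glob.glob):
    """Expand a glob pattern into (executable path, Data string) pairs."""
    data = MODES[mode]
    return [(path, data) for path in sorted(expand(pattern))]


def apply_entries(entries):
    """Write each pair as a REG_SZ value under HKEY_CURRENT_USER."""
    import winreg  # Windows only, imported lazily so the rest loads anywhere

    with winreg.CreateKey(winreg.HKEY_CURRENT_USER, KEY_PATH) as key:
        for path, data in entries:
            winreg.SetValueEx(key, path, 0, winreg.REG_SZ, data)


# Hypothetical usage: python gpu_pref.py energy "<glob pattern>"
if __name__ == "__main__" and len(sys.argv) == 3:
    planned = plan_entries(sys.argv[1], sys.argv[2])
    for path, data in planned:
        print(f"{path} -> {data}")
    apply_entries(planned)
```

Splitting the planning from the writing makes it easy to print what would change before touching the registry. Since the Edge path changes with every update, something like this would need to be re-run after each one.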

For the most part, it does work. My browsers use the secondary card and don't take up a lot of resources, I can get VLC to run on the B580 because it has better video decoding, and at least in theory I should also be able to run all of my games on the B580 as the gaming card, leaving the other one free for background software and streaming/recording.

Except things aren't so nice...

Where it all goes wrong

One of the first games that I booted up to test this out was Delta Force. It's a free shooter game that plays a little bit like Battlefield, is made on Unreal Engine 4 (there's also a co-op campaign with Unreal Engine 5 but last I checked it was really bad, so we don't talk about that) and looks great.

Sadly, when I booted it up, I was unpleasantly surprised. Here you can see the RivaTuner Statistics Server output which I use both for framerate limiting sometimes (when games run away with high FPS whereas my monitor can only do 60 FPS) and also monitoring the available resources:

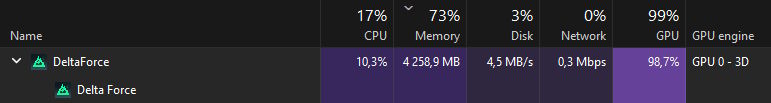

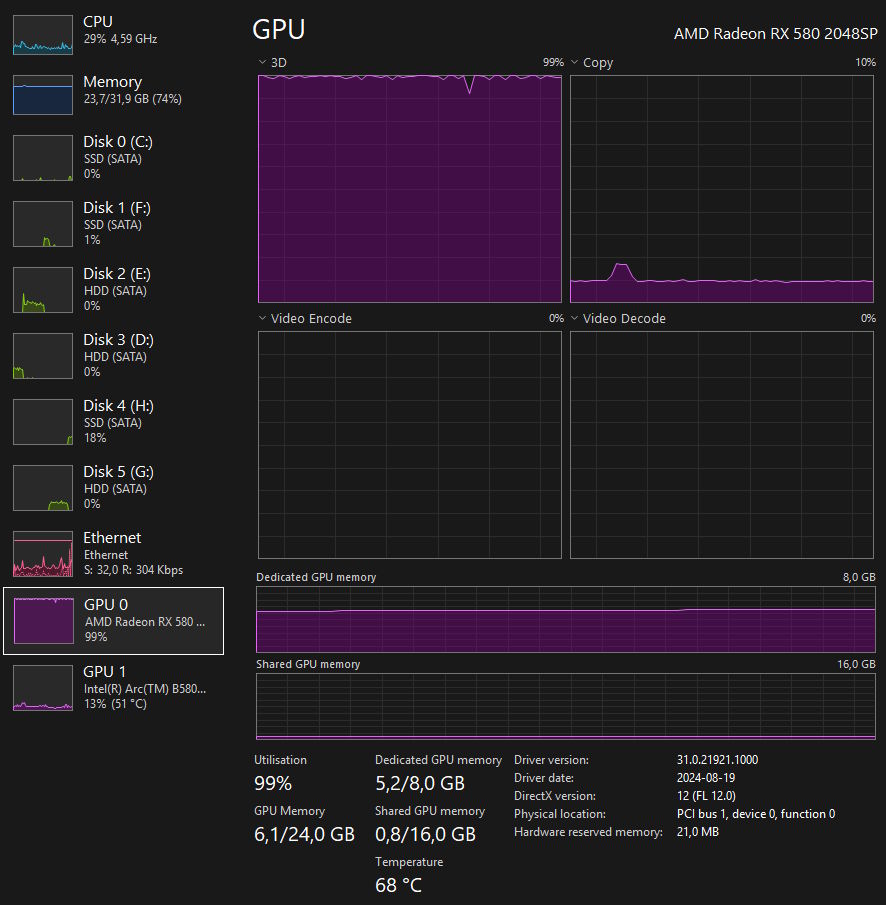

So, the framerate doesn't hit 60 FPS, the CPU isn't overloaded at all and the GPU utilization sits around 20%, which is surprising. But remember, I'm monitoring the main GPU here, because that's the one that the games should be running on. Looking at the Task Manager quite quickly reveals where the issues lie:

GPU 0 is running at 100%, not 20%, which explains why the framerates are so bad! Looking at Task Manager in more detail, we can see that the game is using the RX 580, not the B580 as needed:

It should have been simple to fix, but in the end it turned out to be impossible. Delta Force (and as it later turned out, some other games, too) just straight up ignores the GPU preference settings and picks whatever GPU it wants to run on by itself, something that you cannot change in any way:

- adding every single .exe in the game directory to the GPU preferences does nothing

- trying parameters such as -adapter or r.GraphicsAdapter for Unreal Engine also does nothing, it also sucks beyond belief when you can't even get proper answers and it's more like there's some mysticism around how the engine is supposed to work, in lieu of actual documentation

- there is no software out there that can hide a GPU from a program, the closest is Process Lasso but that is CPU only

In other words, unless I want to disconnect/disable the RX 580 when I want to play Delta Force, then there's no way for me to play it.

Understandably, I uninstalled the game and won't touch it until this is patched, which I assure you is quite unlikely, because unfortunately dual GPU setups are so rare that most likely nobody on the dev team has such a setup. If they do, then it's probably in the form of an Intel iGPU, which they will try to avoid ever selecting for running the game, not even considering that Intel also makes discrete GPUs.

That got me thinking - just how screwed am I?

Some of the games

I spent the evening installing and booting up quite a few of the games in my Steam, Epic, GOG, EA, Xbox and other game libraries. I don't really care about which are supposed to be the most popular titles, merely some of the ones that I might play at some point in time. I was also unable to try out some of the more modern games like S.T.A.L.K.E.R. 2 because quite frankly the install sizes for most of them are quite crazy - there's no way I'm downloading 100 GB just to test how a game runs, that alone makes me want to not play it too much.

Either way, I looked at a total of 60 games, the oldest ones being from the late 90s (Half Life) and the latest being from just a few years ago, across a number of game engines. I did make a summary table a bit later on in the article, but first, let's look at some of the things I saw along the way.

Deus Ex (2000)

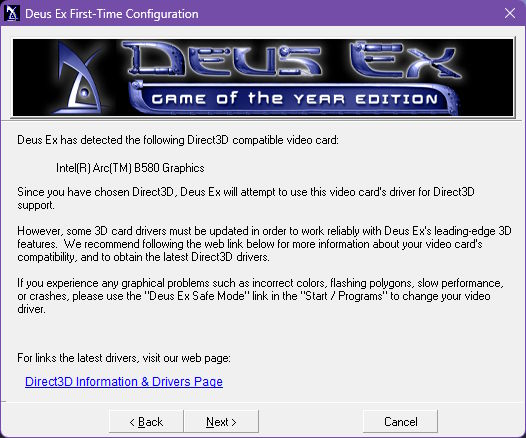

I did wonder how older games would run and since Deus Ex is a bit of a classic, I gave it a fair shot. Honestly, I was very pleasantly surprised, it detected the GPU well enough and gave me some additional options as well (such as choosing between a Direct3D and OpenGL renderer):

The game itself also seemed to run okay, except for one specific issue, which was the fact that it felt sped up, no doubt due to the developers coding it for a specific framerate back in the day and it getting quite confused on a modern system. That's not really a GPU related issue though and thankfully, since the game has a bit of a cult following, it's possible to get a launcher like Kentie's and that would normally fix the issue.

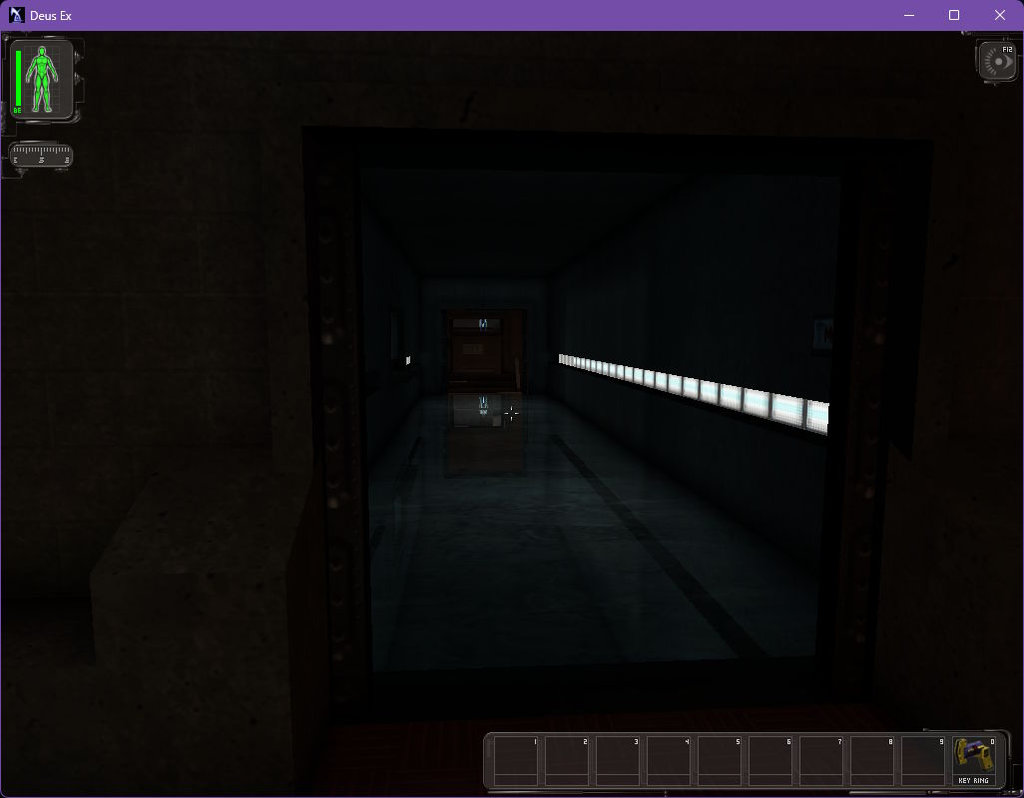

Either way, look at 25 year old graphics, a few clever tricks here and there and it almost feels like you have raytracing:

Overall, it's quite nice that even such old titles can run on GPUs that came out relatively recently. I'm definitely happy that Intel is in the GPU market and is providing some competition to AMD and Nvidia in the budget segment (to the point where I would go for a B580 over the regular RTX 5060 8 GB).

Brothers in Arms: Road to Hill 30 (2005)

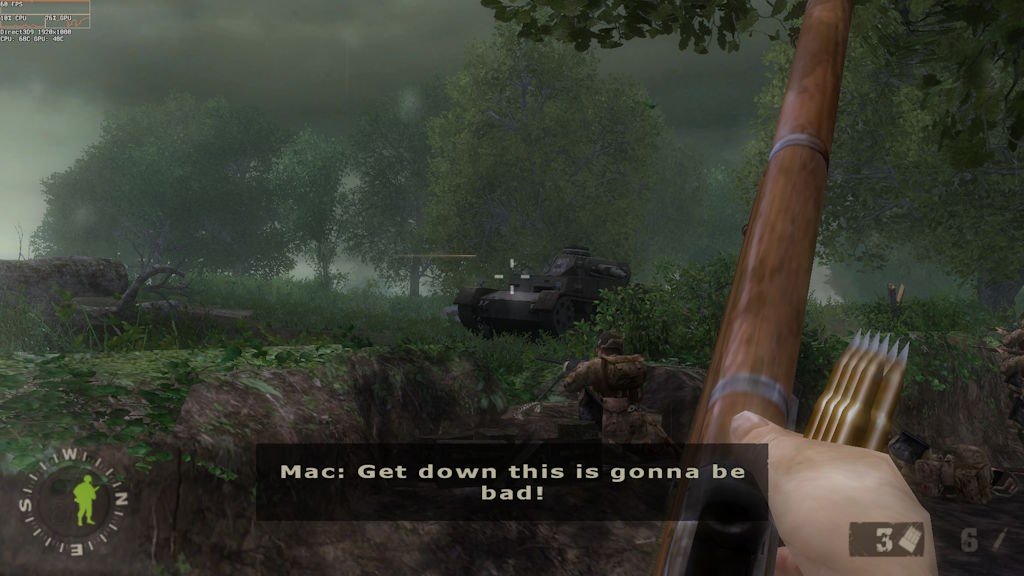

Here's another old game. While Deus Ex runs on the Unreal Engine 1, I also wanted to test something on Unreal Engine 2, 3, 4 and even 5. I haven't actually played a lot of BiA in the past, but it does feel a little bit like the first Call of Duty game and ran pretty nicely from the get go:

You can see that the field of vision feels uncharacteristically narrow for a modern widescreen monitor and setup, and all of the UI elements feel much too big, but remember that back when this game came out, 1024x768 was considered a good resolution. Admittedly, for some Unreal Engine 5 games, that isn't too far off from the internal resolution before upscaling, because badly optimized games just keep getting released.

Either way, no issues here.

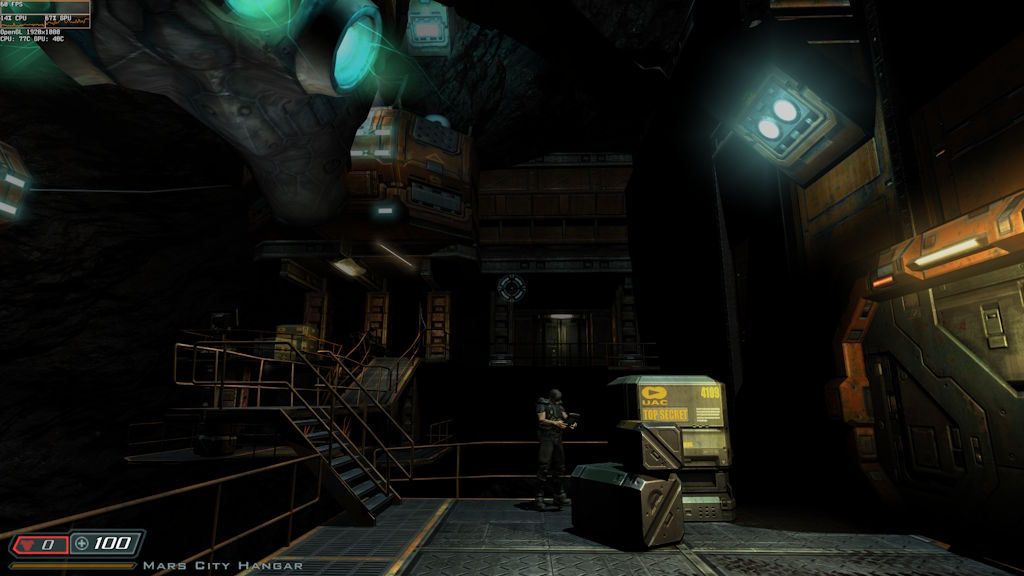

Doom 3: BFG Edition (2012 remaster of 2004 game)

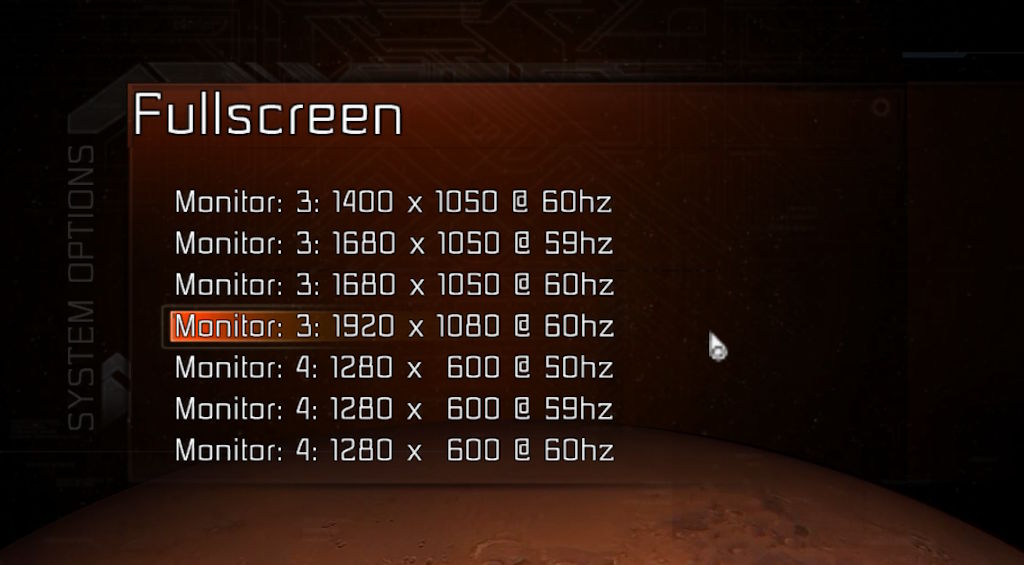

I do recall the BFG Edition of Doom 3 getting some critique when it came out over the changes to the flashlight mechanics and atmosphere of the game, but I'm just happy that there's a way for me to play the game on a decent resolution on a modern system. It also has an immensely cool option, where you can choose which monitor you want to run it on:

Every game should have this option (though at least most of them start on the primary monitor, looking at you, Ashen) but either way, here's some Doom 3 on the id Tech 4 engine, running with no issues:

I might just have to go back sometime later and replay it, such an iconic game from back in the day, it also ran fine on the B580.

Bioshock Infinite (2013)

On the list of iconic games, Bioshock Infinite definitely also takes a high spot! It's both a well made game, narratively and gameplay wise, but also pretty to look at:

Don't be misled, it is using Unreal Engine 3 under the hood and it does so really well. If nothing else, it proves that artistic direction and skilled developers matter more than just raw graphics horsepower, especially because of how well it runs.

Dishonored (2015 remaster of 2012 game)

There's also the Dishonored game, which got a remaster, still running on Unreal 3, no issues in sight:

I might prefer the gameplay more than the graphics in the first game (the second one is really pretty, but also a really big download), but either way, it worked fine.

Doom (2016)

I also did test out the Doom game from 2016 and I have to say that I was even more pleasantly surprised.

Honestly, I have never had a game work so well out of the box and be as optimized as this one was. If you asked me what the future of gaming should look like back when Crysis was all the rage, something like this game would definitely fit the bill. I'm not just saying that for the sake of it, but rather as a subtle dig at some of the other modern engines: if the big studios threw a bunch of money at the more recent version of id Tech and actually put some effort into optimizing their games, we'd be in a much better spot when it comes to new releases.

For this one, I specifically disabled the framerate limiter and increased the size of the graphs, just to demonstrate how insanely well it runs. Everything is more or less turned up to the maximum, the game is running at 200 FPS (that is an artificial cap though, seems like their physics engine would bug out if it ran at anything higher, though that would normally be separate from the rendering, either way people with 300 FPS monitors will complain) and the computer isn't breaking a sweat:

The GPU usage on a B580 remains pretty low, the framerate is more stable than any other game I've seen even in the action scenes and the temperatures are pretty manageable. I will say that my Ryzen 7 5800X does run a bit hot under load, though I could probably undervolt it a bit to help with the cooling further. Either way, it's not thermally throttling and it runs the game just fine:

The only thing I didn't quite enjoy was the fact that Vulkan seems to have had issues both on Windows 10 and Windows 11, where capturing Vulkan games both through OBS and even something as simple as just hitting Print Screen doesn't seem to work and returns a black screen. I took these screenshots through Steam and also had to run the game itself windowed initially (Print Screen would work then), that's why the resolution shows up a bit goofy:

Either way, here's some output from the Intel Graphics Software. There are a few spikes here and there while I was taking the screenshots, but I'm surprised at how well everything ran, the GPU rarely even utilized all of its power in a game that looks great, runs great and has the graphics more or less maxed out:

Maybe I should also have a look at Wolfenstein II: The New Colossus, Doom Eternal and Indiana Jones and the Great Circle sometime, because those also use the engine. I'm surprised that more companies don't, none of these games are known for bad launches or technical issues (maybe outside of some poor managerial decisions, such as forcing raytracing to always be on).

Hunt: Showdown (2019)

Here's a CryEngine game as well, I've heard a lot of good about it but haven't really played it much before myself. Either way, I just booted it up to see how well it works and it was another example of a well optimized game that runs and looks great:

CryEngine is an interesting option: there were previously forks like Amazon Lumberyard that never took off, but now we basically get the tech repackaged as O3DE with its own improvements as well, a project that happens to be backed by The Linux Foundation of all people and is Apache 2.0 licensed.

While I very much share hopes for a bright future for engines like Godot (which is amazing for 2D and is getting better at 3D), if you need a powerful 3D engine, then O3DE might at the very least be worth a look, rather than just jumping into Unreal Engine 5 and realizing that you can't make a game that runs well to save your life.

Isonzo (2022)

Here's also a modern Unity game, that does sit somewhere between Battlefield 1 and the now retired Heroes & Generals game in how it plays, focused on the infantry portion. Again, a game that I want to play in the future when I'll actually have some free time, but it ran just fine:

Unity is also a pretty decent engine, even if their HDRP can be somewhat demanding. It's still cool to see an engine that has historically had more success with indie and mobile games be more than capable of pulling its weight both in complex projects, as well as pretty modern graphics.

Road to Vostok (2022, but not released yet)

Speaking of Godot, there is precisely one notable project attempting to make a modern looking 3D game in it, which is Road to Vostok:

The project initially started out in Unity, but because of their runtime fee debacle a lot of developers looked elsewhere. I'm not sure how far the project will get, but it really seems like the developer has done a lot of good work and is proving that Godot is viable for more serious games too.

Other interesting stuff

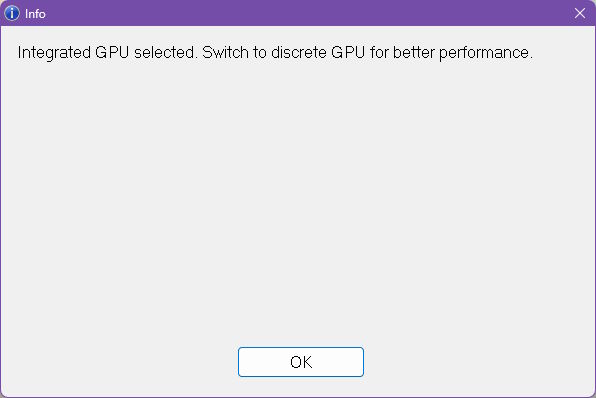

Along the way, I also ran into something interesting with Enlisted and War Thunder. Both of them complained that I'm running on an integrated GPU, which is a bit silly, because my CPU literally doesn't have one. They're probably just checking if the GPU vendor is listed as "Intel" because back when the engine and games were made, Intel wasn't in the discrete GPU market yet:

The good news is that the games just run after you click OK and the rest works just fine. Furthermore, War Thunder even supports XeSS upscaling, so it's a bit silly that the warning is there in the first place, since you kind of need to acknowledge that Intel GPUs exist to offer the upscaling integration.
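To be clear, I don't know what the actual check in those games looks like, so the following Python sketch is only my guess at the failure mode. It contrasts a vendor-only test with a heuristic based on dedicated video memory. The Adapter class, the function names, the 512 MB threshold and the iGPU memory figure are all invented for the example; the PCI vendor ID for Intel (0x8086) and the 12 GB of VRAM on the B580 are the only real values in it.

```python
from dataclasses import dataclass

VENDOR_INTEL = 0x8086  # Intel's PCI vendor ID


@dataclass
class Adapter:
    name: str
    vendor_id: int
    dedicated_vram_mb: int


def looks_integrated_naive(adapter):
    """The suspected check: any Intel adapter is assumed to be an iGPU."""
    return adapter.vendor_id == VENDOR_INTEL


def looks_integrated_by_memory(adapter, threshold_mb=512):
    """Alternative heuristic: iGPUs usually report little dedicated memory."""
    return adapter.dedicated_vram_mb < threshold_mb


b580 = Adapter("Intel Arc B580", VENDOR_INTEL, 12 * 1024)
igpu = Adapter("hypothetical Intel iGPU", VENDOR_INTEL, 128)

if __name__ == "__main__":
    for gpu in (b580, igpu):
        print(gpu.name, looks_integrated_naive(gpu), looks_integrated_by_memory(gpu))
```

With the vendor-only test, the B580 gets flagged just like the iGPU does, which would match the warning I'm seeing. The memory-based heuristic tells the two apart, though it's still just a heuristic.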

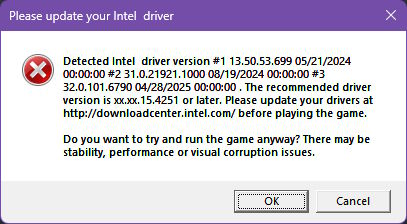

Curiously, Need for Speed: Heat also complains about my driver version and suggests that there might be bugs in it:

This is a bit silly, because I'm running the latest GPU drivers for both my Intel Arc and AMD card at the time of writing, so it's probably just bad version checking logic. The reason why none of this is a problem, though, is the fact that the games still run and it's essentially a win-win situation:

- if the game doesn't run because of an actual driver issue, at least they've provided me with a disclaimer (and instructions that might fix the problem in that particular case)

- if the game runs well and it's just their version check logic that's bad, then I can dismiss the warning and enjoy the game

It's very much the same as with the resolution selection dialog that also lets you pick the exact monitor you want to run the game on: it gives more control to the person running the software, which is how things should be, same as how you should have a good options menu where you can toggle whatever settings you like or dislike on or off.
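As for what "bad version checking logic" could mean in practice, here's one classic way it goes wrong, sketched in Python. This is purely hypothetical: I have no idea how Need for Speed: Heat actually compares driver versions, and the version numbers below are made up to show the effect.

```python
def parse_version(text):
    """Turn "32.0.101.9000" into (32, 0, 101, 9000) for numeric comparison."""
    return tuple(int(part) for part in text.split("."))


def is_older_naive(installed, required):
    """Buggy: compares the two strings character by character."""
    return installed < required


def is_older(installed, required):
    """Compares each numeric component in order."""
    return parse_version(installed) < parse_version(required)


# Made-up version numbers, chosen only to show the difference.
installed, required = "32.0.101.10000", "32.0.101.9000"

if __name__ == "__main__":
    print("naive check says outdated:", is_older_naive(installed, required))
    print("numeric check says outdated:", is_older(installed, required))
```

The string comparison sees "1" sorting before "9" and decides the newer driver is the older one, while the numeric comparison gets it right.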

The results

The above might have seemed a bit rambly, but I did want to show off some of the games and if nothing else, then prove that Intel Arc GPUs can handle most of the games out there with relatively few issues along the way. Actually, after testing out 60 games in total, across various genres (there were a few RTS games in there as well, racing games, even 2D games like ZERO Sievert and Stardew Valley), I found that most of them just work:

| Game | Engine | GPU Selection | Notes |
|---|---|---|---|
| Nexus: The Jupiter Incident | Black Sun Engine | OK | |
| Hearts of Iron IV | Clausewitz | OK | |
| Skyrim (Special Edition) | Creation | OK | |
| Hunt: Showdown 1896 | CryEngine | OK | |
| Carrier Command 2 | Custom | OK | |
| Homeworld: Remastered Collection | Custom | OK | |
| Sleeping Dogs | Custom | OK | |
| Starpoint Gemini Warlords | Custom | OK | |
| Transport Fever 2 | Custom | OK | |
| Enlisted | Dagor | OK | Complains about iGPU, runs fine |
| War Thunder | Dagor | OK | Complains about iGPU, runs fine |
| Arma Reforger | Enfusion | OK | |
| Need for Speed: Heat | Frostbite 3 | OK | |
| Star Wars Squadrons | Frostbite 3 | OK | |
| Fallout: New Vegas | Gamebryo | OK | |
| Oblivion (original) | Gamebryo | OK | |
| ZERO Sievert | GameMaker | OK | |
| deltaV: Rings of Saturn | Godot | OK | |
| Road to Vostok (Demo) | Godot | OK | Launches on the wrong monitor |
| Half Life | GoldSrc | OK | |
| DOOM 3: BFG Edition | id Tech 4 | OK | |
| DOOM (2016) | id Tech 6 | OK | |
| Wargame: Red Dragon | IRISZOOM V4 | OK | |
| Sid Meier’s Civilization V | LORE | OK | |
| Barotrauma | MonoGame | OK | |
| Stardew Valley | MonoGame | OK | |
| Morrowind | NetImmerse | OK | |
| Burnout Paradise Remastered | RenderWare | OK | |
| Jagged Alliance 3 | Sol | OK | |
| Black Mesa | Source | OK | |
| Half Life 2 | Source | OK | |
| BeamNG.drive | Torque 3D | OK | |
| Captain of Industry | Unity | OK | |
| Corpus Edax | Unity | OK | |
| Due Process | Unity | OK | |
| Hardspace: Shipbreaker | Unity | OK | |
| Isonzo | Unity | OK | |
| Nuclear Option | Unity | OK | |
| Peripeteia | Unity | OK | |
| Regiments | Unity | OK | |
| Train World | Unity | OK | |
| Deus Ex: Game of the Year Edition | Unreal 1 | OK | Game runs too fast |
| Brothers in Arms: Road to Hill 30 | Unreal 2 | OK | |
| Deus Ex: Invisible War | Unreal 2 | OK | Resolution doesn’t go past 1280x1024 |
| Bioshock: Infinite | Unreal 3 | OK | |
| Dishonored | Unreal 3 | OK | |
| The Bureau: Declassified | Unreal 3 | OK | |
| The Bureau: XCOM Declassified | Unreal 3 | OK | |
| ADACA | Unreal 4 | OK | |
| Ashen | Unreal 4 | NOT OK | Launches on the wrong monitor |
| Battlefleet Gothic: Armada | Unreal 4 | OK | |
| Dakar Desert Rally | Unreal 4 | OK | |
| Deep Rock Galactic | Unreal 4 | OK | |
| Delta Force | Unreal 4 | NOT OK | |
| Vladik Brutal | Unreal 4 | OK | |
| Abiotic Factor | Unreal 5 | OK | |
| Dark and Darker | Unreal 5 | OK | |
| Everspace 2 | Unreal 5 | OK | |
| Motor Town: Behind The Wheel | Unreal 5 | OK | |
| Satisfactory | Unreal 5 | OK | |

The only ones that downright didn't work were the following:

- Delta Force - uses the RX 580 no matter what I do, ignores Windows preferences

- Ashen - uses the RX 580 no matter what I do, ignores Windows preferences, launches on the wrong monitor

Both of those are Unreal Engine 4 games, but at the same time neither the other engine versions nor the other Unreal Engine 4 games that I tested had that particular problem: ADACA, Battlefleet Gothic: Armada, Dakar Desert Rally, Deep Rock Galactic and Vladik Brutal all worked. Most of these are a bit niche because I couldn't be bothered to download games that are like 50 GB in size, but it might also prove that even smaller teams are capable of making the engine work just fine.

Even if the rest of the post and the overall situation proved to be pretty positive, the cause of the issue is now pretty much clear in my eyes...

Summary

Who's to blame for all this? Developers. Much like Steve Ballmer shouted from a stage years ago, I can now point in the direction of the people who made those games and repeat who is to blame, loud and clear.

I'm under no illusion that those games will be patched or even that there's an easy solution for the issues, because dual GPU setups are uncommon in and of themselves, to the point where nobody is going to spend a lot of time testing for them and debugging (which is also how War Thunder and Enlisted complain about the Arc GPUs supposedly being integrated ones, though the games themselves thankfully work). However, there is no good reason for the games to be broken like this. The operating system would definitely benefit from a mechanism to hide some GPUs from a specific process, the same way you can set the process affinity for specific CPU cores, but at least the engines and games that play nice with the OS have no issues.

This still does mean that if you have 100 games then maybe 3-4 of those might not work, or if you have 500 games, then that number might jump to 16-17 games. It's just me extrapolating from my little experiment here, but while that number is pretty low, it's still there. Imagine not being able to listen to a song that you enjoy, just because it refuses to play on your device. Or alternatively, having to use a specific device to play the song, which might be a bad analogy because some people definitely enjoy playing vinyl records, whereas me having to disable my GPU in the Device Manager wouldn't be too hard to do, but annoying nonetheless. Plus, if I wanted to stream/record any of those games without my RX 580 connected to the PC, I'd have to reconfigure OBS which is a pain.
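The extrapolation at the start of that paragraph is nothing fancy: it just scales the 2 failures out of 60 tested games to a bigger library. As a quick Python sketch (the function name is made up, and it assumes my sample is representative, which it may well not be):

```python
tested = 60
ignored_preference = 2  # Delta Force and Ashen

rate = ignored_preference / tested


def expected_failures(library_size):
    """Scale the observed failure rate to a library of the given size."""
    return rate * library_size


if __name__ == "__main__":
    for size in (100, 500):
        print(f"{size} games -> about {expected_failures(size):.1f} affected")
```

That works out to roughly 3.3 games per 100 and 16.7 per 500, which is where the "3-4" and "16-17" figures come from.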

It's not all bad, though. Modern game engines give me plenty of hope, or at least this somber sense of knowing the truth: there are plenty of games made in CryEngine, Godot, id Tech, Unity and various versions of Unreal Engine, all of which work well. Sometimes it's because the engine pushes you towards good methods during development, other times it's because the games are a bit on the older side by now and thankfully even budget hardware can run them perfectly fine, but it's possible to make games that run well and look great.

Since I do have to do a little rant about that here, I will admit that it just isn't happening to a big part of the market - for whatever reason many use Unreal Engine 5 in a way that they try to push graphics above all else, oftentimes releasing broken, buggy and really badly optimized products: look at how S.T.A.L.K.E.R. 2 was on release and how it still is.

Look at how any of the following run:

- Alan Wake 2

- Ark: Survival Ascended

- Black Myth: Wukong

- Immortals of Aveum

- MechWarrior 5: Clans

- Redfall

- Remnant 2

- Silent Hill 2

- The Matrix Awakens (yes, even the UE5 demo)

Games like Satisfactory that actually get optimized properly are a rarity in comparison. You can try to say that it's just about how the developers use the engine, but it's only a valid argument up to the point where we have a widespread industry issue with most modern releases on the engine coming out running really badly. It's the same as saying that developers who use C++ are just bad at memory management, yet time after time after time there are CVEs that are caused by this. At some point you just have to draw a line, say that enough is enough and look at alternatives (in the case of programming, it'd be memory safe languages, in the case of game engines - literally any other engine).

Upscaling and frame generation should make games more accessible even on lower end hardware, not get used as a crutch for bad performance because the developers couldn't be bothered to fix it. In part, that is probably driven by stupid industry trends, in part because the people running the companies care more about deadlines than they do about the experience that gamers will get on release day - since apparently that's the status quo now.

I do believe that if they could fix No Man's Sky, if they could fix Cyberpunk 2077, then games like S.T.A.L.K.E.R. 2 will also get fixed eventually, but the sad part is that many games will never get fixed and will be perpetually broken and impossible to enjoy on anything but unreasonably overpowered (and exorbitantly expensive) hardware. If anything, it's teaching me to never get a game on release day anymore and never do preorders, unless I care more about supporting the developers than I care about getting a game to play.

If I wanted to have a bit more fun with this, I'd probably conjure up a scene in my imagination, where a bunch of shady Nvidia execs are giving employees of Epic briefcases full of money, for them to subtly push graphics technology on the industry that will absolutely get misused and necessitate more GPU purchases, all the while actually delivering on the promise of making iterating on assets and game scenes that much faster and more convenient, meaning that numerous studios will ditch their own proprietary engines in favor of Unreal, sometimes to good effect, but also sometimes with outstanding failures.

Either way, Intel Arc GPUs are actually pretty cool and there's lots of nice games out there to enjoy. I wonder if any of them would run better on Linux, though in the case of Delta Force I guess I wouldn't be able to play it at all due to the intrusive anti-cheat solution they have. It's another case of a part of the community falling through the cracks because the business people controlling its development don't care (albeit from a business perspective I have to say that it probably makes sense), even if Steam and Proton are also finally making proper gaming on Linux a thing.

Aside from that, I yearn for the day when we all have dual GPU setups: where when you get a new GPU, you can use the old one as a secondary for browsing and all of the background tasks. Why aren't we doing that? Windows 11 is finally a decent enough OS to take advantage of it and as I just proved, even most games are finally passable in that regard. You don't need a fancy SLI or CrossFire setup to benefit from two GPUs in your system!
