Nginx configuration is broken


A little while ago i wrote about how to use Nginx as a reverse proxy and i rather enjoy that piece of software! That said, it's not without its faults, about some of which i'd like to tell you today.

What upsets me about Nginx the most is that it takes the fail-fast approach, which isn't entirely suitable for combining it with a container workflow, at least in situations where you're running it without something like Kubernetes (which has its own customizations).

For example, currently i run my own Nginx ingress with Docker Swarm:

    service A / B / C <--> Nginx <--> browser

The reverse proxy configuration feels easier to do than that of Apache2/httpd, for example:

    server {
        listen 80;
        server_name app.test.my-company.com;
        return 301 https://$host$request_uri;
    }

    server {
        listen 443 ssl http2;
        server_name app.test.my-company.com;

        # Configuration for SSL/TLS certificates
        ssl_certificate /app/certs/test.my-company.com.crt;
        ssl_certificate_key /app/certs/test.my-company.com.key;

        # Proxy headers
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;

        # Reverse proxy
        location / {
            resolver 127.0.0.11 valid=30s; # Docker DNS
            proxy_pass http://my-app:8080/;
            proxy_redirect default;
        }
    }

However, suppose that the "my-app" container has a health check set up for it. What does that mean? Docker Swarm won't route any traffic to it until it has finished initializing. It also means that DNS requests won't return anything for "my-app" until at least one health check passes. That in and of itself shouldn't be too problematic, right? Surely any web server would just log the failed request, return an error to the client, and carry on until the app eventually comes up.

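Wrong. Nginx does throw an error, but it's not just one request that fails: the ENTIRE web server goes down. Hostnames written directly in proxy_pass are resolved when the configuration is loaded, so if even one of them can't be resolved, Nginx refuses to start: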

    nginx: [emerg] host not found in upstream "my-app"

What does that mean? If you have 1 Nginx instance on the server that needs to proxy 10 apps in total, one app not being available will prevent the other 9 from working! The reasoning behind such defaults is beyond me. The Caddy web server does something similar and will go down if 1 out of 10 sites fails to provision its SSL/TLS certificate, instead of letting the others work, which is the complete opposite of what someone who cares about resiliency would want!

But fear not, supposedly there's a solution!

    # Reverse proxy
    location / {
        resolver 127.0.0.11 valid=30s; # Docker DNS
        set $proxy_server my-app;
        proxy_pass http://$proxy_server:8080/;
        proxy_redirect default;
    }

Except that, you know, that also doesn't work:

    nginx: [emerg] "proxy_redirect default" cannot be used with "proxy_pass" directive with variables

Now, that's not an issue in my case, since i just jotted down the "proxy_redirect default;" value to remember what it does, but it's unfortunate that it isn't simply ignored (since it's the default value, which is functionally identical to it not being there). As far as the documentation goes, it's only the default parameter that isn't permitted once proxy_pass uses variables, so any rewriting has to be spelled out explicitly: http://nginx.org/en/docs/http/ngx_http_proxy_module.html#proxy_redirect
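For what it's worth, a variant that should at least load is sketched below. It makes two changes that are not in the configuration above, so treat both as assumptions. The trailing slash after the port is dropped, because when proxy_pass contains variables and a URI part, that URI is sent upstream as-is and replaces the original request URI. The redirect rewriting is also written out by hand instead of relying on "default". This is a sketch, not a tested fix for this particular setup.

```nginx
# Reverse proxy (sketch; assumes the same "my-app" service on port 8080)
location / {
    resolver 127.0.0.11 valid=30s; # Docker DNS
    set $proxy_server my-app;

    # No URI part after the port: with variables, the original request URI is passed through
    proxy_pass http://$proxy_server:8080;

    # Hand-written counterpart of what "default" would have done for this location
    proxy_redirect http://$proxy_server:8080/ /;
}
```

With this form the hostname is resolved at request time through the configured resolver, so a missing "my-app" should produce a 502 for that one site rather than stopping Nginx from starting.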

Summary

In summary, it's nice that there are workarounds for problems like that, but it's a bit odd that the web server is otherwise so quick to fall over and die, and that the defaults aren't oriented towards maximum reliability and resilience. That said, i still do like Nginx, it's just that it's finicky to work with sometimes.

But at the same time, in situations where load isn't high enough for the web server to be the bottleneck, it's also helpful to evaluate the alternatives, notably: Apache2/httpd, Caddy and Traefik. It was actually pretty recently that Apache2/httpd got mod_md, which allows provisioning Let's Encrypt certificates out of the box, which is certainly promising: https://links.kronis.dev/pxSiGGHTwA

Update

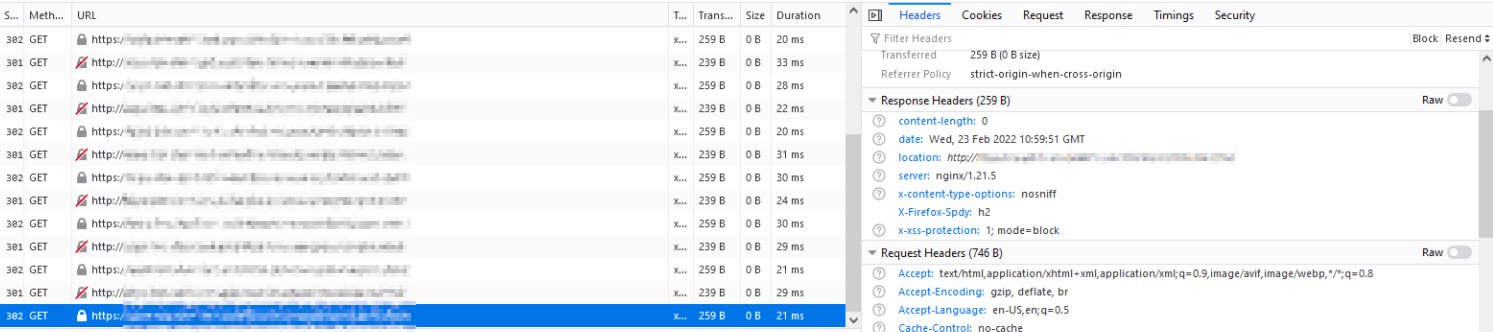

Uh oh, seems like i spoke too soon about the workarounds! Most of the external software that i use (Zabbix, Matomo, Apache Skywalking, Portainer, ...) seemed to work without issues, but some of the older legacy in-house applications can break! Now, i won't go into details since that's not necessarily public information, but you can definitely expect things to break in unexpected ways with the above configuration:

It seems that for some reason the HTTPS versions of the app are redirected back to HTTP, which then redirects back to HTTPS - an unexpected loop that breaks everything! As of yet, i have no idea how to prevent this while still allowing Nginx to work when not all of the apps are online, so i haven't come up with a workaround.

So, thanks for nothing Nginx, now i'll need to run the apps in a configuration where test environments all depend on every single app being up for even the reverse proxy to work, which instantly means that i cannot show user-friendly 502 pages while redeployments are happening, etc. Alternatively, in prod, multiple adjacent Nginx containers will need to be running, with some other method of ingress in front of them, which is an unnecessary amount of configuration for something as simple as keeping a web server that acts as a reverse proxy up!
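If the loop comes from the application answering with redirects to http:// URLs (an assumption, since the actual cause isn't known here), one thing worth trying is rewriting those Location headers at the proxy. The sketch below combines that with an error_page fallback for the moments when the upstream can't be resolved or reached. The /502.html file and the /app/errors directory are hypothetical names, and none of this has been verified against the applications in question.

```nginx
location / {
    resolver 127.0.0.11 valid=30s; # Docker DNS
    set $proxy_server my-app;
    proxy_pass http://$proxy_server:8080;

    # Rewrite upstream redirects that point back at plain HTTP (assumed cause of the loop)
    proxy_redirect http://$host/ https://$host/;

    # Serve a static page when Nginx itself can't resolve or reach the upstream
    error_page 502 503 504 /502.html;
}

location = /502.html {
    root /app/errors; # hypothetical directory holding the static error page
    internal;
}
```

Because the upstream name sits in a variable, a missing container only affects requests for that site, which is what makes the static fallback page possible in the first place.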
